Compare commits

686 Commits

feat/add-t

...

3.38

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

aaed1dc3d5 | ||

|

|

c8dce94c03 | ||

|

|

06496d07b3 | ||

|

|

a97f98c9cc | ||

|

|

8d0406f74f | ||

|

|

c64d14701d | ||

|

|

00332ae444 | ||

|

|

e8deb3d8fe | ||

|

|

8b234c99cf | ||

|

|

1f986d9c45 | ||

|

|

bacb8fb3cd | ||

|

|

e4a90089ab | ||

|

|

674b9f3705 | ||

|

|

4941fb8aa0 | ||

|

|

183af0dfa5 | ||

|

|

45ac5429f8 | ||

|

|

c771977a95 | ||

|

|

668d7bbb2c | ||

|

|

926cfabb58 | ||

|

|

a9a8d05115 | ||

|

|

e368f4366a | ||

|

|

dc5bddbc17 | ||

|

|

358a480408 | ||

|

|

c96fdb3c7a | ||

|

|

c090abcc02 | ||

|

|

1ff02be35f | ||

|

|

10fbfb88f7 | ||

|

|

9753df72ed | ||

|

|

095cc3f792 | ||

|

|

656171037b | ||

|

|

7ac10f9442 | ||

|

|

3925ba27b4 | ||

|

|

44ba79aa31 | ||

|

|

14d0e31268 | ||

|

|

033acffad1 | ||

|

|

d29ff808a5 | ||

|

|

dc9b6d655b | ||

|

|

d340c85013 | ||

|

|

e328353664 | ||

|

|

02785af8fd | ||

|

|

736ae5d63e | ||

|

|

e1eeb617d2 | ||

|

|

23b6c7f0de | ||

|

|

997f97e1fc | ||

|

|

ff335ff1a0 | ||

|

|

cb3036ef81 | ||

|

|

f762906188 | ||

|

|

dde7920f8c | ||

|

|

1a0d24110a | ||

|

|

e79f6c4471 | ||

|

|

a8a7024a84 | ||

|

|

d93d002da0 | ||

|

|

baaa0479e8 | ||

|

|

cc3bd7a056 | ||

|

|

4ecefb3b71 | ||

|

|

f24b5aa251 | ||

|

|

de547da4cd | ||

|

|

0f884166a6 | ||

|

|

63379f759d | ||

|

|

8fdff20243 | ||

|

|

5dfa07ca03 | ||

|

|

343645be6a | ||

|

|

91bf21d7a8 | ||

|

|

be6516cfd3 | ||

|

|

61f1e516a3 | ||

|

|

73b2278b45 | ||

|

|

aa625e30b6 | ||

|

|

29a46fe4ce | ||

|

|

5b3ee49530 | ||

|

|

a9158a101f | ||

|

|

feed8abb34 | ||

|

|

70decc740f | ||

|

|

5b5c83f8c5 | ||

|

|

773c06f40d | ||

|

|

737e6ad5ed | ||

|

|

81bca9c94e | ||

|

|

eef0654de2 | ||

|

|

997a00b8a2 | ||

|

|

4d25232c5f | ||

|

|

135befa101 | ||

|

|

44cac3fc43 | ||

|

|

449fa3510e | ||

|

|

d958af8aad | ||

|

|

09f8d5cb2d | ||

|

|

aedc99cefd | ||

|

|

b32cab6e9a | ||

|

|

a95186965e | ||

|

|

7067de1bb2 | ||

|

|

f45d878d21 | ||

|

|

a0532b938d | ||

|

|

6ad12b7652 | ||

|

|

02887c6c9b | ||

|

|

1b645e1cc3 | ||

|

|

0a4b2a0488 | ||

|

|

d4ce6ddc52 | ||

|

|

5a5b989533 | ||

|

|

b57cffb0fa | ||

|

|

72aa95cacf | ||

|

|

14ef448937 | ||

|

|

1c10607c06 | ||

|

|

41e53a1f2a | ||

|

|

cde83e9a38 | ||

|

|

6c206b1c72 | ||

|

|

a474219e7b | ||

|

|

3b8d25eb31 | ||

|

|

0faa0aa668 | ||

|

|

0fcdcc93a2 | ||

|

|

461d5e72fe | ||

|

|

3f030a2121 | ||

|

|

7fb8e8662f | ||

|

|

dd3ab9cff2 | ||

|

|

97b518de12 | ||

|

|

d2a795c866 | ||

|

|

8a3d65be20 | ||

|

|

b2126d8ba5 | ||

|

|

6386411d21 | ||

|

|

4250244136 | ||

|

|

77c4f9993d | ||

|

|

c7c8417577 | ||

|

|

9d0985ded8 | ||

|

|

3663e10e33 | ||

|

|

5f37a82c3c | ||

|

|

026bf1dfd7 | ||

|

|

643a6e5080 | ||

|

|

5267502896 | ||

|

|

c3c152122d | ||

|

|

afeac097e5 | ||

|

|

e5cea64132 | ||

|

|

26da78cf15 | ||

|

|

179a1e1ca0 | ||

|

|

b379d275d1 | ||

|

|

133cdfb203 | ||

|

|

2b79edd9be | ||

|

|

3862a92e04 | ||

|

|

f4e3817fcc | ||

|

|

61f0f5d67c | ||

|

|

87f57551ea | ||

|

|

ee51efed69 | ||

|

|

5dab865681 | ||

|

|

8c0581eebc | ||

|

|

a72f9f422c | ||

|

|

1354a8c970 | ||

|

|

00a5115267 | ||

|

|

00282eab7b | ||

|

|

bec128de58 | ||

|

|

9edfa7b4fa | ||

|

|

a9af70e5f0 | ||

|

|

910caf593f | ||

|

|

02dc072dc7 | ||

|

|

78fb354452 | ||

|

|

66f5eca7fa | ||

|

|

be95396a57 | ||

|

|

59cbed429f | ||

|

|

d49df7aebb | ||

|

|

0aa9faad2e | ||

|

|

1337def888 | ||

|

|

4b100c558b | ||

|

|

1425a71ece | ||

|

|

a8524508fe | ||

|

|

a5ff973d53 | ||

|

|

337c9aa2c7 | ||

|

|

f1448403ac | ||

|

|

d0b5f77ec6 | ||

|

|

9cb22ffb60 | ||

|

|

f556962d82 | ||

|

|

d28448d519 | ||

|

|

c590a88ffd | ||

|

|

a1fc6c817b | ||

|

|

5554e52799 | ||

|

|

ca749eb4d2 | ||

|

|

41ceee3d24 | ||

|

|

5acfd52986 | ||

|

|

ec4c7b2f6a | ||

|

|

22a3d8f95f | ||

|

|

06b89ca277 | ||

|

|

9e5ffbd00a | ||

|

|

39e92ed778 | ||

|

|

68a3ec567a | ||

|

|

28231e81b3 | ||

|

|

b2ee0feeaa | ||

|

|

5541b6b366 | ||

|

|

408a5fe27e | ||

|

|

bffc73f976 | ||

|

|

bd6edfc9dd | ||

|

|

2cb24e8a94 | ||

|

|

a49779c4d2 | ||

|

|

15a5a5f5df | ||

|

|

b5e0558d6e | ||

|

|

4d683b23fc | ||

|

|

c13da606b2 | ||

|

|

c792f9277c | ||

|

|

b430f42622 | ||

|

|

fee822f5ae | ||

|

|

192659ecbd | ||

|

|

810431b9e2 | ||

|

|

02d845adf3 | ||

|

|

89c7b960fb | ||

|

|

ed1e399a56 | ||

|

|

8a3ce1ae57 | ||

|

|

d89ff649f8 | ||

|

|

24a73b5d1c | ||

|

|

4d0c40ff8a | ||

|

|

b5a2bed539 | ||

|

|

0efb79f571 | ||

|

|

df944b9a0f | ||

|

|

2c11846430 | ||

|

|

0035c01186 | ||

|

|

34be3384fe | ||

|

|

ebbc7b3335 | ||

|

|

4ccc8c3086 | ||

|

|

af9ebc9568 | ||

|

|

ca4b61c5f0 | ||

|

|

393839b3ab | ||

|

|

dadfc96e00 | ||

|

|

a0a33aef03 | ||

|

|

99ed81e0f5 | ||

|

|

5b697db219 | ||

|

|

8e5bf46e14 | ||

|

|

9f649b0900 | ||

|

|

abb15e06d3 | ||

|

|

11a317493e | ||

|

|

e8cece0c1b | ||

|

|

1ab882f81d | ||

|

|

b9338186e3 | ||

|

|

7c3cbff425 | ||

|

|

1ff0afc633 | ||

|

|

bfe7ee8fba | ||

|

|

49c73ed10e | ||

|

|

f571baacf9 | ||

|

|

6f02e1114c | ||

|

|

e230f43565 | ||

|

|

0d9593e71b | ||

|

|

20778ecfb0 | ||

|

|

2ea991d960 | ||

|

|

119c107834 | ||

|

|

800a0d0449 | ||

|

|

95c43f0189 | ||

|

|

9c77176c7f | ||

|

|

ddb6a55cd6 | ||

|

|

56a4f6fdd7 | ||

|

|

8a30f788b5 | ||

|

|

380a1c2c8c | ||

|

|

cd8e8335cf | ||

|

|

6e1beb54a4 | ||

|

|

9217c965dd | ||

|

|

a4d71ef487 | ||

|

|

518f332047 | ||

|

|

9257d497b8 | ||

|

|

07cf5de4f7 | ||

|

|

43ad69e48d | ||

|

|

c62e236cc6 | ||

|

|

15a2fbb293 | ||

|

|

16800c3fa0 | ||

|

|

ce09f41aa3 | ||

|

|

47dc2f036a | ||

|

|

f27a154bfd | ||

|

|

79757366e8 | ||

|

|

2cd9a417d6 | ||

|

|

deb05c6cc3 | ||

|

|

b6f171de51 | ||

|

|

a58d5f6999 | ||

|

|

e0b3f3eb45 | ||

|

|

4bbc8594a7 | ||

|

|

3a377300e1 | ||

|

|

33a07e3a86 | ||

|

|

212cafc1d7 | ||

|

|

2643b3cbcc | ||

|

|

d445229b6d | ||

|

|

dab5c451b0 | ||

|

|

7bdf06131a | ||

|

|

854648d5af | ||

|

|

c5f7b97359 | ||

|

|

dd8a727ad6 | ||

|

|

6c627fe422 | ||

|

|

ee980e1caf | ||

|

|

22bfaf6527 | ||

|

|

48ab48cc30 | ||

|

|

a0b14d4127 | ||

|

|

03f9fe1a70 | ||

|

|

8915b8d796 | ||

|

|

c77ffeeec0 | ||

|

|

4acf5660b2 | ||

|

|

2d9f0a668c | ||

|

|

9e6cb246cc | ||

|

|

14544ca63d | ||

|

|

26b347c04c | ||

|

|

36f75d1811 | ||

|

|

27fc787294 | ||

|

|

d23286d390 | ||

|

|

7c3ccc76c3 | ||

|

|

892dc5d4f3 | ||

|

|

e278692749 | ||

|

|

8d77dd2246 | ||

|

|

14ede2a585 | ||

|

|

5b525622f1 | ||

|

|

a24b11905c | ||

|

|

5d70858341 | ||

|

|

3daa006741 | ||

|

|

0bcc0c2101 | ||

|

|

b8850c808c | ||

|

|

f4f2c01ac1 | ||

|

|

7072e82dff | ||

|

|

53dc36c4cf | ||

|

|

5aadc3af00 | ||

|

|

8c28a698ed | ||

|

|

5ed6d8b202 | ||

|

|

b73dc7bf5e | ||

|

|

71d0f4ab63 | ||

|

|

d479dcde81 | ||

|

|

ae536017d5 | ||

|

|

67ddfce279 | ||

|

|

b1f39b34d7 | ||

|

|

6cf958ccce | ||

|

|

eaed3677d3 | ||

|

|

b9c88da54d | ||

|

|

104ae77f7a | ||

|

|

bfcb2ce61b | ||

|

|

63ba5fed09 | ||

|

|

98a8464933 | ||

|

|

7e3e6726e0 | ||

|

|

09567b2bb2 | ||

|

|

f3bd116184 | ||

|

|

7509737563 | ||

|

|

cfb815d879 | ||

|

|

44241fb967 | ||

|

|

c4b45129bd | ||

|

|

70741008ca | ||

|

|

6c2d2cae2a | ||

|

|

28f13d3311 | ||

|

|

4e31aaa8fb | ||

|

|

ba99f0c2cc | ||

|

|

e0a96b4937 | ||

|

|

82c055f527 | ||

|

|

f94008192c | ||

|

|

3895d5279e | ||

|

|

3d85ecc525 | ||

|

|

7da00796e5 | ||

|

|

6086419cb6 | ||

|

|

5bc1f2f2c0 | ||

|

|

32a83b211e | ||

|

|

bead7b3a7f | ||

|

|

815d6d6572 | ||

|

|

95ce812992 | ||

|

|

9a36f4748c | ||

|

|

50b7849a35 | ||

|

|

6f1245b27c | ||

|

|

cc87ed3899 | ||

|

|

1d9037fefe | ||

|

|

03016e2d16 | ||

|

|

3d41617f4e | ||

|

|

35151ffdd1 | ||

|

|

4527d41a7a | ||

|

|

553cba12f3 | ||

|

|

116e068ac3 | ||

|

|

1010dd2d28 | ||

|

|

7354242906 | ||

|

|

e7d0b158e9 | ||

|

|

330c4657b1 | ||

|

|

72a109f109 | ||

|

|

cf45c51dfb | ||

|

|

0b013adb34 | ||

|

|

7457d91f64 | ||

|

|

7fe1159426 | ||

|

|

c2665e3677 | ||

|

|

d63de803a4 | ||

|

|

11aca3513c | ||

|

|

561c9f40e5 | ||

|

|

54ed13aadf | ||

|

|

109cc21337 | ||

|

|

7e46b30fa5 | ||

|

|

0ba112c2c7 | ||

|

|

fc15d94170 | ||

|

|

dcb37d9c55 | ||

|

|

755b9d6342 | ||

|

|

3d6151c94f | ||

|

|

590bd8c4b9 | ||

|

|

e99aafd876 | ||

|

|

1f0adf8bcf | ||

|

|

dbd5d5fb43 | ||

|

|

a8b0e3641b | ||

|

|

9efb350be9 | ||

|

|

8d9820b3fb | ||

|

|

103f89551a | ||

|

|

6030d961ad | ||

|

|

ee08c9e17f | ||

|

|

48dd9a3240 | ||

|

|

e122e206a6 | ||

|

|

398b905758 | ||

|

|

dc2ec08fe3 | ||

|

|

3bf5edf5c9 | ||

|

|

134bca526c | ||

|

|

3393e58b06 | ||

|

|

eab6cdeee4 | ||

|

|

e8ec1ce8e3 | ||

|

|

b3581564ed | ||

|

|

29e1bd95fd | ||

|

|

8bff401c14 | ||

|

|

41798e9255 | ||

|

|

9e4f0228d1 | ||

|

|

76ee93c98c | ||

|

|

fb1a89efb7 | ||

|

|

aface43554 | ||

|

|

a35f0157b2 | ||

|

|

9b32162906 | ||

|

|

21bba62572 | ||

|

|

302327d6b3 | ||

|

|

5667e8bcbb | ||

|

|

ae66bd0e31 | ||

|

|

48dfadc02d | ||

|

|

3df6272bb6 | ||

|

|

e7f9bcda01 | ||

|

|

205044ca66 | ||

|

|

d497eb1f00 | ||

|

|

4e6f970ee9 | ||

|

|

0b6cdda6f5 | ||

|

|

a896ded763 | ||

|

|

fb5dd9ebc2 | ||

|

|

c8b7db6c38 | ||

|

|

44a3191be3 | ||

|

|

b4f7cdc9e7 | ||

|

|

8da07018d5 | ||

|

|

0c19a27065 | ||

|

|

3296b0ecdf | ||

|

|

0a07261124 | ||

|

|

33106d0ecf | ||

|

|

5bb887206a | ||

|

|

b30b0e27cb | ||

|

|

363736489c | ||

|

|

8dbf5e87a0 | ||

|

|

0b30f2cb50 | ||

|

|

ba5265dac4 | ||

|

|

ecb9c65917 | ||

|

|

8a98474600 | ||

|

|

b072216e67 | ||

|

|

cfb3181716 | ||

|

|

ab684cdc99 | ||

|

|

facadc3a44 | ||

|

|

281319d2da | ||

|

|

5cb203685c | ||

|

|

01fa37900b | ||

|

|

edbe744e17 | ||

|

|

2a32a1a4a8 | ||

|

|

404bdb21e6 | ||

|

|

b260c9a512 | ||

|

|

4b941adb6a | ||

|

|

bd752550a8 | ||

|

|

b8b71bb961 | ||

|

|

5aaf7a4092 | ||

|

|

030e02ffb8 | ||

|

|

d962aa03f4 | ||

|

|

9e4a2aae43 | ||

|

|

ee6eb685e7 | ||

|

|

09a38a32ce | ||

|

|

d13b19d43d | ||

|

|

e730dca1ad | ||

|

|

8da30640bb | ||

|

|

6f4eb88e07 | ||

|

|

d9592b9dab | ||

|

|

b87ada72aa | ||

|

|

83363ba1f0 | ||

|

|

23ebe7f718 | ||

|

|

e04264cfa3 | ||

|

|

8d29e5037f | ||

|

|

6926ed45b0 | ||

|

|

736b85b8bb | ||

|

|

9e3361bc31 | ||

|

|

6e10381020 | ||

|

|

a1d37d379c | ||

|

|

07d87db7a2 | ||

|

|

4e556673d2 | ||

|

|

f421304fc1 | ||

|

|

c9271b1686 | ||

|

|

12eb6863da | ||

|

|

4834874091 | ||

|

|

8759ebf200 | ||

|

|

d4715aebef | ||

|

|

0fe2ade7bb | ||

|

|

0c71565535 | ||

|

|

6a637091a2 | ||

|

|

31eba60012 | ||

|

|

51e58e9078 | ||

|

|

4a1e76730a | ||

|

|

5599bb028b | ||

|

|

552c6da0cc | ||

|

|

cc6817a891 | ||

|

|

fb48d1b485 | ||

|

|

1c336dad6b | ||

|

|

a4940d46cd | ||

|

|

499b2f44c1 | ||

|

|

2b200c9281 | ||

|

|

36a900c98f | ||

|

|

5236b03f66 | ||

|

|

8be35e3621 | ||

|

|

509f00fe89 | ||

|

|

a98b87f148 | ||

|

|

ae9b2b3b72 | ||

|

|

02e1ec0ae3 | ||

|

|

daefb0f120 | ||

|

|

ff0604e3b6 | ||

|

|

20e41e22fa | ||

|

|

a0e3bdd594 | ||

|

|

6580aaf3ad | ||

|

|

0b46701b60 | ||

|

|

0bb4effede | ||

|

|

b07082a52d | ||

|

|

04f267f5a7 | ||

|

|

03ccce2804 | ||

|

|

e894bd9f24 | ||

|

|

10e6988273 | ||

|

|

905b61e5d8 | ||

|

|

ee69d393ae | ||

|

|

cab39973ae | ||

|

|

d93f5d07bb | ||

|

|

ba00ffe1ae | ||

|

|

6afaf5eaf5 | ||

|

|

d30459cc34 | ||

|

|

e92fbb7b1b | ||

|

|

42d464b532 | ||

|

|

c2e9e5c63a | ||

|

|

bc36726925 | ||

|

|

7abbff8c31 | ||

|

|

6236f4bcf4 | ||

|

|

3c3e80f77f | ||

|

|

4aae2fb289 | ||

|

|

66ff07752f | ||

|

|

5cf92f2742 | ||

|

|

6d3fddc474 | ||

|

|

66d4ad6174 | ||

|

|

2a366a1607 | ||

|

|

d87a0995b4 | ||

|

|

9a73a41e04 | ||

|

|

ba041b36bc | ||

|

|

f5f9de69b4 | ||

|

|

71e56c62e8 | ||

|

|

0f496619fd | ||

|

|

5fdd6a441a | ||

|

|

00f287bb63 | ||

|

|

785268efa6 | ||

|

|

2c976d9394 | ||

|

|

1e32582642 | ||

|

|

6f8f6d07f5 | ||

|

|

3958111e76 | ||

|

|

86fcc4af74 | ||

|

|

2fd26756df | ||

|

|

478f4b74d8 | ||

|

|

73d0d2a1bb | ||

|

|

546db08ec4 | ||

|

|

0dd41a8670 | ||

|

|

82c0c89f46 | ||

|

|

c3798bf4c2 | ||

|

|

ff80b6ccb0 | ||

|

|

e729217116 | ||

|

|

94c695daca | ||

|

|

9f189f0420 | ||

|

|

ad09e53f60 | ||

|

|

092a7a5f3f | ||

|

|

f45649bd25 | ||

|

|

2595cc5ed7 | ||

|

|

2f62190c6f | ||

|

|

577314984c | ||

|

|

f0346b955b | ||

|

|

70139ded4a | ||

|

|

bf379900e1 | ||

|

|

9bafc90f5e | ||

|

|

fce0d9e88e | ||

|

|

2b3b154989 | ||

|

|

948d2440a1 | ||

|

|

5adbe1ce7a | ||

|

|

8157d34ffa | ||

|

|

3ec8cb2204 | ||

|

|

0daa826543 | ||

|

|

a66028da58 | ||

|

|

807c9e6872 | ||

|

|

e71f3774ba | ||

|

|

dd7314bf10 | ||

|

|

f33bc127dc | ||

|

|

db92b87782 | ||

|

|

eba41c8693 | ||

|

|

c855308162 | ||

|

|

73d971bed8 | ||

|

|

bcfe0c2874 | ||

|

|

931ff666ae | ||

|

|

18b6d86cc4 | ||

|

|

3c5efa0662 | ||

|

|

9b739bcbbf | ||

|

|

db89076e48 | ||

|

|

19b341ef18 | ||

|

|

be3713b1a3 | ||

|

|

99c4415cfb | ||

|

|

7b311f2ccf | ||

|

|

4aeabfe0a7 | ||

|

|

431ed02194 | ||

|

|

07f587ed83 | ||

|

|

0408341d82 | ||

|

|

5b3c9432f3 | ||

|

|

4a197e63f9 | ||

|

|

0876a12fe9 | ||

|

|

c43c7ecc03 | ||

|

|

4a6dee3044 | ||

|

|

019acdd840 | ||

|

|

1c98512720 | ||

|

|

23a09ad546 | ||

|

|

0836e8fe7c | ||

|

|

90196af8f8 | ||

|

|

566fe05772 | ||

|

|

18772c6292 | ||

|

|

6278bddc9b | ||

|

|

f74bf71735 | ||

|

|

efe9ed68b2 | ||

|

|

7c1e75865d | ||

|

|

a0aee41f1a | ||

|

|

2049dd75f4 | ||

|

|

0864c35ba9 | ||

|

|

92c9f66671 | ||

|

|

815784e809 | ||

|

|

2795d00d1e | ||

|

|

86dd0b4963 | ||

|

|

77a4f4819f | ||

|

|

b63d603482 | ||

|

|

e569b4e613 | ||

|

|

8a70997546 | ||

|

|

80d0a0f882 | ||

|

|

70b3997874 | ||

|

|

e8e4311068 | ||

|

|

c58b93ff51 | ||

|

|

7d8ebfe91b | ||

|

|

810381eab2 | ||

|

|

61dc6cf2de | ||

|

|

0205ebad2a | ||

|

|

09a94133ac | ||

|

|

1eb3c3b219 | ||

|

|

457845bb51 | ||

|

|

0c11b46585 | ||

|

|

c35100d9e9 | ||

|

|

847031cb04 | ||

|

|

f8d87bb452 | ||

|

|

f60b3505e0 | ||

|

|

addefbc511 | ||

|

|

c4314b25a3 | ||

|

|

921bb86127 | ||

|

|

b3a7fb9c3e | ||

|

|

c143c81a7e | ||

|

|

dd389ba0f8 | ||

|

|

46b1649ab8 | ||

|

|

89710412e4 | ||

|

|

931973b632 | ||

|

|

60aaa838e3 | ||

|

|

1246538bbb | ||

|

|

80518abf9d | ||

|

|

fc1ae2a18e | ||

|

|

3fd8d2049c | ||

|

|

35a6bcf20c | ||

|

|

0d75fc331e | ||

|

|

0a23e793e3 | ||

|

|

2c1c03e063 | ||

|

|

64059d2949 | ||

|

|

648aa7c4d3 | ||

|

|

274bb81a08 | ||

|

|

e2c90b4681 | ||

|

|

fa0a98ac6e | ||

|

|

e6e7b42415 | ||

|

|

0b7ef2e1d4 | ||

|

|

2fac67a9f9 | ||

|

|

8b9892de2e | ||

|

|

b3290dc909 | ||

|

|

3e3176eddb | ||

|

|

b1ef84894a | ||

|

|

c6cffc92c4 | ||

|

|

efb9fd2712 | ||

|

|

94b294ff93 | ||

|

|

99a9e33648 | ||

|

|

055d94a919 | ||

|

|

0978005240 | ||

|

|

1f796581ec | ||

|

|

f3a1716dad | ||

|

|

a1c3a0db1f | ||

|

|

9f80cc8a6b | ||

|

|

133786846e | ||

|

|

bdf297a5c6 | ||

|

|

6767254eb0 | ||

|

|

691cebd479 | ||

|

|

f3932cbf29 | ||

|

|

3f73a97037 | ||

|

|

226f1f5be4 | ||

|

|

7e45c07660 | ||

|

|

0c815036b9 |

@@ -1 +0,0 @@

|

|||||||

PYPI_TOKEN=your-pypi-token

|

|

||||||

70

.github/workflows/ci.yml

vendored


@@ -1,70 +0,0 @@

|

|||||||

name: CI

|

|

||||||

|

|

||||||

on:

|

|

||||||

push:

|

|

||||||

branches: [ main, feat/*, fix/* ]

|

|

||||||

pull_request:

|

|

||||||

branches: [ main ]

|

|

||||||

|

|

||||||

jobs:

|

|

||||||

validate-openapi:

|

|

||||||

name: Validate OpenAPI Specification

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v4

|

|

||||||

|

|

||||||

- name: Check if OpenAPI changed

|

|

||||||

id: openapi-changed

|

|

||||||

uses: tj-actions/changed-files@v44

|

|

||||||

with:

|

|

||||||

files: openapi.yaml

|

|

||||||

|

|

||||||

- name: Setup Node.js

|

|

||||||

if: steps.openapi-changed.outputs.any_changed == 'true'

|

|

||||||

uses: actions/setup-node@v4

|

|

||||||

with:

|

|

||||||

node-version: '18'

|

|

||||||

|

|

||||||

- name: Install Redoc CLI

|

|

||||||

if: steps.openapi-changed.outputs.any_changed == 'true'

|

|

||||||

run: |

|

|

||||||

npm install -g @redocly/cli

|

|

||||||

|

|

||||||

- name: Validate OpenAPI specification

|

|

||||||

if: steps.openapi-changed.outputs.any_changed == 'true'

|

|

||||||

run: |

|

|

||||||

redocly lint openapi.yaml

|

|

||||||

|

|

||||||

code-quality:

|

|

||||||

name: Code Quality Checks

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v4

|

|

||||||

with:

|

|

||||||

fetch-depth: 0 # Fetch all history for proper diff

|

|

||||||

|

|

||||||

- name: Get changed Python files

|

|

||||||

id: changed-py-files

|

|

||||||

uses: tj-actions/changed-files@v44

|

|

||||||

with:

|

|

||||||

files: |

|

|

||||||

**/*.py

|

|

||||||

files_ignore: |

|

|

||||||

comfyui_manager/legacy/**

|

|

||||||

|

|

||||||

- name: Setup Python

|

|

||||||

if: steps.changed-py-files.outputs.any_changed == 'true'

|

|

||||||

uses: actions/setup-python@v5

|

|

||||||

with:

|

|

||||||

python-version: '3.9'

|

|

||||||

|

|

||||||

- name: Install dependencies

|

|

||||||

if: steps.changed-py-files.outputs.any_changed == 'true'

|

|

||||||

run: |

|

|

||||||

pip install ruff

|

|

||||||

|

|

||||||

- name: Run ruff linting on changed files

|

|

||||||

if: steps.changed-py-files.outputs.any_changed == 'true'

|

|

||||||

run: |

|

|

||||||

echo "Changed files: ${{ steps.changed-py-files.outputs.all_changed_files }}"

|

|

||||||

echo "${{ steps.changed-py-files.outputs.all_changed_files }}" | xargs -r ruff check

|

|

||||||
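The removed `code-quality` job lints only the changed Python files, skipping anything under `comfyui_manager/legacy/`. A minimal Python sketch of that selection rule follows. The function name `select_lintable` is hypothetical, and the logic only approximates the glob semantics of `tj-actions/changed-files`:

```python
from pathlib import PurePosixPath
from typing import Iterable, List

# Directory excluded by `files_ignore: comfyui_manager/legacy/**` in the workflow.
LEGACY_DIR = PurePosixPath("comfyui_manager/legacy")


def select_lintable(changed_files: Iterable[str]) -> List[str]:
    """Keep changed .py files that are not under the legacy directory."""
    selected = []
    for name in changed_files:
        path = PurePosixPath(name)
        if path.suffix != ".py":
            continue  # approximates the `**/*.py` include pattern
        if LEGACY_DIR in path.parents:
            continue  # approximates the legacy-directory ignore pattern
        selected.append(name)
    return selected
```

In the workflow itself, the resulting list is piped to `xargs -r ruff check`, so an empty selection skips the `ruff` run.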

2

.github/workflows/publish-to-pypi.yml

vendored


@@ -4,7 +4,7 @@ on:

|

|||||||

workflow_dispatch:

|

workflow_dispatch:

|

||||||

push:

|

push:

|

||||||

branches:

|

branches:

|

||||||

- main

|

- draft-v4

|

||||||

paths:

|

paths:

|

||||||

- "pyproject.toml"

|

- "pyproject.toml"

|

||||||

|

|

||||||

|

|||||||

2

.github/workflows/publish.yml

vendored


@@ -14,7 +14,7 @@ jobs:

|

|||||||

publish-node:

|

publish-node:

|

||||||

name: Publish Custom Node to registry

|

name: Publish Custom Node to registry

|

||||||

runs-on: ubuntu-latest

|

runs-on: ubuntu-latest

|

||||||

if: ${{ github.repository_owner == 'ltdrdata' || github.repository_owner == 'Comfy-Org' }}

|

if: ${{ github.repository_owner == 'ltdrdata' }}

|

||||||

steps:

|

steps:

|

||||||

- name: Check out code

|

- name: Check out code

|

||||||

uses: actions/checkout@v4

|

uses: actions/checkout@v4

|

||||||

|

|||||||

4

.gitignore

vendored


@@ -18,7 +18,3 @@ pip_overrides.json

|

|||||||

*.json

|

*.json

|

||||||

check2.sh

|

check2.sh

|

||||||

/venv/

|

/venv/

|

||||||

build

|

|

||||||

dist

|

|

||||||

*.egg-info

|

|

||||||

.env

|

|

||||||

@@ -1,47 +0,0 @@

|

|||||||

## Testing Changes

|

|

||||||

|

|

||||||

1. Activate the ComfyUI environment.

|

|

||||||

|

|

||||||

2. Build package locally after making changes.

|

|

||||||

|

|

||||||

```bash

|

|

||||||

# from inside the ComfyUI-Manager directory, with the ComfyUI environment activated

|

|

||||||

python -m build

|

|

||||||

```

|

|

||||||

|

|

||||||

3. Install the package locally in the ComfyUI environment.

|

|

||||||

|

|

||||||

```bash

|

|

||||||

# Uninstall existing package

|

|

||||||

pip uninstall comfyui-manager

|

|

||||||

|

|

||||||

# Install the local package

|

|

||||||

pip install dist/comfyui-manager-*.whl

|

|

||||||

```

|

|

||||||

|

|

||||||

4. Start ComfyUI.

|

|

||||||

|

|

||||||

```bash

|

|

||||||

# after navigating to the ComfyUI directory

|

|

||||||

python main.py

|

|

||||||

```

|

|

||||||

|

|

||||||

## Manually Publish Test Version to PyPi

|

|

||||||

|

|

||||||

1. Set the `PYPI_TOKEN` environment variable in env file.

|

|

||||||

|

|

||||||

2. If manually publishing, you likely want to use a release candidate version, so set the version in [pyproject.toml](pyproject.toml) to something like `0.0.1rc1`.

|

|

||||||

|

|

||||||

3. Build the package.

|

|

||||||

|

|

||||||

```bash

|

|

||||||

python -m build

|

|

||||||

```

|

|

||||||

|

|

||||||

4. Upload the package to PyPi.

|

|

||||||

|

|

||||||

```bash

|

|

||||||

python -m twine upload dist/* --username __token__ --password $PYPI_TOKEN

|

|

||||||

```

|

|

||||||

|

|

||||||

5. View at https://pypi.org/project/comfyui-manager/

|

|

||||||

14

MANIFEST.in


@@ -1,14 +0,0 @@

|

|||||||

include comfyui_manager/js/*

|

|

||||||

include comfyui_manager/*.json

|

|

||||||

include comfyui_manager/glob/*

|

|

||||||

include LICENSE.txt

|

|

||||||

include README.md

|

|

||||||

include requirements.txt

|

|

||||||

include pyproject.toml

|

|

||||||

include custom-node-list.json

|

|

||||||

include extension-node-list.json

|

|

||||||

include extras.json

|

|

||||||

include github-stats.json

|

|

||||||

include model-list.json

|

|

||||||

include alter-list.json

|

|

||||||

include comfyui_manager/channels.list.template

|

|

||||||

123

README.md


@@ -5,7 +5,7 @@

|

|||||||

|

|

||||||

|

|

||||||

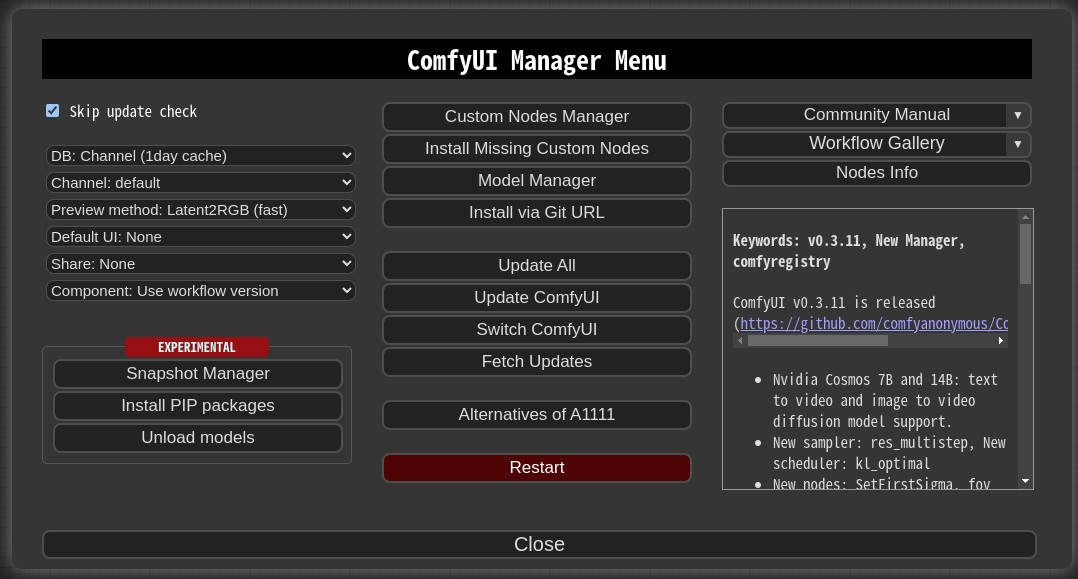

## NOTICE

|

## NOTICE

|

||||||

* V4.0: Modify the structure to be installable via pip instead of using git clone.

|

* V3.38: **Security patch** - Manager data migrated to protected path. See [Migration Guide](docs/en/v3.38-userdata-security-migration.md).

|

||||||

* V3.16: Support for `uv` has been added. Set `use_uv` in `config.ini`.

|

* V3.16: Support for `uv` has been added. Set `use_uv` in `config.ini`.

|

||||||

* V3.10: `double-click feature` is removed

|

* V3.10: `double-click feature` is removed

|

||||||

* This feature has been moved to https://github.com/ltdrdata/comfyui-connection-helper

|

* This feature has been moved to https://github.com/ltdrdata/comfyui-connection-helper

|

||||||

@@ -14,26 +14,78 @@

|

|||||||

|

|

||||||

## Installation

|

## Installation

|

||||||

|

|

||||||

* When installing the latest ComfyUI, it will be automatically installed as a dependency, so manual installation is no longer necessary.

|

### Installation[method1] (General installation method: ComfyUI-Manager only)

|

||||||

|

|

||||||

* Manual installation of the nightly version:

|

To install ComfyUI-Manager in addition to an existing installation of ComfyUI, you can follow the following steps:

|

||||||

* Clone to a temporary directory (**Note:** Do **not** clone into `ComfyUI/custom_nodes`.)

|

|

||||||

```

|

|

||||||

git clone https://github.com/Comfy-Org/ComfyUI-Manager

|

|

||||||

```

|

|

||||||

* Install via pip

|

|

||||||

```

|

|

||||||

cd ComfyUI-Manager

|

|

||||||

pip install .

|

|

||||||

```

|

|

||||||

|

|

||||||

|

1. Go to `ComfyUI/custom_nodes` dir in terminal (cmd)

|

||||||

|

2. `git clone https://github.com/ltdrdata/ComfyUI-Manager comfyui-manager`

|

||||||

|

3. Restart ComfyUI

|

||||||

|

|

||||||

|

|

||||||

|

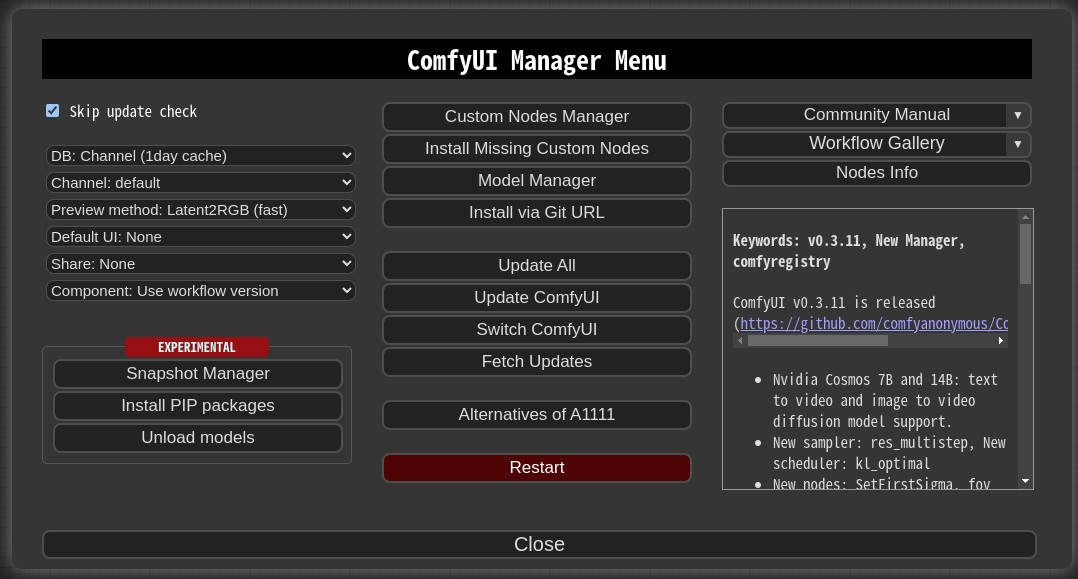

### Installation[method2] (Installation for portable ComfyUI version: ComfyUI-Manager only)

|

||||||

|

1. install git

|

||||||

|

- https://git-scm.com/download/win

|

||||||

|

- standalone version

|

||||||

|

- select option: use windows default console window

|

||||||

|

2. Download [scripts/install-manager-for-portable-version.bat](https://github.com/ltdrdata/ComfyUI-Manager/raw/main/scripts/install-manager-for-portable-version.bat) into installed `"ComfyUI_windows_portable"` directory

|

||||||

|

- Don't click. Right-click the link and choose 'Save As...'

|

||||||

|

3. Double-click `install-manager-for-portable-version.bat` batch file

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### Installation[method3] (Installation through comfy-cli: install ComfyUI and ComfyUI-Manager at once.)

|

||||||

|

> RECOMMENDED: comfy-cli provides various features to manage ComfyUI from the CLI.

|

||||||

|

|

||||||

|

* **prerequisite: python 3, git**

|

||||||

|

|

||||||

|

Windows:

|

||||||

|

```commandline

|

||||||

|

python -m venv venv

|

||||||

|

venv\Scripts\activate

|

||||||

|

pip install comfy-cli

|

||||||

|

comfy install

|

||||||

|

```

|

||||||

|

|

||||||

|

Linux/macOS:

|

||||||

|

```commandline

|

||||||

|

python -m venv venv

|

||||||

|

. venv/bin/activate

|

||||||

|

pip install comfy-cli

|

||||||

|

comfy install

|

||||||

|

```

|

||||||

* See also: https://github.com/Comfy-Org/comfy-cli

|

* See also: https://github.com/Comfy-Org/comfy-cli

|

||||||

|

|

||||||

|

|

||||||

## Front-end

|

### Installation[method4] (Installation for Linux+venv: ComfyUI + ComfyUI-Manager)

|

||||||

|

|

||||||

* The built-in front-end of ComfyUI-Manager is the legacy front-end. The front-end for ComfyUI-Manager is now provided via [ComfyUI Frontend](https://github.com/Comfy-Org/ComfyUI_frontend).

|

To install ComfyUI with ComfyUI-Manager on Linux using a venv environment, you can follow these steps:

|

||||||

* To enable the legacy front-end, set the environment variable `ENABLE_LEGACY_COMFYUI_MANAGER_FRONT` to `true` before running.

|

* **prerequisite: python-is-python3, python3-venv, git**

|

||||||

|

|

||||||

|

1. Download [scripts/install-comfyui-venv-linux.sh](https://github.com/ltdrdata/ComfyUI-Manager/raw/main/scripts/install-comfyui-venv-linux.sh) into empty install directory

|

||||||

|

- Don't click. Right-click the link and choose 'Save As...'

|

||||||

|

- ComfyUI will be installed in the subdirectory of the specified directory, and the directory will contain the generated executable script.

|

||||||

|

2. `chmod +x install-comfyui-venv-linux.sh`

|

||||||

|

3. `./install-comfyui-venv-linux.sh`

|

||||||

|

|

||||||

|

### Installation Precautions

|

||||||

|

* **DO**: `ComfyUI-Manager` files must be accurately located in the path `ComfyUI/custom_nodes/comfyui-manager`

|

||||||

|

* Installing in a compressed file format is not recommended.

|

||||||

|

* **DON'T**: Decompress directly into the `ComfyUI/custom_nodes` location, resulting in the Manager contents like `__init__.py` being placed directly in that directory.

|

||||||

|

* You have to remove all ComfyUI-Manager files from `ComfyUI/custom_nodes`

|

||||||

|

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager/ComfyUI-Manager`.

|

||||||

|

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager-main`.

|

||||||

|

* In such cases, `ComfyUI-Manager` may operate, but it won't be recognized within `ComfyUI-Manager`, and updates cannot be performed. It also poses the risk of duplicate installations. Remove it and install properly via `git clone` method.

|

||||||

|

|

||||||

|

|

||||||

|

You can execute ComfyUI by running either `./run_gpu.sh` or `./run_cpu.sh` depending on your system configuration.

|

||||||

|

|

||||||

|

## Colab Notebook

|

||||||

|

This repository provides Colab notebooks that allow you to install and use ComfyUI, including ComfyUI-Manager. To use ComfyUI, [click on this link](https://colab.research.google.com/github/ltdrdata/ComfyUI-Manager/blob/main/notebooks/comfyui_colab_with_manager.ipynb).

|

||||||

|

* Support for installing ComfyUI

|

||||||

|

* Support for basic installation of ComfyUI-Manager

|

||||||

|

* Support for automatically installing dependencies of custom nodes upon restarting Colab notebooks.

|

||||||

|

|

||||||

|

|

||||||

## How To Use

|

## How To Use

|

||||||

@@ -89,20 +141,27 @@

|

|||||||

|

|

||||||

|

|

||||||

## Paths

|

## Paths

|

||||||

In `ComfyUI-Manager` V3.0 and later, configuration files and dynamically generated files are located under `<USER_DIRECTORY>/default/ComfyUI-Manager/`.

|

Starting from V3.38, Manager uses a protected system path for enhanced security.

|

||||||

|

|

||||||

* <USER_DIRECTORY>

|

* <USER_DIRECTORY>

|

||||||

* If executed without any options, the path defaults to ComfyUI/user.

|

* If executed without any options, the path defaults to ComfyUI/user.

|

||||||

* It can be set using --user-directory <USER_DIRECTORY>.

|

* It can be set using --user-directory <USER_DIRECTORY>.

|

||||||

|

|

||||||

* Basic config files: `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini`

|

| ComfyUI Version | Manager Path |

|

||||||

* Configurable channel lists: `<USER_DIRECTORY>/default/ComfyUI-Manager/channels.ini`

|

|-----------------|--------------|

|

||||||

* Configurable pip overrides: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_overrides.json`

|

| v0.3.76+ (with System User API) | `<USER_DIRECTORY>/__manager/` |

|

||||||

* Configurable pip blacklist: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_blacklist.list`

|

| Older versions | `<USER_DIRECTORY>/default/ComfyUI-Manager/` |

|

||||||

* Configurable pip auto fix: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_auto_fix.list`

|

|

||||||

* Saved snapshot files: `<USER_DIRECTORY>/default/ComfyUI-Manager/snapshots`

|

* Basic config files: `config.ini`

|

||||||

* Startup script files: `<USER_DIRECTORY>/default/ComfyUI-Manager/startup-scripts`

|

* Configurable channel lists: `channels.list`

|

||||||

* Component files: `<USER_DIRECTORY>/default/ComfyUI-Manager/components`

|

* Configurable pip overrides: `pip_overrides.json`

|

||||||

|

* Configurable pip blacklist: `pip_blacklist.list`

|

||||||

|

* Configurable pip auto fix: `pip_auto_fix.list`

|

||||||

|

* Saved snapshot files: `snapshots/`

|

||||||

|

* Startup script files: `startup-scripts/`

|

||||||

|

* Component files: `components/`

|

||||||

|

|

||||||

|

> **Note**: See [Migration Guide](docs/en/v3.38-userdata-security-migration.md) for upgrade details.

|

||||||

|

|

||||||

|

|

||||||

## `extra_model_paths.yaml` Configuration

|

## `extra_model_paths.yaml` Configuration

|

||||||
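The path table on the V3.38 side of the hunk above can be read as a two-way choice. The sketch below illustrates it, assuming a hypothetical helper `manager_data_dir` and a boolean flag supplied by the caller; the diff does not show how Manager actually detects the System User API.

```python
from pathlib import Path


def manager_data_dir(user_directory: str, has_system_user_api: bool) -> Path:
    """Return the Manager data directory described in the V3.38 path table.

    `has_system_user_api` is an assumption of this sketch: the caller decides
    whether the running ComfyUI (v0.3.76+) offers the System User API.
    """
    base = Path(user_directory)
    if has_system_user_api:
        # Protected path used by newer ComfyUI versions.
        return base / "__manager"
    # Legacy location used by older ComfyUI versions.
    return base / "default" / "ComfyUI-Manager"


def config_file(user_directory: str, has_system_user_api: bool) -> Path:
    """Locate `config.ini` relative to the resolved Manager directory."""
    return manager_data_dir(user_directory, has_system_user_api) / "config.ini"
```

Under these assumptions, `config_file("ComfyUI/user", True)` resolves to `ComfyUI/user/__manager/config.ini`, and the same call with `False` resolves to the legacy `default/ComfyUI-Manager` location.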

@@ -125,7 +184,7 @@ The following settings are applied based on the section marked as `is_default`.

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

## cm-cli: command line tools for power user

|

## cm-cli: command line tools for power users

|

||||||

* A tool is provided that allows you to use the features of ComfyUI-Manager without running ComfyUI.

|

* A tool is provided that allows you to use the features of ComfyUI-Manager without running ComfyUI.

|

||||||

* For more details, please refer to the [cm-cli documentation](docs/en/cm-cli.md).

|

* For more details, please refer to the [cm-cli documentation](docs/en/cm-cli.md).

|

||||||

|

|

||||||

@@ -171,7 +230,7 @@ The following settings are applied based on the section marked as `is_default`.

|

|||||||

* `<current timestamp>` Ensure that the timestamp is always unique.

|

* `<current timestamp>` Ensure that the timestamp is always unique.

|

||||||

* "components" should have the same structure as the content of the file stored in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.

|

* "components" should have the same structure as the content of the file stored in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.

|

||||||

* `<component name>`: The name should be in the format `<prefix>::<node name>`.

|

* `<component name>`: The name should be in the format `<prefix>::<node name>`.

|

||||||

* `<compnent nodeata>`: In the nodedata of the group node.

|

* `<component node data>`: In the node data of the group node.

|

||||||

* `<version>`: Only two formats are allowed: `major.minor.patch` or `major.minor`. (e.g. `1.0`, `2.2.1`)

|

* `<version>`: Only two formats are allowed: `major.minor.patch` or `major.minor`. (e.g. `1.0`, `2.2.1`)

|

||||||

* `<datetime>`: Saved time

|

* `<datetime>`: Saved time

|

||||||

* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.

|

* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.

|

||||||
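The `<version>` rule in the hunk above (only `major.minor.patch` or `major.minor`) can be checked with a short regular expression. This is a minimal sketch; `is_valid_component_version` is a hypothetical helper name used for illustration, not a function taken from ComfyUI-Manager.

```python
import re

# Exactly two or three dot-separated groups of digits.
_VERSION_RE = re.compile(r"\d+\.\d+(\.\d+)?")


def is_valid_component_version(version: str) -> bool:
    """Return True for 'major.minor' or 'major.minor.patch' strings."""
    return _VERSION_RE.fullmatch(version) is not None


if __name__ == "__main__":
    for candidate in ("1.0", "2.2.1", "1", "1.0.0.0", "v1.0"):
        print(candidate, is_valid_component_version(candidate))
```

`fullmatch` is used rather than `match` so that trailing text such as a fourth component is rejected.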

@@ -189,7 +248,7 @@ The following settings are applied based on the section marked as `is_default`.

|

|||||||

* Dragging and dropping or pasting a single component will add a node. However, when adding multiple components, nodes will not be added.

|

* Dragging and dropping or pasting a single component will add a node. However, when adding multiple components, nodes will not be added.

|

||||||

|

|

||||||

|

|

||||||

## Support of missing nodes installation

|

## Support for installing missing nodes

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

@@ -228,10 +287,10 @@ The following settings are applied based on the section marked as `is_default`.

|

|||||||

* Logging to file feature

|

* Logging to file feature

|

||||||

* This feature is enabled by default and can be disabled by setting `file_logging = False` in the `config.ini`.

|

* This feature is enabled by default and can be disabled by setting `file_logging = False` in the `config.ini`.

|

||||||

|

|

||||||

* Fix node(recreate): When right-clicking on a node and selecting `Fix node (recreate)`, you can recreate the node. The widget's values are reset, while the connections maintain those with the same names.

|

* Fix node (recreate): When right-clicking on a node and selecting `Fix node (recreate)`, you can recreate the node. The widget's values are reset, while the connections maintain those with the same names.

|

||||||

* It is used to correct errors in nodes of old workflows created before, which are incompatible with the version changes of custom nodes.

|

* It is used to correct errors in nodes of old workflows created before, which are incompatible with the version changes of custom nodes.

|

||||||

|

|

||||||

* Double-Click Node Title: You can set the double click behavior of nodes in the ComfyUI-Manager menu.

|

* Double-Click Node Title: You can set the double-click behavior of nodes in the ComfyUI-Manager menu.

|

||||||

* `Copy All Connections`, `Copy Input Connections`: Double-clicking a node copies the connections of the nearest node.

|

* `Copy All Connections`, `Copy Input Connections`: Double-clicking a node copies the connections of the nearest node.

|

||||||

* This action targets the nearest node within a straight-line distance of 1000 pixels from the center of the node.

|

* This action targets the nearest node within a straight-line distance of 1000 pixels from the center of the node.

|

||||||

* In the case of `Copy All Connections`, it duplicates existing outputs, but since it does not allow duplicate connections, the existing output connections of the original node are disconnected.

|

* In the case of `Copy All Connections`, it duplicates existing outputs, but since it does not allow duplicate connections, the existing output connections of the original node are disconnected.

|

||||||

@@ -297,7 +356,7 @@ When you run the `scan.sh` script:

|

|||||||

|

|

||||||

* It updates the `github-stats.json`.

|

* It updates the `github-stats.json`.

|

||||||

* This uses the GitHub API, so set your token with `export GITHUB_TOKEN=your_token_here` to avoid quickly reaching the rate limit and malfunctioning.

|

* This uses the GitHub API, so set your token with `export GITHUB_TOKEN=your_token_here` to avoid quickly reaching the rate limit and malfunctioning.

|

||||||

* To skip this step, add the `--skip-update-stat` option.

|

* To skip this step, add the `--skip-stat-update` option.

|

||||||

|

|

||||||

* The `--skip-all` option applies both `--skip-update` and `--skip-stat-update`.

|

* The `--skip-all` option applies both `--skip-update` and `--skip-stat-update`.

|

||||||

|

|

||||||

@@ -305,9 +364,9 @@ When you run the `scan.sh` script:

|

|||||||

## Troubleshooting

|

## Troubleshooting

|

||||||

* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini` file that is generated.

|

* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini` file that is generated.

|

||||||

* If updating ComfyUI-Manager itself fails, please go to the **ComfyUI-Manager** directory and execute the command `git update-ref refs/remotes/origin/main a361cc1 && git fetch --all && git pull`.

|

* If updating ComfyUI-Manager itself fails, please go to the **ComfyUI-Manager** directory and execute the command `git update-ref refs/remotes/origin/main a361cc1 && git fetch --all && git pull`.

|

||||||

* If you encounter the error message `Overlapped Object has pending operation at deallocation on Comfyui Manager load` under Windows

|

* If you encounter the error message `Overlapped Object has pending operation at deallocation on ComfyUI Manager load` under Windows

|

||||||

* Edit `config.ini` file: add `windows_selector_event_loop_policy = True`

|

* Edit `config.ini` file: add `windows_selector_event_loop_policy = True`

|

||||||

* if `SSL: CERTIFICATE_VERIFY_FAILED` error is occured.

|

* If the `SSL: CERTIFICATE_VERIFY_FAILED` error occurs.

|

||||||

* Edit `config.ini` file: add `bypass_ssl = True`

|

* Edit `config.ini` file: add `bypass_ssl = True`

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

25

__init__.py

Normal file

@@ -0,0 +1,25 @@

|

|||||||

|

"""

|

||||||

|

This file is the entry point for the ComfyUI-Manager package, handling CLI-only mode and initial setup.

|

||||||

|

"""

|

||||||

|

|

||||||

|

import os

|

||||||

|

import sys

|

||||||

|

|

||||||

|

cli_mode_flag = os.path.join(os.path.dirname(__file__), '.enable-cli-only-mode')

|

||||||

|

|

||||||

|

if not os.path.exists(cli_mode_flag):

|

||||||

|

sys.path.append(os.path.join(os.path.dirname(__file__), "glob"))

|

||||||

|

import manager_server # noqa: F401

|

||||||

|

import share_3rdparty # noqa: F401

|

||||||

|

import cm_global

|

||||||

|

|

||||||

|

if not cm_global.disable_front and not 'DISABLE_COMFYUI_MANAGER_FRONT' in os.environ:

|

||||||

|

WEB_DIRECTORY = "js"

|

||||||

|

else:

|

||||||

|

print("\n[ComfyUI-Manager] !! cli-only-mode is enabled !!\n")

|

||||||

|

|

||||||

|

NODE_CLASS_MAPPINGS = {}

|

||||||

|

__all__ = ['NODE_CLASS_MAPPINGS']

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||
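The new `__init__.py` above decides between normal and CLI-only startup by testing for a flag file next to the package. The check can be sketched in isolation as follows; `is_cli_only_mode` and `FLAG_NAME` are hypothetical names for illustration, and only the file name `.enable-cli-only-mode` comes from the diff.

```python
import os
import tempfile

FLAG_NAME = ".enable-cli-only-mode"  # file name as it appears in the diff


def is_cli_only_mode(package_dir: str) -> bool:
    """True when the flag file exists directly inside package_dir."""
    return os.path.exists(os.path.join(package_dir, FLAG_NAME))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(is_cli_only_mode(tmp))  # flag file absent
        open(os.path.join(tmp, FLAG_NAME), "w").close()
        print(is_cli_only_mode(tmp))  # flag file present
```

A flag file keeps the switch out of `config.ini`, so it can be tested before any Manager module is imported.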

6

channels.list.template

Normal file

@@ -0,0 +1,6 @@

|

|||||||

|

default::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main

|

||||||

|

recent::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/new

|

||||||

|

legacy::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/legacy

|

||||||

|

forked::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/forked

|

||||||

|

dev::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/dev

|

||||||

|

tutorial::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/tutorial

|

||||||
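Each line of `channels.list.template` has the form `<name>::<url>`. A minimal parsing sketch is shown below; `parse_channels` is a hypothetical helper, and skipping blank or malformed lines is an assumption rather than documented Manager behavior.

```python
def parse_channels(text: str) -> dict:
    """Map channel name to URL for every '<name>::<url>' line."""
    channels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or "::" not in line:
            continue  # assumption: ignore blank or malformed lines
        # Split on the first '::' only; 'https://' contains ':/' but not '::'.
        name, url = line.split("::", 1)
        channels[name] = url
    return channels


if __name__ == "__main__":
    sample = (
        "default::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main\n"
        "dev::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/dev\n"
    )
    for name, url in parse_channels(sample).items():
        print(name, "->", url)
```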

4

check.sh

@@ -37,7 +37,7 @@ find ~/.tmp/default -name "*.py" -print0 | xargs -0 grep -E "crypto|^_A="

|

|||||||

|

|

||||||

echo

|

echo

|

||||||

echo CHECK3

|

echo CHECK3

|

||||||

find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*https\\?:"

|

find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*[^#]*https\?:"

|

||||||

find ~/.tmp/default -name "requirements.txt" | xargs grep "\.whl"

|

find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*[^#].*\.whl"

|

||||||

|

|

||||||

echo

|

echo

|

||||||
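The `check.sh` hunk above tightens the two `grep` patterns so that commented-out lines in `requirements.txt` are not reported, while URLs that follow a package name are. The effect can be explored with Python's `re` module. This is only a sketch: it assumes GNU `grep` basic-regex semantics, where `\?` means "optional", and translates the patterns to Python syntax by hand.

```python
import re

# Hand-translated from the grep patterns in the hunk (assumption: GNU BRE).
OLD_URL = re.compile(r"^\s*https?:")
NEW_URL = re.compile(r"^\s*[^#]*https?:")
OLD_WHL = re.compile(r"\.whl")
NEW_WHL = re.compile(r"^\s*[^#].*\.whl")

SAMPLES = [
    "https://example.com/pkg.whl",        # bare URL
    "# https://example.com/pkg.whl",      # commented out
    "pkg @ https://example.com/pkg.whl",  # URL after a package name
    "numpy  # see https://numpy.org",     # URL only inside a trailing comment
]

if __name__ == "__main__":
    for line in SAMPLES:
        flags = [bool(p.search(line)) for p in (OLD_URL, NEW_URL, OLD_WHL, NEW_WHL)]
        print(f"{line!r:40} old_url={flags[0]} new_url={flags[1]} "
              f"old_whl={flags[2]} new_whl={flags[3]}")
```

One caveat that follows from the pattern itself: in `^\s*[^#].*\.whl`, the `[^#]` can match a leading space, so an indented comment such as `  # foo.whl` would still match.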

|

|||||||

@@ -15,41 +15,38 @@ import git

|

|||||||

import importlib

|

import importlib

|

||||||

|

|

||||||

|

|

||||||

from ..common import manager_util

|

sys.path.append(os.path.dirname(__file__))

|

||||||

|

sys.path.append(os.path.join(os.path.dirname(__file__), "glob"))

|

||||||

|

|

||||||

|

import manager_util

|

||||||

|

|

||||||

# read env vars

|

# read env vars

|

||||||

# COMFYUI_FOLDERS_BASE_PATH is not required in cm-cli.py

|

# COMFYUI_FOLDERS_BASE_PATH is not required in cm-cli.py

|

||||||

# `comfy_path` should be resolved before importing manager_core

|

# `comfy_path` should be resolved before importing manager_core

|

||||||

|

|

||||||

comfy_path = os.environ.get('COMFYUI_PATH')

|

comfy_path = os.environ.get('COMFYUI_PATH')

|

||||||

|

|

||||||

if comfy_path is None:

|

if comfy_path is None:

|

||||||

print("[bold red]cm-cli: environment variable 'COMFYUI_PATH' is not specified.[/bold red]")

|

try:

|

||||||

exit(-1)

|

import folder_paths

|

||||||

|

comfy_path = os.path.join(os.path.dirname(folder_paths.__file__))

|

||||||

|

except:

|

||||||

|

print("\n[bold yellow]WARN: The `COMFYUI_PATH` environment variable is not set. Assuming `custom_nodes/ComfyUI-Manager/../../` as the ComfyUI path.[/bold yellow]", file=sys.stderr)

|

||||||

|

comfy_path = os.path.abspath(os.path.join(manager_util.comfyui_manager_path, '..', '..'))

|

||||||

|

|

||||||

|

# This should be placed here

|

||||||

sys.path.append(comfy_path)

|

sys.path.append(comfy_path)

|

||||||

|

|

||||||

if not os.path.exists(os.path.join(comfy_path, 'folder_paths.py')):

|

|

||||||

print("[bold red]cm-cli: '{comfy_path}' is not a valid 'COMFYUI_PATH' location.[/bold red]")

|

|

||||||

exit(-1)

|

|

||||||

|

|

||||||

|

|

||||||

import utils.extra_config

|

import utils.extra_config

|

||||||

from ..common import cm_global

|

import cm_global

|

||||||

from ..legacy import manager_core as core

|

import manager_core as core

|

||||||

from ..common import context

|

from manager_core import unified_manager

|

||||||

from ..legacy.manager_core import unified_manager

|

import cnr_utils

|

||||||

from ..common import cnr_utils

|

|

||||||

|

|

||||||

comfyui_manager_path = os.path.abspath(os.path.dirname(__file__))

|

comfyui_manager_path = os.path.abspath(os.path.dirname(__file__))

|

||||||

|

|

||||||

cm_global.pip_blacklist = {'torch', 'torchaudio', 'torchsde', 'torchvision'}

|

cm_global.pip_blacklist = {'torch', 'torchaudio', 'torchsde', 'torchvision'}

|

||||||

cm_global.pip_downgrade_blacklist = ['torch', 'torchaudio', 'torchsde', 'torchvision', 'transformers', 'safetensors', 'kornia']

|

cm_global.pip_downgrade_blacklist = ['torch', 'torchaudio', 'torchsde', 'torchvision', 'transformers', 'safetensors', 'kornia']

|

||||||

|

|

||||||

if sys.version_info < (3, 13):

|

cm_global.pip_overrides = {}

|

||||||

cm_global.pip_overrides = {'numpy': 'numpy<2'}

|

|

||||||

else:

|

|

||||||

cm_global.pip_overrides = {}

|

|

||||||

|

|

||||||

if os.path.exists(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json")):

|

if os.path.exists(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json")):

|

||||||

with open(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json"), 'r', encoding="UTF-8", errors="ignore") as json_file:

|

with open(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json"), 'r', encoding="UTF-8", errors="ignore") as json_file:

|

||||||
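The right-hand side of the hunk above resolves `comfy_path` in three steps: the `COMFYUI_PATH` environment variable, then the directory of an importable `folder_paths` module, then two levels above the Manager directory. The order can be sketched as a pure function. `resolve_comfy_path` and its parameters are hypothetical; the real script performs the import inline instead of receiving a file path.

```python
import os
import sys


def resolve_comfy_path(environ, manager_path, folder_paths_file=None):
    """Pick the ComfyUI root directory.

    environ           -- mapping such as os.environ
    manager_path      -- directory of ComfyUI-Manager
    folder_paths_file -- stand-in for folder_paths.__file__ when the
                         module is importable, else None (assumption)
    """
    path = environ.get("COMFYUI_PATH")
    if path is not None:
        return path
    if folder_paths_file is not None:
        return os.path.dirname(folder_paths_file)
    print("WARN: COMFYUI_PATH is not set; assuming <manager>/../../",
          file=sys.stderr)
    return os.path.abspath(os.path.join(manager_path, "..", ".."))


if __name__ == "__main__":
    manager = os.path.join(os.sep, "opt", "comfy", "custom_nodes", "ComfyUI-Manager")
    print(resolve_comfy_path({}, manager))
```

Passing the environment and paths as arguments keeps the fallback order testable without touching the real process environment.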

@@ -69,7 +66,7 @@ def check_comfyui_hash():

|

|||||||

repo = git.Repo(comfy_path)

|

repo = git.Repo(comfy_path)

|

||||||

core.comfy_ui_revision = len(list(repo.iter_commits('HEAD')))

|

core.comfy_ui_revision = len(list(repo.iter_commits('HEAD')))

|

||||||

core.comfy_ui_commit_datetime = repo.head.commit.committed_datetime

|

core.comfy_ui_commit_datetime = repo.head.commit.committed_datetime

|

||||||

except Exception:

|

except:

|

||||||

print('[bold yellow]INFO: Frozen ComfyUI mode.[/bold yellow]')

|

print('[bold yellow]INFO: Frozen ComfyUI mode.[/bold yellow]')

|

||||||

core.comfy_ui_revision = 0

|

core.comfy_ui_revision = 0

|

||||||

core.comfy_ui_commit_datetime = 0

|

core.comfy_ui_commit_datetime = 0

|

||||||

@@ -85,7 +82,7 @@ def read_downgrade_blacklist():

|

|||||||

try:

|

try:

|

||||||

import configparser

|

import configparser

|

||||||

config = configparser.ConfigParser(strict=False)

|

config = configparser.ConfigParser(strict=False)

|

||||||

config.read(context.manager_config_path)

|

config.read(core.manager_config.path)

|

||||||

default_conf = config['default']

|

default_conf = config['default']

|

||||||

|

|

||||||

if 'downgrade_blacklist' in default_conf:

|

if 'downgrade_blacklist' in default_conf:

|

||||||

@@ -93,7 +90,7 @@ def read_downgrade_blacklist():

|

|||||||

items = [x.strip() for x in items if x != '']

|

items = [x.strip() for x in items if x != '']

|

||||||

cm_global.pip_downgrade_blacklist += items

|

cm_global.pip_downgrade_blacklist += items

|

||||||

cm_global.pip_downgrade_blacklist = list(set(cm_global.pip_downgrade_blacklist))

|

cm_global.pip_downgrade_blacklist = list(set(cm_global.pip_downgrade_blacklist))

|

||||||

except Exception:

|

except:

|

||||||

pass

|

pass

|

||||||

|

|

||||||

|

|

||||||
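The two `read_downgrade_blacklist()` hunks above read a `downgrade_blacklist` key from the `default` section of the config file and merge it into the built-in list. A self-contained sketch of that merge follows. The hunks do not show how the value is split, so the comma separator here is an assumption, and `merge_downgrade_blacklist` is a hypothetical name.

```python
import configparser


def merge_downgrade_blacklist(base, ini_text):
    """Return base plus any names listed under [default] downgrade_blacklist."""
    config = configparser.ConfigParser(strict=False)
    config.read_string(ini_text)
    if "default" not in config or "downgrade_blacklist" not in config["default"]:
        return list(base)
    # Assumption: the value is a comma-separated list of package names.
    items = config["default"]["downgrade_blacklist"].split(",")
    items = [x.strip() for x in items if x.strip() != ""]
    # sorted() gives a stable order; the hunk uses list(set(...)),
    # whose order is arbitrary.
    return sorted(set(base) | set(items))


if __name__ == "__main__":
    ini = "[default]\ndowngrade_blacklist = diffusers, torch,\n"
    print(merge_downgrade_blacklist(["torch", "kornia"], ini))
```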

@@ -108,7 +105,7 @@ class Ctx:

|

|||||||

self.no_deps = False

|

self.no_deps = False

|

||||||

self.mode = 'cache'

|

self.mode = 'cache'

|

||||||

self.user_directory = None

|

self.user_directory = None

|

||||||

self.custom_nodes_paths = [os.path.join(context.comfy_base_path, 'custom_nodes')]

|

self.custom_nodes_paths = [os.path.join(core.comfy_base_path, 'custom_nodes')]

|

||||||

self.manager_files_directory = os.path.dirname(__file__)

|

self.manager_files_directory = os.path.dirname(__file__)

|

||||||

|

|

||||||

if Ctx.folder_paths is None:

|

if Ctx.folder_paths is None:

|

||||||

@@ -146,17 +143,14 @@ class Ctx:

|

|||||||

if os.path.exists(extra_model_paths_yaml):

|

if os.path.exists(extra_model_paths_yaml):

|

||||||

utils.extra_config.load_extra_path_config(extra_model_paths_yaml)

|

utils.extra_config.load_extra_path_config(extra_model_paths_yaml)

|

||||||

|

|

||||||

context.update_user_directory(user_directory)

|

core.update_user_directory(user_directory)

|

||||||

|

|

||||||

if os.path.exists(context.manager_pip_overrides_path):

|

if os.path.exists(core.manager_pip_overrides_path):

|

||||||

with open(context.manager_pip_overrides_path, 'r', encoding="UTF-8", errors="ignore") as json_file:

|

with open(core.manager_pip_overrides_path, 'r', encoding="UTF-8", errors="ignore") as json_file:

|

||||||

cm_global.pip_overrides = json.load(json_file)

|

cm_global.pip_overrides = json.load(json_file)

|

||||||

|

|

||||||

if sys.version_info < (3, 13):

|

if os.path.exists(core.manager_pip_blacklist_path):

|

||||||

cm_global.pip_overrides = {'numpy': 'numpy<2'}

|

with open(core.manager_pip_blacklist_path, 'r', encoding="UTF-8", errors="ignore") as f:

|

||||||

|

|

||||||

if os.path.exists(context.manager_pip_blacklist_path):

|

|

||||||

with open(context.manager_pip_blacklist_path, 'r', encoding="UTF-8", errors="ignore") as f:

|

|

||||||

for x in f.readlines():

|

for x in f.readlines():

|

||||||

y = x.strip()

|

y = x.strip()

|

||||||

if y != '':

|

if y != '':

|

||||||

@@ -169,15 +163,15 @@ class Ctx:

|

|||||||

|

|

||||||

@staticmethod

|

@staticmethod

|

||||||

def get_startup_scripts_path():

|

def get_startup_scripts_path():

|

||||||

return os.path.join(context.manager_startup_script_path, "install-scripts.txt")

|

return os.path.join(core.manager_startup_script_path, "install-scripts.txt")

|

||||||

|

|

||||||

@staticmethod

|

@staticmethod

|

||||||

def get_restore_snapshot_path():

|

def get_restore_snapshot_path():

|

||||||

return os.path.join(context.manager_startup_script_path, "restore-snapshot.json")

|

return os.path.join(core.manager_startup_script_path, "restore-snapshot.json")

|

||||||

|

|

||||||

@staticmethod

|

@staticmethod

|

||||||

def get_snapshot_path():

|

def get_snapshot_path():

|

||||||

return context.manager_snapshot_path

|

return core.manager_snapshot_path

|

||||||

|

|

||||||

@staticmethod

|

@staticmethod

|

||||||

def get_custom_nodes_paths():

|

def get_custom_nodes_paths():

|

||||||

@@ -444,11 +438,8 @@ def show_list(kind, simple=False):

|

|||||||

flag = kind in ['all', 'cnr', 'installed', 'enabled']

|

flag = kind in ['all', 'cnr', 'installed', 'enabled']

|

||||||

for k, v in unified_manager.active_nodes.items():

|

for k, v in unified_manager.active_nodes.items():

|

||||||

if flag:

|

if flag:

|

||||||

cnr = unified_manager.cnr_map.get(k)

|

cnr = unified_manager.cnr_map[k]

|

||||||

if cnr:

|

processed[k] = "[ ENABLED ] ", cnr['name'], k, cnr['publisher']['name'], v[0]

|

||||||

processed[k] = "[ ENABLED ] ", cnr['name'], k, cnr['publisher']['name'], v[0]

|

|

||||||

else:

|

|

||||||

processed[k] = None

|

|

||||||

else:

|

else:

|

||||||

processed[k] = None

|

processed[k] = None

|

||||||

|

|

||||||

@@ -468,11 +459,8 @@ def show_list(kind, simple=False):

|

|||||||

continue

|

continue

|

||||||

|

|

||||||

if flag:

|

if flag:

|

||||||

cnr = unified_manager.cnr_map.get(k) # NOTE: can this be None if removed from CNR after installed

|

cnr = unified_manager.cnr_map[k]

|

||||||

if cnr:

|

processed[k] = "[ DISABLED ] ", cnr['name'], k, cnr['publisher']['name'], ", ".join(list(v.keys()))

|

||||||

processed[k] = "[ DISABLED ] ", cnr['name'], k, cnr['publisher']['name'], ", ".join(list(v.keys()))

|

|

||||||

else:

|

|

||||||

processed[k] = None

|

|

||||||

else:

|

else:

|

||||||

processed[k] = None

|

processed[k] = None

|

||||||

|

|

||||||

@@ -481,11 +469,8 @@ def show_list(kind, simple=False):

|

|||||||

continue

|

continue

|

||||||

|

|

||||||

if flag:

|

if flag:

|

||||||

cnr = unified_manager.cnr_map.get(k)

|

cnr = unified_manager.cnr_map[k]

|

||||||

if cnr:

|

processed[k] = "[ DISABLED ] ", cnr['name'], k, cnr['publisher']['name'], 'nightly'

|

||||||

processed[k] = "[ DISABLED ] ", cnr['name'], k, cnr['publisher']['name'], 'nightly'

|

|

||||||

else:

|

|

||||||

processed[k] = None

|

|

||||||

else:

|

else:

|

||||||

processed[k] = None

|

processed[k] = None

|

||||||

|

|

||||||

@@ -505,12 +490,9 @@ def show_list(kind, simple=False):

|

|||||||

continue

|

continue

|

||||||

|

|

||||||

if flag:

|

if flag:

|

||||||

cnr = unified_manager.cnr_map.get(k)

|

cnr = unified_manager.cnr_map[k]

|

||||||

if cnr:

|

ver_spec = v['latest_version']['version'] if 'latest_version' in v else '0.0.0'

|

||||||

ver_spec = v['latest_version']['version'] if 'latest_version' in v else '0.0.0'

|

processed[k] = "[ NOT INSTALLED ] ", cnr['name'], k, cnr['publisher']['name'], ver_spec

|

||||||

processed[k] = "[ NOT INSTALLED ] ", cnr['name'], k, cnr['publisher']['name'], ver_spec

|

|

||||||

else:

|

|

||||||

processed[k] = None

|

|

||||||

else:

|

else:

|

||||||

processed[k] = None

|

processed[k] = None

|

||||||

|

|

||||||
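The `show_list` hunks above differ in how they look up a node in `unified_manager.cnr_map`: one side uses `cnr_map.get(k)` guarded by `if cnr:`, the other indexes `cnr_map[k]` directly. The practical difference is what happens when an installed node is no longer present in the map, as the inline `NOTE` comment suggests can occur. A minimal sketch with plain dictionaries (the `enabled_row` helper and the sample data are hypothetical):

```python
def enabled_row(cnr_map, key, version):
    """Build a listing row, or None when key is missing from cnr_map."""
    cnr = cnr_map.get(key)
    if cnr is None:
        return None
    return "[ ENABLED ] ", cnr["name"], key, cnr["publisher"]["name"], version


if __name__ == "__main__":
    cnr_map = {"demo-pack": {"name": "Demo Pack", "publisher": {"name": "someone"}}}

    print(enabled_row(cnr_map, "demo-pack", "1.0.0"))
    print(enabled_row(cnr_map, "removed-pack", "1.0.0"))

    # Direct indexing raises instead of returning None.
    try:
        cnr_map["removed-pack"]
    except KeyError as exc:
        print("KeyError:", exc)
```

With the guarded form the entry is skipped; with direct indexing the whole listing would stop at the first missing key unless the caller catches `KeyError`.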

@@ -676,7 +658,7 @@ def install(

|

|||||||

cmd_ctx.set_channel_mode(channel, mode)

|

cmd_ctx.set_channel_mode(channel, mode)

|

||||||

cmd_ctx.set_no_deps(no_deps)

|

cmd_ctx.set_no_deps(no_deps)

|

||||||

|

|

||||||

pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, context.manager_files_path)

|

pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, core.manager_files_path)

|

||||||

for_each_nodes(nodes, act=install_node, exit_on_fail=exit_on_fail)

|

for_each_nodes(nodes, act=install_node, exit_on_fail=exit_on_fail)

|

||||||

pip_fixer.fix_broken()

|

pip_fixer.fix_broken()

|

||||||

|

|

||||||

@@ -714,7 +696,7 @@ def reinstall(

|

|||||||

cmd_ctx.set_channel_mode(channel, mode)

|

cmd_ctx.set_channel_mode(channel, mode)

|

||||||

cmd_ctx.set_no_deps(no_deps)

|

cmd_ctx.set_no_deps(no_deps)

|

||||||

|

|

||||||

pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, context.manager_files_path)

|

pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, core.manager_files_path)

|

||||||

for_each_nodes(nodes, act=reinstall_node)

|

for_each_nodes(nodes, act=reinstall_node)

|

||||||

pip_fixer.fix_broken()

|

pip_fixer.fix_broken()

|

||||||

|

|

||||||

@@ -768,7 +750,7 @@ def update(

|

|||||||

if 'all' in nodes:

|

if 'all' in nodes:

|

||||||

asyncio.run(auto_save_snapshot())

|

asyncio.run(auto_save_snapshot())

|

||||||

|

|

||||||

pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, context.manager_files_path)

|

pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, core.manager_files_path)

|

||||||

|

|

||||||

for x in nodes:

|