Compare commits

28 Commits

manager-ca

...

readme-for

| SHA1 |
|---|
| e089896df9 |
| 0014eec124 |
| e0b3f3eb45 |
| 4bbc8594a7 |
| 3a377300e1 |
| 33a07e3a86 |
| 212cafc1d7 |
| 2643b3cbcc |
| d445229b6d |
| dab5c451b0 |
| 7bdf06131a |
| 854648d5af |
| c5f7b97359 |
| dd8a727ad6 |
| 6c627fe422 |
| ee980e1caf |
| 22bfaf6527 |
| 48ab48cc30 |
| a0b14d4127 |
| 03f9fe1a70 |
| 8915b8d796 |
| c77ffeeec0 |
| 4acf5660b2 |
| 2d9f0a668c |
| 9e6cb246cc |
| 14544ca63d |
| 26b347c04c |
| 36f75d1811 |

@@ -1 +0,0 @@
-PYPI_TOKEN=your-pypi-token

70 .github/workflows/ci.yml (vendored)

@@ -1,70 +0,0 @@
-name: CI
-
-on:
-  push:
-    branches: [ main, feat/*, fix/* ]
-  pull_request:
-    branches: [ main ]
-
-jobs:
-  validate-openapi:
-    name: Validate OpenAPI Specification
-    runs-on: ubuntu-latest
-    steps:
-      - uses: actions/checkout@v4
-
-      - name: Check if OpenAPI changed
-        id: openapi-changed
-        uses: tj-actions/changed-files@v44
-        with:
-          files: openapi.yaml
-
-      - name: Setup Node.js
-        if: steps.openapi-changed.outputs.any_changed == 'true'
-        uses: actions/setup-node@v4
-        with:
-          node-version: '18'
-
-      - name: Install Redoc CLI
-        if: steps.openapi-changed.outputs.any_changed == 'true'
-        run: |
-          npm install -g @redocly/cli
-
-      - name: Validate OpenAPI specification
-        if: steps.openapi-changed.outputs.any_changed == 'true'
-        run: |
-          redocly lint openapi.yaml
-
-  code-quality:
-    name: Code Quality Checks
-    runs-on: ubuntu-latest
-    steps:
-      - uses: actions/checkout@v4
-        with:
-          fetch-depth: 0 # Fetch all history for proper diff
-
-      - name: Get changed Python files
-        id: changed-py-files
-        uses: tj-actions/changed-files@v44
-        with:
-          files: |
-            **/*.py
-          files_ignore: |
-            comfyui_manager/legacy/**
-
-      - name: Setup Python
-        if: steps.changed-py-files.outputs.any_changed == 'true'
-        uses: actions/setup-python@v5
-        with:
-          python-version: '3.9'
-
-      - name: Install dependencies
-        if: steps.changed-py-files.outputs.any_changed == 'true'
-        run: |
-          pip install ruff
-
-      - name: Run ruff linting on changed files
-        if: steps.changed-py-files.outputs.any_changed == 'true'
-        run: |
-          echo "Changed files: ${{ steps.changed-py-files.outputs.all_changed_files }}"
-          echo "${{ steps.changed-py-files.outputs.all_changed_files }}" | xargs -r ruff check
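The deleted `code-quality` job ran ruff only on changed Python files outside `comfyui_manager/legacy/`. The Python sketch below approximates that selection rule. It replaces the `files: **/*.py` and `files_ignore: comfyui_manager/legacy/**` patterns with simple suffix and prefix checks and does not attempt to reproduce the glob matching in `tj-actions/changed-files`. The function name `select_lint_targets` is hypothetical.

```python
from typing import Iterable, List

# Hypothetical approximation of the removed CI filter:
#   files:        **/*.py
#   files_ignore: comfyui_manager/legacy/**
LEGACY_PREFIX = "comfyui_manager/legacy/"


def select_lint_targets(changed_files: Iterable[str]) -> List[str]:
    """Return changed .py files outside the legacy package, in input order."""
    targets: List[str] = []
    for path in changed_files:
        if not path.endswith(".py"):
            continue  # would not match **/*.py
        if path.startswith(LEGACY_PREFIX):
            continue  # excluded by files_ignore
        targets.append(path)
    return targets
```

In the workflow, the resulting list was passed to `xargs -r ruff check`. The `-r` flag stops `xargs` from running ruff at all when the list is empty, which corresponds to an empty return value here.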

32 .github/workflows/publish-to-pypi.yml (vendored)

@@ -4,7 +4,7 @@ on:
   workflow_dispatch:
   push:
     branches:
-      - manager-v4
+      - draft-v4
     paths:
       - "pyproject.toml"
 
@@ -21,7 +21,7 @@ jobs:
       - name: Set up Python
         uses: actions/setup-python@v4
         with:
-          python-version: '3.x'
+          python-version: '3.9'
 
       - name: Install build dependencies
         run: |
@@ -31,28 +31,28 @@ jobs:
       - name: Get current version
         id: current_version
         run: |
-          CURRENT_VERSION=$(grep -oP '^version = "\K[^"]+' pyproject.toml)
+          CURRENT_VERSION=$(grep -oP 'version = "\K[^"]+' pyproject.toml)
           echo "version=$CURRENT_VERSION" >> $GITHUB_OUTPUT
           echo "Current version: $CURRENT_VERSION"
 
       - name: Build package
         run: python -m build
 
-      # - name: Create GitHub Release
-      # id: create_release
-      # uses: softprops/action-gh-release@v2
-      # env:
-      # GITHUB_TOKEN: ${{ github.token }}
-      # with:
-      # files: dist/*
-      # tag_name: v${{ steps.current_version.outputs.version }}
-      # draft: false
-      # prerelease: false
-      # generate_release_notes: true
+      - name: Create GitHub Release
+        id: create_release
+        uses: softprops/action-gh-release@v2
+        env:
+          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
+        with:
+          files: dist/*
+          tag_name: v${{ steps.current_version.outputs.version }}
+          draft: false
+          prerelease: false
+          generate_release_notes: true
 
       - name: Publish to PyPI
-        uses: pypa/gh-action-pypi-publish@76f52bc884231f62b9a034ebfe128415bbaabdfc
+        uses: pypa/gh-action-pypi-publish@release/v1
         with:
           password: ${{ secrets.PYPI_TOKEN }}
           skip-existing: true
           verbose: true
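One functional change in `publish-to-pypi.yml` is that the version lookup no longer has the `^` anchor. The sketch below reproduces both grep patterns with Python's `re` module and runs them against a hypothetical `pyproject.toml` fragment. Python's `re` has no `\K`, so the sketch uses a capture group instead. The fragment's version number and its ruff `target-version` key are illustrative assumptions. If the real file contains a key like that, the unanchored pattern also matches it, `grep -o` prints both values on separate lines, and `CURRENT_VERSION` ends up holding two lines.

```python
import re

# Hypothetical pyproject.toml fragment; the version and the [tool.ruff]
# section are illustrative, not taken from the repository.
SAMPLE_PYPROJECT = """\
[project]
name = "comfyui-manager"
version = "4.0.0"

[tool.ruff]
target-version = "py39"
"""

# Mirrors: grep -oP '^version = "\K[^"]+'   (anchored to the start of a line)
ANCHORED = re.compile(r'^version = "([^"]+)', re.MULTILINE)
# Mirrors: grep -oP 'version = "\K[^"]+'    (matches anywhere in a line)
UNANCHORED = re.compile(r'version = "([^"]+)')


def find_versions(pattern: re.Pattern, text: str) -> list:
    """Return every captured value, one per match, like grep -o."""
    return pattern.findall(text)
```

With this sample, `find_versions(ANCHORED, SAMPLE_PYPROJECT)` yields only the project version. The unanchored pattern also picks up `py39` from the `target-version` line.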

25 .github/workflows/publish.yml (vendored, Normal file)

@@ -0,0 +1,25 @@
+name: Publish to Comfy registry
+on:
+  workflow_dispatch:
+  push:
+    branches:
+      - main-blocked
+    paths:
+      - "pyproject.toml"
+
+permissions:
+  issues: write
+
+jobs:
+  publish-node:
+    name: Publish Custom Node to registry
+    runs-on: ubuntu-latest
+    if: ${{ github.repository_owner == 'ltdrdata' }}
+    steps:
+      - name: Check out code
+        uses: actions/checkout@v4
+      - name: Publish Custom Node
+        uses: Comfy-Org/publish-node-action@v1
+        with:
+          ## Add your own personal access token to your Github Repository secrets and reference it here.
+          personal_access_token: ${{ secrets.REGISTRY_ACCESS_TOKEN }}

@@ -1,56 +0,0 @@
-# Example: GitHub Actions workflow to auto-update test durations
-# Rename to .github/workflows/update-test-durations.yml to enable
-
-name: Update Test Durations
-
-on:
-  schedule:
-    # Run weekly on Sundays at 2 AM UTC
-    - cron: '0 2 * * 0'
-  workflow_dispatch: # Allow manual trigger
-
-jobs:
-  update-durations:
-    runs-on: self-hosted
-
-    steps:
-      - uses: actions/checkout@v4
-
-      - name: Set up Python
-        uses: actions/setup-python@v4
-        with:
-          python-version: '3.9'
-
-      - name: Install dependencies
-        run: |
-          python -m pip install --upgrade pip
-          pip install -e .
-          pip install pytest pytest-split
-
-      - name: Update test durations
-        run: |
-          chmod +x tests/update_test_durations.sh
-          ./tests/update_test_durations.sh
-
-      - name: Check for changes
-        id: check_changes
-        run: |
-          if git diff --quiet .test_durations; then
-            echo "changed=false" >> $GITHUB_OUTPUT
-          else
-            echo "changed=true" >> $GITHUB_OUTPUT
-          fi
-
-      - name: Create Pull Request
-        if: steps.check_changes.outputs.changed == 'true'
-        uses: peter-evans/create-pull-request@v5
-        with:
-          token: ${{ secrets.GITHUB_TOKEN }}
-          commit-message: 'chore: update test duration data'
-          title: 'Update test duration data'
-          body: |
-            Automated update of `.test_durations` file for optimal parallel test distribution.
-
-            This ensures pytest-split can effectively balance test load across parallel environments.
-          branch: auto/update-test-durations
-          delete-branch: true
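The deleted example workflow refreshes `.test_durations` so that pytest-split can balance tests across parallel runs. To show why recorded durations help, the sketch below assigns tests to groups with a generic greedy longest-first heuristic. This is not pytest-split's own algorithm. The sketch also assumes the durations are available as a mapping from test id to seconds, and `split_by_duration` is a hypothetical name.

```python
import heapq
from typing import Dict, List


def split_by_duration(durations: Dict[str, float], groups: int) -> List[List[str]]:
    """Greedy longest-first split: each test goes to the currently lightest group."""
    if groups < 1:
        raise ValueError("groups must be >= 1")
    # Min-heap of (total_seconds, group_index); the lightest group pops first.
    heap = [(0.0, index) for index in range(groups)]
    heapq.heapify(heap)
    assignment: List[List[str]] = [[] for _ in range(groups)]
    # Place long tests first so the short ones can even out the totals at the end.
    for test_id, seconds in sorted(durations.items(), key=lambda item: (-item[1], item[0])):
        total, index = heapq.heappop(heap)
        assignment[index].append(test_id)
        heapq.heappush(heap, (total + seconds, index))
    return assignment
```

For example, five tests taking 5, 4, 3, 3 and 1 seconds split into two groups totalling 8 seconds each. Outdated durations would skew those totals, which is the motivation the workflow's PR body gives for refreshing the file.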

13 .gitignore (vendored)

@@ -17,15 +17,4 @@ github-stats-cache.json
 pip_overrides.json
 *.json
 check2.sh
 /venv/
-build
-dist
-*.egg-info
-.env
-.git
-.claude
-.hypothesis
-
-# Test artifacts
-tests/tmp/
-tests/env/

170 CLAUDE.md

@@ -1,170 +0,0 @@
-# CLAUDE.md - Development Guidelines
-
-## Project Context
-This is ComfyUI Manager, a Python package that provides management functions for ComfyUI custom nodes, models, and extensions. The project follows modern Python packaging standards and maintains both current (`glob`) and legacy implementations.
-
-## Code Architecture
-- **Current Development**: Work in `comfyui_manager/glob/` package
-- **Legacy Code**: `comfyui_manager/legacy/` (reference only, do not modify unless explicitly asked)
-- **Common Utilities**: `comfyui_manager/common/` for shared functionality
-- **Data Models**: `comfyui_manager/data_models/` for API schemas and types
-
-## Development Workflow for API Changes
-When modifying data being sent or received:
-1. Update `openapi.yaml` file first
-2. Verify YAML syntax using `yaml.safe_load`
-3. Regenerate types following `data_models/README.md` instructions
-4. Verify new data model generation
-5. Verify syntax of generated type files
-6. Run formatting and linting on generated files
-7. Update `__init__.py` files in `data_models` to export new models
-8. Make changes to rest of codebase
-9. Run CI tests to verify changes
-
-## Coding Standards
-### Python Style
-- Follow PEP 8 coding standards
-- Use type hints for all function parameters and return values
-- Target Python 3.9+ compatibility
-- Line length: 120 characters (as configured in ruff)
-
-### Security Guidelines
-- Never hardcode API keys, tokens, or sensitive credentials
-- Use environment variables for configuration
-- Validate all user input and file paths
-- Use prepared statements for database operations
-- Implement proper error handling without exposing internal details
-- Follow principle of least privilege for file/network access
-
-### Code Quality
-- Write descriptive variable and function names
-- Include docstrings for public functions and classes
-- Handle exceptions gracefully with specific error messages
-- Use logging instead of print statements for debugging
-- Maintain test coverage for new functionality
-
-## Dependencies & Tools
-### Core Dependencies
-- GitPython, PyGithub for Git operations
-- typer, rich for CLI interface
-- transformers, huggingface-hub for AI model handling
-- uv for fast package management
-
-### Development Tools
-- **Linting**: ruff (configured in pyproject.toml)
-- **Testing**: pytest with coverage
-- **Pre-commit**: pre-commit hooks for code quality
-- **Type Checking**: Use type hints, consider mypy for strict checking
-
-## File Organization
-- Keep business logic in appropriate modules under `glob/`
-- Place utility functions in `common/` for reusability
-- Store UI/frontend code in `js/` directory
-- Maintain documentation in `docs/` with multilingual support
-
-### Large Data Files Policy
-- **NEVER read .json files directly** - These contain large datasets that cause unnecessary token consumption
-- Use `JSON_REFERENCE.md` for understanding JSON file structures and schemas
-- Work with processed/filtered data through APIs when possible
-- For structure analysis, refer to data models in `comfyui_manager/data_models/` instead
-
-## Git Workflow
-- Work on feature branches, not main directly
-- Write clear, descriptive commit messages
-- Run tests and linting before committing
-- Keep commits atomic and focused
-
-## Testing Requirements
-
-### ⚠️ Critical: Always Reinstall Before Testing
-**ALWAYS run `uv pip install .` before executing tests** to ensure latest code changes are installed.
-
-### Test Execution Workflow
-```bash
-# 1. Reinstall package (REQUIRED)
-uv pip install .
-
-# 2. Clean Python cache
-find comfyui_manager -type d -name "__pycache__" -exec rm -rf {} + 2>/dev/null
-find tests/env -type d -name "__pycache__" -exec rm -rf {} + 2>/dev/null
-
-# 3. Stop any running servers
-pkill -f "ComfyUI/main.py"
-sleep 2
-
-# 4. Start ComfyUI test server
-cd tests/env
-python ComfyUI/main.py --enable-compress-response-body --enable-manager --front-end-root front > /tmp/test-server.log 2>&1 &
-sleep 20
-
-# 5. Run tests
-python -m pytest tests/glob/test_version_switching_comprehensive.py -v
-
-# 6. Stop server
-pkill -f "ComfyUI/main.py"
-```
-
-### Test Development Guidelines
-- Write unit tests for new functionality
-- Test error handling and edge cases
-- Ensure tests pass before submitting changes
-- Use pytest fixtures for common test setup
-- Document test scenarios and expected behaviors
-
-### Why Reinstall is Required
-- Even with editable install, some changes require reinstallation
-- Python bytecode cache may contain outdated code
-- ComfyUI server loads manager package at startup
-- Package metadata and entry points need to be refreshed
-
-### Automated Test Execution Policy
-**IMPORTANT**: When tests need to be run (e.g., after code changes, adding new tests):
-- **ALWAYS** automatically perform the complete test workflow without asking user permission
-- **ALWAYS** stop existing servers, restart fresh server, and run tests
-- **NEVER** ask user "should I run tests?" or "should I restart server?"
-- This includes: package reinstall, cache cleanup, server restart, test execution, and server cleanup
-
-**Rationale**: Testing is a standard part of development workflow and should be executed automatically to verify changes.
-
-See `.claude/livecontext/test_execution_best_practices.md` for detailed testing procedures.
-
-## Command Line Interface
-- Use typer for CLI commands
-- Provide helpful error messages and usage examples
-- Support both interactive and scripted usage
-- Follow Unix conventions for command-line tools
-
-## Performance Considerations
-- Use async/await for I/O operations where appropriate
-- Cache expensive operations (GitHub API calls, file operations)
-- Implement proper pagination for large datasets
-- Consider memory usage when processing large files
-
-## Code Change Proposals
-- **Always show code changes using VSCode diff format**
-- Use Edit tool to demonstrate exact changes with before/after comparison
-- This allows visual review of modifications in the IDE
-- Include context about why changes are needed
-
-## Documentation
-- Update README.md for user-facing changes
-- Document API changes in openapi.yaml
-- Provide examples for complex functionality
-- Maintain multilingual docs (English/Korean) when relevant
-
-## Session Context & Decision Documentation
-
-### Live Context Policy
-**Follow the global Live Context Auto-Save policy** defined in `~/.claude/CLAUDE.md`.
-
-### Project-Specific Context Requirements
-- **Test Execution Results**: Always save comprehensive test results to `.claude/livecontext/`
-  - Test count, pass/fail status, execution time
-  - New tests added and their purpose
-  - Coverage metrics and improvements
-- **CNR Version Switching Context**: Document version switching behavior and edge cases
-  - Update vs Install operation differences
-  - Old version handling (preserved vs deleted)
-  - State management insights
-- **API Changes**: Document OpenAPI schema changes and data model updates
-- **Architecture Decisions**: Document manager_core.py and manager_server.py design choices
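After starting the ComfyUI test server, the removed CLAUDE.md workflow waits a fixed `sleep 20`. The sketch below polls the TCP port instead. It assumes the server listens on ComfyUI's default port 8188, since the launch command does not pass `--port`. `wait_for_port` is a hypothetical helper and is not part of the repository.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or `timeout` seconds pass.

    Returns True as soon as the port accepts a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means some process is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

A possible call is `wait_for_port("127.0.0.1", 8188, timeout=60)` in place of `sleep 20`. An open port only shows that the HTTP server is accepting connections. Checking one of the Manager's HTTP endpoints before running the tests may still be worthwhile.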

@@ -1,47 +0,0 @@
-## Testing Changes
-
-1. Activate the ComfyUI environment.
-
-2. Build package locally after making changes.
-
-```bash
-# from inside the ComfyUI-Manager directory, with the ComfyUI environment activated
-python -m build
-```
-
-3. Install the package locally in the ComfyUI environment.
-
-```bash
-# Uninstall existing package
-pip uninstall comfyui-manager
-
-# Install the locale package
-pip install dist/comfyui-manager-*.whl
-```
-
-4. Start ComfyUI.
-
-```bash
-# after navigating to the ComfyUI directory
-python main.py
-```
-
-## Manually Publish Test Version to PyPi
-
-1. Set the `PYPI_TOKEN` environment variable in env file.
-
-2. If manually publishing, you likely want to use a release candidate version, so set the version in [pyproject.toml](pyproject.toml) to something like `0.0.1rc1`.
-
-3. Build the package.
-
-```bash
-python -m build
-```
-
-4. Upload the package to PyPi.
-
-```bash
-python -m twine upload dist/* --username __token__ --password $PYPI_TOKEN
-```
-
-5. View at https://pypi.org/project/comfyui-manager/
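In the removed manual-publishing steps, `PYPI_TOKEN` is kept in an env file. The first removed hunk holds the `PYPI_TOKEN=your-pypi-token` template, and the old `.gitignore` excluded `.env`. The sketch below parses simple `KEY=VALUE` lines and prepares a `twine upload` call. It passes the token through twine's `TWINE_USERNAME`/`TWINE_PASSWORD` environment variables rather than `--password`, which keeps the token off the command line. The `.env` file name and both helper names are assumptions.

```python
import os
import sys
from pathlib import Path
from typing import Dict, List, Tuple


def parse_env_file(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Tolerate optional surrounding quotes, e.g. PYPI_TOKEN="pypi-..."
        values[key.strip()] = value.strip().strip("\"'")
    return values


def twine_upload_command(
    env_values: Dict[str, str], dist_dir: Path = Path("dist")
) -> Tuple[List[str], Dict[str, str]]:
    """Build argv and environment for uploading built distributions with twine.

    The token travels in TWINE_USERNAME/TWINE_PASSWORD instead of --password,
    so it does not appear in the process list.
    """
    token = env_values.get("PYPI_TOKEN")
    if not token:
        raise ValueError("PYPI_TOKEN is missing from the env file")
    env = dict(os.environ)
    env["TWINE_USERNAME"] = "__token__"
    env["TWINE_PASSWORD"] = token
    # Expand dist/* here because subprocess.run does not go through a shell.
    dist_files = sorted(str(path) for path in dist_dir.glob("*"))
    argv = [sys.executable, "-m", "twine", "upload", *dist_files]
    return argv, env
```

A typical invocation would be `argv, env = twine_upload_command(parse_env_file(Path(".env").read_text()))` followed by `subprocess.run(argv, env=env, check=True)`, run after `python -m build` has filled `dist/`.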

@@ -1,187 +0,0 @@
-# ComfyUI Manager Documentation Index
-
-**Last Updated**: 2025-11-04
-**Purpose**: Navigate all project documentation organized by purpose and audience
-
----
-
-## 📖 Quick Links
-
-- **Getting Started**: [README.md](README.md)
-- **User Documentation**: [docs/](docs/)
-- **Test Documentation**: [tests/glob/](tests/glob/)
-- **Contributing**: [CONTRIBUTING.md](CONTRIBUTING.md)
-- **Development**: [CLAUDE.md](CLAUDE.md)
-
----
-
-## 📚 Documentation Structure
-
-### Root Level
-
-| Document | Purpose | Audience |
-|----------|---------|----------|
-| [README.md](README.md) | Project overview and quick start | Everyone |
-| [CONTRIBUTING.md](CONTRIBUTING.md) | Contribution guidelines | Contributors |
-| [CLAUDE.md](CLAUDE.md) | Development guidelines for AI-assisted development | Developers |
-| [JSON_REFERENCE.md](JSON_REFERENCE.md) | JSON file schema reference | Developers |
-
-### User Documentation (`docs/`)
-
-| Document | Purpose | Language |
-|----------|---------|----------|
-| [docs/README.md](docs/README.md) | Documentation overview | English |
-| [docs/PACKAGE_VERSION_MANAGEMENT.md](docs/PACKAGE_VERSION_MANAGEMENT.md) | Package version management guide | English |
-| [docs/SECURITY_ENHANCED_INSTALLATION.md](docs/SECURITY_ENHANCED_INSTALLATION.md) | Security features for URL installation | English |
-| [docs/en/cm-cli.md](docs/en/cm-cli.md) | CLI usage guide | English |
-| [docs/en/use_aria2.md](docs/en/use_aria2.md) | Aria2 download configuration | English |
-| [docs/ko/cm-cli.md](docs/ko/cm-cli.md) | CLI usage guide | Korean |
-
-### Package Documentation
-
-| Package | Document | Purpose |
-|---------|----------|---------|
-| comfyui_manager | [comfyui_manager/README.md](comfyui_manager/README.md) | Package overview |
-| common | [comfyui_manager/common/README.md](comfyui_manager/common/README.md) | Common utilities documentation |
-| data_models | [comfyui_manager/data_models/README.md](comfyui_manager/data_models/README.md) | Data model generation guide |
-| glob | [comfyui_manager/glob/CLAUDE.md](comfyui_manager/glob/CLAUDE.md) | Glob module development guide |
-| js | [comfyui_manager/js/README.md](comfyui_manager/js/README.md) | JavaScript components |
-
-### Test Documentation (`tests/`)
-
-| Document | Purpose | Status |
-|----------|---------|--------|
-| [tests/TEST.md](tests/TEST.md) | Testing overview | ✅ |
-| [tests/glob/README.md](tests/glob/README.md) | Glob API endpoint tests | ✅ Translated |
-| [tests/glob/TESTING_GUIDE.md](tests/glob/TESTING_GUIDE.md) | Test execution guide | ✅ |
-| [tests/glob/TEST_INDEX.md](tests/glob/TEST_INDEX.md) | Test documentation unified index | ✅ Translated |
-| [tests/glob/TEST_LOG.md](tests/glob/TEST_LOG.md) | Test execution log | ✅ Translated |
-
-### Node Database
-
-| Document | Purpose |
-|----------|---------|
-| [node_db/README.md](node_db/README.md) | Node database information |
-
----
-
-## 🔒 Internal Documentation (`docs/internal/`)
-
-### CLI Migration (`docs/internal/cli_migration/`)
-
-Historical documentation for CLI migration from legacy to glob module (completed).
-
-| Document | Purpose |
-|----------|---------|
-| [README.md](docs/internal/cli_migration/README.md) | Migration plan overview |
-| [CLI_COMPATIBILITY_ANALYSIS.md](docs/internal/cli_migration/CLI_COMPATIBILITY_ANALYSIS.md) | Legacy vs Glob compatibility analysis |
-| [CLI_IMPLEMENTATION_CONTEXT.md](docs/internal/cli_migration/CLI_IMPLEMENTATION_CONTEXT.md) | Implementation context |
-| [CLI_IMPLEMENTATION_TODO.md](docs/internal/cli_migration/CLI_IMPLEMENTATION_TODO.md) | Implementation checklist |
-| [CLI_PURE_GLOB_MIGRATION_PLAN.md](docs/internal/cli_migration/CLI_PURE_GLOB_MIGRATION_PLAN.md) | Technical migration specification |
-| [CLI_GLOB_API_REFERENCE.md](docs/internal/cli_migration/CLI_GLOB_API_REFERENCE.md) | Glob API reference |
-| [CLI_IMPLEMENTATION_CONSTRAINTS.md](docs/internal/cli_migration/CLI_IMPLEMENTATION_CONSTRAINTS.md) | Migration constraints |
-| [CLI_TESTING_CHECKLIST.md](docs/internal/cli_migration/CLI_TESTING_CHECKLIST.md) | Testing checklist |
-| [CLI_SHOW_LIST_REVISION.md](docs/internal/cli_migration/CLI_SHOW_LIST_REVISION.md) | show_list implementation plan |
-
-### Test Planning (`docs/internal/test_planning/`)
-
-Internal test planning documents (in Korean).
-
-| Document | Purpose | Language |
-|----------|---------|----------|
-| [TEST_PLAN_ADDITIONAL.md](docs/internal/test_planning/TEST_PLAN_ADDITIONAL.md) | Additional test scenarios | Korean |
-| [COMPLEX_SCENARIOS_TEST_PLAN.md](docs/internal/test_planning/COMPLEX_SCENARIOS_TEST_PLAN.md) | Complex multi-version test scenarios | Korean |
-
----
-
-## 📋 Documentation by Audience
-
-### For Users
-1. [README.md](README.md) - Start here
-2. [docs/en/cm-cli.md](docs/en/cm-cli.md) - CLI usage
-3. [docs/PACKAGE_VERSION_MANAGEMENT.md](docs/PACKAGE_VERSION_MANAGEMENT.md) - Version management
-
-### For Contributors
-1. [CONTRIBUTING.md](CONTRIBUTING.md) - Contribution process
-2. [CLAUDE.md](CLAUDE.md) - Development guidelines
-3. [comfyui_manager/data_models/README.md](comfyui_manager/data_models/README.md) - Data model workflow
-
-### For Developers
-1. [CLAUDE.md](CLAUDE.md) - Development workflow
-2. [comfyui_manager/glob/CLAUDE.md](comfyui_manager/glob/CLAUDE.md) - Glob module guide
-3. [JSON_REFERENCE.md](JSON_REFERENCE.md) - Schema reference
-4. [docs/PACKAGE_VERSION_MANAGEMENT.md](docs/PACKAGE_VERSION_MANAGEMENT.md) - Package management internals
-
-### For Testers
-1. [tests/TEST.md](tests/TEST.md) - Testing overview
-2. [tests/glob/TEST_INDEX.md](tests/glob/TEST_INDEX.md) - Test documentation index
-3. [tests/glob/TESTING_GUIDE.md](tests/glob/TESTING_GUIDE.md) - Test execution guide
-
----
-
-## 🔄 Documentation Maintenance
-
-### When to Update
-- **README.md**: Project structure or main features change
-- **CLAUDE.md**: Development workflow changes
-- **Test Documentation**: New tests added or test structure changes
-- **User Documentation**: User-facing features change
-- **This Index**: New documentation added or reorganized
-
-### Documentation Standards
-- Use clear, descriptive titles
-- Include "Last Updated" date
-- Specify target audience
-- Provide examples where applicable
-- Keep language simple and accessible
-- Translate user-facing docs to Korean when possible
-
----
-
-## 🗂️ File Organization
-
-```
-comfyui-manager/
-├── DOCUMENTATION_INDEX.md (this file)
-├── README.md
-├── CONTRIBUTING.md
-├── CLAUDE.md
-├── JSON_REFERENCE.md
-├── docs/
-│   ├── README.md
-│   ├── PACKAGE_VERSION_MANAGEMENT.md
-│   ├── SECURITY_ENHANCED_INSTALLATION.md
-│   ├── en/
-│   │   ├── cm-cli.md
-│   │   └── use_aria2.md
-│   ├── ko/
-│   │   └── cm-cli.md
-│   └── internal/
-│       ├── cli_migration/ (9 files - completed migration docs)
-│       └── test_planning/ (2 files - Korean test plans)
-├── comfyui_manager/
-│   ├── README.md
-│   ├── common/README.md
-│   ├── data_models/README.md
-│   ├── glob/CLAUDE.md
-│   └── js/README.md
-├── tests/
-│   ├── TEST.md
-│   └── glob/
-│       ├── README.md
-│       ├── TESTING_GUIDE.md
-│       ├── TEST_INDEX.md
-│       └── TEST_LOG.md
-└── node_db/
-    └── README.md
-```
-
----
-
-**Total Documentation Files**: 36 files organized across 6 categories
-
-**Translation Status**:
-- ✅ Core user documentation: English
-- ✅ CLI guide: English + Korean
-- ✅ Test documentation: English (translated from Korean)
-- 📝 Internal planning docs: Korean (preserved as-is for historical reference)
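The removed `DOCUMENTATION_INDEX.md` refers to every document through a relative Markdown link, and its maintenance section asks for the index to be updated whenever documents are added or reorganized. The sketch below is a small checker for that rule. It extracts inline `[text](target)` links and reports local targets that do not exist under a given root. It skips absolute URLs and pure `#anchor` links and does not handle reference-style links. `missing_link_targets` is a hypothetical helper, not part of the repository.

```python
import re
from pathlib import Path
from typing import List

# Inline Markdown links of the form [text](target); reference-style links are ignored.
LINK_PATTERN = re.compile(r"\[[^\]]*\]\(([^)\s]+)\)")


def missing_link_targets(markdown: str, root: Path) -> List[str]:
    """Return relative link targets in `markdown` that do not exist under `root`."""
    missing: List[str] = []
    for target in LINK_PATTERN.findall(markdown):
        if "://" in target or target.startswith(("#", "mailto:")):
            continue  # external URL or in-page anchor
        path_part = target.split("#", 1)[0]  # drop a trailing #section
        if path_part and not (root / path_part).exists():
            missing.append(target)
    return missing
```

Running it on the index text with the repository root as `root` should return an empty list while the index and the tree agree.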

14 MANIFEST.in

@@ -1,14 +0,0 @@
-include comfyui_manager/js/*
-include comfyui_manager/*.json
-include comfyui_manager/glob/*
-include LICENSE.txt
-include README.md
-include requirements.txt
-include pyproject.toml
-include custom-node-list.json
-include extension-node-list.json
-include extras.json
-include github-stats.json
-include model-list.json
-include alter-list.json
-include comfyui_manager/channels.list.template

162

README.md

162

README.md

@@ -1,39 +1,100 @@

|

|||||||

# ComfyUI Manager

|

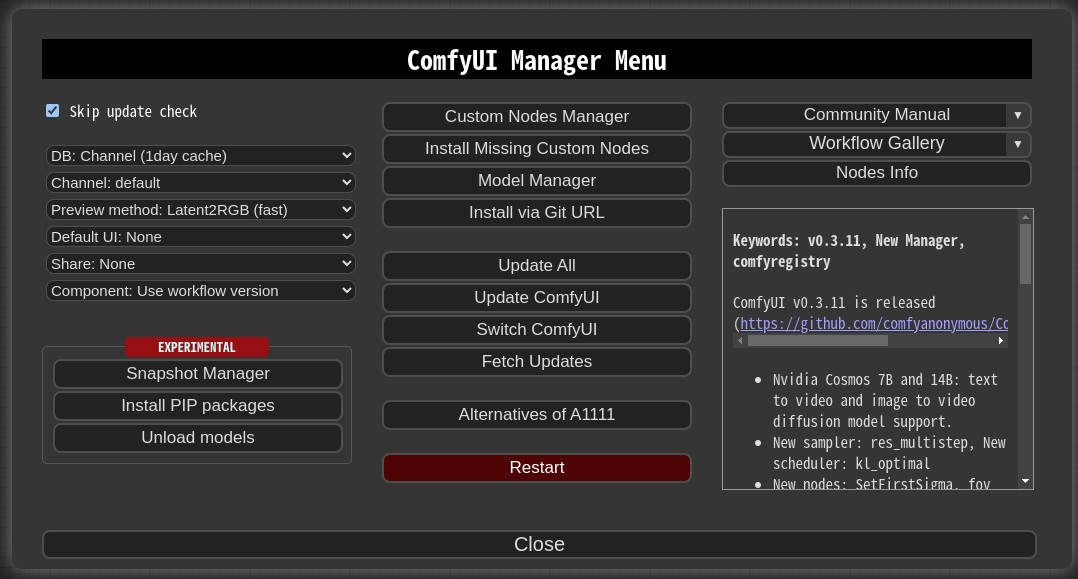

# ComfyUI Manager (V3.0)

|

||||||

|

|

||||||

|

## Introducing the New ComfyUI Manager (V4.0)

|

||||||

|

|

||||||

|

This branch is a temporary branch maintained for users of the older ComfyUI. It will be kept for a limited time and then replaced by the [manager-v4](https://github.com/Comfy-Org/ComfyUI-Manager/tree/manager-v4) branch. (This branch will be renamed to the `manager-v3` branch.)

|

||||||

|

|

||||||

|

Previously, **ComfyUI Manager** functioned as a somewhat independent extension of ComfyUI, requiring users to install it via `git clone`. This branch will continue to exist for a while to ensure that using `git clone` with older versions of ComfyUI does not cause problems.

|

||||||

|

|

||||||

|

The new **ComfyUI Manager** is now managed as an optional dependency of ComfyUI. This means that if you are using the new ComfyUI, you no longer need to visit this repository to use **ComfyUI Manager**.

|

||||||

|

|

||||||

|

**Notes:**

|

||||||

|

|

||||||

|

* **ComfyUI Manager** is now available as a package on PyPI: [https://pypi.org/project/comfyui-manager](https://pypi.org/project/comfyui-manager)

|

||||||

|

* Even if the **ComfyUI Manager** dependency is installed, you must enable it by adding the `--enable-manager` option when running ComfyUI.

|

||||||

|

* Once the new **ComfyUI Manager** is enabled, any copy of **comfyui-manager** installed under `ComfyUI/custom_nodes` will be disabled.

|

||||||

|

* Please make all future contributions for feature improvements and bug fixes to the manager-v4 branch.

|

||||||

|

* For now, custom node registration will continue in this branch as well, but it will eventually be fully replaced by registration through https://registry.comfy.org via `pyproject.toml` ([guide](https://docs.comfy.org/registry/overview)).

|

||||||

|

---

|

||||||

|

|

||||||

**ComfyUI-Manager** is an extension designed to enhance the usability of [ComfyUI](https://github.com/comfyanonymous/ComfyUI). It offers management functions to **install, remove, disable, and enable** various custom nodes of ComfyUI. Furthermore, this extension provides a hub feature and convenience functions to access a wide range of information within ComfyUI.

|

**ComfyUI-Manager** is an extension designed to enhance the usability of [ComfyUI](https://github.com/comfyanonymous/ComfyUI). It offers management functions to **install, remove, disable, and enable** various custom nodes of ComfyUI. Furthermore, this extension provides a hub feature and convenience functions to access a wide range of information within ComfyUI.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## NOTICE

|

|

||||||

* V4.0: Modify the structure to be installable via pip instead of using git clone.

|

|

||||||

* V3.16: Support for `uv` has been added. Set `use_uv` in `config.ini`.

|

|

||||||

* V3.10: `double-click feature` is removed

|

|

||||||

* This feature has been moved to https://github.com/ltdrdata/comfyui-connection-helper

|

|

||||||

* V3.3.2: Overhauled. Officially supports [https://registry.comfy.org/](https://registry.comfy.org/).

|

|

||||||

* You can see whole nodes info on [ComfyUI Nodes Info](https://ltdrdata.github.io/) page.

|

|

||||||

|

|

||||||

## Installation

|

## Installation

|

||||||

|

|

||||||

* When installing the latest ComfyUI, it will be automatically installed as a dependency, so manual installation is no longer necessary.

|

### Installation[method1] (General installation method: ComfyUI-Manager only)

|

||||||

|

|

||||||

* Manual installation of the nightly version:

|

To install ComfyUI-Manager in addition to an existing installation of ComfyUI, you can follow the following steps:

|

||||||

* Clone to a temporary directory (**Note:** Do **not** clone into `ComfyUI/custom_nodes`.)

|

|

||||||

```

|

|

||||||

git clone https://github.com/Comfy-Org/ComfyUI-Manager

|

|

||||||

```

|

|

||||||

* Install via pip

|

|

||||||

```

|

|

||||||

cd ComfyUI-Manager

|

|

||||||

pip install .

|

|

||||||

```

|

|

||||||

|

|

||||||

|

1. goto `ComfyUI/custom_nodes` dir in terminal(cmd)

|

||||||

|

2. `git clone https://github.com/ltdrdata/ComfyUI-Manager comfyui-manager`

|

||||||

|

3. Restart ComfyUI

|

||||||

|

|

||||||

|

|

||||||

|

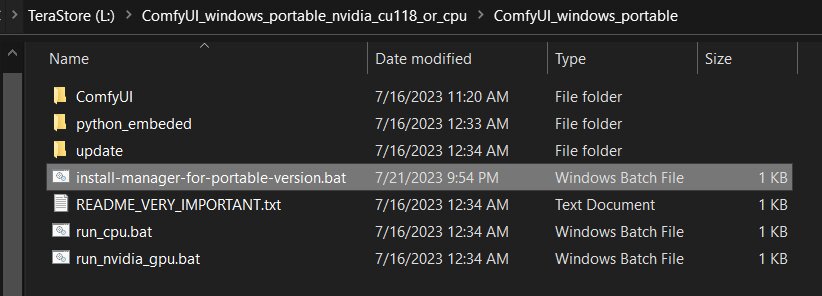

### Installation[method2] (Installation for portable ComfyUI version: ComfyUI-Manager only)

|

||||||

|

1. install git

|

||||||

|

- https://git-scm.com/download/win

|

||||||

|

- standalone version

|

||||||

|

- select option: use windows default console window

|

||||||

|

2. Download [scripts/install-manager-for-portable-version.bat](https://github.com/ltdrdata/ComfyUI-Manager/raw/main/scripts/install-manager-for-portable-version.bat) into installed `"ComfyUI_windows_portable"` directory

|

||||||

|

- Don't click. Right click the link and use save as...

|

||||||

|

3. double click `install-manager-for-portable-version.bat` batch file

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### Installation[method3] (Installation through comfy-cli: install ComfyUI and ComfyUI-Manager at once.)

|

||||||

|

> RECOMMENDED: comfy-cli provides various features to manage ComfyUI from the CLI.

|

||||||

|

|

||||||

|

* **prerequisite: python 3, git**

|

||||||

|

|

||||||

|

Windows:

|

||||||

|

```commandline

|

||||||

|

python -m venv venv

|

||||||

|

venv\Scripts\activate

|

||||||

|

pip install comfy-cli

|

||||||

|

comfy install

|

||||||

|

```

|

||||||

|

|

||||||

|

Linux/OSX:

|

||||||

|

```commandline

|

||||||

|

python -m venv venv

|

||||||

|

. venv/bin/activate

|

||||||

|

pip install comfy-cli

|

||||||

|

comfy install

|

||||||

|

```

|

||||||

* See also: https://github.com/Comfy-Org/comfy-cli

|

* See also: https://github.com/Comfy-Org/comfy-cli

|

||||||

|

|

||||||

|

|

||||||

## Front-end

|

### Installation[method4] (Installation for linux+venv: ComfyUI + ComfyUI-Manager)

|

||||||

|

|

||||||

* The built-in front-end of ComfyUI-Manager is the legacy front-end. The front-end for ComfyUI-Manager is now provided via [ComfyUI Frontend](https://github.com/Comfy-Org/ComfyUI_frontend).

|

To install ComfyUI with ComfyUI-Manager on Linux using a venv environment, you can follow these steps:

|

||||||

* To enable the legacy front-end, set the environment variable `ENABLE_LEGACY_COMFYUI_MANAGER_FRONT` to `true` before running.

|

* **prerequisite: python-is-python3, python3-venv, git**

|

||||||

|

|

||||||

|

1. Download [scripts/install-comfyui-venv-linux.sh](https://github.com/ltdrdata/ComfyUI-Manager/raw/main/scripts/install-comfyui-venv-linux.sh) into empty install directory

|

||||||

|

- Don't click. Right click the link and use save as...

|

||||||

|

- ComfyUI will be installed in the subdirectory of the specified directory, and the directory will contain the generated executable script.

|

||||||

|

2. `chmod +x install-comfyui-venv-linux.sh`

|

||||||

|

3. `./install-comfyui-venv-linux.sh`

|

||||||

|

|

||||||

|

### Installation Precautions

|

||||||

|

* **DO**: `ComfyUI-Manager` files must be accurately located in the path `ComfyUI/custom_nodes/comfyui-manager`

|

||||||

|

* Installing in a compressed file format is not recommended.

|

||||||

|

* **DON'T**: Decompress directly into the `ComfyUI/custom_nodes` location, resulting in the Manager contents like `__init__.py` being placed directly in that directory.

|

||||||

|

* You have to remove all ComfyUI-Manager files from `ComfyUI/custom_nodes`

|

||||||

|

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager/ComfyUI-Manager`.

|

||||||

|

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager-main`.

|

||||||

|

* In such cases, `ComfyUI-Manager` may operate, but it won't be recognized within `ComfyUI-Manager`, and updates cannot be performed. It also poses the risk of duplicate installations. Remove it and install properly via `git clone` method.

|

||||||

|

|

||||||

|

|

||||||

|

You can execute ComfyUI by running either `./run_gpu.sh` or `./run_cpu.sh` depending on your system configuration.

|

||||||

|

|

||||||

|

## Colab Notebook

|

||||||

|

This repository provides Colab notebooks that allow you to install and use ComfyUI, including ComfyUI-Manager. To use ComfyUI, [click on this link](https://colab.research.google.com/github/ltdrdata/ComfyUI-Manager/blob/main/notebooks/comfyui_colab_with_manager.ipynb).

|

||||||

|

* Support for installing ComfyUI

|

||||||

|

* Support for basic installation of ComfyUI-Manager

|

||||||

|

* Support for automatically installing dependencies of custom nodes upon restarting Colab notebooks.

|

||||||

|

|

||||||

|

|

||||||

## How To Use

|

## How To Use

|

||||||
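The Installation Precautions on the `+` side of the hunk above list which directory layouts under `ComfyUI/custom_nodes` work and which do not. The sketch below turns those bullets into checks. It is illustrative only: `install_layout_problems` is a hypothetical helper, not part of ComfyUI-Manager. Treating a stray `__init__.py` directly in `custom_nodes` as a sign of extraction in the wrong place is a heuristic assumption.

```python
import os
from typing import List


def install_layout_problems(custom_nodes: str) -> List[str]:
    """Hypothetical check for the install layouts the precautions warn against."""
    problems: List[str] = []

    # DON'T: Manager files (e.g. __init__.py) extracted straight into custom_nodes.
    if os.path.isfile(os.path.join(custom_nodes, "__init__.py")):
        problems.append("Manager files appear to be extracted directly into custom_nodes")

    for name in sorted(os.listdir(custom_nodes)):
        path = os.path.join(custom_nodes, name)
        if not os.path.isdir(path):
            continue
        lowered = name.lower()
        # DON'T: archive-style folder such as ComfyUI-Manager-main.
        if lowered == "comfyui-manager-main":
            problems.append(f"archive-style directory name: {name}")
        # DON'T: nested folder such as ComfyUI-Manager/ComfyUI-Manager.
        elif lowered == "comfyui-manager" and os.path.isdir(os.path.join(path, name)):
            problems.append(f"nested directory: {name}/{name}")

    # DO: the Manager lives exactly at custom_nodes/comfyui-manager.
    if not os.path.isdir(os.path.join(custom_nodes, "comfyui-manager")):
        problems.append("expected directory custom_nodes/comfyui-manager is missing")

    return problems
```

An empty list means none of the listed problems were detected. It does not prove the installation is complete.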

@@ -215,14 +276,13 @@ The following settings are applied based on the section marked as `is_default`.
 downgrade_blacklist = <Set a list of packages to prevent downgrades. List them separated by commas.>
 security_level = <Set the security level => strong|normal|normal-|weak>
 always_lazy_install = <Whether to perform dependency installation on restart even in environments other than Windows.>
-network_mode = <Set the network mode => public|private|offline|personal_cloud>
+network_mode = <Set the network mode => public|private|offline>
```
```
 * network_mode:
   - public: An environment that uses a typical public network.
   - private: An environment that uses a closed network, where a private node DB is configured via `channel_url`. (Uses cache if available)
   - offline: An environment that does not use any external connections when using an offline network. (Uses cache if available)
-  - personal_cloud: Applies relaxed security features in cloud environments such as Google Colab or Runpod, where strong security is not required.
 ## Additional Feature
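A `config.ini` written with the keys shown in the hunk above can be read with the standard-library `configparser`. The CLI code later in this diff does the same for `downgrade_blacklist`. The sketch below is a hypothetical validator: `read_default_settings` is not a ComfyUI-Manager function. It accepts the `network_mode` values listed on the `-` side, which include `personal_cloud`. It does not assume any default value for keys that are absent.

```python
import configparser

# Values listed on the `-` side of the hunk; the `+` side omits personal_cloud.
NETWORK_MODES = {"public", "private", "offline", "personal_cloud"}
SECURITY_LEVELS = {"strong", "normal", "normal-", "weak"}


def read_default_settings(ini_text: str) -> dict:
    """Hypothetical helper: validate a few keys of the [default] section."""
    config = configparser.ConfigParser(strict=False)
    config.read_string(ini_text)
    if not config.has_section("default"):
        return {}
    section = config["default"]
    settings = {}

    if "network_mode" in section:
        mode = section["network_mode"].strip()
        if mode not in NETWORK_MODES:
            raise ValueError(f"unsupported network_mode: {mode!r}")
        settings["network_mode"] = mode

    if "security_level" in section:
        level = section["security_level"].strip()
        if level not in SECURITY_LEVELS:
            raise ValueError(f"unsupported security_level: {level!r}")
        settings["security_level"] = level

    if "always_lazy_install" in section:
        # getboolean accepts true/false, yes/no, on/off and 1/0.
        settings["always_lazy_install"] = section.getboolean("always_lazy_install")

    return settings
```

For example, `read_default_settings("[default]\nnetwork_mode = offline\n")` returns `{"network_mode": "offline"}`. Unknown values raise `ValueError` instead of being silently ignored.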

@@ -313,33 +373,31 @@ When you run the `scan.sh` script:
 ## Security policy
-The security settings are applied based on whether the ComfyUI server's listener is non-local and whether the network mode is set to `personal_cloud`.
-* **non-local**: When the server is launched with `--listen` and is bound to a network range other than the local `127.` range, allowing remote IP access.
-* **personal\_cloud**: When the `network_mode` is set to `personal_cloud`.
-### Risky Level Table
-| Risky Level | features |
-|-------------|----------|
-| high+ | * `Install via git url`, `pip install`<BR>* Installation of nodepacks not registered in the `default channel`. |
-| high | * Fix nodepack |
-| middle+ | * Uninstall/Update<BR>* Installation of nodepacks registered in the `default channel`.<BR>* Restore/Remove Snapshot<BR>* Install model |
-| middle | * Restart |
-| low | * Update ComfyUI |
-### Security Level Table
-| Security Level | local | non-local (personal_cloud) | non-local (not personal_cloud) |
-|----------------|-------|----------------------------|--------------------------------|
-| strong | * Only `weak` level risky features are allowed | * Only `weak` level risky features are allowed | * Only `weak` level risky features are allowed |
-| normal | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+`, `high` and `middle+` level risky features are not allowed<BR>* `middle` level risky features are available |
-| normal- | * All features are available | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+`, `high` and `middle+` level risky features are not allowed<BR>* `middle` level risky features are available |
-| weak | * All features are available | * All features are available | * `high+` and `middle+` level risky features are not allowed<BR>* `high`, `middle` and `low` level risky features are available |
+* Edit `config.ini` file: add `security_level = <LEVEL>`
+  * `strong`
+    * doesn't allow `high` and `middle` level risky features
+  * `normal`
+    * doesn't allow `high` level risky features
+    * `middle` level risky features are available
+  * `normal-`
+    * doesn't allow `high` level risky features if `--listen` is specified with an address that does not start with `127.`
+    * `middle` level risky features are available
+  * `weak`
+    * all features are available
+* `high` level risky features
+  * `Install via git url`, `pip install`
+  * Installation of custom nodes not registered in the `default channel`.
+  * Fix custom nodes
+* `middle` level risky features
+  * Uninstall/Update
+  * Installation of custom nodes registered in the `default channel`.
+  * Restore/Remove Snapshot
+  * Restart
+* `low` level risky features
+  * Update ComfyUI
 # Disclaimer
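On the `-` side of the security hunk, the per-level bullet list is replaced with two tables. The sketch below encodes the Security Level Table as data plus a small lookup. The names `BLOCKED`, `access_column` and `is_allowed` are hypothetical, not ComfyUI-Manager APIs.

Two readings are assumptions:

* The `strong` row says only `weak` level risky features are allowed, but the Risky Level Table has no `weak` risk level. The sketch therefore allows only `low`, matching the `+` side's description of `strong`.
* `low` is treated as allowed wherever the table does not block it.

```python
from typing import Optional

RISK_LEVELS = ("high+", "high", "middle+", "middle", "low")

# Risk levels blocked by each security level, per access column of the table.
# Assumption: the `strong` row's "only `weak` level" is read as "only `low`".
BLOCKED = {
    "strong": {
        "local": {"high+", "high", "middle+", "middle"},
        "personal_cloud": {"high+", "high", "middle+", "middle"},
        "non_local": {"high+", "high", "middle+", "middle"},
    },
    "normal": {
        "local": {"high+", "high"},
        "personal_cloud": {"high+", "high"},
        "non_local": {"high+", "high", "middle+"},
    },
    "normal-": {
        "local": set(),
        "personal_cloud": {"high+", "high"},
        "non_local": {"high+", "high", "middle+"},
    },
    "weak": {
        "local": set(),
        "personal_cloud": set(),
        "non_local": {"high+", "middle+"},
    },
}


def access_column(listen: Optional[str], network_mode: str) -> str:
    """Map the --listen address and network_mode onto a table column."""
    # Non-local means launched with --listen on an address outside 127.x.
    # This sketch does not special-case names such as "localhost" or "::1".
    if listen is None or listen.startswith("127."):
        return "local"
    return "personal_cloud" if network_mode == "personal_cloud" else "non_local"


def is_allowed(security_level: str, risk: str, listen: Optional[str], network_mode: str) -> bool:
    """Return True if a feature of the given risk level is permitted."""
    if risk not in RISK_LEVELS:
        raise ValueError(f"unknown risk level: {risk}")
    column = access_column(listen, network_mode)
    return risk not in BLOCKED[security_level][column]
```

Keeping the table as data makes a change to one cell a one-line edit, rather than a change spread across nested conditionals.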

25

__init__.py

Normal file

@@ -0,0 +1,25 @@
+"""
+This file is the entry point for the ComfyUI-Manager package, handling CLI-only mode and initial setup.
+"""
+
+import os
+import sys
+
+cli_mode_flag = os.path.join(os.path.dirname(__file__), '.enable-cli-only-mode')
+
+if not os.path.exists(cli_mode_flag):
+    sys.path.append(os.path.join(os.path.dirname(__file__), "glob"))
+    import manager_server # noqa: F401
+    import share_3rdparty # noqa: F401
+    import cm_global
+
+    if not cm_global.disable_front and not 'DISABLE_COMFYUI_MANAGER_FRONT' in os.environ:
+        WEB_DIRECTORY = "js"
+else:
+    print("\n[ComfyUI-Manager] !! cli-only-mode is enabled !!\n")
+
+NODE_CLASS_MAPPINGS = {}
+__all__ = ['NODE_CLASS_MAPPINGS']
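The added `__init__.py` chooses between three outcomes:

* CLI-only mode, when a `.enable-cli-only-mode` file sits next to it.
* Server modules plus the web directory.
* Server modules without the web directory.

The sketch below restates that branching as a pure function so it can be tested without importing ComfyUI. `manager_startup_mode` and its return labels are hypothetical, and the `disable_front` parameter stands in for `cm_global.disable_front`.

```python
import os
from typing import Mapping


def manager_startup_mode(package_dir: str, environ: Mapping[str, str], disable_front: bool = False) -> str:
    """Hypothetical restatement of the branching in the added __init__.py."""
    cli_mode_flag = os.path.join(package_dir, ".enable-cli-only-mode")
    if os.path.exists(cli_mode_flag):
        # Flag file present: no server modules are imported; only a notice is printed.
        return "cli-only"
    # `disable_front` stands in for cm_global.disable_front.
    if not disable_front and "DISABLE_COMFYUI_MANAGER_FRONT" not in environ:
        return "server+web"  # corresponds to setting WEB_DIRECTORY = "js"
    return "server"  # server modules load, but no WEB_DIRECTORY is exported
```

The sketch writes the environment test as `"X" not in environ`. This is equivalent to the original `not 'X' in os.environ`, but it is the form PEP 8 recommends.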

6

channels.list.template

Normal file

@@ -0,0 +1,6 @@
+default::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main
+recent::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/new
+legacy::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/legacy
+forked::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/forked
+dev::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/dev
+tutorial::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/tutorial
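Each line of the added `channels.list.template` has the form `name::url`. Below is a minimal parser sketch that turns it into a name-to-URL mapping. It assumes blank lines can be skipped and that the first `::` separates the name from the URL. The function name `parse_channels` is hypothetical; this is not how ComfyUI-Manager itself necessarily reads the file.

```python
from typing import Dict


def parse_channels(text: str) -> Dict[str, str]:
    """Split each non-empty `name::url` line into a name -> URL mapping."""
    channels: Dict[str, str] = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue
        # partition splits on the first "::" only; "https://" contains no "::".
        name, sep, url = line.partition("::")
        if not sep or not name or not url:
            raise ValueError(f"line {lineno}: expected 'name::url', got {raw!r}")
        channels[name.strip()] = url.strip()
    return channels
```

Because `dict` preserves insertion order, the result keeps the template's order, with `default` first.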

@@ -15,31 +15,31 @@ import git
 import importlib

-from ..common import manager_util
+sys.path.append(os.path.dirname(__file__))
+sys.path.append(os.path.join(os.path.dirname(__file__), "glob"))
+
+import manager_util

 # read env vars
 # COMFYUI_FOLDERS_BASE_PATH is not required in cm-cli.py
 # `comfy_path` should be resolved before importing manager_core

 comfy_path = os.environ.get('COMFYUI_PATH')

 if comfy_path is None:
-    print("[bold red]cm-cli: environment variable 'COMFYUI_PATH' is not specified.[/bold red]")
-    exit(-1)
+    try:
+        import folder_paths
+        comfy_path = os.path.join(os.path.dirname(folder_paths.__file__))
+    except:
+        print("\n[bold yellow]WARN: The `COMFYUI_PATH` environment variable is not set. Assuming `custom_nodes/ComfyUI-Manager/../../` as the ComfyUI path.[/bold yellow]", file=sys.stderr)
+        comfy_path = os.path.abspath(os.path.join(manager_util.comfyui_manager_path, '..', '..'))

+# This should be placed here
 sys.path.append(comfy_path)

-if not os.path.exists(os.path.join(comfy_path, 'folder_paths.py')):
-    print("[bold red]cm-cli: '{comfy_path}' is not a valid 'COMFYUI_PATH' location.[/bold red]")
-    exit(-1)

 import utils.extra_config
-from ..common import cm_global
-from ..glob import manager_core as core
-from ..common import context
-from ..glob.manager_core import unified_manager
-from ..common import cnr_utils
+import cm_global
+import manager_core as core
+from manager_core import unified_manager
+import cnr_utils

 comfyui_manager_path = os.path.abspath(os.path.dirname(__file__))
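The two sides of this hunk resolve `comfy_path` differently:

* The `+` side falls back to importing `folder_paths`, and then to a path two levels above the Manager directory.
* The `-` side exits unless `COMFYUI_PATH` is set, and later checks that `folder_paths.py` exists.

The hypothetical function below combines the `+` fallback chain with the `-` validation, so the logic can be exercised without a real ComfyUI install. Instead of importing `folder_paths` itself, the function takes `folder_paths.__file__` from the caller, or `None` if that import failed.

One detail of the `-` side: its error message lacks an `f` prefix, so `{comfy_path}` would be printed literally. The sketch uses an f-string.

```python
import os
from typing import Mapping, Optional


def resolve_comfy_path(environ: Mapping[str, str],
                       folder_paths_file: Optional[str],
                       manager_path: str) -> str:
    """Hypothetical sketch: pick a ComfyUI root, then check that it looks valid."""
    comfy_path = environ.get("COMFYUI_PATH")
    if comfy_path is None:
        if folder_paths_file is not None:
            # `+` side: the directory that contains folder_paths.py.
            comfy_path = os.path.dirname(folder_paths_file)
        else:
            # `+` side fallback: two levels above the Manager directory.
            comfy_path = os.path.abspath(os.path.join(manager_path, "..", ".."))
    # `-` side validation, written as an f-string so the path is interpolated.
    if not os.path.exists(os.path.join(comfy_path, "folder_paths.py")):
        raise FileNotFoundError(f"'{comfy_path}' is not a valid 'COMFYUI_PATH' location.")
    return comfy_path
```

Raising an exception rather than calling `exit(-1)` leaves the decision to terminate with the caller, which also makes the function testable.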

@@ -66,7 +66,7 @@ def check_comfyui_hash():
         repo = git.Repo(comfy_path)
         core.comfy_ui_revision = len(list(repo.iter_commits('HEAD')))
         core.comfy_ui_commit_datetime = repo.head.commit.committed_datetime
-    except Exception:
+    except:
         print('[bold yellow]INFO: Frozen ComfyUI mode.[/bold yellow]')
         core.comfy_ui_revision = 0
         core.comfy_ui_commit_datetime = 0

@@ -82,7 +82,7 @@ def read_downgrade_blacklist():
     try:
         import configparser
         config = configparser.ConfigParser(strict=False)
-        config.read(context.manager_config_path)
+        config.read(core.manager_config.path)
         default_conf = config['default']

         if 'downgrade_blacklist' in default_conf:

@@ -90,7 +90,7 @@ def read_downgrade_blacklist():
             items = [x.strip() for x in items if x != '']
             cm_global.pip_downgrade_blacklist += items
             cm_global.pip_downgrade_blacklist = list(set(cm_global.pip_downgrade_blacklist))
-    except Exception:
+    except:
         pass
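In these hunks, `read_downgrade_blacklist` strips the comma-separated entries, adds them to the existing list, and de-duplicates with `list(set(...))`. This assumes `items` comes from splitting the config value on commas; that line falls outside the hunk.

Below is a standalone sketch of the merge step. `merge_downgrade_blacklist` is a hypothetical name. It differs from the original in two deliberate ways:

* It strips entries before filtering. The original `if x != ''` test runs before `strip()`, so a whitespace-only entry survives as `''`.
* It keeps first-seen order, which `set` does not guarantee.

```python
from typing import Iterable, List


def merge_downgrade_blacklist(existing: Iterable[str], raw_value: str) -> List[str]:
    """Merge a comma-separated downgrade_blacklist value into an existing list."""
    # Strip first, then drop empties, so entries like " " do not become "".
    items = [x.strip() for x in raw_value.split(",")]
    items = [x for x in items if x]
    # dict.fromkeys de-duplicates while preserving first-seen order.
    return list(dict.fromkeys(list(existing) + items))
```

For example, `merge_downgrade_blacklist(["torch"], "numpy, , torch,pillow")` yields `["torch", "numpy", "pillow"]`.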

@@ -105,7 +105,7 @@ class Ctx:
         self.no_deps = False
         self.mode = 'cache'
         self.user_directory = None
-        self.custom_nodes_paths = [os.path.join(context.comfy_base_path, 'custom_nodes')]
+        self.custom_nodes_paths = [os.path.join(core.comfy_base_path, 'custom_nodes')]
         self.manager_files_directory = os.path.dirname(__file__)

         if Ctx.folder_paths is None:

@@ -129,7 +129,8 @@ class Ctx:
         if channel is not None:
             self.channel = channel

-        unified_manager.reload()
+        asyncio.run(unified_manager.reload(cache_mode=self.mode, dont_wait=False))
+        asyncio.run(unified_manager.load_nightly(self.channel, self.mode))

     def set_no_deps(self, no_deps):
         self.no_deps = no_deps

@@ -142,14 +143,14 @@ class Ctx:
         if os.path.exists(extra_model_paths_yaml):
             utils.extra_config.load_extra_path_config(extra_model_paths_yaml)

-        context.update_user_directory(user_directory)
+        core.update_user_directory(user_directory)

-        if os.path.exists(context.manager_pip_overrides_path):
-            with open(context.manager_pip_overrides_path, 'r', encoding="UTF-8", errors="ignore") as json_file:
+        if os.path.exists(core.manager_pip_overrides_path):
+            with open(core.manager_pip_overrides_path, 'r', encoding="UTF-8", errors="ignore") as json_file:
                 cm_global.pip_overrides = json.load(json_file)

-        if os.path.exists(context.manager_pip_blacklist_path):
-            with open(context.manager_pip_blacklist_path, 'r', encoding="UTF-8", errors="ignore") as f:
+        if os.path.exists(core.manager_pip_blacklist_path):
+            with open(core.manager_pip_blacklist_path, 'r', encoding="UTF-8", errors="ignore") as f:
                 for x in f.readlines():
                     y = x.strip()
                     if y != '':

@@ -162,15 +163,15 @@ class Ctx:

     @staticmethod
     def get_startup_scripts_path():
-        return os.path.join(context.manager_startup_script_path, "install-scripts.txt")
+        return os.path.join(core.manager_startup_script_path, "install-scripts.txt")

     @staticmethod
     def get_restore_snapshot_path():
-        return os.path.join(context.manager_startup_script_path, "restore-snapshot.json")
+        return os.path.join(core.manager_startup_script_path, "restore-snapshot.json")

     @staticmethod
     def get_snapshot_path():
-        return context.manager_snapshot_path
+        return core.manager_snapshot_path

     @staticmethod
     def get_custom_nodes_paths():

@@ -187,14 +188,9 @@ def install_node(node_spec_str, is_all=False, cnt_msg='', **kwargs):
     exit_on_fail = kwargs.get('exit_on_fail', False)
     print(f"install_node exit on fail:{exit_on_fail}...")

-    if unified_manager.is_url_like(node_spec_str):
-        # install via git URLs
-        repo_name = os.path.basename(node_spec_str)
-        if repo_name.endswith('.git'):
-            repo_name = repo_name[:-4]
-        res = asyncio.run(unified_manager.repo_install(
-            node_spec_str, repo_name, instant_execution=True, no_deps=cmd_ctx.no_deps
-        ))
+    if core.is_valid_url(node_spec_str):
+        # install via urls
+        res = asyncio.run(core.gitclone_install(node_spec_str, no_deps=cmd_ctx.no_deps))
         if not res.result:
             print(res.msg)
             print(f"[bold red]ERROR: An error occurred while installing '{node_spec_str}'.[/bold red]")
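On the `-` side, `install_node` derives the target directory name from the URL with `os.path.basename` and strips a trailing `.git`. The sketch below extracts that step into a standalone function, `repo_name_from_url`, which is a hypothetical name. It splits on `/` instead of calling `os.path.basename`, so a trailing slash does not yield an empty name. That is a small behavioral difference from the original.

```python
def repo_name_from_url(url: str) -> str:
    """Derive a directory name from a git URL, following the `-` side's logic."""
    # Unlike os.path.basename on the raw string, tolerate a trailing slash.
    name = url.rstrip("/").rsplit("/", 1)[-1]
    # Drop a trailing ".git", as the original does with repo_name[:-4].
    if name.endswith(".git"):
        name = name[:-4]
    return name
```

For example, `repo_name_from_url("https://github.com/Comfy-Org/ComfyUI-Manager.git")` returns `"ComfyUI-Manager"`.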

@@ -228,7 +224,7 @@ def install_node(node_spec_str, is_all=False, cnt_msg='', **kwargs):
