Compare commits

2 Commits

manager-v4...draft-v4-s

| Author | SHA1 | Date | |
|---|---|---|---|
| | 24ca0ab538 | | |
| | 62da330182 | | |

--- a/.github/workflows/publish-to-pypi.yml
+++ b/.github/workflows/publish-to-pypi.yml

@@ -4,7 +4,7 @@ on:
 workflow_dispatch:
 push:
 branches:
-- manager-v4
+- main
 paths:
 - "pyproject.toml"
 

@@ -21,7 +21,7 @@ jobs:
 - name: Set up Python
 uses: actions/setup-python@v4
 with:
-python-version: '3.x'
+python-version: '3.9'
 
 - name: Install build dependencies
 run: |

@@ -31,28 +31,28 @@ jobs:
 - name: Get current version
 id: current_version
 run: |
-CURRENT_VERSION=$(grep -oP '^version = "\K[^"]+' pyproject.toml)
+CURRENT_VERSION=$(grep -oP 'version = "\K[^"]+' pyproject.toml)
 echo "version=$CURRENT_VERSION" >> $GITHUB_OUTPUT
 echo "Current version: $CURRENT_VERSION"
 
 - name: Build package
 run: python -m build
 
-# - name: Create GitHub Release
-# id: create_release
-# uses: softprops/action-gh-release@v2
-# env:
-# GITHUB_TOKEN: ${{ github.token }}
-# with:
-# files: dist/*
-# tag_name: v${{ steps.current_version.outputs.version }}
-# draft: false
-# prerelease: false
-# generate_release_notes: true
+- name: Create GitHub Release
+id: create_release
+uses: softprops/action-gh-release@v2
+env:
+GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
+with:
+files: dist/*
+tag_name: v${{ steps.current_version.outputs.version }}
+draft: false
+prerelease: false
+generate_release_notes: true
 
 - name: Publish to PyPI
-uses: pypa/gh-action-pypi-publish@76f52bc884231f62b9a034ebfe128415bbaabdfc
+uses: pypa/gh-action-pypi-publish@release/v1
 with:
 password: ${{ secrets.PYPI_TOKEN }}
 skip-existing: true
 verbose: true
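
The only functional change in the version step is the `^` anchor in the `grep -oP` pattern. As a rough illustration, the Python sketch below mimics both patterns with `re` (which has no `\K`, so a capture group stands in for it). The `pyproject.toml` excerpt, including the pytest `minversion` key, is made up: without the anchor, `minversion = "6.0"` also matches, so `grep -o` would print two values and `CURRENT_VERSION` would span two lines, putting a second, malformed line into `$GITHUB_OUTPUT`.

```python
import re

# Hypothetical pyproject.toml excerpt; only the [project] version should be extracted.
PYPROJECT = """\
[project]
name = "example-node-pack"
version = "4.0.0"

[tool.pytest.ini_options]
minversion = "6.0"
"""

# Like grep -oP '^version = "\K[^"]+' : the match must start at the beginning of a line.
ANCHORED = re.compile(r'^version = "([^"]+)', re.MULTILINE)
# Like grep -oP 'version = "\K[^"]+' : the match may start anywhere in a line.
UNANCHORED = re.compile(r'version = "([^"]+)')


def extract_versions(pattern: re.Pattern, text: str) -> list:
    """Return every captured value, the way grep -o prints every match."""
    return pattern.findall(text)


print(extract_versions(ANCHORED, PYPROJECT))    # ['4.0.0']
print(extract_versions(UNANCHORED, PYPROJECT))  # ['4.0.0', '6.0']
```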

--- /dev/null
+++ b/.github/workflows/publish.yml

@@ -0,0 +1,25 @@
+name: Publish to Comfy registry
+on:
+workflow_dispatch:
+push:
+branches:
+- main-blocked
+paths:
+- "pyproject.toml"
+
+permissions:
+issues: write
+
+jobs:
+publish-node:
+name: Publish Custom Node to registry
+runs-on: ubuntu-latest
+if: ${{ github.repository_owner == 'ltdrdata' || github.repository_owner == 'Comfy-Org' }}
+steps:
+- name: Check out code
+uses: actions/checkout@v4
+- name: Publish Custom Node
+uses: Comfy-Org/publish-node-action@v1
+with:
+## Add your own personal access token to your Github Repository secrets and reference it here.
+personal_access_token: ${{ secrets.REGISTRY_ACCESS_TOKEN }}
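
Read together, the trigger block and the job-level `if:` in the new `publish.yml` mean the registry publish runs only for a manual dispatch, or for a push to `main-blocked` that touches `pyproject.toml`, and in either case only in a repository owned by `ltdrdata` or `Comfy-Org`. The sketch below models that as a plain predicate. The function name is hypothetical, `fnmatchcase` is only a stand-in for GitHub's path-filter globbing (the two agree for a literal file name like this one), and the owner check is case-insensitive on the assumption that GitHub Actions expressions compare strings without regard to case.

```python
from fnmatch import fnmatchcase

# Values copied from the publish.yml added in this diff.
PUSH_BRANCHES = ["main-blocked"]
PUSH_PATHS = ["pyproject.toml"]
ALLOWED_OWNERS = ["ltdrdata", "Comfy-Org"]


def publish_node_job_runs(event: str, branch: str, changed_files: list, owner: str) -> bool:
    """Hypothetical model of when the publish-node job would execute."""
    if event == "workflow_dispatch":
        triggered = True
    elif event == "push":
        # Both filters must pass: a listed branch AND at least one matching changed path.
        triggered = branch in PUSH_BRANCHES and any(
            fnmatchcase(path, pattern) for path in changed_files for pattern in PUSH_PATHS
        )
    else:
        triggered = False
    # Job-level guard, compared case-insensitively (assumption, see above).
    owner_ok = owner.lower() in (o.lower() for o in ALLOWED_OWNERS)
    return triggered and owner_ok


print(publish_node_job_runs("push", "main-blocked", ["pyproject.toml"], "ltdrdata"))  # True
```

Note that the branch name `main-blocked` means an ordinary push to `main` does not trigger this workflow at all.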

--- a/README.md
+++ b/README.md

@@ -89,20 +89,20 @@
 
 
 ## Paths
-In `ComfyUI-Manager` V4.0.3b4 and later, configuration files and dynamically generated files are located under `<USER_DIRECTORY>/__manager/`.
+In `ComfyUI-Manager` V3.0 and later, configuration files and dynamically generated files are located under `<USER_DIRECTORY>/default/ComfyUI-Manager/`.
 
 * <USER_DIRECTORY>
 * If executed without any options, the path defaults to ComfyUI/user.
 * It can be set using --user-directory <USER_DIRECTORY>.
 
-* Basic config files: `<USER_DIRECTORY>/__manager/config.ini`
-* Configurable channel lists: `<USER_DIRECTORY>/__manager/channels.ini`
-* Configurable pip overrides: `<USER_DIRECTORY>/__manager/pip_overrides.json`
-* Configurable pip blacklist: `<USER_DIRECTORY>/__manager/pip_blacklist.list`
-* Configurable pip auto fix: `<USER_DIRECTORY>/__manager/pip_auto_fix.list`
-* Saved snapshot files: `<USER_DIRECTORY>/__manager/snapshots`
-* Startup script files: `<USER_DIRECTORY>/__manager/startup-scripts`
-* Component files: `<USER_DIRECTORY>/__manager/components`
+* Basic config files: `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini`
+* Configurable channel lists: `<USER_DIRECTORY>/default/ComfyUI-Manager/channels.ini`
+* Configurable pip overrides: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_overrides.json`
+* Configurable pip blacklist: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_blacklist.list`
+* Configurable pip auto fix: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_auto_fix.list`
+* Saved snapshot files: `<USER_DIRECTORY>/default/ComfyUI-Manager/snapshots`
+* Startup script files: `<USER_DIRECTORY>/default/ComfyUI-Manager/startup-scripts`
+* Component files: `<USER_DIRECTORY>/default/ComfyUI-Manager/components`
 
 
 ## `extra_model_paths.yaml` Configuration
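
The two sides of this hunk describe different layouts for the same set of files: `<USER_DIRECTORY>/__manager/` (manager-v4, V4.0.3b4 and later) versus `<USER_DIRECTORY>/default/ComfyUI-Manager/` (draft-v4-s, V3.0 and later). A small sketch that resolves each listed file under either layout; the `LAYOUTS`/`FILES` tables and `manager_paths` are illustrative names, not part of ComfyUI-Manager, and `PurePosixPath` is used only so the output is identical on every OS.

```python
from pathlib import PurePosixPath

# Sub-directory of <USER_DIRECTORY> used by each README variant in this diff.
LAYOUTS = {
    "v4": ("__manager",),                  # V4.0.3b4 and later (manager-v4 side)
    "v3": ("default", "ComfyUI-Manager"),  # V3.0 and later (draft-v4-s side)
}

# File and directory names listed under "## Paths".
FILES = {
    "config": "config.ini",
    "channels": "channels.ini",
    "pip_overrides": "pip_overrides.json",
    "pip_blacklist": "pip_blacklist.list",
    "pip_auto_fix": "pip_auto_fix.list",
    "snapshots": "snapshots",
    "startup_scripts": "startup-scripts",
    "components": "components",
}


def manager_paths(user_dir: str = "ComfyUI/user", layout: str = "v4") -> dict:
    """Map each entry in FILES to its full path under the chosen layout."""
    base = PurePosixPath(user_dir).joinpath(*LAYOUTS[layout])
    return {key: str(base / name) for key, name in FILES.items()}


print(manager_paths(layout="v4")["config"])  # ComfyUI/user/__manager/config.ini
print(manager_paths(layout="v3")["config"])  # ComfyUI/user/default/ComfyUI-Manager/config.ini
```

The default `user_dir` follows the README's statement that the path defaults to `ComfyUI/user` when no `--user-directory` option is given.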

@@ -115,17 +115,17 @@ The following settings are applied based on the section marked as `is_default`.
 

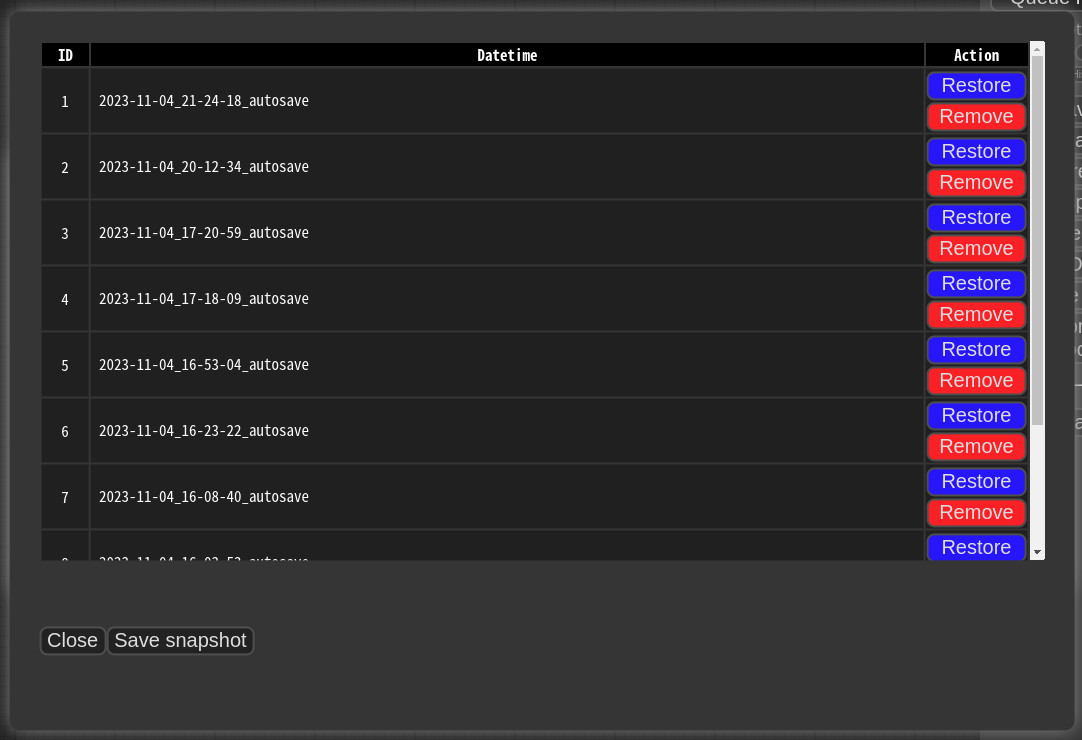

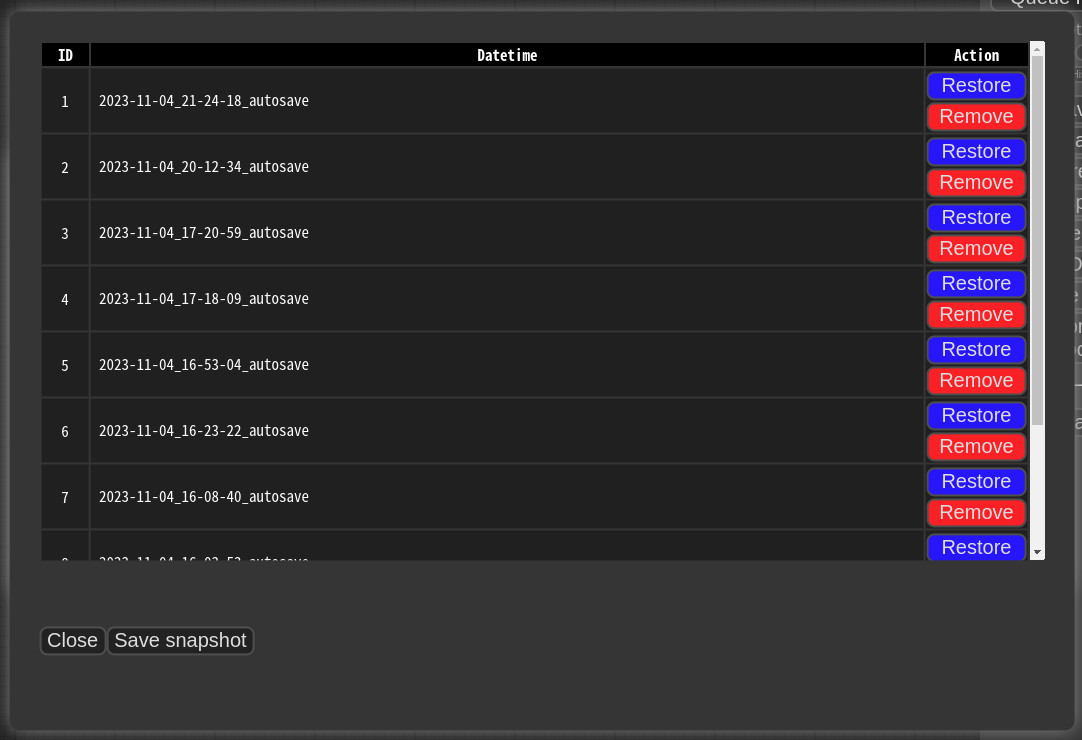

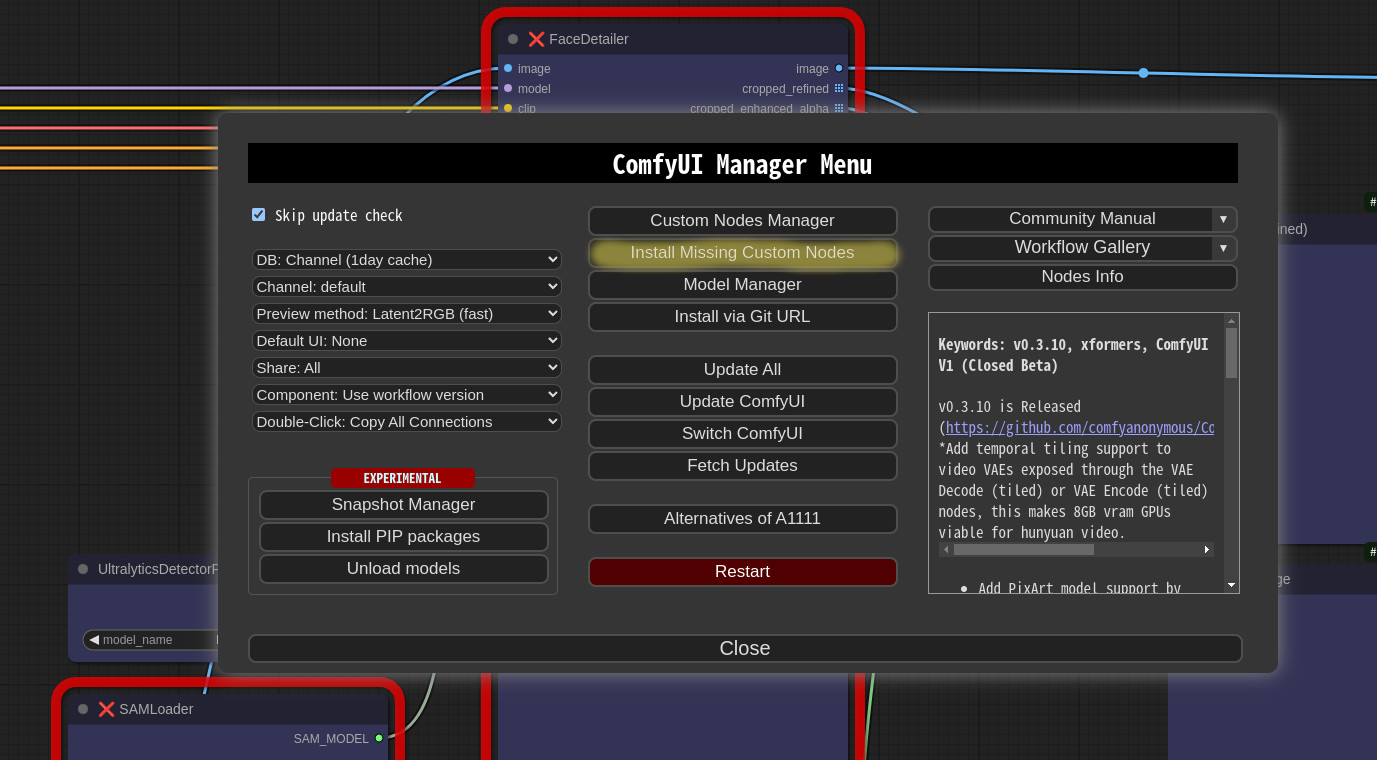

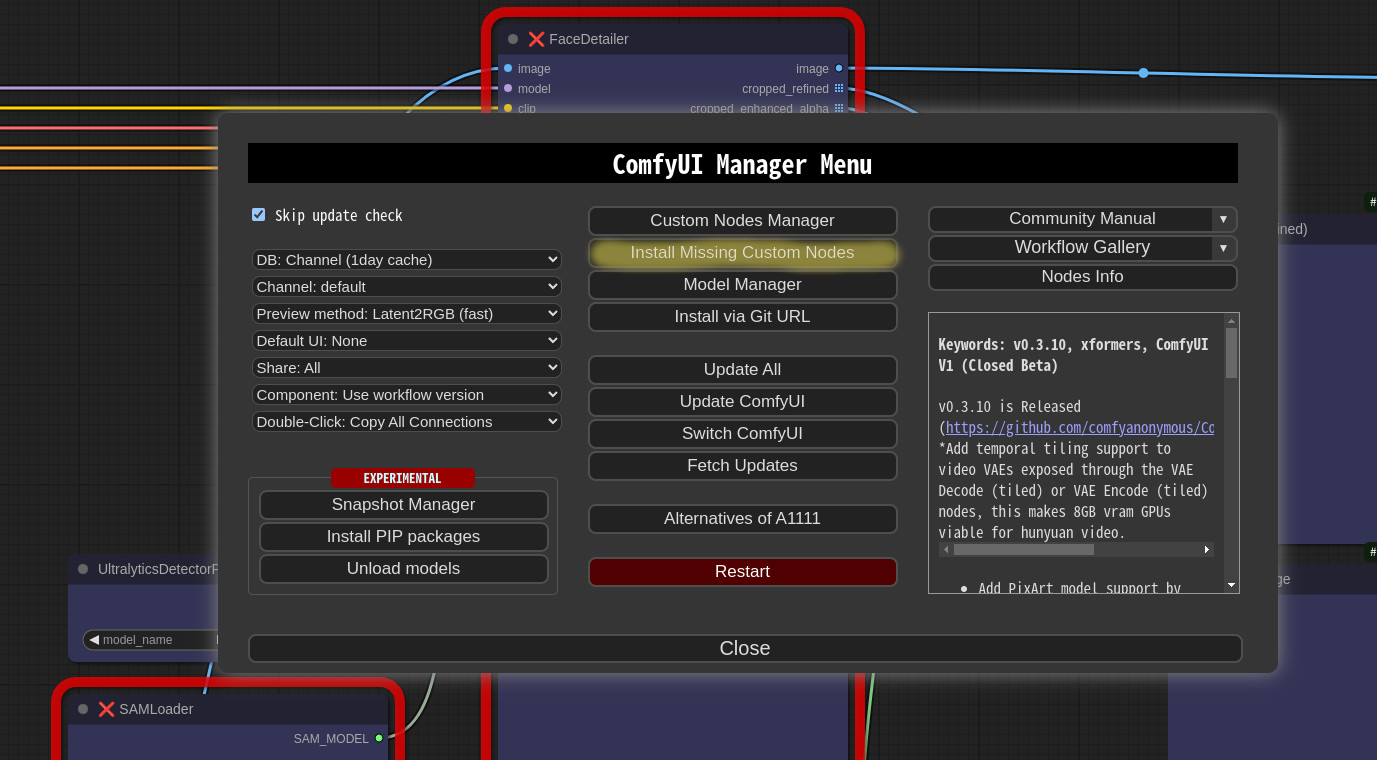

 ## Snapshot-Manager
 * When you press `Save snapshot` or use `Update All` on `Manager Menu`, the current installation status snapshot is saved.
-* Snapshot file dir: `<USER_DIRECTORY>/__manager/snapshots`
+* Snapshot file dir: `<USER_DIRECTORY>/default/ComfyUI-Manager/snapshots`
 * You can rename snapshot file.
 * Press the "Restore" button to revert to the installation status of the respective snapshot.
 * However, for custom nodes not managed by Git, snapshot support is incomplete.
 * When you press `Restore`, it will take effect on the next ComfyUI startup.
-* The selected snapshot file is saved in `<USER_DIRECTORY>/__manager/startup-scripts/restore-snapshot.json`, and upon restarting ComfyUI, the snapshot is applied and then deleted.
+* The selected snapshot file is saved in `<USER_DIRECTORY>/default/ComfyUI-Manager/startup-scripts/restore-snapshot.json`, and upon restarting ComfyUI, the snapshot is applied and then deleted.
 
 
 
 
-## cm-cli: command line tools for power users
+## cm-cli: command line tools for power user
 * A tool is provided that allows you to use the features of ComfyUI-Manager without running ComfyUI.
 * For more details, please refer to the [cm-cli documentation](docs/en/cm-cli.md).
 
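
Both sides describe the same restore mechanism: pressing `Restore` only writes `restore-snapshot.json` into `startup-scripts`, and the snapshot is applied, then the file deleted, on the next start. A minimal sketch of that apply-once pattern, assuming a hypothetical `apply_snapshot` callback in place of ComfyUI-Manager's real restore logic:

```python
import json
import os

RESTORE_FILE = "restore-snapshot.json"  # file name taken from the README


def apply_pending_restore(startup_dir: str, apply_snapshot) -> bool:
    """Apply a pending restore request once, then remove it.

    apply_snapshot is a hypothetical callback standing in for the real restore step.
    Returns True if a snapshot was found and applied.
    """
    path = os.path.join(startup_dir, RESTORE_FILE)
    if not os.path.exists(path):
        return False  # nothing was scheduled via the Restore button
    with open(path, "r", encoding="utf-8") as f:
        snapshot = json.load(f)
    apply_snapshot(snapshot)
    # Delete only after applying, so the same restore is not repeated on every startup.
    os.remove(path)
    return True
```

In this sketch, if `apply_snapshot` raises, the file stays in place and the restore is retried on the next start; the README does not say how the real implementation handles a failed restore.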

@@ -169,12 +169,12 @@ The following settings are applied based on the section marked as `is_default`.
 }
 ```
 * `<current timestamp>` Ensure that the timestamp is always unique.
-* "components" should have the same structure as the content of the file stored in `<USER_DIRECTORY>/__manager/components`.
+* "components" should have the same structure as the content of the file stored in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.
 * `<component name>`: The name should be in the format `<prefix>::<node name>`.
-* `<component node data>`: In the node data of the group node.
+* `<compnent nodeata>`: In the nodedata of the group node.
 * `<version>`: Only two formats are allowed: `major.minor.patch` or `major.minor`. (e.g. `1.0`, `2.2.1`)
 * `<datetime>`: Saved time
-* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in `<USER_DIRECTORY>/__manager/components`.
+* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.
 * `<category>`: If there is neither a category nor a packname, it is saved in the components category.
 ```
 "version":"1.0",
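
The component format rules in this hunk are the same on both sides: a `<component name>` of the form `<prefix>::<node name>` and a `<version>` of either `major.minor` or `major.minor.patch`. A sketch of validators for those two rules; the function names are hypothetical, and treating each version part as a run of decimal digits is an assumption the README does not spell out.

```python
import re

# major.minor or major.minor.patch, each part a run of digits (assumed).
VERSION_RE = re.compile(r"\d+\.\d+(?:\.\d+)?")


def is_valid_component_version(version: str) -> bool:
    """True for '1.0' or '2.2.1'; False for '1', '1.0.0.1', '1.0-beta'."""
    return VERSION_RE.fullmatch(version) is not None


def split_component_name(name: str) -> tuple:
    """Split '<prefix>::<node name>' into (prefix, node_name)."""
    prefix, sep, node_name = name.partition("::")
    if not sep or not prefix or not node_name:
        raise ValueError(f"expected '<prefix>::<node name>', got {name!r}")
    return prefix, node_name


print(split_component_name("mypack::Upscale"))  # ('mypack', 'Upscale')
```

`fullmatch` is used so that trailing text such as a fourth version part is rejected rather than silently ignored.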

@@ -189,7 +189,7 @@ The following settings are applied based on the section marked as `is_default`.
 * Dragging and dropping or pasting a single component will add a node. However, when adding multiple components, nodes will not be added.
 
 
-## Support for installing missing nodes
+## Support of missing nodes installation
 
 
 

@@ -229,10 +229,10 @@ The following settings are applied based on the section marked as `is_default`.
 * Logging to file feature
 * This feature is enabled by default and can be disabled by setting `file_logging = False` in the `config.ini`.
 
-* Fix node (recreate): When right-clicking on a node and selecting `Fix node (recreate)`, you can recreate the node. The widget's values are reset, while the connections maintain those with the same names.
+* Fix node(recreate): When right-clicking on a node and selecting `Fix node (recreate)`, you can recreate the node. The widget's values are reset, while the connections maintain those with the same names.
 * It is used to correct errors in nodes of old workflows created before, which are incompatible with the version changes of custom nodes.
 
-* Double-Click Node Title: You can set the double-click behavior of nodes in the ComfyUI-Manager menu.
+* Double-Click Node Title: You can set the double click behavior of nodes in the ComfyUI-Manager menu.
 * `Copy All Connections`, `Copy Input Connections`: Double-clicking a node copies the connections of the nearest node.
 * This action targets the nearest node within a straight-line distance of 1000 pixels from the center of the node.
 * In the case of `Copy All Connections`, it duplicates existing outputs, but since it does not allow duplicate connections, the existing output connections of the original node are disconnected.
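
For the `Copy All Connections` / `Copy Input Connections` double-click actions, both sides describe the same target rule: the nearest node within a straight-line distance of 1000 pixels from the center of the clicked node. A sketch of that selection, assuming a simple hypothetical `Node` record with a top-left position and size, and assuming the distance is measured center to center (the README only says "from the center of the node"):

```python
import math
from dataclasses import dataclass
from typing import List, Optional

MAX_DISTANCE = 1000.0  # pixels, per the README


@dataclass
class Node:
    """Hypothetical node record: top-left corner plus size, in canvas pixels."""
    id: int
    x: float
    y: float
    width: float
    height: float

    def center(self):
        return (self.x + self.width / 2, self.y + self.height / 2)


def nearest_node(target: Node, nodes: List[Node]) -> Optional[Node]:
    """Closest other node by center-to-center distance, or None if none is within range."""
    tx, ty = target.center()
    best, best_dist = None, math.inf
    for node in nodes:
        if node.id == target.id:
            continue  # never pick the double-clicked node itself
        nx, ny = node.center()
        dist = math.hypot(nx - tx, ny - ty)
        if dist <= MAX_DISTANCE and dist < best_dist:
            best, best_dist = node, dist
    return best
```

The real frontend works on LiteGraph node objects, whose API is not shown in this diff; the record above only captures the geometry the rule needs.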

@@ -298,17 +298,17 @@ When you run the `scan.sh` script:
 
 * It updates the `github-stats.json`.
 * This uses the GitHub API, so set your token with `export GITHUB_TOKEN=your_token_here` to avoid quickly reaching the rate limit and malfunctioning.
-* To skip this step, add the `--skip-stat-update` option.
+* To skip this step, add the `--skip-update-stat` option.
 
 * The `--skip-all` option applies both `--skip-update` and `--skip-stat-update`.
 
 
 ## Troubleshooting
-* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the `<USER_DIRECTORY>/__manager/config.ini` file that is generated.
+* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini` file that is generated.
 * If updating ComfyUI-Manager itself fails, please go to the **ComfyUI-Manager** directory and execute the command `git update-ref refs/remotes/origin/main a361cc1 && git fetch --all && git pull`.
-* If you encounter the error message `Overlapped Object has pending operation at deallocation on ComfyUI Manager load` under Windows
+* If you encounter the error message `Overlapped Object has pending operation at deallocation on Comfyui Manager load` under Windows
 * Edit `config.ini` file: add `windows_selector_event_loop_policy = True`
-* If the `SSL: CERTIFICATE_VERIFY_FAILED` error occurs.
+* if `SSL: CERTIFICATE_VERIFY_FAILED` error is occured.
 * Edit `config.ini` file: add `bypass_ssl = True`
 
 
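
Several README lines in this diff point at `config.ini` keys: `file_logging`, `git_exe`, `windows_selector_event_loop_policy` and `bypass_ssl`. A sketch of reading them with Python's `configparser`, assuming the keys live in a `[default]` section (an assumption about the file layout) and assuming `False` defaults for the two troubleshooting switches; only `file_logging` defaulting to enabled comes from the README.

```python
import configparser

# Example config.ini content; the [default] section name is an assumption.
SAMPLE_CONFIG = """\
[default]
git_exe =
file_logging = False
windows_selector_event_loop_policy = True
bypass_ssl = True
"""


def read_manager_options(text: str) -> dict:
    """Parse the config.ini keys mentioned in the README into Python values."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    if not parser.has_section("default"):
        parser.add_section("default")  # treat a missing section as "all defaults"
    section = parser["default"]
    return {
        # Empty or missing git_exe is read as "use the system git".
        "git_exe": section.get("git_exe", "").strip() or None,
        # README: file logging is enabled by default.
        "file_logging": section.getboolean("file_logging", fallback=True),
        "windows_selector_event_loop_policy": section.getboolean(
            "windows_selector_event_loop_policy", fallback=False),
        "bypass_ssl": section.getboolean("bypass_ssl", fallback=False),
    }


print(read_manager_options(SAMPLE_CONFIG))
```

`getboolean` accepts the `True`/`False` spelling used in the README as well as `yes`/`no`, `on`/`off` and `1`/`0`.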

--- a/check.sh
+++ b/check.sh

@@ -37,7 +37,7 @@ find ~/.tmp/default -name "*.py" -print0 | xargs -0 grep -E "crypto|^_A="
 
 echo
 echo CHECK3
-find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*[^#]*https\?:"
-find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*[^#].*\.whl"
+find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*https\\?:"
+find ~/.tmp/default -name "requirements.txt" | xargs grep "\.whl"
 
 echo
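
The `CHECK3` change swaps both `requirements.txt` greps. Inside double quotes, `"https\\?"` reaches grep as `https\?`, so both sides use the same optional-`s` syntax and the real difference is the `[^#]` part. The sketch below translates the patterns from GNU grep basic-regex syntax to Python `re` (`\?` becomes `?`) and runs them over a few made-up requirement lines. It suggests the manager-v4 patterns also catch URLs that follow a package name and skip comments that start in column 0, while an indented comment can still slip through `^\s*[^#].*\.whl`, because `[^#]` is free to match one of the leading spaces.

```python
import re

# manager-v4 (left) patterns, translated from GNU grep basic regex to Python syntax.
LEFT_URL = re.compile(r"^\s*[^#]*https?:")
LEFT_WHL = re.compile(r"^\s*[^#].*\.whl")
# draft-v4-s (right) patterns.
RIGHT_URL = re.compile(r"^\s*https?:")
RIGHT_WHL = re.compile(r"\.whl")

# Made-up requirements.txt lines, indexed 0..4.
SAMPLE = [
    "torch",
    "mypkg @ https://example.com/mypkg-1.0.tar.gz",
    "https://example.com/wheels/other-1.0-py3-none-any.whl",
    "# https://example.com/commented.whl",
    "  # https://example.com/indented.whl",
]


def flagged(pattern, lines):
    """Indices of lines the pattern matches, like grep without -o."""
    return [i for i, line in enumerate(lines) if pattern.search(line)]


for name, pattern in [("LEFT_URL", LEFT_URL), ("RIGHT_URL", RIGHT_URL),
                      ("LEFT_WHL", LEFT_WHL), ("RIGHT_WHL", RIGHT_WHL)]:
    print(name, flagged(pattern, SAMPLE))
```

Running it prints `LEFT_URL [1, 2]`, `RIGHT_URL [2]`, `LEFT_WHL [2, 4]` and `RIGHT_WHL [2, 3, 4]`.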

@@ -15,7 +15,7 @@ def start():
 logging.info('[START] ComfyUI-Manager')
 from .common import cm_global # noqa: F401
 
-if args.enable_manager:
+if not args.disable_manager:
 if args.enable_manager_legacy_ui:
 try:
 from .legacy import manager_server # noqa: F401

@@ -42,7 +42,7 @@ def should_be_disabled(fullpath:str) -> bool:
 1. Disables the legacy ComfyUI-Manager.
 2. The blocklist can be expanded later based on policies.
 """
-if args.enable_manager:
+if not args.disable_manager:
 # In cases where installation is done via a zip archive, the directory name may not be comfyui-manager, and it may not contain a git repository.
 # It is assumed that any installed legacy ComfyUI-Manager will have at least 'comfyui-manager' in its directory name.
 dir_name = os.path.basename(fullpath).lower()
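
These two hunks flip the manager from opt-in (`if args.enable_manager:`) to opt-out (`if not args.disable_manager:`), and the second one guards the legacy-install check described in the comments. A sketch of both behaviours; the `--enable-manager`/`--disable-manager` parser is a hypothetical stand-in inferred from the attribute names, not ComfyUI's actual argument definitions.

```python
import argparse
import os


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser exposing both flag styles seen in the diff."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--enable-manager", action="store_true")
    parser.add_argument("--disable-manager", action="store_true")
    return parser


def manager_active(args: argparse.Namespace, opt_in: bool) -> bool:
    # opt_in=True mirrors `if args.enable_manager:` (manager-v4);
    # opt_in=False mirrors `if not args.disable_manager:` (draft-v4-s).
    return args.enable_manager if opt_in else not args.disable_manager


def looks_like_legacy_manager(fullpath: str) -> bool:
    # Same heuristic as the comments: a legacy install is assumed to have
    # 'comfyui-manager' somewhere in its lower-cased directory name.
    dir_name = os.path.basename(fullpath).lower()
    return "comfyui-manager" in dir_name


args = build_parser().parse_args([])
print(manager_active(args, opt_in=True), manager_active(args, opt_in=False))  # False True
```

With no flags at all, the opt-in style leaves the manager off while the opt-out style turns it on. Note also that `os.path.basename` returns an empty string for a path with a trailing slash, so the name check relies on callers passing paths without one.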

@@ -11,7 +11,6 @@ from . import manager_util
 
 import requests
 import toml
-import logging
 
 base_url = "https://api.comfy.org"
 

@@ -24,7 +23,7 @@ async def get_cnr_data(cache_mode=True, dont_wait=True):
 try:
 return await _get_cnr_data(cache_mode, dont_wait)
 except asyncio.TimeoutError:
-logging.info("A timeout occurred during the fetch process from ComfyRegistry.")
+print("A timeout occurred during the fetch process from ComfyRegistry.")
 return await _get_cnr_data(cache_mode=True, dont_wait=True) # timeout fallback
 
 async def _get_cnr_data(cache_mode=True, dont_wait=True):
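
`get_cnr_data` retries in cache mode when the first fetch raises `asyncio.TimeoutError`, and the manager-v4 side reports the timeout through `logging` rather than `print`. A self-contained sketch of the same fallback shape; `fetch_registry`, its `delay` parameter and the cached data are hypothetical, and `asyncio.wait_for` is only one way such a timeout could be raised (the diff does not show where the real timeout comes from).

```python
import asyncio
import logging

logger = logging.getLogger("cnr-sketch")

CACHED_NODES = {"example-pack": {"id": "example-pack"}}  # hypothetical cached data


async def fetch_registry(cache_mode: bool, dont_wait: bool, delay: float) -> dict:
    """Hypothetical fetch: the cached path returns at once, the network path takes `delay` seconds."""
    if cache_mode and dont_wait:
        return CACHED_NODES
    await asyncio.sleep(delay)
    return {"fresh-pack": {"id": "fresh-pack"}}


async def get_nodes(delay: float, timeout: float = 0.05) -> dict:
    try:
        return await asyncio.wait_for(fetch_registry(False, False, delay), timeout)
    except asyncio.TimeoutError:
        logger.info("A timeout occurred during the fetch process from ComfyRegistry.")
        return await fetch_registry(cache_mode=True, dont_wait=True, delay=0)  # timeout fallback


print(asyncio.run(get_nodes(delay=1.0)))  # falls back to CACHED_NODES
```

Routing the message through a named logger lets the host application decide whether it is shown, which is the practical difference between the two sides of this hunk.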

@@ -80,12 +79,12 @@ async def _get_cnr_data(cache_mode=True, dont_wait=True):
 full_nodes[x['id']] = x
 
 if page % 5 == 0:
-logging.info(f"FETCH ComfyRegistry Data: {page}/{sub_json_obj['totalPages']}")
+print(f"FETCH ComfyRegistry Data: {page}/{sub_json_obj['totalPages']}")
 
 page += 1
 time.sleep(0.5)
 
-logging.info("FETCH ComfyRegistry Data [DONE]")
+print("FETCH ComfyRegistry Data [DONE]")
 
 for v in full_nodes.values():
 if 'latest_version' not in v:

@@ -101,7 +100,7 @@ async def _get_cnr_data(cache_mode=True, dont_wait=True):
 if cache_state == 'not-cached':
 return {}
 else:
-logging.info("[ComfyUI-Manager] The ComfyRegistry cache update is still in progress, so an outdated cache is being used.")
+print("[ComfyUI-Manager] The ComfyRegistry cache update is still in progress, so an outdated cache is being used.")
 with open(manager_util.get_cache_path(uri), 'r', encoding="UTF-8", errors="ignore") as json_file:
 return json.load(json_file)['nodes']
 

@@ -115,7 +114,7 @@ async def _get_cnr_data(cache_mode=True, dont_wait=True):
 return json_obj['nodes']
 except Exception:
 res = {}
-logging.warning("Cannot connect to comfyregistry.")
+print("Cannot connect to comfyregistry.")
 finally:
 if cache_mode:
 is_cache_loading = False

@@ -212,7 +211,6 @@ def read_cnr_info(fullpath):
 
 project = data.get('project', {})
 name = project.get('name').strip().lower()
-original_name = project.get('name')
 
 # normalize version
 # for example: 2.5 -> 2.5.0

@@ -224,7 +222,6 @@ def read_cnr_info(fullpath):
 if name and version: # repository is optional
 return {
 "id": name,
-"original_name": original_name,
 "version": version,
 "url": repository
 }
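
These two hunks drop `original_name` from what `read_cnr_info` returns, leaving the lower-cased `id`, the `version` and the optional `url`. A sketch of that return shape built from an already-parsed `[project]` table. The three-part padding rule is inferred from the `2.5 -> 2.5.0` comment, returning `None` when `name` or `version` is missing is an assumption about code the diff does not show, and `cnr_info`/`normalize_version` are hypothetical names.

```python
def normalize_version(version: str) -> str:
    """Pad to three components, e.g. '2.5' -> '2.5.0' (rule assumed from the comment)."""
    parts = version.strip().split(".")
    while len(parts) < 3:
        parts.append("0")
    return ".".join(parts)


def cnr_info(project: dict, repository=None):
    """Build the draft-v4-s-style result, which has no 'original_name' key."""
    # `or ""` guards against a missing name; the diff calls .strip() on it directly.
    name = (project.get("name") or "").strip().lower()
    version = project.get("version")
    if not (name and version):  # repository is optional
        return None
    return {"id": name, "version": normalize_version(version), "url": repository}


print(cnr_info({"name": " ComfyUI-Example ", "version": "2.5"}))
```

The guard matters because `project.get('name').strip()` in the context lines raises `AttributeError` when `[project]` has no `name`.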

@@ -241,7 +238,7 @@ def generate_cnr_id(fullpath, cnr_id):
 with open(cnr_id_path, "w") as f:
 return f.write(cnr_id)
 except Exception:
-logging.error(f"[ComfyUI Manager] unable to create file: {cnr_id_path}")
+print(f"[ComfyUI Manager] unable to create file: {cnr_id_path}")
 
 
 def read_cnr_id(fullpath):

@@ -34,7 +34,7 @@ manager_pip_blacklist_path = None
 manager_components_path = None
 manager_batch_history_path = None
 
-def update_user_directory(manager_dir):
+def update_user_directory(user_dir):
 global manager_files_path
 global manager_config_path
 global manager_channel_list_path

@@ -45,7 +45,7 @@ def update_user_directory(manager_dir):
 global manager_components_path
 global manager_batch_history_path
 
-manager_files_path = manager_dir
+manager_files_path = os.path.abspath(os.path.join(user_dir, 'default', 'ComfyUI-Manager'))
 if not os.path.exists(manager_files_path):
 os.makedirs(manager_files_path)
 

@@ -73,7 +73,7 @@ def update_user_directory(manager_dir):
 
 try:
 import folder_paths
-update_user_directory(folder_paths.get_system_user_directory("manager"))
+update_user_directory(folder_paths.get_user_directory())
 
 except Exception:
 # fallback:

@@ -15,8 +15,7 @@ import re
 import logging
 import platform
 import shlex
-from functools import lru_cache
-
+from packaging import version
 
 cache_lock = threading.Lock()
 session_lock = threading.Lock()

@@ -39,64 +38,18 @@ def add_python_path_to_env():
 os.environ['PATH'] = os.path.dirname(sys.executable)+sep+os.environ['PATH']
 
 
-@lru_cache(maxsize=2)
-def get_pip_cmd(force_uv=False):
-"""
-Get the base pip command, with automatic fallback to uv if pip is unavailable.
-
-Args:
-force_uv (bool): If True, use uv directly without trying pip
-
-Returns:
-list: Base command for pip operations
-"""
-embedded = 'python_embeded' in sys.executable
-
-# Try pip first (unless forcing uv)
-if not force_uv:
-try:
-test_cmd = [sys.executable] + (['-s'] if embedded else []) + ['-m', 'pip', '--version']
-subprocess.check_output(test_cmd, stderr=subprocess.DEVNULL, timeout=5)
-return [sys.executable] + (['-s'] if embedded else []) + ['-m', 'pip']
-except Exception:
-logging.warning("[ComfyUI-Manager] python -m pip not available. Falling back to uv.")
-
-# Try uv (either forced or pip failed)
-import shutil
-
-# Try uv as Python module
-try:
-test_cmd = [sys.executable] + (['-s'] if embedded else []) + ['-m', 'uv', '--version']
-subprocess.check_output(test_cmd, stderr=subprocess.DEVNULL, timeout=5)
-logging.info("[ComfyUI-Manager] Using uv as Python module for pip operations.")
-return [sys.executable] + (['-s'] if embedded else []) + ['-m', 'uv', 'pip']
-except Exception:
-pass
-
-# Try standalone uv
-if shutil.which('uv'):
-logging.info("[ComfyUI-Manager] Using standalone uv for pip operations.")
-return ['uv', 'pip']
-
-# Nothing worked
-logging.error("[ComfyUI-Manager] Neither python -m pip nor uv are available. Cannot proceed with package operations.")
-raise Exception("Neither pip nor uv are available for package management")
-
-
 def make_pip_cmd(cmd):
-"""
-Create a pip command by combining the cached base pip command with the given arguments.
-
-Args:
-cmd (list): List of pip command arguments (e.g., ['install', 'package'])
-
-Returns:
-list: Complete command list ready for subprocess execution
-"""
-global use_uv
-base_cmd = get_pip_cmd(force_uv=use_uv)
-return base_cmd + cmd
-
+if 'python_embeded' in sys.executable:
+if use_uv:
+return [sys.executable, '-s', '-m', 'uv', 'pip'] + cmd
+else:
+return [sys.executable, '-s', '-m', 'pip'] + cmd
+else:
+# FIXED: https://github.com/ltdrdata/ComfyUI-Manager/issues/1667
+if use_uv:
+return [sys.executable, '-m', 'uv', 'pip'] + cmd
+else:
+return [sys.executable, '-m', 'pip'] + cmd
 
 # DON'T USE StrictVersion - cannot handle pre_release version
 # try:
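
The manager-v4 side resolves the installer once through a cached `get_pip_cmd()` that tries `python -m pip`, then `python -m uv`, then a standalone `uv`, whereas draft-v4-s builds the command directly from the `use_uv` flag. A sketch of the same probing order with the probe and `which` lookup injected, so it can be exercised without touching the real environment; `pick_pip_base` and `default_probe` are hypothetical names, and `lru_cache` is left out because the injected callables would become part of the cache key.

```python
import shutil
import subprocess
import sys
from typing import Callable, List, Optional


def default_probe(cmd: List[str]) -> bool:
    """Return True if `cmd` runs successfully (mirrors the `--version` checks in the diff)."""
    try:
        subprocess.check_output(cmd, stderr=subprocess.DEVNULL, timeout=5)
        return True
    except Exception:
        return False


def pick_pip_base(force_uv: bool = False,
                  probe: Callable[[List[str]], bool] = default_probe,
                  which: Callable[[str], Optional[str]] = shutil.which) -> List[str]:
    # '-s' (skip user site-packages) only for the embedded Python, as in the diff.
    python = [sys.executable] + (["-s"] if "python_embeded" in sys.executable else [])
    # 1. python -m pip, unless uv is forced
    if not force_uv and probe(python + ["-m", "pip", "--version"]):
        return python + ["-m", "pip"]
    # 2. uv installed as a Python module
    if probe(python + ["-m", "uv", "--version"]):
        return python + ["-m", "uv", "pip"]
    # 3. standalone uv on PATH
    if which("uv"):
        return ["uv", "pip"]
    raise RuntimeError("Neither pip nor uv are available for package management")
```

A caller would then build the full command as `pick_pip_base(force_uv=use_uv) + ["install", "some-package"]`. The sketch raises `RuntimeError` where the removed code raises a bare `Exception`.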

@@ -105,62 +58,32 @@ def make_pip_cmd(cmd):
 # print(f"[ComfyUI-Manager] 'distutils' package not found. Activating fallback mode for compatibility.")
 class StrictVersion:
 def __init__(self, version_string):
+self.obj = version.parse(version_string)
 self.version_string = version_string
-self.major = 0
-self.minor = 0
-self.patch = 0
-self.pre_release = None
-self.parse_version_string()
-
-def parse_version_string(self):
-parts = self.version_string.split('.')
-if not parts:
-raise ValueError("Version string must not be empty")
-
-self.major = int(parts[0])
-self.minor = int(parts[1]) if len(parts) > 1 else 0
-self.patch = int(parts[2]) if len(parts) > 2 else 0
-
-# Handling pre-release versions if present
-if len(parts) > 3:
-self.pre_release = parts[3]
+self.major = self.obj.major
+self.minor = self.obj.minor
+self.patch = self.obj.micro
 
 def __str__(self):
-version = f"{self.major}.{self.minor}.{self.patch}"
-if self.pre_release:
-version += f"-{self.pre_release}"
-return version
+return self.version_string
 
 def __eq__(self, other):
-return (self.major, self.minor, self.patch, self.pre_release) == \
-(other.major, other.minor, other.patch, other.pre_release)
+return self.obj == other.obj
 
 def __lt__(self, other):
-if (self.major, self.minor, self.patch) == (other.major, other.minor, other.patch):
-return self.pre_release_compare(self.pre_release, other.pre_release) < 0
-return (self.major, self.minor, self.patch) < (other.major, other.minor, other.patch)
-
-@staticmethod
-def pre_release_compare(pre1, pre2):
-if pre1 == pre2:
-return 0
-if pre1 is None:
-return 1
-if pre2 is None:
-return -1
-return -1 if pre1 < pre2 else 1
+return self.obj < other.obj
 
 def __le__(self, other):
-return self == other or self < other
+return self.obj == other.obj or self.obj < other.obj
 
 def __gt__(self, other):
-return not self <= other
+return not self.obj <= other.obj
 
 def __ge__(self, other):
-return not self < other
+return not self.obj < other.obj
 
 def __ne__(self, other):
-return not self == other
+return not self.obj == other.obj
 
 
 def simple_hash(input_string):
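
The manager-v4 side keeps a hand-written `StrictVersion` that splits on dots and treats an optional fourth field as a pre-release tag ordered before the plain release, while draft-v4-s delegates every comparison to `packaging.version.parse`. A compact sketch of the hand-written ordering rule using `functools.total_ordering` and a sort key; `SimpleVersion` is a hypothetical name, and it mirrors only the rules visible in the removed lines.

```python
from functools import total_ordering


@total_ordering
class SimpleVersion:
    """Dot-separated major.minor.patch plus an optional fourth field used as a pre-release tag."""

    def __init__(self, version_string: str):
        parts = version_string.split(".")
        self.major = int(parts[0])
        self.minor = int(parts[1]) if len(parts) > 1 else 0
        self.patch = int(parts[2]) if len(parts) > 2 else 0
        self.pre_release = parts[3] if len(parts) > 3 else None

    def _key(self):
        # A release (no tag) sorts after any pre-release with the same numbers;
        # tags compare as plain strings, like the removed pre_release_compare.
        is_release = self.pre_release is None
        return (self.major, self.minor, self.patch, is_release, self.pre_release or "")

    def __eq__(self, other):
        return self._key() == other._key()

    def __lt__(self, other):
        return self._key() < other._key()


print(SimpleVersion("1.0.0.beta") < SimpleVersion("1.0.0"))  # True
```

Under these rules a dash-separated tag such as `1.0.0-beta` fails outright, because `int("0-beta")` raises `ValueError`; a PEP 440 parser like the one used on the right-hand side accepts that spelling.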

--- /dev/null
+++ b/comfyui_manager/common/snapshot_util.py

@@ -0,0 +1,136 @@
+from . import manager_util
+from . import git_utils
+import json
+import yaml
+import logging
+
+def read_snapshot(snapshot_path):
+try:
+
+with open(snapshot_path, 'r', encoding="UTF-8") as snapshot_file:
+if snapshot_path.endswith('.json'):
+info = json.load(snapshot_file)
+elif snapshot_path.endswith('.yaml'):
+info = yaml.load(snapshot_file, Loader=yaml.SafeLoader)
+info = info['custom_nodes']
+
+return info
+except Exception as e:
+logging.warning(f"Failed to read snapshot file: {snapshot_path}\nError: {e}")
+
+return None
+
+
+def diff_snapshot(a, b):
+if not a or not b:
+return None
+
+nodepack_diff = {
+'added': {},
+'removed': [],
+'upgraded': {},
+'downgraded': {},
+'changed': []
+}
+
+pip_diff = {
+'added': {},
+'upgraded': {},
+'downgraded': {}
+}
+
+# check: comfyui
+if a.get('comfyui') != b.get('comfyui'):
+nodepack_diff['changed'].append('comfyui')
+
+# check: cnr nodes
+a_cnrs = a.get('cnr_custom_nodes', {})
+b_cnrs = b.get('cnr_custom_nodes', {})
+
+if 'comfyui-manager' in a_cnrs:
+del a_cnrs['comfyui-manager']
+if 'comfyui-manager' in b_cnrs:
+del b_cnrs['comfyui-manager']
+
+for k, v in a_cnrs.items():
+if k not in b_cnrs.keys():
+nodepack_diff['removed'].append(k)
+elif a_cnrs[k] != b_cnrs[k]:
+a_ver = manager_util.StrictVersion(a_cnrs[k])
+b_ver = manager_util.StrictVersion(b_cnrs[k])
+if a_ver < b_ver:
+nodepack_diff['upgraded'][k] = {'from': a_cnrs[k], 'to': b_cnrs[k]}
+elif a_ver > b_ver:
+nodepack_diff['downgraded'][k] = {'from': a_cnrs[k], 'to': b_cnrs[k]}

|

||||||

|

added_cnrs = set(b_cnrs.keys()) - set(a_cnrs.keys())

|

||||||

|

for k in added_cnrs:

|

||||||

|

nodepack_diff['added'][k] = b_cnrs[k]

|

||||||

|

|

||||||

|

# check: git custom nodes

|

||||||

|

a_gits = a.get('git_custom_nodes', {})

|

||||||

|

b_gits = b.get('git_custom_nodes', {})

|

||||||

|

|

||||||

|

a_gits = {git_utils.normalize_url(k): v for k, v in a_gits.items() if k.lower() != 'comfyui-manager'}

|

||||||

|

b_gits = {git_utils.normalize_url(k): v for k, v in b_gits.items() if k.lower() != 'comfyui-manager'}

|

||||||

|

|

||||||

|

for k, v in a_gits.items():

|

||||||

|

if k not in b_gits.keys():

|

||||||

|

nodepack_diff['removed'].append(k)

|

||||||

|

elif not v['disabled'] and b_gits[k]['disabled']:

|

||||||

|

nodepack_diff['removed'].append(k)

|

||||||

|

elif v['disabled'] and not b_gits[k]['disabled']:

|

||||||

|

nodepack_diff['added'].append(k)

|

||||||

|

elif v['hash'] != b_gits[k]['hash']:

|

||||||

|

a_date = v.get('commit_timestamp')

|

||||||

|

b_date = b_gits[k].get('commit_timestamp')

|

||||||

|

if a_date is not None and b_date is not None:

|

||||||

|

if a_date < b_date:

|

||||||

|

nodepack_diff['upgraded'].append(k)

|

||||||

|

elif a_date > b_date:

|

||||||

|

nodepack_diff['downgraded'].append(k)

|

||||||

|

else:

|

||||||

|

nodepack_diff['changed'].append(k)

|

||||||

|

|

||||||

|

# check: pip packages

|

||||||

|

a_pip = a.get('pips', {})

|

||||||

|

b_pip = b.get('pips', {})

|

||||||

|

for k, v in a_pip.items():

|

||||||

|

if '==' in k:

|

||||||

|

package_name, version = k.split('==', 1)

|

||||||

|

else:

|

||||||

|

package_name, version = k, None

|

||||||

|

|

||||||

|

for k2, v2 in b_pip.items():

|

||||||

|

if '==' in k2:

|

||||||

|

package_name2, version2 = k2.split('==', 1)

|

||||||

|

else:

|

||||||

|

package_name2, version2 = k2, None

|

||||||

|

|

||||||

|

if package_name.lower() == package_name2.lower():

|

||||||

|

if version != version2:

|

||||||

|

a_ver = manager_util.StrictVersion(version) if version else None

|

||||||

|

b_ver = manager_util.StrictVersion(version2) if version2 else None

|

||||||

|

if a_ver and b_ver:

|

||||||

|

if a_ver < b_ver:

|

||||||

|

pip_diff['upgraded'][package_name] = {'from': version, 'to': version2}

|

||||||

|

elif a_ver > b_ver:

|

||||||

|

pip_diff['downgraded'][package_name] = {'from': version, 'to': version2}

|

||||||

|

elif not a_ver and b_ver:

|

||||||

|

pip_diff['added'][package_name] = version2

|

||||||

|

|

||||||

|

a_pip_names = {k.split('==', 1)[0].lower() for k in a_pip.keys()}

|

||||||

|

|

||||||

|

for k in b_pip.keys():

|

||||||

|

if '==' in k:

|

||||||

|

package_name = k.split('==', 1)[0]

|

||||||

|

package_version = k.split('==', 1)[1]

|

||||||

|

else:

|

||||||

|

package_name = k

|

||||||

|

package_version = None

|

||||||

|

|

||||||

|

if package_name.lower() not in a_pip_names:

|

||||||

|

if package_version:

|

||||||

|

pip_diff['added'][package_name] = package_version

|

||||||

|

|

||||||

|

return {'nodepack_diff': nodepack_diff, 'pip_diff': pip_diff}

|

||||||
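Two details of the new `diff_snapshot` are worth noting. `a.get('cnr_custom_nodes', {})` returns the snapshot's own dict, so the `del` of `'comfyui-manager'` also removes that entry from the caller's snapshot. In the git-node branch, `nodepack_diff['added']`, `['upgraded']` and `['downgraded']` are initialised as dicts, so the `.append(k)` calls there would raise `AttributeError` if reached. The sketch below restates only the CNR and pip comparisons, with consistent value types and without mutating its inputs. `parse_version` is a hypothetical stand-in for `manager_util.StrictVersion` that handles plain dotted integers only, and indexing pip pins by lower-cased name replaces the nested loop with one lookup per package:

```python
from typing import Dict, Iterable, Optional, Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    # Hypothetical stand-in for manager_util.StrictVersion: plain dotted integers only.
    return tuple(int(part) for part in v.split('.'))


def diff_cnr_nodes(a_cnrs: Dict[str, str], b_cnrs: Dict[str, str]) -> dict:
    # Build filtered copies instead of using `del`, so the input snapshots are untouched.
    a = {k: v for k, v in a_cnrs.items() if k != 'comfyui-manager'}
    b = {k: v for k, v in b_cnrs.items() if k != 'comfyui-manager'}
    diff = {'added': {}, 'removed': [], 'upgraded': {}, 'downgraded': {}}
    for k, v in a.items():
        if k not in b:
            diff['removed'].append(k)
        elif v != b[k]:
            a_ver, b_ver = parse_version(v), parse_version(b[k])
            if a_ver < b_ver:
                diff['upgraded'][k] = {'from': v, 'to': b[k]}
            elif a_ver > b_ver:
                diff['downgraded'][k] = {'from': v, 'to': b[k]}
    for k in b.keys() - a.keys():
        diff['added'][k] = b[k]
    return diff


def split_pin(spec: str) -> Tuple[str, Optional[str]]:
    # "numpy==1.26.4" -> ("numpy", "1.26.4"); "numpy" -> ("numpy", None)
    name, sep, version = spec.partition('==')
    return name, (version if sep else None)


def index_pins(pips: Iterable[str]) -> Dict[str, Tuple[str, Optional[str]]]:
    # Key by lower-cased name so "Pillow" and "pillow" match, as in the hunk.
    index = {}
    for spec in pips:
        name, version = split_pin(spec)
        index[name.lower()] = (name, version)
    return index


def diff_pips(a_pip: Iterable[str], b_pip: Iterable[str]) -> dict:
    a, b = index_pins(a_pip), index_pins(b_pip)
    diff = {'added': {}, 'upgraded': {}, 'downgraded': {}}
    for key, (name2, ver2) in b.items():
        if ver2 is None:
            continue  # unpinned on the "to" side: nothing to report
        if key not in a:
            diff['added'][name2] = ver2
            continue
        name, ver = a[key]
        if ver is None:
            diff['added'][name] = ver2  # pinned only on the "to" side
        elif parse_version(ver) < parse_version(ver2):
            diff['upgraded'][name] = {'from': ver, 'to': ver2}
        elif parse_version(ver) > parse_version(ver2):
            diff['downgraded'][name] = {'from': ver, 'to': ver2}
    return diff
```

`diff_pips` iterates keys only, so it accepts the `pips` dicts directly; their values are ignored, as in the hunk.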

@@ -30,12 +30,6 @@ from .generated_models import (
 InstalledModelInfo,
 ComfyUIVersionInfo,
 
-# Import Fail Info Models
-ImportFailInfoBulkRequest,
-ImportFailInfoBulkResponse,
-ImportFailInfoItem,
-ImportFailInfoItem1,
-
 # Other models
 OperationType,
 OperationResult,

@@ -94,12 +88,6 @@ __all__ = [
 "InstalledModelInfo",
 "ComfyUIVersionInfo",
 
-# Import Fail Info Models
-"ImportFailInfoBulkRequest",
-"ImportFailInfoBulkResponse",
-"ImportFailInfoItem",
-"ImportFailInfoItem1",
-
 # Other models
 "OperationType",
 "OperationResult",

@@ -1,6 +1,6 @@
 # generated by datamodel-codegen:
 # filename: openapi.yaml
-# timestamp: 2025-07-31T04:52:26+00:00
+# timestamp: 2025-06-27T04:01:45+00:00
 
 from __future__ import annotations
 

@@ -454,24 +454,6 @@ class BatchExecutionRecord(BaseModel):
 )
 
 
-class ImportFailInfoBulkRequest(BaseModel):
-cnr_ids: Optional[List[str]] = Field(
-None, description="A list of CNR IDs to check."
-)
-urls: Optional[List[str]] = Field(
-None, description="A list of repository URLs to check."
-)
-
-
-class ImportFailInfoItem1(BaseModel):
-error: Optional[str] = None
-traceback: Optional[str] = None
-
-
-class ImportFailInfoItem(RootModel[Optional[ImportFailInfoItem1]]):
-root: Optional[ImportFailInfoItem1]
-
-
 class QueueTaskItem(BaseModel):
 ui_id: str = Field(..., description="Unique identifier for the task")
 client_id: str = Field(..., description="Client identifier that initiated the task")

@@ -555,7 +537,3 @@ class HistoryResponse(BaseModel):
 history: Optional[Dict[str, TaskHistoryItem]] = Field(
 None, description="Map of task IDs to their history items"
 )
-
-
-class ImportFailInfoBulkResponse(RootModel[Optional[Dict[str, ImportFailInfoItem]]]):
-root: Optional[Dict[str, ImportFailInfoItem]] = None

@@ -41,12 +41,11 @@ from ..common.enums import NetworkMode, SecurityLevel, DBMode
 from ..common import context
 
 
-version_code = [4, 0, 3]
+version_code = [4, 0]
 version_str = f"V{version_code[0]}.{version_code[1]}" + (f'.{version_code[2]}' if len(version_code) > 2 else '')
 
 
 DEFAULT_CHANNEL = "https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main"
-DEFAULT_CHANNEL_LEGACY = "https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main"
 
 
 default_custom_nodes_path = None
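With `version_code` cut to `[4, 0]`, the unchanged `version_str` expression now yields `V4.0` instead of `V4.0.3`, because the patch component is appended only when the list has a third element. Wrapping the same expression in a function (the name `format_version` is ours, not the project's) makes both cases easy to check:

```python
def format_version(version_code):
    # Same expression as version_str in the hunk above; the patch part is optional.
    return f"V{version_code[0]}.{version_code[1]}" + (f".{version_code[2]}" if len(version_code) > 2 else "")


print(format_version([4, 0]))     # V4.0
print(format_version([4, 0, 3]))  # V4.0.3
```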

@@ -154,8 +153,14 @@ def check_invalid_nodes():
 cached_config = None
 js_path = None
 
+comfy_ui_required_revision = 1930
+comfy_ui_required_commit_datetime = datetime(2024, 1, 24, 0, 0, 0)
+
+comfy_ui_revision = "Unknown"
+comfy_ui_commit_datetime = datetime(1900, 1, 1, 0, 0, 0)
+
 channel_dict = None
-valid_channels = {'default', 'local', DEFAULT_CHANNEL, DEFAULT_CHANNEL_LEGACY}
+valid_channels = {'default', 'local'}
 channel_list = None
 
 

@@ -1003,6 +1008,7 @@ class UnifiedManager:
 """
 
 result = ManagedResult('enable')
+
 if 'comfyui-manager' in node_id.lower():
 return result.fail(f"ignored: enabling '{node_id}'")
 

@@ -1473,7 +1479,7 @@ def identify_node_pack_from_path(fullpath):
 # cnr
 cnr = cnr_utils.read_cnr_info(fullpath)
 if cnr is not None:
-return module_name, cnr['version'], cnr['original_name'], None
+return module_name, cnr['version'], cnr['id'], None
 
 return None
 else:

@@ -1523,10 +1529,7 @@ def get_installed_node_packs():
 if info is None:
 continue
 
-# NOTE: don't add disabled nodepack if there is enabled nodepack
-original_name = info[0].split('@')[0]
-if original_name not in res:
-res[info[0]] = { 'ver': info[1], 'cnr_id': info[2], 'aux_id': info[3], 'enabled': False }
+res[info[0]] = { 'ver': info[1], 'cnr_id': info[2], 'aux_id': info[3], 'enabled': False }
 
 return res
 
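The draft drops the guard that skipped a disabled node pack when an enabled pack with the same base name was already in `res`. The removed check derived the base name by cutting the key at the first `@`; that disabled packs are keyed as `name@version` is inferred from this `split('@')`, not shown in the hunk. A minimal sketch of the removed behaviour, using a hypothetical helper name and `info` tuples shaped `(key, ver, cnr_id, aux_id)` as in the hunk:

```python
def add_disabled_pack(res, info):
    """Record a disabled pack unless an enabled pack with the same base name exists."""
    original_name = info[0].split('@')[0]
    if original_name not in res:
        res[info[0]] = {'ver': info[1], 'cnr_id': info[2], 'aux_id': info[3], 'enabled': False}
    return res


res = {'comfyui-foo': {'ver': '1.1.0', 'cnr_id': 'comfyui-foo', 'aux_id': None, 'enabled': True}}
add_disabled_pack(res, ('comfyui-foo@1_0_0', '1.0.0', 'comfyui-foo', None))  # skipped
add_disabled_pack(res, ('comfyui-bar@2_0_0', '2.0.0', 'comfyui-bar', None))  # recorded
print(sorted(res))  # ['comfyui-bar@2_0_0', 'comfyui-foo']
```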

@@ -1783,6 +1786,16 @@ def try_install_script(url, repo_path, install_cmd, instant_execution=False):
 print(f"\n## ComfyUI-Manager: EXECUTE => {install_cmd}")
 code = manager_funcs.run_script(install_cmd, cwd=repo_path)
 
+if platform.system() != "Windows":
+try:
+if not os.environ.get('__COMFYUI_DESKTOP_VERSION__') and comfy_ui_commit_datetime.date() < comfy_ui_required_commit_datetime.date():
+print("\n\n###################################################################")
+print(f"[WARN] ComfyUI-Manager: Your ComfyUI version ({comfy_ui_revision})[{comfy_ui_commit_datetime.date()}] is too old. Please update to the latest version.")
+print("[WARN] The extension installation feature may not work properly in the current installed ComfyUI version on Windows environment.")
+print("###################################################################\n\n")
+except Exception:
+pass
+
 if code != 0:
 if url is None:
 url = os.path.dirname(repo_path)
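The added warning fires when `__COMFYUI_DESKTOP_VERSION__` is unset and the ComfyUI commit date is earlier than `comfy_ui_required_commit_datetime` (2024-01-24); only the `.date()` parts are compared. Because `comfy_ui_commit_datetime` starts as `datetime(1900, 1, 1, 0, 0, 0)`, the warning also fires if nothing updates it before this check runs. Note too that the guard is `platform.system() != "Windows"` while the message refers to a Windows environment. The condition can be isolated as a pure function; the name and the injectable `environ` parameter are ours, for testability:

```python
import os
from datetime import datetime
from typing import Mapping

# Value taken from comfy_ui_required_commit_datetime in the diff.
REQUIRED_COMMIT_DATETIME = datetime(2024, 1, 24, 0, 0, 0)


def comfyui_too_old(commit_datetime: datetime, environ: Mapping[str, str] = os.environ) -> bool:
    # ComfyUI Desktop builds skip the check entirely.
    if environ.get('__COMFYUI_DESKTOP_VERSION__'):
        return False
    # Compare calendar dates only, as the hunk does.
    return commit_datetime.date() < REQUIRED_COMMIT_DATETIME.date()
```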

@@ -1901,27 +1914,6 @@ def execute_install_script(url, repo_path, lazy_mode=False, instant_execution=Fa
 return True
 
 
-def install_manager_requirements(repo_path):
-"""
-Install packages from manager_requirements.txt if it exists.
-This is specifically for ComfyUI's manager_requirements.txt.
-"""
-manager_requirements_path = os.path.join(repo_path, "manager_requirements.txt")
-if not os.path.exists(manager_requirements_path):
-return
-
-logging.info("[ComfyUI-Manager] Installing manager_requirements.txt")
-with open(manager_requirements_path, "r") as f:
-for line in f:
-line = line.strip()
-if line and not line.startswith('#'):
-if '#' in line:
-line = line.split('#')[0].strip()
-if line:
-install_cmd = manager_util.make_pip_cmd(["install", line])
-subprocess.run(install_cmd)
-
-
 def git_repo_update_check_with(path, do_fetch=False, do_update=False, no_deps=False):
 """
 
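The removed `install_manager_requirements` treated everything after any `#` as a comment. pip's requirements-file format starts a comment only at a `#` at the beginning of a line or after whitespace, so a line such as `git+https://example.com/repo.git#egg=foo` would have been truncated by the removed code. A sketch of the line-parsing step following pip's rule (the function name is ours; installing each entry is left out):

```python
import re
from typing import Iterable, List

# A '#' starts a comment only at the start of a line or after whitespace,
# so URL fragments such as '#egg=name' survive.
_COMMENT = re.compile(r'(^|\s+)#.*$')


def parse_requirement_lines(lines: Iterable[str]) -> List[str]:
    requirements = []
    for raw in lines:
        line = _COMMENT.sub('', raw).strip()
        if line:  # skip blank and comment-only lines
            requirements.append(line)
    return requirements
```

An open file object can be passed directly, since iterating it yields lines.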

@@ -2455,7 +2447,6 @@ def update_to_stable_comfyui(repo_path):
 else:
 logging.info(f"[ComfyUI-Manager] Updating ComfyUI: {current_tag} -> {latest_tag}")
 repo.git.checkout(latest_tag)
-execute_install_script("ComfyUI", repo_path, instant_execution=False, no_deps=False)
 return 'updated', latest_tag
 except Exception:
 traceback.print_exc()

@@ -2655,8 +2646,8 @@ async def get_current_snapshot(custom_nodes_only = False):
 commit_hash = git_utils.get_commit_hash(fullpath)
 url = git_utils.git_url(fullpath)
 git_custom_nodes[url] = dict(hash=commit_hash, disabled=is_disabled)
-except Exception:
-print(f"Failed to extract snapshots for the custom node '{path}'.")
+except Exception as e:
+print(f"Failed to extract snapshots for the custom node '{path}'. / {e}")
 
 elif path.endswith('.py'):
 is_disabled = path.endswith(".py.disabled")

@@ -47,7 +47,7 @@ from ..common import manager_util
 from ..common import cm_global
 from ..common import manager_downloader
 from ..common import context
-
+from ..common import snapshot_util
 
 
 from ..data_models import (

@@ -61,7 +61,6 @@ from ..data_models import (
 ManagerMessageName,
 BatchExecutionRecord,
 ComfyUISystemState,
-ImportFailInfoBulkRequest,
 BatchOperation,
 InstalledNodeInfo,
 ComfyUIVersionInfo,

@@ -968,8 +967,6 @@ async def task_worker():
 logging.error("ComfyUI update failed")
 return "fail"
 elif res == "updated":
-core.install_manager_requirements(repo_path)
-
 if is_stable:
 logging.info("ComfyUI is updated to latest stable version.")
 return "success-stable-" + latest_tag

@@ -1596,6 +1593,46 @@ async def save_snapshot(request):
 return web.Response(status=400)
 
 
+@routes.get("/v2/snapshot/diff")
+async def get_snapshot_diff(request):
+try:
+from_id = request.rel_url.query.get("from")
+to_id = request.rel_url.query.get("to")
+
+if (from_id is not None and '..' in from_id) or (to_id is not None and '..' in to_id):
+logging.error("/v2/snapshot/diff: invalid 'from' or 'to' parameter.")
+return web.Response(status=400)
+
+if from_id is None:
+from_json = await core.get_current_snapshot()
+else:
+from_path = os.path.join(context.manager_snapshot_path, f"{from_id}.json")
+if not os.path.exists(from_path):
+logging.error(f"/v2/snapshot/diff: 'from' parameter file not found: {from_path}")
+return web.Response(status=400)
+
+from_json = snapshot_util.read_snapshot(from_path)
+
+if to_id is None:
+logging.error("/v2/snapshot/diff: 'to' parameter is required.")
+return web.Response(status=401)
+else:
+to_path = os.path.join(context.manager_snapshot_path, f"{to_id}.json")
+if not os.path.exists(to_path):
+logging.error(f"/v2/snapshot/diff: 'to' parameter file not found: {to_path}")
+return web.Response(status=400)
+
+to_json = snapshot_util.read_snapshot(to_path)
+
+return web.json_response(snapshot_util.diff_snapshot(from_json, to_json), content_type='application/json')
+
+except Exception as e:
+logging.error(f"[ComfyUI-Manager] Error in /v2/snapshot/diff: {e}")
+traceback.print_exc()
+# Return a generic error response
+return web.Response(status=400)
+
+
 def unzip_install(files):
 temp_filename = "manager-temp.zip"
 for url in files:
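The new `/v2/snapshot/diff` handler takes `from` and `to` snapshot IDs as query parameters (omitting `from` compares against the current state) and rejects IDs containing `..`. That check does not catch an absolute ID: `os.path.join` discards the base directory when a later component is absolute, so `from=/tmp/x` resolves to `/tmp/x.json`. Separately, a missing `to` returns 401 (Unauthorized), whereas the other validation failures return 400. A containment check on the resolved path is a common alternative; a sketch with a hypothetical helper name:

```python
import os
from typing import Optional


def resolve_snapshot_path(snapshot_dir: str, snapshot_id: str) -> Optional[str]:
    """Return the absolute path of <snapshot_id>.json inside snapshot_dir, or None."""
    base = os.path.realpath(snapshot_dir)
    # realpath also resolves symlinks, so a link pointing outside is caught too.
    candidate = os.path.realpath(os.path.join(base, f"{snapshot_id}.json"))
    try:
        inside = os.path.commonpath([base, candidate]) == base
    except ValueError:  # e.g. paths on different drives on Windows
        inside = False
    return candidate if inside else None
```

A `None` result would map to a 400 response in the handler, before any file is opened.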

@@ -1659,67 +1696,6 @@ async def import_fail_info(request):
 return web.Response(status=500, text="Internal server error")
 
 
-@routes.post("/v2/customnode/import_fail_info_bulk")
-async def import_fail_info_bulk(request):
-try:
-json_data = await request.json()
-
-# Validate input using Pydantic model
-request_data = ImportFailInfoBulkRequest.model_validate(json_data)
-
-# Ensure we have either cnr_ids or urls
-if not request_data.cnr_ids and not request_data.urls:
-return web.Response(
-status=400, text="Either 'cnr_ids' or 'urls' field is required"
-)
-
-await core.unified_manager.reload('cache')
-await core.unified_manager.get_custom_nodes('default', 'cache')
-
-results = {}
-
-if request_data.cnr_ids:
-for cnr_id in request_data.cnr_ids:
-module_name = core.unified_manager.get_module_name(cnr_id)
-if module_name is not None:
-info = cm_global.error_dict.get(module_name)
-if info is not None:
-# Convert error_dict format to API spec format
-results[cnr_id] = {
-'error': info.get('msg', ''),
-'traceback': info.get('traceback', '')
-}
-else:
-results[cnr_id] = None
-else:
-results[cnr_id] = None
-
-if request_data.urls:
-for url in request_data.urls:
-module_name = core.unified_manager.get_module_name(url)
-if module_name is not None:
-info = cm_global.error_dict.get(module_name)
-if info is not None:
-# Convert error_dict format to API spec format
-results[url] = {
-'error': info.get('msg', ''),
-'traceback': info.get('traceback', '')
-}
-else:
-results[url] = None
-else:
-results[url] = None
-
-# Return results directly as JSON
-return web.json_response(results, content_type="application/json")
-except ValidationError as e:
-logging.error(f"[ComfyUI-Manager] Invalid request data: {e}")
-return web.Response(status=400, text=f"Invalid request data: {e}")
-except Exception as e:
-logging.error(f"[ComfyUI-Manager] Error processing bulk import fail info: {e}")
-return web.Response(status=500, text="Internal server error")
-
-
 @routes.get("/v2/manager/queue/reset")
 async def reset_queue(request):
 logging.debug("[ComfyUI-Manager] Queue reset requested")
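The removed `import_fail_info_bulk` handler ran the same lookup twice, once for `cnr_ids` and once for `urls`: resolve the ID to a module name, look the module up in `cm_global.error_dict`, and map the stored `msg`/`traceback` to the API's `error`/`traceback` fields, with `None` when there is nothing to report. The shared step can be written once; in this sketch `get_module_name` and `error_dict` are passed in as stand-ins for `core.unified_manager.get_module_name` and `cm_global.error_dict`, and the helper name is ours:

```python
from typing import Callable, Dict, Iterable, Optional


def collect_import_failures(
    ids: Optional[Iterable[str]],
    get_module_name: Callable[[str], Optional[str]],
    error_dict: Dict[str, dict],
) -> Dict[str, Optional[dict]]:
    results: Dict[str, Optional[dict]] = {}
    for node_id in ids or []:
        module_name = get_module_name(node_id)
        info = error_dict.get(module_name) if module_name is not None else None
        if info is None:
            results[node_id] = None
        else:
            # error_dict stores the message as 'msg'; the API field is 'error'.
            results[node_id] = {'error': info.get('msg', ''), 'traceback': info.get('traceback', '')}
    return results
```

Calling it once for `cnr_ids` and once for `urls` and merging the two dicts reproduces the removed handler's response body.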

@@ -2047,7 +2023,10 @@ async def default_cache_update():
 )
 traceback.print_exc()
 
-if core.get_config()["network_mode"] != "offline":
+if (
+core.get_config()["network_mode"] != "offline"
+and not manager_util.is_manager_pip_package()
+):
 a = get_cache("custom-node-list.json")
 b = get_cache("extension-node-map.json")
 c = get_cache("model-list.json")

@@ -11,15 +11,6 @@ import hashlib
 import folder_paths
 from server import PromptServer
 import logging
-import sys
-
-
-try:
-from nio import AsyncClient, LoginResponse, UploadResponse
-matrix_nio_is_available = True
-except Exception:
-logging.warning(f"[ComfyUI-Manager] The matrix sharing feature has been disabled because the `matrix-nio` dependency is not installed.\n\tTo use this feature, please run the following command:\n\t{sys.executable} -m pip install matrix-nio\n")
-matrix_nio_is_available = False
 
 
 def extract_model_file_names(json_data):

@@ -202,14 +193,6 @@ async def get_esheep_workflow_and_images(request):
 return web.Response(status=200, text=json.dumps(data))
 
 
-@PromptServer.instance.routes.get("/v2/manager/get_matrix_dep_status")
-async def get_matrix_dep_status(request):
-if matrix_nio_is_available:
-return web.Response(status=200, text='available')
-else:
-return web.Response(status=200, text='unavailable')
-
-
 def set_matrix_auth(json_data):
 homeserver = json_data['homeserver']
 username = json_data['username']
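The draft removes both the optional `matrix-nio` import guard (a `try/except` around `from nio import ...` that set `matrix_nio_is_available`) and the `/v2/manager/get_matrix_dep_status` route that reported the flag. For comparison, `importlib.util.find_spec` can test whether a top-level module is installed without importing it. A sketch of the same availability report (function names are ours; `nio` is the import name the removed code used):

```python
import importlib.util


def is_module_available(name: str) -> bool:
    # find_spec returns None when a top-level module cannot be found.
    return importlib.util.find_spec(name) is not None


def matrix_dep_status(module_name: str = "nio") -> str:
    # Same strings the removed route returned.
    return "available" if is_module_available(module_name) else "unavailable"
```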

@@ -349,12 +332,15 @@ async def share_art(request):
 workflowId = upload_workflow_json["workflowId"]
 
 # check if the user has provided Matrix credentials
-if matrix_nio_is_available and "matrix" in share_destinations:
+if "matrix" in share_destinations:
 comfyui_share_room_id = '!LGYSoacpJPhIfBqVfb:matrix.org'
 filename = os.path.basename(asset_filepath)