Compare commits

32 Commits

manager-v4...feat/compl

| SHA1 |
|---|
| 49549ddcb8 |
| 35eddc2965 |
| 798b203274 |
| 9d2034bd4f |
| 6233fabe02 |
| 48ba2f4b4c |
| 3799af0017 |
| 403947a5d1 |
| 276ccca4f6 |
| 31de92a7ef |
| 3ae4aecd84 |
| 7896949719 |
| 86c7482048 |
| 0146655f0f |
| 89bb61fb05 |
| dfd9a3ec7b |
| 985c987603 |
| 1ce35679b1 |
| 6e1c906aff |
| cd8e87a3fb |
| 8b9420731a |
| d9918cf773 |
| 163782e445 |
| ad14e1ed13 |
| b4392293fa |
| e8ff505ebf |
| 8a5226b1d4 |
| 422af67217 |
| 5c300f75e7 |
| 5ea7bf3683 |
| 34efbe9262 |
| 9d24038a7d |

70 · `.github/workflows/ci.yml` · vendored

```diff
@@ -1,70 +0,0 @@
-name: CI
-
-on:
-  push:
-    branches: [ main, feat/*, fix/* ]
-  pull_request:
-    branches: [ main ]
-
-jobs:
-  validate-openapi:
-    name: Validate OpenAPI Specification
-    runs-on: ubuntu-latest
-    steps:
-      - uses: actions/checkout@v4
-
-      - name: Check if OpenAPI changed
-        id: openapi-changed
-        uses: tj-actions/changed-files@v44
-        with:
-          files: openapi.yaml
-
-      - name: Setup Node.js
-        if: steps.openapi-changed.outputs.any_changed == 'true'
-        uses: actions/setup-node@v4
-        with:
-          node-version: '18'
-
-      - name: Install Redoc CLI
-        if: steps.openapi-changed.outputs.any_changed == 'true'
-        run: |
-          npm install -g @redocly/cli
-
-      - name: Validate OpenAPI specification
-        if: steps.openapi-changed.outputs.any_changed == 'true'
-        run: |
-          redocly lint openapi.yaml
-
-  code-quality:
-    name: Code Quality Checks
-    runs-on: ubuntu-latest
-    steps:
-      - uses: actions/checkout@v4
-        with:
-          fetch-depth: 0 # Fetch all history for proper diff
-
-      - name: Get changed Python files
-        id: changed-py-files
-        uses: tj-actions/changed-files@v44
-        with:
-          files: |
-            **/*.py
-          files_ignore: |
-            comfyui_manager/legacy/**
-
-      - name: Setup Python
-        if: steps.changed-py-files.outputs.any_changed == 'true'
-        uses: actions/setup-python@v5
-        with:
-          python-version: '3.9'
-
-      - name: Install dependencies
-        if: steps.changed-py-files.outputs.any_changed == 'true'
-        run: |
-          pip install ruff
-
-      - name: Run ruff linting on changed files
-        if: steps.changed-py-files.outputs.any_changed == 'true'
-        run: |
-          echo "Changed files: ${{ steps.changed-py-files.outputs.all_changed_files }}"
-          echo "${{ steps.changed-py-files.outputs.all_changed_files }}" | xargs -r ruff check
```
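The deleted `code-quality` job lints only the Python files that changed outside `comfyui_manager/legacy/`. Because of `xargs -r`, it skips `ruff check` entirely when that list is empty.

Below is a minimal Python sketch of that selection. It assumes a plain suffix/prefix test is close enough to the `**/*.py` and `comfyui_manager/legacy/**` globs. tj-actions/changed-files uses its own glob matcher, so edge cases may differ. `select_lint_targets`, `ruff_command` and the sample paths are hypothetical.

```python
from typing import List, Optional

INCLUDE_SUFFIX = ".py"                     # stands in for the `**/*.py` glob
IGNORE_PREFIX = "comfyui_manager/legacy/"  # stands in for `comfyui_manager/legacy/**`


def select_lint_targets(changed_files: List[str]) -> List[str]:
    """Keep changed Python files that are not under the ignored legacy tree."""
    return [
        path
        for path in changed_files
        if path.endswith(INCLUDE_SUFFIX) and not path.startswith(IGNORE_PREFIX)
    ]


def ruff_command(targets: List[str]) -> Optional[List[str]]:
    """Mirror `xargs -r ruff check`: build no command at all when there are no targets."""
    if not targets:
        return None
    return ["ruff", "check", *targets]


changed = [
    "setup.py",
    "comfyui_manager/legacy/manager_server.py",
    "README.md",
    "comfyui_manager/glob/manager_core.py",
]
print(ruff_command(select_lint_targets(changed)))
```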

32

.github/workflows/publish-to-pypi.yml

vendored

32

.github/workflows/publish-to-pypi.yml

vendored

@@ -4,7 +4,7 @@ on:

|

|||||||

workflow_dispatch:

|

workflow_dispatch:

|

||||||

push:

|

push:

|

||||||

branches:

|

branches:

|

||||||

- manager-v4

|

- main

|

||||||

paths:

|

paths:

|

||||||

- "pyproject.toml"

|

- "pyproject.toml"

|

||||||

|

|

||||||

@@ -21,7 +21,7 @@ jobs:

|

|||||||

- name: Set up Python

|

- name: Set up Python

|

||||||

uses: actions/setup-python@v4

|

uses: actions/setup-python@v4

|

||||||

with:

|

with:

|

||||||

python-version: '3.x'

|

python-version: '3.9'

|

||||||

|

|

||||||

- name: Install build dependencies

|

- name: Install build dependencies

|

||||||

run: |

|

run: |

|

||||||

@@ -31,28 +31,28 @@ jobs:

|

|||||||

- name: Get current version

|

- name: Get current version

|

||||||

id: current_version

|

id: current_version

|

||||||

run: |

|

run: |

|

||||||

CURRENT_VERSION=$(grep -oP '^version = "\K[^"]+' pyproject.toml)

|

CURRENT_VERSION=$(grep -oP 'version = "\K[^"]+' pyproject.toml)

|

||||||

echo "version=$CURRENT_VERSION" >> $GITHUB_OUTPUT

|

echo "version=$CURRENT_VERSION" >> $GITHUB_OUTPUT

|

||||||

echo "Current version: $CURRENT_VERSION"

|

echo "Current version: $CURRENT_VERSION"

|

||||||

|

|

||||||

- name: Build package

|

- name: Build package

|

||||||

run: python -m build

|

run: python -m build

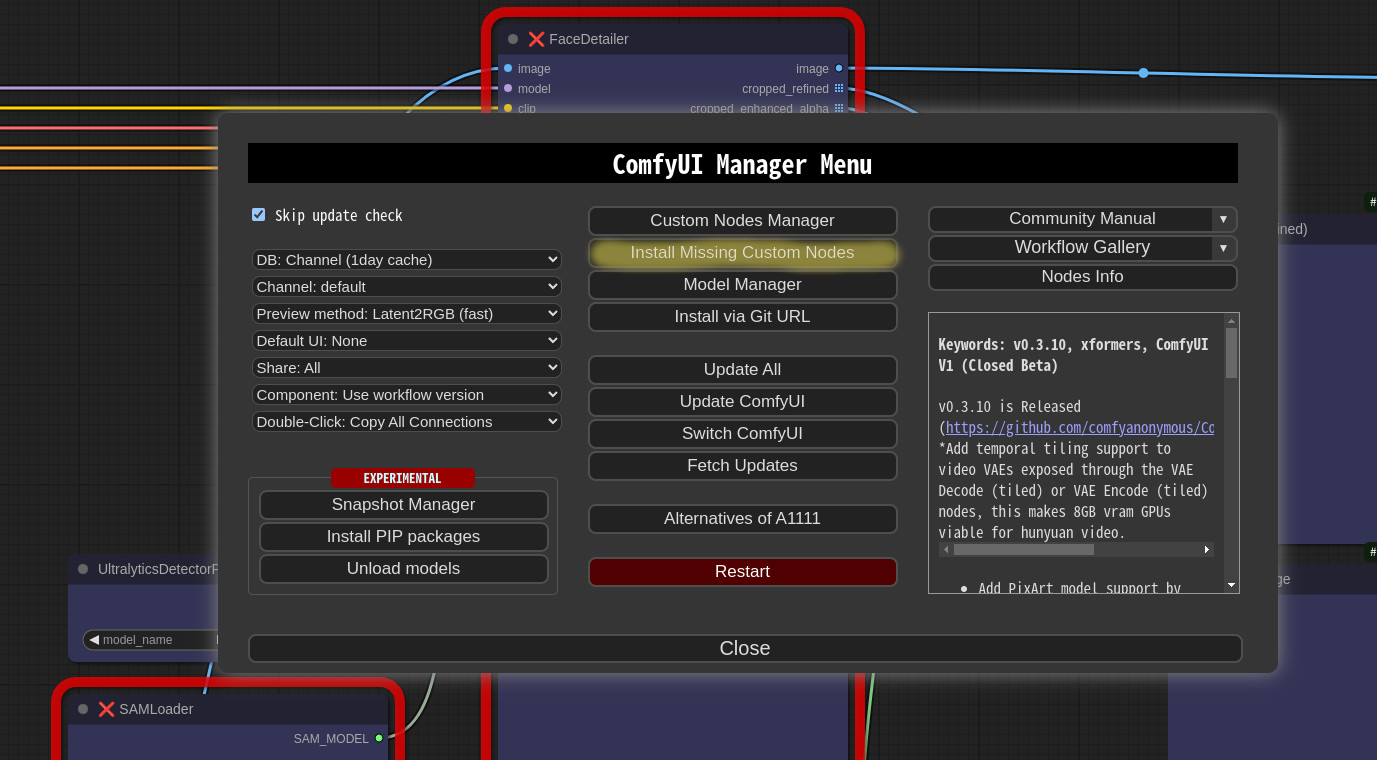

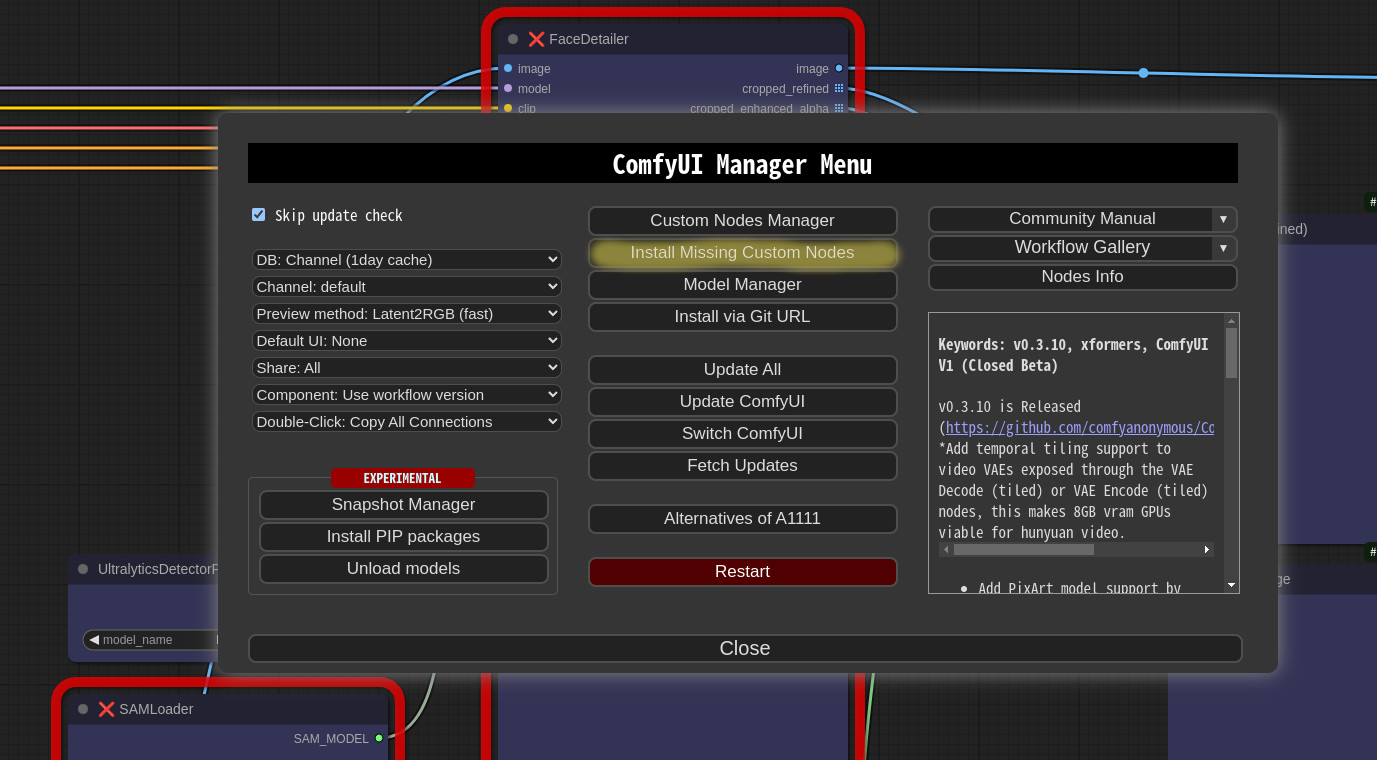

|

||||||

|

|

||||||

# - name: Create GitHub Release

|

- name: Create GitHub Release

|

||||||

# id: create_release

|

id: create_release

|

||||||

# uses: softprops/action-gh-release@v2

|

uses: softprops/action-gh-release@v2

|

||||||

# env:

|

env:

|

||||||

# GITHUB_TOKEN: ${{ github.token }}

|

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||||

# with:

|

with:

|

||||||

# files: dist/*

|

files: dist/*

|

||||||

# tag_name: v${{ steps.current_version.outputs.version }}

|

tag_name: v${{ steps.current_version.outputs.version }}

|

||||||

# draft: false

|

draft: false

|

||||||

# prerelease: false

|

prerelease: false

|

||||||

# generate_release_notes: true

|

generate_release_notes: true

|

||||||

|

|

||||||

- name: Publish to PyPI

|

- name: Publish to PyPI

|

||||||

uses: pypa/gh-action-pypi-publish@76f52bc884231f62b9a034ebfe128415bbaabdfc

|

uses: pypa/gh-action-pypi-publish@release/v1

|

||||||

with:

|

with:

|

||||||

password: ${{ secrets.PYPI_TOKEN }}

|

password: ${{ secrets.PYPI_TOKEN }}

|

||||||

skip-existing: true

|

skip-existing: true

|

||||||

verbose: true

|

verbose: true

|

||||||
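The third hunk drops the `^` anchor from the `grep -oP` pattern that reads the package version. Python's `re` has no `\K`, so this sketch uses a capture group instead. The `pyproject.toml` content is a made-up sample, not the project's real file.

The sketch shows that, without the anchor, the pattern also matches any other key whose name ends in `version`, such as a hypothetical `target-version`. `grep -o` would then print both values into `CURRENT_VERSION`.

```python
import re

# Hypothetical pyproject.toml content, for illustration only.
SAMPLE_PYPROJECT = '''\
[project]
name = "example-package"
version = "1.2.3"

[tool.ruff]
target-version = "py39"
'''

# Python equivalents of the two grep -oP patterns; grep works line by line,
# so `^` is modelled with re.MULTILINE, and \K is replaced by a capture group.
ANCHORED = re.compile(r'^version = "([^"]+)', re.MULTILINE)
UNANCHORED = re.compile(r'version = "([^"]+)')

print(ANCHORED.findall(SAMPLE_PYPROJECT))    # only the [project] version
print(UNANCHORED.findall(SAMPLE_PYPROJECT))  # also picks up target-version
```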

25 · `.github/workflows/publish.yml` · vendored · Normal file

```diff
@@ -0,0 +1,25 @@
+name: Publish to Comfy registry
+on:
+  workflow_dispatch:
+  push:
+    branches:
+      - main-blocked
+    paths:
+      - "pyproject.toml"
+
+permissions:
+  issues: write
+
+jobs:
+  publish-node:
+    name: Publish Custom Node to registry
+    runs-on: ubuntu-latest
+    if: ${{ github.repository_owner == 'ltdrdata' || github.repository_owner == 'Comfy-Org' }}
+    steps:
+      - name: Check out code
+        uses: actions/checkout@v4
+      - name: Publish Custom Node
+        uses: Comfy-Org/publish-node-action@v1
+        with:
+          ## Add your own personal access token to your Github Repository secrets and reference it here.
+          personal_access_token: ${{ secrets.REGISTRY_ACCESS_TOKEN }}
```
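The added `publish.yml` runs on manual dispatch, or on pushes to `main-blocked` that touch `pyproject.toml`. Its job is further gated on the repository owner.

Below is a rough Python model of that gating. It assumes exact branch and path names (no glob patterns). It also assumes case-insensitive string comparison, which is how GitHub documents `==` in Actions expressions. `should_publish` is a hypothetical helper, not part of any GitHub API.

```python
from typing import Iterable

ALLOWED_OWNERS = {"ltdrdata", "comfy-org"}  # lowercased: expression `==` ignores case
TRIGGER_BRANCHES = {"main-blocked"}
TRIGGER_PATHS = {"pyproject.toml"}


def should_publish(event: str, branch: str, changed_paths: Iterable[str], owner: str) -> bool:
    """Approximate whether the publish-node job would run for an event."""
    if event == "workflow_dispatch":
        triggered = True  # the branches/paths filters only apply to `push`
    elif event == "push":
        triggered = branch in TRIGGER_BRANCHES and any(
            path in TRIGGER_PATHS for path in changed_paths
        )
    else:
        triggered = False
    # The job-level `if:` is evaluated for every trigger.
    return triggered and owner.lower() in ALLOWED_OWNERS
```

With the branch filter set to `main-blocked`, ordinary pushes to other branches never reach the owner check.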

1 · `.gitignore` · vendored

```diff
@@ -19,6 +19,5 @@ pip_overrides.json
 check2.sh
 /venv/
 build
-dist
 *.egg-info
 .env
```

105 · `README.md`

```diff
@@ -89,20 +89,20 @@
 
 
 ## Paths
-In `ComfyUI-Manager` V4.0.3b4 and later, configuration files and dynamically generated files are located under `<USER_DIRECTORY>/__manager/`.
+In `ComfyUI-Manager` V3.0 and later, configuration files and dynamically generated files are located under `<USER_DIRECTORY>/default/ComfyUI-Manager/`.
 
 * <USER_DIRECTORY>
 * If executed without any options, the path defaults to ComfyUI/user.
 * It can be set using --user-directory <USER_DIRECTORY>.
 
-* Basic config files: `<USER_DIRECTORY>/__manager/config.ini`
-* Configurable channel lists: `<USER_DIRECTORY>/__manager/channels.ini`
-* Configurable pip overrides: `<USER_DIRECTORY>/__manager/pip_overrides.json`
-* Configurable pip blacklist: `<USER_DIRECTORY>/__manager/pip_blacklist.list`
-* Configurable pip auto fix: `<USER_DIRECTORY>/__manager/pip_auto_fix.list`
-* Saved snapshot files: `<USER_DIRECTORY>/__manager/snapshots`
-* Startup script files: `<USER_DIRECTORY>/__manager/startup-scripts`
-* Component files: `<USER_DIRECTORY>/__manager/components`
+* Basic config files: `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini`
+* Configurable channel lists: `<USER_DIRECTORY>/default/ComfyUI-Manager/channels.ini`
+* Configurable pip overrides: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_overrides.json`
+* Configurable pip blacklist: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_blacklist.list`
+* Configurable pip auto fix: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_auto_fix.list`
+* Saved snapshot files: `<USER_DIRECTORY>/default/ComfyUI-Manager/snapshots`
+* Startup script files: `<USER_DIRECTORY>/default/ComfyUI-Manager/startup-scripts`
+* Component files: `<USER_DIRECTORY>/default/ComfyUI-Manager/components`
 
 
 ## `extra_model_paths.yaml` Configuration
```
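The Paths hunk moves every manager file between two base directories. Below is a small `pathlib` sketch of how the listed files resolve under each layout. It assumes the default `<USER_DIRECTORY>` of `ComfyUI/user` and uses `PurePosixPath` so the output looks the same on every OS. `manager_files_path` and the `"v4"`/`"v3"` layout keys are hypothetical names.

```python
from pathlib import PurePosixPath

# Base directories described by the two README versions.
LAYOUTS = {
    "v4": ("__manager",),                  # <USER_DIRECTORY>/__manager/
    "v3": ("default", "ComfyUI-Manager"),  # <USER_DIRECTORY>/default/ComfyUI-Manager/
}


def manager_files_path(user_directory: str = "ComfyUI/user", layout: str = "v4") -> PurePosixPath:
    """Directory that holds config.ini, channels.ini, snapshots, startup-scripts, ..."""
    return PurePosixPath(user_directory).joinpath(*LAYOUTS[layout])


for name in LAYOUTS:
    base = manager_files_path(layout=name)
    print(name, base / "config.ini", base / "snapshots")
```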

@@ -115,17 +115,17 @@ The following settings are applied based on the section marked as `is_default`.

|

|||||||

|

|

||||||

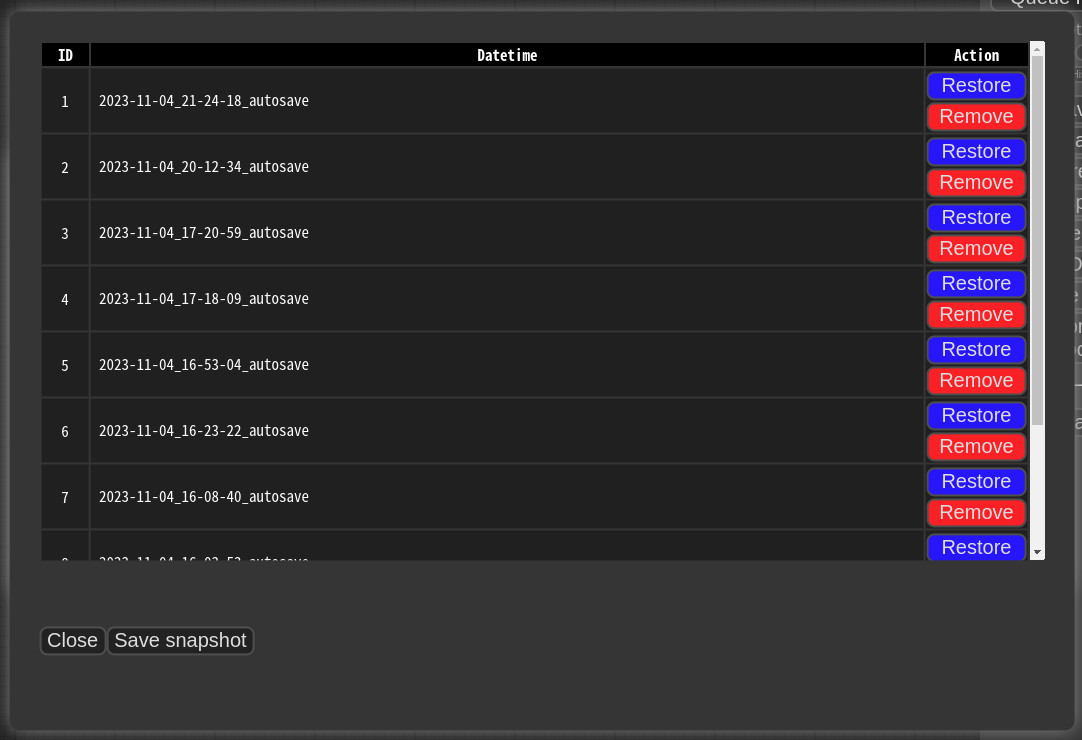

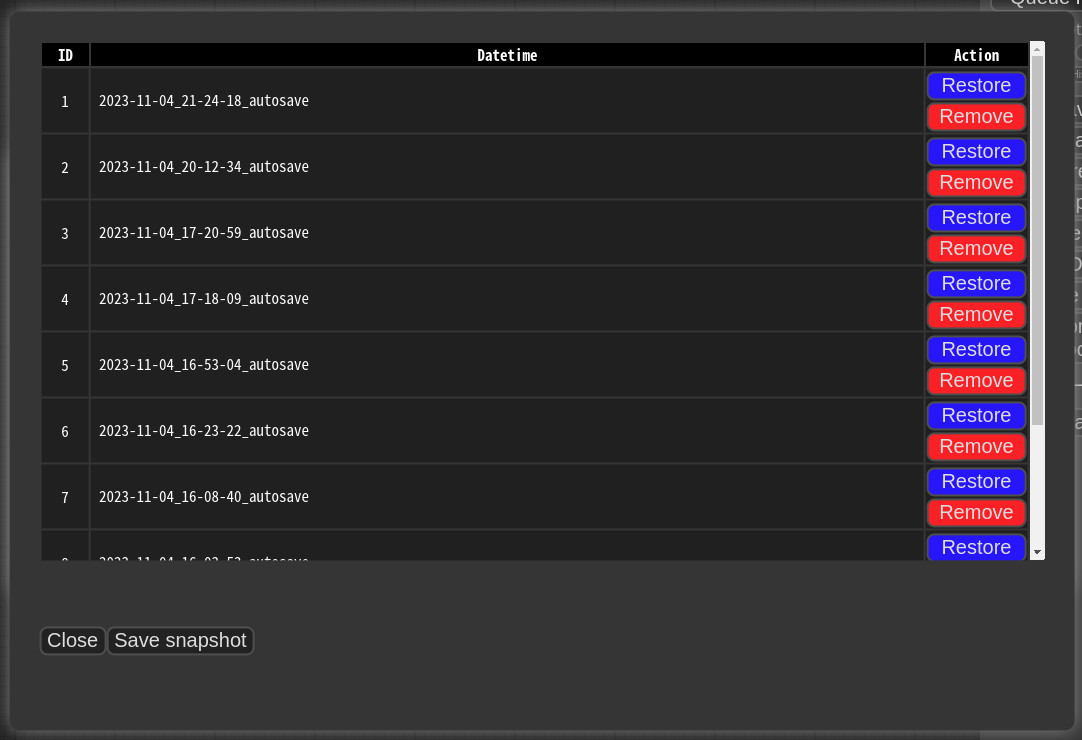

## Snapshot-Manager

|

## Snapshot-Manager

|

||||||

* When you press `Save snapshot` or use `Update All` on `Manager Menu`, the current installation status snapshot is saved.

|

* When you press `Save snapshot` or use `Update All` on `Manager Menu`, the current installation status snapshot is saved.

|

||||||

* Snapshot file dir: `<USER_DIRECTORY>/__manager/snapshots`

|

* Snapshot file dir: `<USER_DIRECTORY>/default/ComfyUI-Manager/snapshots`

|

||||||

* You can rename snapshot file.

|

* You can rename snapshot file.

|

||||||

* Press the "Restore" button to revert to the installation status of the respective snapshot.

|

* Press the "Restore" button to revert to the installation status of the respective snapshot.

|

||||||

* However, for custom nodes not managed by Git, snapshot support is incomplete.

|

* However, for custom nodes not managed by Git, snapshot support is incomplete.

|

||||||

* When you press `Restore`, it will take effect on the next ComfyUI startup.

|

* When you press `Restore`, it will take effect on the next ComfyUI startup.

|

||||||

* The selected snapshot file is saved in `<USER_DIRECTORY>/__manager/startup-scripts/restore-snapshot.json`, and upon restarting ComfyUI, the snapshot is applied and then deleted.

|

* The selected snapshot file is saved in `<USER_DIRECTORY>/default/ComfyUI-Manager/startup-scripts/restore-snapshot.json`, and upon restarting ComfyUI, the snapshot is applied and then deleted.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## cm-cli: command line tools for power users

|

## cm-cli: command line tools for power user

|

||||||

* A tool is provided that allows you to use the features of ComfyUI-Manager without running ComfyUI.

|

* A tool is provided that allows you to use the features of ComfyUI-Manager without running ComfyUI.

|

||||||

* For more details, please refer to the [cm-cli documentation](docs/en/cm-cli.md).

|

* For more details, please refer to the [cm-cli documentation](docs/en/cm-cli.md).

|

||||||

|

|

||||||
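According to the Snapshot-Manager notes, `Restore` only stages the chosen snapshot as `startup-scripts/restore-snapshot.json`. The snapshot is applied and then deleted on the next ComfyUI startup.

Below is a minimal sketch of that stage-then-consume pattern. The `apply` callback stands in for the real restore logic, and the snapshot's JSON content is made up. `stage_restore` and `apply_pending_restore` are hypothetical names.

```python
import json
import shutil
import tempfile
from pathlib import Path
from typing import Callable, Optional

PENDING_NAME = "restore-snapshot.json"  # file name taken from the README


def stage_restore(snapshot_file: Path, startup_scripts_dir: Path) -> Path:
    """'Restore' button: copy the snapshot into startup-scripts; nothing is applied yet."""
    startup_scripts_dir.mkdir(parents=True, exist_ok=True)
    target = startup_scripts_dir / PENDING_NAME
    shutil.copyfile(snapshot_file, target)
    return target


def apply_pending_restore(startup_scripts_dir: Path, apply: Callable[[dict], None]) -> Optional[dict]:
    """Next startup: apply the staged snapshot, then delete it so it runs only once."""
    pending = startup_scripts_dir / PENDING_NAME
    if not pending.exists():
        return None
    snapshot = json.loads(pending.read_text(encoding="utf-8"))
    apply(snapshot)  # if this raises, the file is kept and picked up again next time
    pending.unlink()
    return snapshot


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    snapshot_path = root / "snapshots" / "example.json"
    snapshot_path.parent.mkdir()
    snapshot_path.write_text(json.dumps({"custom_nodes": {}}), encoding="utf-8")  # made-up content

    stage_restore(snapshot_path, root / "startup-scripts")
    applied = apply_pending_restore(root / "startup-scripts", apply=lambda snap: None)
    still_pending = (root / "startup-scripts" / PENDING_NAME).exists()

print(applied, still_pending)
```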

````diff
@@ -169,12 +169,12 @@ The following settings are applied based on the section marked as `is_default`.
 }
 ```
 * `<current timestamp>` Ensure that the timestamp is always unique.
-* "components" should have the same structure as the content of the file stored in `<USER_DIRECTORY>/__manager/components`.
+* "components" should have the same structure as the content of the file stored in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.
 * `<component name>`: The name should be in the format `<prefix>::<node name>`.
-* `<component node data>`: In the node data of the group node.
+* `<compnent nodeata>`: In the nodedata of the group node.
 * `<version>`: Only two formats are allowed: `major.minor.patch` or `major.minor`. (e.g. `1.0`, `2.2.1`)
 * `<datetime>`: Saved time
-* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in `<USER_DIRECTORY>/__manager/components`.
+* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.
 * `<category>`: If there is neither a category nor a packname, it is saved in the components category.
 ```
 "version":"1.0",
````
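The component rules require `<component name>` in the form `<prefix>::<node name>`. They also require `<version>` to be either `major.minor.patch` or `major.minor`.

Below is a hedged validation sketch. The regex and the split are one reading of those rules: each part is non-empty, version parts are plain digits, and only the first `::` separates prefix from node name. They are not the manager's actual checks, and both function names are hypothetical.

```python
import re
from typing import Optional, Tuple

VERSION_RE = re.compile(r"\d+\.\d+(?:\.\d+)?")  # major.minor or major.minor.patch


def is_valid_version(version: str) -> bool:
    """True only for `1.0`-style or `2.2.1`-style strings."""
    return VERSION_RE.fullmatch(version) is not None


def split_component_name(name: str) -> Optional[Tuple[str, str]]:
    """Split `<prefix>::<node name>`; return None when the format is not met."""
    prefix, sep, node_name = name.partition("::")
    if not sep or not prefix or not node_name:
        return None
    return prefix, node_name
```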

```diff
@@ -189,7 +189,7 @@ The following settings are applied based on the section marked as `is_default`.
 * Dragging and dropping or pasting a single component will add a node. However, when adding multiple components, nodes will not be added.
 
 
-## Support for installing missing nodes
+## Support of missing nodes installation
 
 
 
```

````diff
@@ -215,24 +215,23 @@ The following settings are applied based on the section marked as `is_default`.
 downgrade_blacklist = <Set a list of packages to prevent downgrades. List them separated by commas.>
 security_level = <Set the security level => strong|normal|normal-|weak>
 always_lazy_install = <Whether to perform dependency installation on restart even in environments other than Windows.>
-network_mode = <Set the network mode => public|private|offline|personal_cloud>
+network_mode = <Set the network mode => public|private|offline>
 ```
 
 * network_mode:
 - public: An environment that uses a typical public network.
 - private: An environment that uses a closed network, where a private node DB is configured via `channel_url`. (Uses cache if available)
 - offline: An environment that does not use any external connections when using an offline network. (Uses cache if available)
-- personal_cloud: Applies relaxed security features in cloud environments such as Google Colab or Runpod, where strong security is not required.
 
 
 ## Additional Feature
 * Logging to file feature
 * This feature is enabled by default and can be disabled by setting `file_logging = False` in the `config.ini`.
 
-* Fix node (recreate): When right-clicking on a node and selecting `Fix node (recreate)`, you can recreate the node. The widget's values are reset, while the connections maintain those with the same names.
+* Fix node(recreate): When right-clicking on a node and selecting `Fix node (recreate)`, you can recreate the node. The widget's values are reset, while the connections maintain those with the same names.
 * It is used to correct errors in nodes of old workflows created before, which are incompatible with the version changes of custom nodes.
 
-* Double-Click Node Title: You can set the double-click behavior of nodes in the ComfyUI-Manager menu.
+* Double-Click Node Title: You can set the double click behavior of nodes in the ComfyUI-Manager menu.
 * `Copy All Connections`, `Copy Input Connections`: Double-clicking a node copies the connections of the nearest node.
 * This action targets the nearest node within a straight-line distance of 1000 pixels from the center of the node.
 * In the case of `Copy All Connections`, it duplicates existing outputs, but since it does not allow duplicate connections, the existing output connections of the original node are disconnected.
````
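`Copy All Connections` and `Copy Input Connections` target the nearest node within a straight-line distance of 1000 pixels from the double-clicked node's center.

Below is a sketch of that selection. It assumes nodes are hypothetical `(id, x, y, width, height)` records with `x, y` at the top-left corner. It also assumes distance is measured between centers, and that a node exactly 1000 pixels away still counts.

```python
import math
from typing import Iterable, NamedTuple, Optional, Tuple

MAX_DISTANCE = 1000.0  # pixels, from the README


class Node(NamedTuple):
    id: int
    x: float       # top-left corner (assumed)
    y: float
    width: float
    height: float

    def center(self) -> Tuple[float, float]:
        return (self.x + self.width / 2, self.y + self.height / 2)


def nearest_node(source: Node, candidates: Iterable[Node]) -> Optional[Node]:
    """Closest other node whose center lies within MAX_DISTANCE of source's center."""
    sx, sy = source.center()
    best: Optional[Node] = None
    best_dist = math.inf
    for node in candidates:
        if node.id == source.id:
            continue  # never pick the clicked node itself
        cx, cy = node.center()
        dist = math.hypot(cx - sx, cy - sy)  # straight-line (Euclidean) distance
        if dist <= MAX_DISTANCE and dist < best_dist:
            best, best_dist = node, dist
    return best
```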

```diff
@@ -298,48 +297,46 @@ When you run the `scan.sh` script:
 
 * It updates the `github-stats.json`.
 * This uses the GitHub API, so set your token with `export GITHUB_TOKEN=your_token_here` to avoid quickly reaching the rate limit and malfunctioning.
-* To skip this step, add the `--skip-stat-update` option.
+* To skip this step, add the `--skip-update-stat` option.
 
 * The `--skip-all` option applies both `--skip-update` and `--skip-stat-update`.
 
 
 ## Troubleshooting
-* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the `<USER_DIRECTORY>/__manager/config.ini` file that is generated.
+* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini` file that is generated.
 * If updating ComfyUI-Manager itself fails, please go to the **ComfyUI-Manager** directory and execute the command `git update-ref refs/remotes/origin/main a361cc1 && git fetch --all && git pull`.
-* If you encounter the error message `Overlapped Object has pending operation at deallocation on ComfyUI Manager load` under Windows
+* If you encounter the error message `Overlapped Object has pending operation at deallocation on Comfyui Manager load` under Windows
 * Edit `config.ini` file: add `windows_selector_event_loop_policy = True`
-* If the `SSL: CERTIFICATE_VERIFY_FAILED` error occurs.
+* if `SSL: CERTIFICATE_VERIFY_FAILED` error is occured.
 * Edit `config.ini` file: add `bypass_ssl = True`
 
 
 ## Security policy
-
-The security settings are applied based on whether the ComfyUI server's listener is non-local and whether the network mode is set to `personal_cloud`.
-
-* **non-local**: When the server is launched with `--listen` and is bound to a network range other than the local `127.` range, allowing remote IP access.
-* **personal\_cloud**: When the `network_mode` is set to `personal_cloud`.
-
-
-### Risky Level Table
-
-| Risky Level | features |
-|-------------|---------------------------------------------------------------------------------------------------------------------------------------|
-| high+ | * `Install via git url`, `pip install`<BR>* Installation of nodepack registered not in the `default channel`. |
-| high | * Fix nodepack |
-| middle+ | * Uninstall/Update<BR>* Installation of nodepack registered in the `default channel`.<BR>* Restore/Remove Snapshot<BR>* Install model |
-| middle | * Restart |
-| low | * Update ComfyUI |
-
-
-### Security Level Table
-
-| Security Level | local | non-local (personal_cloud) | non-local (not personal_cloud) |
-|----------------|--------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------------------------------------------------------------------|--------------------------------|
-| strong | * Only `weak` level risky features are allowed | * Only `weak` level risky features are allowed | * Only `weak` level risky features are allowed |
-| normal | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+`, `high` and `middle+` level risky features are not allowed<BR>* `middle` level risky features are available
-| normal- | * All features are available | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+`, `high` and `middle+` level risky features are not allowed<BR>* `middle` level risky features are available
-| weak | * All features are available | * All features are available | * `high+` and `middle+` level risky features are not allowed<BR>* `high`, `middle` and `low` level risky features are available
-
+* Edit `config.ini` file: add `security_level = <LEVEL>`
+* `strong`
+* doesn't allow `high` and `middle` level risky feature
+* `normal`
+* doesn't allow `high` level risky feature
+* `middle` level risky feature is available
+* `normal-`
+* doesn't allow `high` level risky feature if `--listen` is specified and not starts with `127.`
+* `middle` level risky feature is available
+* `weak`
+* all feature is available
+
+* `high` level risky features
+* `Install via git url`, `pip install`
+* Installation of custom nodes registered not in the `default channel`.
+* Fix custom nodes
+
+* `middle` level risky features
+* Uninstall/Update
+* Installation of custom nodes registered in the `default channel`.
+* Restore/Remove Snapshot
+* Restart
+
+* `low` level risky features
+* Update ComfyUI
 
 
 # Disclaimer
```
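The `feat/compl` side of the Security policy hunk lists each `security_level` and what it blocks. Below is a sketch that turns that list into a lookup. It assumes `low` level features are allowed at every level, since the list never restricts them. It also assumes "not starts with `127.`" refers to the `--listen` address. `is_allowed` is a hypothetical helper, not the manager's API.

```python
from typing import Optional

KNOWN_RISKS = {"low", "middle", "high"}


def is_allowed(security_level: str, risk: str, listen: Optional[str] = None) -> bool:
    """Decide whether a feature of the given risk level may run.

    `listen` is the --listen address, or None when the flag was not given.
    """
    if risk not in KNOWN_RISKS:
        raise ValueError(f"unknown risk level: {risk}")
    if security_level == "strong":
        return risk == "low"        # no `high`, no `middle`
    if security_level == "normal":
        return risk != "high"       # `middle` is available
    if security_level == "normal-":
        non_local = listen is not None and not listen.startswith("127.")
        return not (risk == "high" and non_local)
    if security_level == "weak":
        return True                 # all features
    raise ValueError(f"unknown security level: {security_level}")
```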

4 · `check.sh`

```diff
@@ -37,7 +37,7 @@ find ~/.tmp/default -name "*.py" -print0 | xargs -0 grep -E "crypto|^_A="
 
 echo
 echo CHECK3
-find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*[^#]*https\?:"
-find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*[^#].*\.whl"
+find ~/.tmp/default -name "requirements.txt" | xargs grep "^\s*https\\?:"
+find ~/.tmp/default -name "requirements.txt" | xargs grep "\.whl"
 
 echo
```
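The `check.sh` hunk swaps the `requirements.txt` filters. Inside double quotes the shell turns `\\?` into `\?`, so both URL patterns reach `grep` as a GNU BRE with an optional `s`.

Python's `re` accepts the same constructs once the BRE `\?` is written as `?`. The sketch compares the patterns on made-up requirement lines, to show what each side flags. It only illustrates the regex semantics, not how `grep` or `find` are invoked.

```python
import re

# Python translations of the grep patterns (GNU BRE `\?` becomes `?`).
V4_URL = re.compile(r"^\s*[^#]*https?:")    # '-' side (manager-v4)
COMPL_URL = re.compile(r"^\s*https?:")      # '+' side (feat/compl)
V4_WHL = re.compile(r"^\s*[^#].*\.whl")
COMPL_WHL = re.compile(r"\.whl")

# Hypothetical requirements.txt lines.
LINES = [
    "https://example.com/pkg-1.0.tar.gz",
    "pkg @ https://example.com/pkg-1.0-py3-none-any.whl",
    "# mirror: https://example.com/simple",
    "# pinned.whl",
]

for line in LINES:
    print(
        f"{line!r}: url v4={bool(V4_URL.search(line))} compl={bool(COMPL_URL.search(line))}, "
        f"whl v4={bool(V4_WHL.search(line))} compl={bool(COMPL_WHL.search(line))}"
    )
```

On these samples the `manager-v4` patterns also catch `pkg @ https://...` direct references and skip lines that begin with `#`. The `feat/compl` patterns only catch lines that start with a URL, but flag every `.whl` mention, comments included.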

```diff
@@ -1,10 +1,5 @@
 import os
 import logging
-from aiohttp import web
-from .common.manager_security import HANDLER_POLICY
-from .common import manager_security
-from comfy.cli_args import args
-
 
 def prestartup():
     from . import prestartup_script # noqa: F401
@@ -12,29 +7,25 @@ def prestartup():
 
 
 def start():
+    from comfy.cli_args import args
+
     logging.info('[START] ComfyUI-Manager')
     from .common import cm_global # noqa: F401
 
-    if args.enable_manager:
+    if not args.disable_manager:
         if args.enable_manager_legacy_ui:
             try:
                 from .legacy import manager_server # noqa: F401
                 from .legacy import share_3rdparty # noqa: F401
-                from .legacy import manager_core as core
                 import nodes
 
                 logging.info("[ComfyUI-Manager] Legacy UI is enabled.")
                 nodes.EXTENSION_WEB_DIRS['comfyui-manager-legacy'] = os.path.join(os.path.dirname(__file__), 'js')
             except Exception as e:
                 print("Error enabling legacy ComfyUI Manager frontend:", e)
-                core = None
         else:
             from .glob import manager_server # noqa: F401
             from .glob import share_3rdparty # noqa: F401
-            from .glob import manager_core as core
-
-        if core is not None:
-            manager_security.is_personal_cloud_mode = core.get_config()['network_mode'].lower() == 'personal_cloud'
 
 
 def should_be_disabled(fullpath:str) -> bool:
@@ -42,7 +33,9 @@ def should_be_disabled(fullpath:str) -> bool:
     1. Disables the legacy ComfyUI-Manager.
     2. The blocklist can be expanded later based on policies.
     """
-    if args.enable_manager:
+    from comfy.cli_args import args
+
+    if not args.disable_manager:
         # In cases where installation is done via a zip archive, the directory name may not be comfyui-manager, and it may not contain a git repository.
         # It is assumed that any installed legacy ComfyUI-Manager will have at least 'comfyui-manager' in its directory name.
         dir_name = os.path.basename(fullpath).lower()
```
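These hunks flip the manager's default. On the `manager-v4` side it starts only when `args.enable_manager` is set. On the `feat/compl` side it starts unless `args.disable_manager` is set.

Below is a minimal `argparse` sketch of the two conventions. The parser is hypothetical: it only mirrors the attribute names visible in the diff and is not ComfyUI's real `comfy.cli_args`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser; both switches default to False."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--enable-manager", action="store_true")
    parser.add_argument("--disable-manager", action="store_true")
    return parser


def manager_on_opt_in(args: argparse.Namespace) -> bool:
    """manager-v4 style: `if args.enable_manager:`."""
    return args.enable_manager


def manager_on_opt_out(args: argparse.Namespace) -> bool:
    """feat/compl style: `if not args.disable_manager:`."""
    return not args.disable_manager


defaults = build_parser().parse_args([])
print(manager_on_opt_in(defaults), manager_on_opt_out(defaults))  # False True
```

With no flags passed, the opt-in check leaves the manager off, while the opt-out check turns it on.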

```diff
@@ -50,55 +43,3 @@ def should_be_disabled(fullpath:str) -> bool:
             return True
 
     return False
-
-
-def get_client_ip(request):
-    peername = request.transport.get_extra_info("peername")
-    if peername is not None:
-        host, port = peername
-        return host
-
-    return "unknown"
-
-
-def create_middleware():
-    connected_clients = set()
-    is_local_mode = manager_security.is_loopback(args.listen)
-
-    @web.middleware
-    async def manager_middleware(request: web.Request, handler):
-        nonlocal connected_clients
-
-        # security policy for remote environments
-        prev_client_count = len(connected_clients)
-        client_ip = get_client_ip(request)
-        connected_clients.add(client_ip)
-        next_client_count = len(connected_clients)
-
-        if prev_client_count == 1 and next_client_count > 1:
-            manager_security.multiple_remote_alert()
-
-        policy = manager_security.get_handler_policy(handler)
-        is_banned = False
-
-        # policy check
-        if len(connected_clients) > 1:
-            if is_local_mode:
-                if HANDLER_POLICY.MULTIPLE_REMOTE_BAN_NON_LOCAL in policy:
-                    is_banned = True
-            if HANDLER_POLICY.MULTIPLE_REMOTE_BAN_NOT_PERSONAL_CLOUD in policy:
-                is_banned = not manager_security.is_personal_cloud_mode
-
-        if HANDLER_POLICY.BANNED in policy:
-            is_banned = True
-
-        if is_banned:
-            logging.warning(f"[Manager] Banning request from {client_ip}: {request.path}")
-            response = web.Response(text="[Manager] This request is banned.", status=403)
-        else:
-            response: web.Response = await handler(request)
-
-        return response
-
-    return manager_middleware
-
```
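The removed `create_middleware` combines three inputs: how many distinct client IPs have been seen, whether the server listens on loopback, and the handler's policy flags. Below is a framework-free sketch of just that decision, with no aiohttp.

`Policy` is a stand-in enum whose member names are copied from the diff. `ClientTracker` and `is_banned` are hypothetical. The extracted diff lost its indentation, so the sketch assumes the two `MULTIPLE_REMOTE_*` checks sit side by side under the client-count check.

```python
from enum import Enum, auto
from typing import Set


class Policy(Enum):
    # Member names copied from the diff; values are arbitrary.
    MULTIPLE_REMOTE_BAN_NON_LOCAL = auto()
    MULTIPLE_REMOTE_BAN_NOT_PERSONAL_CLOUD = auto()
    BANNED = auto()


class ClientTracker:
    """Remembers client IPs; reports the moment a second distinct client appears."""

    def __init__(self) -> None:
        self.clients: Set[str] = set()

    def register(self, ip: str) -> bool:
        prev = len(self.clients)
        self.clients.add(ip)
        return prev == 1 and len(self.clients) > 1  # when the alert would fire


def is_banned(client_count: int, is_local_mode: bool, is_personal_cloud: bool,
              policy: Set[Policy]) -> bool:
    banned = False
    if client_count > 1:
        if is_local_mode and Policy.MULTIPLE_REMOTE_BAN_NON_LOCAL in policy:
            banned = True
        if Policy.MULTIPLE_REMOTE_BAN_NOT_PERSONAL_CLOUD in policy:
            # As in the diff, this assignment can overwrite the result above.
            banned = not is_personal_cloud
    if Policy.BANNED in policy:
        banned = True
    return banned
```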

@@ -46,7 +46,10 @@ comfyui_manager_path = os.path.abspath(os.path.dirname(__file__))
 cm_global.pip_blacklist = {'torch', 'torchaudio', 'torchsde', 'torchvision'}
 cm_global.pip_downgrade_blacklist = ['torch', 'torchaudio', 'torchsde', 'torchvision', 'transformers', 'safetensors', 'kornia']

-cm_global.pip_overrides = {}
+if sys.version_info < (3, 13):
+cm_global.pip_overrides = {'numpy': 'numpy<2'}
+else:
+cm_global.pip_overrides = {}

 if os.path.exists(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json")):
 with open(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json"), 'r', encoding="UTF-8", errors="ignore") as json_file:
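On `feat/compl`, the default `pip_overrides` depends on the interpreter version. Below Python 3.13, `numpy` is pinned to `numpy<2`; otherwise no override applies. An existing `pip_overrides.json` is then opened. The sketch below assumes the file's contents replace the default dict wholesale, as the `cm_global.pip_overrides = json.load(json_file)` line in the `Ctx` hunk does. `default_pip_overrides` and `load_pip_overrides` are hypothetical names.

```python
import json
import os
import sys


def default_pip_overrides(version_info=sys.version_info):
    # feat/compl default: keep numpy below 2 on Python < 3.13
    if tuple(version_info[:2]) < (3, 13):
        return {'numpy': 'numpy<2'}
    return {}


def load_pip_overrides(path, version_info=sys.version_info):
    """Hypothetical helper: start from the version-based default, then let an
    existing pip_overrides.json replace it (assumed wholesale replacement)."""
    overrides = default_pip_overrides(version_info)
    if os.path.exists(path):
        with open(path, 'r', encoding="UTF-8", errors="ignore") as json_file:
            overrides = json.load(json_file)
    return overrides
```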

@@ -149,6 +152,9 @@ class Ctx:
 with open(context.manager_pip_overrides_path, 'r', encoding="UTF-8", errors="ignore") as json_file:
 cm_global.pip_overrides = json.load(json_file)

+if sys.version_info < (3, 13):
+cm_global.pip_overrides = {'numpy': 'numpy<2'}
+
 if os.path.exists(context.manager_pip_blacklist_path):
 with open(context.manager_pip_blacklist_path, 'r', encoding="UTF-8", errors="ignore") as f:
 for x in f.readlines():

@@ -670,7 +676,7 @@ def install(
 cmd_ctx.set_channel_mode(channel, mode)
 cmd_ctx.set_no_deps(no_deps)

-pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, context.manager_files_path)
+pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, core.manager_files_path)
 for_each_nodes(nodes, act=install_node, exit_on_fail=exit_on_fail)
 pip_fixer.fix_broken()

@@ -1,16 +0,0 @@
-# ComfyUI-Manager: Core Backend (glob)
-
-This directory contains the Python backend modules that power ComfyUI-Manager, handling the core functionality of node management, downloading, security, and server operations.
-
-## Core Modules
-
-- **manager_downloader.py**: Handles downloading operations for models, extensions, and other resources.
-- **manager_util.py**: Provides utility functions used throughout the system.
-
-## Specialized Modules
-
-- **cm_global.py**: Maintains global variables and state management across the system.
-- **cnr_utils.py**: Helper utilities for interacting with the custom node registry (CNR).
-- **git_utils.py**: Git-specific utilities for repository operations.
-- **node_package.py**: Handles the packaging and installation of node extensions.
-- **security_check.py**: Implements the multi-level security system for installation safety.

@@ -11,7 +11,6 @@ from . import manager_util

 import requests
 import toml
-import logging

 base_url = "https://api.comfy.org"

@@ -24,7 +23,7 @@ async def get_cnr_data(cache_mode=True, dont_wait=True):
 try:
 return await _get_cnr_data(cache_mode, dont_wait)
 except asyncio.TimeoutError:
-logging.info("A timeout occurred during the fetch process from ComfyRegistry.")
+print("A timeout occurred during the fetch process from ComfyRegistry.")
 return await _get_cnr_data(cache_mode=True, dont_wait=True) # timeout fallback

 async def _get_cnr_data(cache_mode=True, dont_wait=True):

@@ -80,12 +79,12 @@ async def _get_cnr_data(cache_mode=True, dont_wait=True):
 full_nodes[x['id']] = x

 if page % 5 == 0:
-logging.info(f"FETCH ComfyRegistry Data: {page}/{sub_json_obj['totalPages']}")
+print(f"FETCH ComfyRegistry Data: {page}/{sub_json_obj['totalPages']}")

 page += 1
 time.sleep(0.5)

-logging.info("FETCH ComfyRegistry Data [DONE]")
+print("FETCH ComfyRegistry Data [DONE]")

 for v in full_nodes.values():
 if 'latest_version' not in v:

@@ -101,7 +100,7 @@ async def _get_cnr_data(cache_mode=True, dont_wait=True):
 if cache_state == 'not-cached':
 return {}
 else:
-logging.info("[ComfyUI-Manager] The ComfyRegistry cache update is still in progress, so an outdated cache is being used.")
+print("[ComfyUI-Manager] The ComfyRegistry cache update is still in progress, so an outdated cache is being used.")
 with open(manager_util.get_cache_path(uri), 'r', encoding="UTF-8", errors="ignore") as json_file:
 return json.load(json_file)['nodes']

@@ -115,7 +114,7 @@ async def _get_cnr_data(cache_mode=True, dont_wait=True):
 return json_obj['nodes']
 except Exception:
 res = {}
-logging.warning("Cannot connect to comfyregistry.")
+print("Cannot connect to comfyregistry.")
 finally:
 if cache_mode:
 is_cache_loading = False

@@ -181,7 +180,7 @@ def install_node(node_id, version=None):
 else:
 url = f"{base_url}/nodes/{node_id}/install?version={version}"

-response = requests.get(url, verify=not manager_util.bypass_ssl)
+response = requests.get(url)
 if response.status_code == 200:
 # Convert the API response to a NodeVersion object
 return map_node_version(response.json())

@@ -192,7 +191,7 @@ def install_node(node_id, version=None):
 def all_versions_of_node(node_id):
 url = f"{base_url}/nodes/{node_id}/versions?statuses=NodeVersionStatusActive&statuses=NodeVersionStatusPending"

-response = requests.get(url, verify=not manager_util.bypass_ssl)
+response = requests.get(url)
 if response.status_code == 200:
 return response.json()
 else:

@@ -212,7 +211,6 @@ def read_cnr_info(fullpath):

 project = data.get('project', {})
 name = project.get('name').strip().lower()
-original_name = project.get('name')

 # normalize version
 # for example: 2.5 -> 2.5.0

@@ -224,7 +222,6 @@ def read_cnr_info(fullpath):
 if name and version: # repository is optional
 return {
 "id": name,
-"original_name": original_name,
 "version": version,
 "url": repository
 }
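The `read_cnr_info` hunks lowercase the `[project]` name to form the registry id, and `manager-v4` also keeps the untouched `original_name`. Only the `2.5 -> 2.5.0` comment of the version-normalization step is shown, so the zero-padding below is an assumption. So is reading the optional repository URL from `[project.urls].Repository`. `normalize_version` and `cnr_info_from_project` are hypothetical names that operate on an already-parsed `[project]` table.

```python
def normalize_version(version):
    # Assumed normalization: pad to three dot-separated parts (2.5 -> 2.5.0)
    parts = version.strip().split('.')
    while len(parts) < 3:
        parts.append('0')
    return '.'.join(parts)


def cnr_info_from_project(project):
    """Hypothetical sketch of read_cnr_info's mapping from a parsed [project] table."""
    raw_name = project.get('name')
    version = project.get('version')
    if not raw_name or not version:
        # The original requires both name and version; repository is optional
        return None
    return {
        "id": raw_name.strip().lower(),  # registry id is stripped and lowercased
        "original_name": raw_name,       # present only on manager-v4
        "version": normalize_version(version),
        # Assumption: repository URL taken from [project.urls]; may be None
        "url": project.get('urls', {}).get('Repository'),
    }
```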

@@ -241,7 +238,7 @@ def generate_cnr_id(fullpath, cnr_id):
 with open(cnr_id_path, "w") as f:
 return f.write(cnr_id)
 except Exception:
-logging.error(f"[ComfyUI Manager] unable to create file: {cnr_id_path}")
+print(f"[ComfyUI Manager] unable to create file: {cnr_id_path}")


 def read_cnr_id(fullpath):

@@ -34,7 +34,7 @@ manager_pip_blacklist_path = None
 manager_components_path = None
 manager_batch_history_path = None

-def update_user_directory(manager_dir):
+def update_user_directory(user_dir):
 global manager_files_path
 global manager_config_path
 global manager_channel_list_path

@@ -45,7 +45,7 @@ def update_user_directory(manager_dir):
 global manager_components_path
 global manager_batch_history_path

-manager_files_path = manager_dir
+manager_files_path = os.path.abspath(os.path.join(user_dir, 'default', 'ComfyUI-Manager'))
 if not os.path.exists(manager_files_path):
 os.makedirs(manager_files_path)

@@ -73,7 +73,7 @@ def update_user_directory(manager_dir):

 try:
 import folder_paths
-update_user_directory(folder_paths.get_system_user_directory("manager"))
+update_user_directory(folder_paths.get_user_directory())

 except Exception:
 # fallback:

@@ -106,3 +106,4 @@ def get_comfyui_tag():
 except Exception:
 return None

+

@@ -4,7 +4,6 @@ class NetworkMode(enum.Enum):
 PUBLIC = "public"
 PRIVATE = "private"
 OFFLINE = "offline"
-PERSONAL_CLOUD = "personal_cloud"

 class SecurityLevel(enum.Enum):
 STRONG = "strong"

@@ -46,8 +46,6 @@ def git_url(fullpath):

 for k, v in config.items():
 if k.startswith('remote ') and 'url' in v:
-if 'Comfy-Org/ComfyUI-Manager' in v['url']:
-return "https://github.com/ltdrdata/ComfyUI-Manager"
 return v['url']

 return None
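The `git_url` hunk walks the sections of a repository's git config and returns the URL of the first `remote ...` section. On `manager-v4`, any `Comfy-Org/ComfyUI-Manager` remote is additionally mapped back to the `ltdrdata` URL. A minimal sketch follows. It assumes the config is parsed with the standard-library `configparser`, since the parsing lines fall outside the hunk, and it takes the config text directly instead of a repository path. `git_url_from_config_text` is a hypothetical name.

```python
import configparser


def git_url_from_config_text(text, rewrite_comfy_org=True):
    """Return the URL of the first remote in a git config string, or None."""
    # interpolation=None so a '%' inside a URL is not treated specially
    config = configparser.ConfigParser(interpolation=None)
    config.read_string(text)
    # items() yields (section name, section proxy) pairs, including DEFAULT
    for k, v in config.items():
        if k.startswith('remote ') and 'url' in v:
            # manager-v4 behaviour: redirect the Comfy-Org repository URL
            if rewrite_comfy_org and 'Comfy-Org/ComfyUI-Manager' in v['url']:
                return "https://github.com/ltdrdata/ComfyUI-Manager"
            return v['url']
    return None
```

Git config sections such as `[remote "origin"]` parse as ordinary section names containing a space, which is why the `k.startswith('remote ')` prefix test works.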

@@ -55,11 +55,7 @@ def download_url(model_url: str, model_dir: str, filename: str):
 return aria2_download_url(model_url, model_dir, filename)
 else:
 from torchvision.datasets.utils import download_url as torchvision_download_url
-try:
-return torchvision_download_url(model_url, model_dir, filename)
-except Exception as e:
-logging.error(f"[ComfyUI-Manager] Failed to download: {model_url} / {repr(e)}")
-raise
+return torchvision_download_url(model_url, model_dir, filename)


 def aria2_find_task(dir: str, filename: str):

@@ -1,36 +0,0 @@
-from enum import Enum
-
-is_personal_cloud_mode = False
-handler_policy = {}
-
-class HANDLER_POLICY(Enum):
-MULTIPLE_REMOTE_BAN_NON_LOCAL = 1
-MULTIPLE_REMOTE_BAN_NOT_PERSONAL_CLOUD = 2
-BANNED = 3
-
-
-def is_loopback(address):
-import ipaddress
-try:
-return ipaddress.ip_address(address).is_loopback
-except ValueError:
-return False
-
-
-def do_nothing():
-pass
-
-
-def get_handler_policy(x):
-return handler_policy.get(x) or set()
-
-def add_handler_policy(x, policy):
-s = handler_policy.get(x)
-if s is None:
-s = set()
-handler_policy[x] = s
-
-s.add(policy)
-
-
-multiple_remote_alert = do_nothing
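The security module deleted above keeps a per-handler set of `HANDLER_POLICY` flags and a loopback check built on the standard `ipaddress` module. The sketch below exercises the same two ideas. It uses `collections.defaultdict` in place of the manual get-or-create branch in `add_handler_policy`. Note that `is_loopback` recognises only literal IP addresses, so a hostname such as `localhost` is reported as non-loopback.

```python
import ipaddress
from collections import defaultdict
from enum import Enum


class HANDLER_POLICY(Enum):
    MULTIPLE_REMOTE_BAN_NON_LOCAL = 1
    MULTIPLE_REMOTE_BAN_NOT_PERSONAL_CLOUD = 2
    BANNED = 3


# handler -> set of HANDLER_POLICY flags; defaultdict creates the set on first add
handler_policy = defaultdict(set)


def add_handler_policy(handler, policy):
    handler_policy[handler].add(policy)


def get_handler_policy(handler):
    # .get() does not trigger the default factory, so lookups never insert
    return handler_policy.get(handler) or set()


def is_loopback(address):
    try:
        return ipaddress.ip_address(address).is_loopback
    except ValueError:
        # Hostnames such as "localhost" and malformed strings land here
        return False
```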

@@ -15,7 +15,7 @@ import re
 import logging
 import platform
 import shlex
-from functools import lru_cache
+from . import cm_global


 cache_lock = threading.Lock()

@@ -25,7 +25,6 @@ comfyui_manager_path = os.path.abspath(os.path.join(os.path.dirname(__file__), '
 cache_dir = os.path.join(comfyui_manager_path, '.cache') # This path is also updated together in **manager_core.update_user_directory**.

 use_uv = False
-bypass_ssl = False

 def is_manager_pip_package():
 return not os.path.exists(os.path.join(comfyui_manager_path, '..', 'custom_nodes'))

@@ -39,64 +38,18 @@ def add_python_path_to_env():
 os.environ['PATH'] = os.path.dirname(sys.executable)+sep+os.environ['PATH']


-@lru_cache(maxsize=2)
-def get_pip_cmd(force_uv=False):
-"""
-Get the base pip command, with automatic fallback to uv if pip is unavailable.
-
-Args:
-force_uv (bool): If True, use uv directly without trying pip
-
-Returns:
-list: Base command for pip operations
-"""
-embedded = 'python_embeded' in sys.executable
-
-# Try pip first (unless forcing uv)
-if not force_uv:
-try:
-test_cmd = [sys.executable] + (['-s'] if embedded else []) + ['-m', 'pip', '--version']
-subprocess.check_output(test_cmd, stderr=subprocess.DEVNULL, timeout=5)
-return [sys.executable] + (['-s'] if embedded else []) + ['-m', 'pip']
-except Exception:
-logging.warning("[ComfyUI-Manager] python -m pip not available. Falling back to uv.")
-
-# Try uv (either forced or pip failed)
-import shutil
-
-# Try uv as Python module
-try:
-test_cmd = [sys.executable] + (['-s'] if embedded else []) + ['-m', 'uv', '--version']
-subprocess.check_output(test_cmd, stderr=subprocess.DEVNULL, timeout=5)
-logging.info("[ComfyUI-Manager] Using uv as Python module for pip operations.")
-return [sys.executable] + (['-s'] if embedded else []) + ['-m', 'uv', 'pip']
-except Exception:
-pass
-
-# Try standalone uv
-if shutil.which('uv'):
-logging.info("[ComfyUI-Manager] Using standalone uv for pip operations.")
-return ['uv', 'pip']
-
-# Nothing worked
-logging.error("[ComfyUI-Manager] Neither python -m pip nor uv are available. Cannot proceed with package operations.")
-raise Exception("Neither pip nor uv are available for package management")
-
-
 def make_pip_cmd(cmd):
-"""
-Create a pip command by combining the cached base pip command with the given arguments.
-
-Args:
-cmd (list): List of pip command arguments (e.g., ['install', 'package'])
-
-Returns:
-list: Complete command list ready for subprocess execution
-"""
-global use_uv
-base_cmd = get_pip_cmd(force_uv=use_uv)
-return base_cmd + cmd
-
+if 'python_embeded' in sys.executable:
+if use_uv:
+return [sys.executable, '-s', '-m', 'uv', 'pip'] + cmd
+else:
+return [sys.executable, '-s', '-m', 'pip'] + cmd
+else:
+# FIXED: https://github.com/ltdrdata/ComfyUI-Manager/issues/1667
+if use_uv:
+return [sys.executable, '-m', 'uv', 'pip'] + cmd
+else:
+return [sys.executable, '-m', 'pip'] + cmd

 # DON'T USE StrictVersion - cannot handle pre_release version
 # try:
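On `manager-v4`, `get_pip_cmd` probes `python -m pip`, then `python -m uv`, then a standalone `uv` on `PATH`, in that order. It adds `-s` for the embedded Python build and caches the result per `force_uv` value with `lru_cache(maxsize=2)`. The sketch below follows the same order. The probe and `shutil.which` are injected as parameters so the logic can be exercised without spawning processes, and the cache is left out so injected fakes are not memoised. `resolve_pip_cmd`, `_probe`, `probe` and `which` are hypothetical names, and `RuntimeError` stands in for the plain `Exception` raised in the original.

```python
import shutil
import subprocess
import sys


def _probe(cmd):
    # True if the command exits with status 0 within 5 seconds
    try:
        subprocess.check_output(cmd, stderr=subprocess.DEVNULL, timeout=5)
        return True
    except Exception:
        return False


def resolve_pip_cmd(force_uv=False, executable=sys.executable, probe=_probe, which=shutil.which):
    """Return the base pip command, following get_pip_cmd's fallback order."""
    # The embedded (portable) build runs with -s: skip the user site directory
    prefix = [executable] + (['-s'] if 'python_embeded' in executable else [])
    if not force_uv and probe(prefix + ['-m', 'pip', '--version']):
        return prefix + ['-m', 'pip']
    if probe(prefix + ['-m', 'uv', '--version']):
        return prefix + ['-m', 'uv', 'pip']
    if which('uv'):
        return ['uv', 'pip']
    raise RuntimeError("Neither pip nor uv are available for package management")


def make_pip_cmd(cmd, use_uv=False):
    # Combine the resolved base command with the caller's arguments
    return resolve_pip_cmd(force_uv=use_uv) + cmd
```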

@@ -187,7 +140,7 @@ async def get_data(uri, silent=False):
 print(f"FETCH DATA from: {uri}", end="")

 if uri.startswith("http"):
-async with aiohttp.ClientSession(trust_env=True, connector=aiohttp.TCPConnector(verify_ssl=not bypass_ssl)) as session:
+async with aiohttp.ClientSession(trust_env=True, connector=aiohttp.TCPConnector(verify_ssl=False)) as session:
 headers = {
 'Cache-Control': 'no-cache',
 'Pragma': 'no-cache',

@@ -377,32 +330,6 @@ torch_torchvision_torchaudio_version_map = {
 }


-def torch_rollback(prev):
-spec = prev.split('+')
-if len(spec) > 1:
-platform = spec[1]
-else:
-cmd = make_pip_cmd(['install', '--force', 'torch', 'torchvision', 'torchaudio'])
-subprocess.check_output(cmd, universal_newlines=True)
-logging.error(cmd)
-return
-
-torch_ver = StrictVersion(spec[0])
-torch_ver = f"{torch_ver.major}.{torch_ver.minor}.{torch_ver.patch}"
-torch_torchvision_torchaudio_ver = torch_torchvision_torchaudio_version_map.get(torch_ver)
-
-if torch_torchvision_torchaudio_ver is None:
-cmd = make_pip_cmd(['install', '--pre', 'torch', 'torchvision', 'torchaudio',
-'--index-url', f"https://download.pytorch.org/whl/nightly/{platform}"])
-logging.info("[ComfyUI-Manager] restore PyTorch to nightly version")
-else:
-torchvision_ver, torchaudio_ver = torch_torchvision_torchaudio_ver
-cmd = make_pip_cmd(['install', f'torch=={torch_ver}', f'torchvision=={torchvision_ver}', f"torchaudio=={torchaudio_ver}",
-'--index-url', f"https://download.pytorch.org/whl/{platform}"])
-logging.info(f"[ComfyUI-Manager] restore PyTorch to {torch_ver}+{platform}")
-
-subprocess.check_output(cmd, universal_newlines=True)
-

 class PIPFixer:
 def __init__(self, prev_pip_versions, comfyui_path, manager_files_path):

@@ -410,6 +337,32 @@ class PIPFixer:
 self.comfyui_path = comfyui_path
 self.manager_files_path = manager_files_path

+def torch_rollback(self):
+spec = self.prev_pip_versions['torch'].split('+')
+if len(spec) > 0:
+platform = spec[1]
+else:
+cmd = make_pip_cmd(['install', '--force', 'torch', 'torchvision', 'torchaudio'])
+subprocess.check_output(cmd, universal_newlines=True)
+logging.error(cmd)
+return
+
+torch_ver = StrictVersion(spec[0])
+torch_ver = f"{torch_ver.major}.{torch_ver.minor}.{torch_ver.patch}"
+torch_torchvision_torchaudio_ver = torch_torchvision_torchaudio_version_map.get(torch_ver)
+
+if torch_torchvision_torchaudio_ver is None:
+cmd = make_pip_cmd(['install', '--pre', 'torch', 'torchvision', 'torchaudio',
+'--index-url', f"https://download.pytorch.org/whl/nightly/{platform}"])
+logging.info("[ComfyUI-Manager] restore PyTorch to nightly version")
+else:
+torchvision_ver, torchaudio_ver = torch_torchvision_torchaudio_ver
+cmd = make_pip_cmd(['install', f'torch=={torch_ver}', f'torchvision=={torchvision_ver}', f"torchaudio=={torchaudio_ver}",
+'--index-url', f"https://download.pytorch.org/whl/{platform}"])
+logging.info(f"[ComfyUI-Manager] restore PyTorch to {torch_ver}+{platform}")
+
+subprocess.check_output(cmd, universal_newlines=True)
+
 def fix_broken(self):
 new_pip_versions = get_installed_packages(True)
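Both versions of `torch_rollback` split the recorded torch version on `+` to recover the wheel platform tag (for example `cu121`). They then either pin matching `torchvision`/`torchaudio` versions from `torch_torchvision_torchaudio_version_map` or fall back to the nightly index. `spec[1]` exists only when the split yields two parts, which is exactly what the `len(spec) > 1` guard on `manager-v4` checks. `str.split` always returns at least one element, so the `len(spec) > 0` test on `feat/compl` is always true. A version string without a `+` suffix would therefore raise `IndexError` there.

The sketch below only builds the pip argument list and does not run pip. It keeps `major.minor.patch` with a plain split rather than the module's own `StrictVersion`. `plan_torch_rollback` and the one-entry version map in the usage are illustrative assumptions.

```python
def plan_torch_rollback(prev, version_map):
    """Return the pip arguments a rollback to `prev` would use (not executed)."""
    spec = prev.split('+')
    if len(spec) < 2:
        # No platform suffix: reinstall without pinning, as the else-branch does
        return ['install', '--force', 'torch', 'torchvision', 'torchaudio']
    platform = spec[1]
    # Keep only major.minor.patch (assumption: plain numeric release string)
    torch_ver = '.'.join(spec[0].split('.')[:3])
    pair = version_map.get(torch_ver)
    if pair is None:
        # Unknown release: fall back to the nightly index for this platform
        return ['install', '--pre', 'torch', 'torchvision', 'torchaudio',
                '--index-url', f"https://download.pytorch.org/whl/nightly/{platform}"]
    torchvision_ver, torchaudio_ver = pair
    return ['install', f'torch=={torch_ver}', f'torchvision=={torchvision_ver}',
            f'torchaudio=={torchaudio_ver}',
            '--index-url', f"https://download.pytorch.org/whl/{platform}"]
```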

@@ -431,7 +384,7 @@ class PIPFixer:
 elif self.prev_pip_versions['torch'] != new_pip_versions['torch'] \
 or self.prev_pip_versions['torchvision'] != new_pip_versions['torchvision'] \
 or self.prev_pip_versions['torchaudio'] != new_pip_versions['torchaudio']:
-torch_rollback(self.prev_pip_versions['torch'])
+self.torch_rollback()
 except Exception as e:
 logging.error("[ComfyUI-Manager] Failed to restore PyTorch")
 logging.error(e)

@@ -462,14 +415,32 @@ class PIPFixer:

 if len(targets) > 0:
 for x in targets:
-cmd = make_pip_cmd(['install', f"{x}=={versions[0].version_string}"])
-subprocess.check_output(cmd, universal_newlines=True)
+if sys.version_info < (3, 13):
+cmd = make_pip_cmd(['install', f"{x}=={versions[0].version_string}", "numpy<2"])
+subprocess.check_output(cmd, universal_newlines=True)

 logging.info(f"[ComfyUI-Manager] 'opencv' dependencies were fixed: {targets}")
 except Exception as e:
 logging.error("[ComfyUI-Manager] Failed to restore opencv")
 logging.error(e)

+# fix numpy
+if sys.version_info >= (3, 13):
+logging.info("[ComfyUI-Manager] In Python 3.13 and above, PIP Fixer does not downgrade `numpy` below version 2.0. If you need to force a downgrade of `numpy`, please use `pip_auto_fix.list`.")
+else:
+try:
+np = new_pip_versions.get('numpy')
+if cm_global.pip_overrides.get('numpy') == 'numpy<2':
+if np is not None:
+if StrictVersion(np) >= StrictVersion('2'):
+cmd = make_pip_cmd(['install', "numpy<2"])
+subprocess.check_output(cmd , universal_newlines=True)
+
+logging.info("[ComfyUI-Manager] 'numpy' dependency were fixed")
+except Exception as e:
+logging.error("[ComfyUI-Manager] Failed to restore numpy")
+logging.error(e)
+
 # fix missing frontend
 try:
 # NOTE: package name in requirements is 'comfyui-frontend-package'
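The numpy step added to `fix_broken` on `feat/compl` acts only below Python 3.13, and only while `pip_overrides` still carries the `numpy<2` pin. Under those conditions it downgrades an installed `numpy` whose version is 2 or higher. A minimal sketch of that decision as a pure function follows. It compares only the leading major number instead of using the module's `StrictVersion`, which is a simplification. `should_downgrade_numpy` is a hypothetical name.

```python
import sys


def should_downgrade_numpy(installed, pip_overrides, version_info=sys.version_info):
    """Return True when the numpy step would run `pip install "numpy<2"`."""
    if tuple(version_info[:2]) >= (3, 13):
        # 3.13 and above: PIP Fixer leaves numpy >= 2 alone
        return False
    if pip_overrides.get('numpy') != 'numpy<2' or installed is None:
        return False
    # Compare only the major component, e.g. "2.1.3" -> 2
    return int(installed.split('.')[0]) >= 2
```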

@@ -569,69 +540,3 @@ def robust_readlines(fullpath):

 print(f"[ComfyUI-Manager] Failed to recognize encoding for: {fullpath}")
 return []
-
-
-def restore_pip_snapshot(pips, options):
-non_url = []
-local_url = []
-non_local_url = []
-
-for k, v in pips.items():
-# NOTE: skip torch related packages
-if k.startswith("torch==") or k.startswith("torchvision==") or k.startswith("torchaudio==") or k.startswith("nvidia-"):
-continue
-
-if v == "":
-non_url.append(k)
-else:
-if v.startswith('file:'):
-local_url.append(v)
-else:
-non_local_url.append(v)
-
-
-# restore other pips
-failed = []
-if '--pip-non-url' in options:
-# try all at once