
Vision-based PDF Multi-Vector Demos (macOS/MPS)

This folder contains two demos to index PDF pages as images and run multi-vector retrieval with ColPali/ColQwen2, plus optional similarity map visualization and answer generation.

What you’ll run

- `multi-vector-leann-paper-example.py`: local PDF → pages → embed → build HNSW index → search.
- `multi-vector-leann-similarity-map.py`: HF dataset (default) or local pages → embed → index → retrieve → similarity maps → optional Qwen-VL answer.
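Both demos rank pages with late interaction, the multi-vector scoring that ColPali and ColQwen2 are built around: each query-token embedding is matched against its most similar page-patch embedding, and those per-token maxima are summed (MaxSim). The sketch below shows that score in plain Python on toy 2-d vectors. The function names and data are hypothetical, and this exhaustive version skips the HNSW index the demos build, so treat it as an illustration of the scoring rule rather than the scripts' code.

```python
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embedding dimensions differ")
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs: Sequence[Vector], page_vecs: Sequence[Vector]) -> float:
    """Late-interaction (MaxSim) score: for each query-token embedding, take its
    best match among the page-patch embeddings, then sum over query tokens."""
    return sum(max(dot(q, p) for p in page_vecs) for q in query_vecs)


def rank_pages(
    query_vecs: Sequence[Vector], pages: Dict[str, Sequence[Vector]]
) -> List[Tuple[str, float]]:
    """Score every page exhaustively (hypothetical helper, no index) and sort best-first."""
    scored = [(name, maxsim_score(query_vecs, vecs)) for name, vecs in pages.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Toy embeddings: two query tokens, two candidate pages.
query = [[1.0, 0.0], [0.0, 1.0]]
pages = {
    "page_a": [[0.9, 0.1], [0.2, 0.8]],  # one patch matches each query token well
    "page_b": [[0.5, 0.5]],              # a single, middling patch
}
ranking = rank_pages(query, pages)
print(ranking)
```

`page_a` wins because each query token finds its own strong patch; that per-token matching is what distinguishes multi-vector retrieval from comparing a single pooled vector per page.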

Prerequisites (macOS)

1) Homebrew poppler (for pdf2image)

```bash
brew install poppler
which pdfinfo && pdfinfo -v
```

2) Python environment

Use uv (recommended) or pip. Python 3.9+.

Using uv:

```bash
uv pip install \
  colpali_engine \
  pdf2image \
  pillow \
  matplotlib qwen_vl_utils \
  einops \
  seaborn
```
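To confirm both prerequisites before running a demo, a small check script can look for poppler's `pdfinfo` on `PATH` and for each installed package's import name. This is a sketch, not part of the repository; the function name is hypothetical, and the mapping of `pillow` to the `PIL` import name is the only non-obvious entry.

```python
import importlib.util
import shutil
from typing import Dict, Sequence

# Import names for the packages installed above (pillow is imported as PIL).
REQUIRED_MODULES = [
    "colpali_engine", "pdf2image", "PIL", "matplotlib",
    "qwen_vl_utils", "einops", "seaborn",
]


def check_prerequisites(modules: Sequence[str] = REQUIRED_MODULES) -> Dict[str, bool]:
    """Hypothetical helper: map each prerequisite to whether it is available."""
    status = {"pdfinfo (poppler)": shutil.which("pdfinfo") is not None}
    for name in modules:
        # find_spec returns None for a missing top-level module instead of raising.
        status[name] = importlib.util.find_spec(name) is not None
    return status


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{'OK     ' if ok else 'MISSING'} {name}")
```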

Notes:

- On first run, models are downloaded from Hugging Face; log in or configure access if needed.

- The scripts auto-select the device in the order CUDA > MPS > CPU. To verify MPS:

```bash
python -c "import torch; print('MPS available:', bool(getattr(torch.backends, 'mps', None) and torch.backends.mps.is_available()))"
```
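The CUDA > MPS > CPU order can be written as a small helper. This sketch assumes only that preference order; the function names are hypothetical and the scripts' own selection code may differ. The pure `pick_device` function is kept separate from the PyTorch query so the ordering logic can be checked without a GPU.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Apply the CUDA > MPS > CPU preference order."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def detect_device() -> str:
    """Hypothetical wrapper that asks PyTorch which backends exist (needs torch)."""
    import torch  # imported lazily so pick_device stays usable without torch

    mps = getattr(torch.backends, "mps", None)  # absent on older PyTorch builds
    return pick_device(
        cuda_available=torch.cuda.is_available(),
        mps_available=bool(mps is not None and mps.is_available()),
    )
```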

Run the demos

A) Local PDF example

Converts a local PDF into page images, embeds them, builds an index, and searches.

```bash
cd apps/multimodal/vision-based-pdf-multi-vector

# If you don't have the sample PDF locally, download it (ignored by Git)
mkdir -p pdfs
curl -L -o pdfs/2004.12832v2.pdf https://arxiv.org/pdf/2004.12832.pdf
ls pdfs/2004.12832v2.pdf

# Ensure the output dir exists
mkdir -p pages

python multi-vector-leann-paper-example.py
```

Expected:

- Page images in `pages/`.
- Console output such as `Using device=mps, dtype=...`, plus the retrieved file paths for each query.

To use your own PDF, edit `pdf_path` near the top of the script.
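The first step of this demo, turning PDF pages into images, can be sketched with `pdf2image.convert_from_path`, which renders each page to a PIL image through poppler. The output naming scheme, the `dpi` default, and both function names below are hypothetical; the example script may name and size its page images differently.

```python
from pathlib import Path
from typing import List


def page_image_path(pages_dir: str, pdf_path: str, page_number: int) -> Path:
    """Hypothetical naming scheme: <pages_dir>/<pdf stem>_page_<n>.png (1-based)."""
    return Path(pages_dir) / f"{Path(pdf_path).stem}_page_{page_number}.png"


def pdf_to_page_images(pdf_path: str, pages_dir: str = "pages", dpi: int = 200) -> List[Path]:
    """Render every page of pdf_path to PNG via pdf2image (needs poppler on PATH)."""
    from pdf2image import convert_from_path  # lazy import: only needed for conversion

    Path(pages_dir).mkdir(parents=True, exist_ok=True)
    saved = []
    for number, image in enumerate(convert_from_path(pdf_path, dpi=dpi), start=1):
        out = page_image_path(pages_dir, pdf_path, number)
        image.save(out)  # PIL infers PNG from the .png suffix
        saved.append(out)
    return saved
```

For example, `pdf_to_page_images("pdfs/2004.12832v2.pdf")` would write one PNG per page into `pages/`.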

B) Similarity map + answer demo

Uses the Hugging Face dataset `weaviate/arXiv-AI-papers-multi-vector` by default; set `USE_HF_DATASET = False` to use local pages instead.

```bash
cd apps/multimodal/vision-based-pdf-multi-vector
python multi-vector-leann-similarity-map.py
```

Artifacts (when enabled):

- Retrieved pages: `./figures/retrieved_page_rank{K}.png`
- Similarity maps: `./figures/similarity_map_rank{K}.png`

Key knobs in the script (top of file):

- `QUERY`: your question
- `MODEL`: `"colqwen2"` or `"colpali"`
- `USE_HF_DATASET`: set `False` to use local pages
- `PDF`, `PAGES_DIR`: for local mode
- `INDEX_PATH`, `TOPK`, `FIRST_STAGE_K`, `REBUILD_INDEX`
- `SIMILARITY_MAP`, `SIM_TOKEN_IDX`, `SIM_OUTPUT`
- `ANSWER`, `MAX_NEW_TOKENS` (Qwen-VL)
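The pairing of `FIRST_STAGE_K` and `TOPK` suggests a two-stage search: an approximate index returns a candidate pool, which is then re-scored exactly and trimmed to the final results. The sketch below assumes that reading (it is not taken from the script) and stands in for the HNSW index with a plain dictionary of precomputed first-stage scores; `two_stage_search` and the scores are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def two_stage_search(
    first_stage_scores: Dict[str, float],
    rerank: Callable[[str], float],
    first_stage_k: int,
    topk: int,
) -> List[Tuple[str, float]]:
    """Keep the first_stage_k best candidates by the cheap first-stage score,
    re-score only those with the exact scorer, and return the topk best."""
    if topk > first_stage_k:
        raise ValueError("topk must not exceed first_stage_k")
    candidates = sorted(
        first_stage_scores, key=lambda doc: first_stage_scores[doc], reverse=True
    )
    reranked = [(doc, rerank(doc)) for doc in candidates[:first_stage_k]]
    reranked.sort(key=lambda pair: pair[1], reverse=True)
    return reranked[:topk]


# Hypothetical scores: p3 has the best exact score but a poor first-stage score.
coarse = {"p1": 0.9, "p2": 0.8, "p3": 0.1}
exact = {"p1": 1.2, "p2": 1.5, "p3": 3.0}
results = two_stage_search(coarse, exact.__getitem__, first_stage_k=2, topk=1)
print(results)
```

Here `p3` never reaches the rerank stage, which illustrates the usual trade-off in this kind of design: a larger first-stage pool costs more exact scoring but lowers the chance of missing a relevant page.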

Troubleshooting

- pdf2image errors on macOS: ensure `brew install poppler` succeeded and `pdfinfo` works in a terminal.
- Slow or OOM on MPS: reduce the dataset size (e.g., set `MAX_DOCS`) or switch to CPU.
- NaNs on MPS: keep fp32 on MPS (the default in the similarity-map script); avoid fp16 there.
- First-run model downloads can be large; ensure network access (use HF mirrors if needed).
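A dtype rule that follows the fp32-on-MPS advice, plus a quick way to tell whether a batch of embeddings has gone NaN, might look like the sketch below. Only the MPS rule comes from the notes above; the CPU fp32 choice and the `bfloat16` CUDA default are illustrative assumptions, and both helper names are hypothetical. Returning a dtype *name* keeps the helper free of a torch import; with torch installed, `getattr(torch, choose_dtype_name(device))` turns it into a real dtype.

```python
import math
from typing import Sequence


def choose_dtype_name(device: str, cuda_dtype: str = "bfloat16") -> str:
    """Return a torch dtype name for the device.

    MPS stays on float32, as the troubleshooting notes recommend (fp16 there can
    yield NaNs). ASSUMPTION: float32 on CPU and cuda_dtype="bfloat16" on CUDA are
    illustrative defaults, not settings taken from the scripts.
    """
    if device == "cuda":
        return cuda_dtype
    return "float32"  # MPS (per the notes) and CPU (assumed)


def count_nan_rows(embeddings: Sequence[Sequence[float]]) -> int:
    """Count embedding vectors that contain at least one NaN."""
    return sum(any(math.isnan(x) for x in row) for row in embeddings)
```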

Notes

- Index files are stored under `./indexes/`. Delete them or set `REBUILD_INDEX = True` to rebuild.
- For local PDFs, page images go to `./pages/`.
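The reuse-or-rebuild behaviour described in these notes amounts to a simple check: build when `REBUILD_INDEX` is set or nothing exists at `INDEX_PATH` yet, otherwise load what is there. The sketch below assumes exactly that rule; `build_or_load` and its `build`/`load` callbacks are hypothetical placeholders, not LEANN APIs.

```python
from pathlib import Path
from typing import Callable, TypeVar

T = TypeVar("T")


def build_or_load(
    index_path: str,
    rebuild_index: bool,
    build: Callable[[str], T],
    load: Callable[[str], T],
) -> T:
    """Rebuild when asked to or when no index exists yet; otherwise reuse it."""
    if rebuild_index or not Path(index_path).exists():
        return build(index_path)
    return load(index_path)
```

Passing the build and load steps in as callables keeps the caching decision testable on its own, independent of how the index is actually written to disk.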

Retrieval and Visualization Example

Example settings in multi-vector-leann-similarity-map.py:

- `QUERY = "How does DeepSeek-V2 compare against the LLaMA family of LLMs?"`
- `SIMILARITY_MAP = True` (to generate heatmaps)
- `TOPK = 1` (save the top retrieved page and its similarity map)

Run:

```bash
cd apps/multimodal/vision-based-pdf-multi-vector
python multi-vector-leann-similarity-map.py
```

Outputs (by default):

- Retrieved page: `./figures/retrieved_page_rank1.png`
- Similarity map: `./figures/similarity_map_rank1.png`

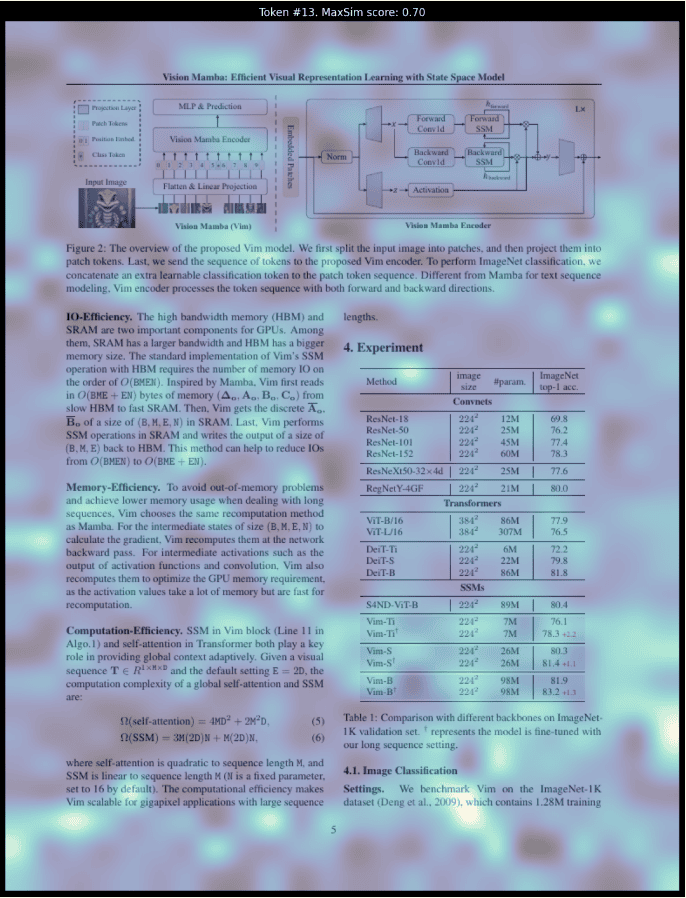

Sample visualization (example result, and the query is "QUERY = "How does Vim model performance and efficiency compared to other models?"

"):

Notes:

- Set `SIM_TOKEN_IDX` to visualize a specific token index; set it to `-1` to auto-select the most salient token.
- If you change `SIM_OUTPUT` to a file path (e.g., `./figures/my_map.png`), multiple ranks are saved as `my_map_rank{K}.png`.
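Both notes can be sketched as small helpers. The README does not define "most salient", so the sketch assumes it means the query token whose best patch similarity is highest, and it assumes the per-rank files stay in the same directory as `SIM_OUTPUT`; the function names and the `token_patch_sims` layout (one row per query token, one column per patch) are hypothetical.

```python
from pathlib import Path
from typing import Sequence


def select_token_idx(token_patch_sims: Sequence[Sequence[float]], sim_token_idx: int) -> int:
    """Return sim_token_idx as given, or, when it is -1, the query token whose
    best patch similarity is highest (ASSUMED meaning of "most salient")."""
    if sim_token_idx != -1:
        return sim_token_idx
    return max(range(len(token_patch_sims)), key=lambda i: max(token_patch_sims[i]))


def rank_output_path(sim_output: str, rank: int) -> Path:
    """Insert the rank before the suffix: my_map.png -> my_map_rank{rank}.png
    in the same directory (ASSUMED to match the script's behaviour)."""
    path = Path(sim_output)
    return path.with_name(f"{path.stem}_rank{rank}{path.suffix}")
```

For example, `rank_output_path("./figures/my_map.png", 2)` gives `figures/my_map_rank2.png`.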