Compare commits

116 Commits

draft-v4-c

...

tests/api-

| Author | SHA1 | Date |
|---|---|---|
| | da87651e53 | |
| | 416122d61d | |
| | d3c625e791 | |
| | ca2c41783c | |
| | e2a6446585 | |
| | 839790b5ab | |
| | 58b9946936 | |
| | a19ba22eaf | |
| | 117715aa22 | |
| | 891a5a85ee | |
| | 166debfabb | |
| | 7258a09fe5 | |
| | 058a436187 | |
| | 1950802c55 | |
| | eb52a03372 | |
| | f8aa428be3 | |
| | ec0893f136 | |
| | 92b99ea963 | |
| | 02cd52bb65 | |
| | af1ec2c87b | |
| | 41006c3a33 | |
| | 116a6d500d | |
| | 87d0ac807f | |
| | fc943172eb | |
| | 9daa5a2fbd | |
| | b7b2746a61 | |
| | d66a4fbfc8 | |
| | 683a172ad8 | |
| | 6e12358f5a | |
| | 8bcf16dc90 | |
| | 65c0a2a1f5 | |
| | 115236eb9c | |
| | 08de942abe | |
| | e9dff83290 | |
| | 3bc6c7584d | |
| | 22a2bf1584 | |
| | 79ece5f72c | |
| | 5da6fe1373 | |
| | 48c10d0b95 | |
| | 9bb56b1457 | |
| | 83420fd828 | |
| | 52f4b9506f | |
| | b501e9b20b | |
| | 1f7ae5319a | |
| | 68c201239d | |
| | 6e4e43f612 | |
| | 81c3708f39 | |
| | f4d2bbde34 | |
| | d14b42a42c | |
| | 0e9c32344c | |
| | 30c4ea06af | |
| | 8211264993 | |
| | 67cf5b49e1 | |
| | 8e7ba18e05 | |
| | 8359e1063e | |
| | ca078e54b9 | |
| | f7e930c5a2 | |
| | 479d95e1c8 | |
| | 2b0ff08eef | |
| | 67a487db15 | |
| | 2488cb3458 | |
| | 157e6336fa | |
| | d808a1f406 | |
| | 2bb4d8cd63 | |
| | a8164e1631 | |
| | a31d286945 | |
| | 12eeef4cf0 | |
| | ce8e6dc36e | |
| | 7a32e544a7 | |
| | e16e9d7a0e | |
| | 821f908dbc | |
| | e007e6f897 | |
| | 94f496fd65 | |
| | d2ce35d2e6 | |
| | 2eeebb32dc | |
| | f6d636d82f | |
| | 0cd397623e | |
| | 5978b6c9ee | |
| | 9e132811bc | |
| | 3a3b5c1f92 | |
| | 26be01ff82 | |
| | 8f6dd92374 | |
| | d50b71a887 | |
| | 3bc9cbc767 | |
| | b6f6b4fd8a | |
| | a66bada8a3 | |
| | a804f7de19 | |
| | 72a61a9966 | |
| | b08bb658ea | |
| | 7b28bf608b | |
| | b57747fdf1 | |
| | 0735271b10 | |
| | 770cd0f9f5 | |
| | 32b6266dd9 | |
| | 2a8412a2bf | |
| | 0c4d289002 | |
| | cee01fec25 | |
| | f00686f3f2 | |
| | bd33f7726e | |
| | 22ab526b0c | |
| | af269d198d | |
| | 995ef6356e | |
| | aa3bf77c28 | |
| | 15667c1259 | |
| | c7b6b565da | |
| | 3214ab52c6 | |
| | e3062ff613 | |
| | 036b63efe7 | |
| | 8d3e1d60d0 | |
| | 59876452f4 | |
| | 04972ad87f | |
| | c7e69f4e26 | |
| | 7a59b6d0d9 | |
| | d227ad97a4 | |
| | b93a474dae | |
| | a5fe075bf3 | |

@@ -1 +0,0 @@

PYPI_TOKEN=your-pypi-token

58

.github/workflows/publish-to-pypi.yml

vendored


@@ -1,58 +0,0 @@

    name: Publish to PyPI

    on:
      workflow_dispatch:
      push:
        branches:
          - main
        paths:
          - "pyproject.toml"

    jobs:
      build-and-publish:
        runs-on: ubuntu-latest
        if: ${{ github.repository_owner == 'ltdrdata' || github.repository_owner == 'Comfy-Org' }}
        steps:
          - name: Checkout code
            uses: actions/checkout@v4
            with:
              fetch-depth: 0

          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.9'

          - name: Install build dependencies
            run: |
              python -m pip install --upgrade pip
              python -m pip install build twine

          - name: Get current version
            id: current_version
            run: |
              CURRENT_VERSION=$(grep -oP 'version = "\K[^"]+' pyproject.toml)
              echo "version=$CURRENT_VERSION" >> $GITHUB_OUTPUT
              echo "Current version: $CURRENT_VERSION"

          - name: Build package
            run: python -m build

          - name: Create GitHub Release
            id: create_release
            uses: softprops/action-gh-release@v2
            env:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
            with:
              files: dist/*
              tag_name: v${{ steps.current_version.outputs.version }}
              draft: false
              prerelease: false
              generate_release_notes: true

          - name: Publish to PyPI
            uses: pypa/gh-action-pypi-publish@release/v1
            with:
              password: ${{ secrets.PYPI_TOKEN }}
              skip-existing: true
              verbose: true
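Review note: the "Get current version" step in the workflow above pulls the version out of `pyproject.toml` with an unanchored `grep` pattern. A minimal Python sketch of the same extraction follows; `read_version` and the sample text are hypothetical names used only for illustration, and unlike `grep` (which prints every match) the sketch returns only the first one.

```python
import re
from typing import Optional

# Same pattern as the workflow's grep: version = "<anything but a quote>"
VERSION_RE = re.compile(r'version = "([^"]+)"')


def read_version(pyproject_text: str) -> Optional[str]:
    """Return the first `version = "..."` value found, or None if there is none."""
    match = VERSION_RE.search(pyproject_text)
    return match.group(1) if match else None


# Hypothetical pyproject.toml content, for illustration only.
sample = '[project]\nname = "comfyui-manager"\nversion = "0.0.1rc1"\n'
version = read_version(sample)
tag_name = f"v{version}"  # mirrors tag_name: v${{ steps.current_version.outputs.version }}
print(tag_name)
```

Because the pattern is not anchored to the start of a line, a key such as `target-version = "py39"` would also match, so the order of keys in the file matters for this kind of extraction.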

2

.github/workflows/publish.yml

vendored


@@ -14,7 +14,7 @@ jobs:

|

||||

publish-node:

|

||||

name: Publish Custom Node to registry

|

||||

runs-on: ubuntu-latest

|

||||

if: ${{ github.repository_owner == 'ltdrdata' || github.repository_owner == 'Comfy-Org' }}

|

||||

if: ${{ github.repository_owner == 'ltdrdata' }}

|

||||

steps:

|

||||

- name: Check out code

|

||||

uses: actions/checkout@v4

|

||||

|

||||

5

.gitignore

vendored


@@ -17,7 +17,4 @@ github-stats-cache.json

|

||||

pip_overrides.json

|

||||

*.json

|

||||

check2.sh

|

||||

/venv/

|

||||

build

|

||||

*.egg-info

|

||||

.env

|

||||

/venv/

|

||||

@@ -1,47 +0,0 @@

## Testing Changes

1. Activate the ComfyUI environment.

2. Build the package locally after making changes.

```bash
# from inside the ComfyUI-Manager directory, with the ComfyUI environment activated
python -m build
```

3. Install the package locally in the ComfyUI environment.

```bash
# Uninstall the existing package
pip uninstall comfyui-manager

# Install the local package
pip install dist/comfyui-manager-*.whl
```

4. Start ComfyUI.

```bash
# after navigating to the ComfyUI directory
python main.py
```

## Manually Publish Test Version to PyPI

1. Set the `PYPI_TOKEN` environment variable in the env file.

2. If manually publishing, you likely want to use a release candidate version, so set the version in [pyproject.toml](pyproject.toml) to something like `0.0.1rc1`.

3. Build the package.

```bash
python -m build
```

4. Upload the package to PyPI.

```bash
python -m twine upload dist/* --username __token__ --password $PYPI_TOKEN
```

5. View the release at https://pypi.org/project/comfyui-manager/

|

||||

@@ -1,7 +0,0 @@

include comfyui_manager/js/*
include comfyui_manager/*.json
include comfyui_manager/glob/*
include LICENSE.txt
include README.md
include requirements.txt
include pyproject.toml

|

||||

81

README.md


@@ -5,7 +5,6 @@

|

||||

|

||||

|

||||

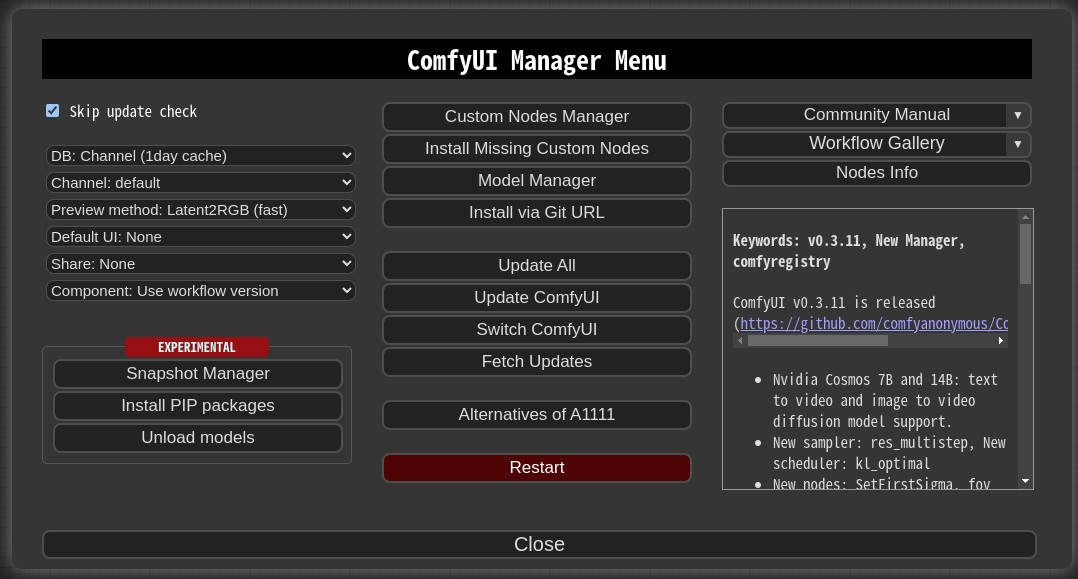

## NOTICE

|

||||

* V4.0: Modify the structure to be installable via pip instead of using git clone.

|

||||

* V3.16: Support for `uv` has been added. Set `use_uv` in `config.ini`.

|

||||

* V3.10: `double-click feature` is removed

|

||||

* This feature has been moved to https://github.com/ltdrdata/comfyui-connection-helper

|

||||

@@ -14,26 +13,78 @@

|

||||

|

||||

## Installation

|

||||

|

||||

* When installing the latest ComfyUI, it will be automatically installed as a dependency, so manual installation is no longer necessary.

|

||||

### Installation[method1] (General installation method: ComfyUI-Manager only)

|

||||

|

||||

* Manual installation of the nightly version:

|

||||

* Clone to a temporary directory (**Note:** Do **not** clone into `ComfyUI/custom_nodes`.)

|

||||

```

|

||||

git clone https://github.com/Comfy-Org/ComfyUI-Manager

|

||||

```

|

||||

* Install via pip

|

||||

```

|

||||

cd ComfyUI-Manager

|

||||

pip install .

|

||||

```

|

||||

To install ComfyUI-Manager on top of an existing ComfyUI installation, follow these steps:

|

||||

|

||||

1. Go to the `ComfyUI/custom_nodes` directory in a terminal (cmd)

|

||||

2. `git clone https://github.com/ltdrdata/ComfyUI-Manager comfyui-manager`

|

||||

3. Restart ComfyUI

|

||||

|

||||

|

||||

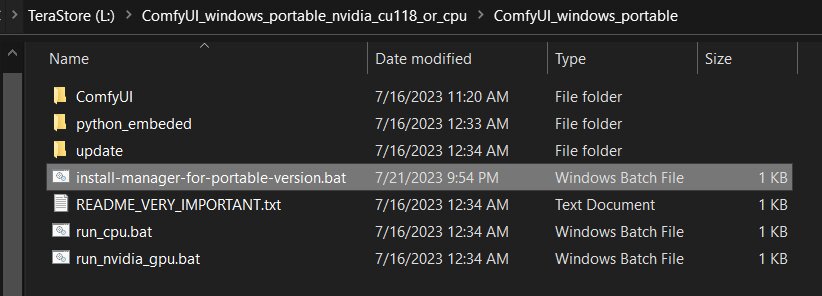

### Installation[method2] (Installation for portable ComfyUI version: ComfyUI-Manager only)

|

||||

1. Install git

|

||||

- https://git-scm.com/download/win

|

||||

- standalone version

|

||||

- select option: use windows default console window

|

||||

2. Download [scripts/install-manager-for-portable-version.bat](https://github.com/ltdrdata/ComfyUI-Manager/raw/main/scripts/install-manager-for-portable-version.bat) into installed `"ComfyUI_windows_portable"` directory

|

||||

- Don't click the link. Right-click it and choose "Save as..."

|

||||

3. Double-click the `install-manager-for-portable-version.bat` batch file

|

||||

|

||||

|

||||

|

||||

|

||||

### Installation[method3] (Installation through comfy-cli: install ComfyUI and ComfyUI-Manager at once.)

|

||||

> RECOMMENDED: comfy-cli provides various features to manage ComfyUI from the CLI.

|

||||

|

||||

* **prerequisite: python 3, git**

|

||||

|

||||

Windows:

|

||||

```commandline

|

||||

python -m venv venv

|

||||

venv\Scripts\activate

|

||||

pip install comfy-cli

|

||||

comfy install

|

||||

```

|

||||

|

||||

Linux/OSX:

|

||||

```commandline

|

||||

python -m venv venv

|

||||

. venv/bin/activate

|

||||

pip install comfy-cli

|

||||

comfy install

|

||||

```

|

||||

* See also: https://github.com/Comfy-Org/comfy-cli

|

||||

|

||||

|

||||

## Front-end

|

||||

### Installation[method4] (Installation for linux+venv: ComfyUI + ComfyUI-Manager)

|

||||

|

||||

* The built-in front-end of ComfyUI-Manager is the legacy front-end. The front-end for ComfyUI-Manager is now provided via [ComfyUI Frontend](https://github.com/Comfy-Org/ComfyUI_frontend).

|

||||

* To enable the legacy front-end, set the environment variable `ENABLE_LEGACY_COMFYUI_MANAGER_FRONT` to `true` before running.

|

||||

To install ComfyUI with ComfyUI-Manager on Linux using a venv environment, you can follow these steps:

|

||||

* **prerequisite: python-is-python3, python3-venv, git**

|

||||

|

||||

1. Download [scripts/install-comfyui-venv-linux.sh](https://github.com/ltdrdata/ComfyUI-Manager/raw/main/scripts/install-comfyui-venv-linux.sh) into empty install directory

|

||||

- Don't click the link. Right-click it and choose "Save as..."

|

||||

- ComfyUI will be installed in the subdirectory of the specified directory, and the directory will contain the generated executable script.

|

||||

2. `chmod +x install-comfyui-venv-linux.sh`

|

||||

3. `./install-comfyui-venv-linux.sh`

|

||||

|

||||

### Installation Precautions

|

||||

* **DO**: `ComfyUI-Manager` files must be accurately located in the path `ComfyUI/custom_nodes/comfyui-manager`

|

||||

* Installing in a compressed file format is not recommended.

|

||||

* **DON'T**: Decompress directly into the `ComfyUI/custom_nodes` location, resulting in the Manager contents like `__init__.py` being placed directly in that directory.

|

||||

* You have to remove all ComfyUI-Manager files from `ComfyUI/custom_nodes`

|

||||

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager/ComfyUI-Manager`.

|

||||

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager-main`.

|

||||

* In such cases, `ComfyUI-Manager` may run, but it won't recognize its own installation, so it cannot update itself. There is also a risk of duplicate installations. Remove it and reinstall properly via the `git clone` method.

|

||||

|

||||

|

||||

You can execute ComfyUI by running either `./run_gpu.sh` or `./run_cpu.sh` depending on your system configuration.

|

||||

|

||||

## Colab Notebook

|

||||

This repository provides Colab notebooks that allow you to install and use ComfyUI, including ComfyUI-Manager. To use ComfyUI, [click on this link](https://colab.research.google.com/github/ltdrdata/ComfyUI-Manager/blob/main/notebooks/comfyui_colab_with_manager.ipynb).

|

||||

* Support for installing ComfyUI

|

||||

* Support for basic installation of ComfyUI-Manager

|

||||

* Support for automatically installing dependencies of custom nodes upon restarting Colab notebooks.

|

||||

|

||||

|

||||

## How To Use

|

||||

|

||||

25

__init__.py

Normal file


@@ -0,0 +1,25 @@

    """
    This file is the entry point for the ComfyUI-Manager package, handling CLI-only mode and initial setup.
    """

    import os
    import sys

    cli_mode_flag = os.path.join(os.path.dirname(__file__), '.enable-cli-only-mode')

    if not os.path.exists(cli_mode_flag):
        sys.path.append(os.path.join(os.path.dirname(__file__), "glob"))
        import manager_server  # noqa: F401
        import share_3rdparty  # noqa: F401
        import cm_global

        if not cm_global.disable_front and not 'DISABLE_COMFYUI_MANAGER_FRONT' in os.environ:
            WEB_DIRECTORY = "js"
    else:
        print("\n[ComfyUI-Manager] !! cli-only-mode is enabled !!\n")

    NODE_CLASS_MAPPINGS = {}
    __all__ = ['NODE_CLASS_MAPPINGS']

@@ -15,36 +15,41 @@ import git

|

||||

import importlib

|

||||

|

||||

|

||||

sys.path.append(os.path.dirname(__file__))

|

||||

sys.path.append(os.path.join(os.path.dirname(__file__), "glob"))

|

||||

|

||||

import manager_util

|

||||

|

||||

# read env vars

|

||||

# COMFYUI_FOLDERS_BASE_PATH is not required in cm-cli.py

|

||||

# `comfy_path` should be resolved before importing manager_core

|

||||

|

||||

comfy_path = os.environ.get('COMFYUI_PATH')

|

||||

|

||||

if comfy_path is None:

|

||||

print("[bold red]cm-cli: environment variable 'COMFYUI_PATH' is not specified.[/bold red]")

|

||||

exit(-1)

|

||||

try:

|

||||

import folder_paths

|

||||

comfy_path = os.path.join(os.path.dirname(folder_paths.__file__))

|

||||

except:

|

||||

print("\n[bold yellow]WARN: The `COMFYUI_PATH` environment variable is not set. Assuming `custom_nodes/ComfyUI-Manager/../../` as the ComfyUI path.[/bold yellow]", file=sys.stderr)

|

||||

comfy_path = os.path.abspath(os.path.join(manager_util.comfyui_manager_path, '..', '..'))

|

||||

|

||||

# This should be placed here

|

||||

sys.path.append(comfy_path)

|

||||

|

||||

if not os.path.exists(os.path.join(comfy_path, 'folder_paths.py')):

|

||||

print(f"[bold red]cm-cli: '{comfy_path}' is not a valid 'COMFYUI_PATH' location.[/bold red]")

|

||||

exit(-1)

|

||||

|

||||

|

||||

import utils.extra_config

|

||||

from .glob import cm_global

|

||||

from .glob import manager_core as core

|

||||

from .glob.manager_core import unified_manager

|

||||

from .glob import cnr_utils

|

||||

import cm_global

|

||||

import manager_core as core

|

||||

from manager_core import unified_manager

|

||||

import cnr_utils

|

||||

|

||||

comfyui_manager_path = os.path.abspath(os.path.dirname(__file__))

|

||||

|

||||

cm_global.pip_blacklist = {'torch', 'torchsde', 'torchvision'}

|

||||

cm_global.pip_downgrade_blacklist = ['torch', 'torchsde', 'torchvision', 'transformers', 'safetensors', 'kornia']

|

||||

cm_global.pip_overrides = {'numpy': 'numpy<2'}

|

||||

cm_global.pip_blacklist = {'torch', 'torchaudio', 'torchsde', 'torchvision'}

|

||||

cm_global.pip_downgrade_blacklist = ['torch', 'torchaudio', 'torchsde', 'torchvision', 'transformers', 'safetensors', 'kornia']

|

||||

|

||||

if sys.version_info < (3, 13):

|

||||

cm_global.pip_overrides = {'numpy': 'numpy<2'}

|

||||

else:

|

||||

cm_global.pip_overrides = {}

|

||||

|

||||

if os.path.exists(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json")):

|

||||

with open(os.path.join(manager_util.comfyui_manager_path, "pip_overrides.json"), 'r', encoding="UTF-8", errors="ignore") as json_file:

|

||||
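Review note: one side of the hunk above makes the default `numpy<2` override conditional on the interpreter version. A minimal sketch of that rule as a pure function, assuming a hypothetical helper name `default_pip_overrides` (it is not part of the diff):

```python
import sys


def default_pip_overrides(version_info=sys.version_info):
    """Pin numpy below 2 only on interpreters older than Python 3.13."""
    if tuple(version_info[:2]) < (3, 13):
        return {'numpy': 'numpy<2'}
    return {}


# Explicit version tuples make both branches easy to check.
print(default_pip_overrides((3, 12, 0)))
print(default_pip_overrides((3, 13, 0)))
```

Taking the version as a parameter keeps the rule testable without running under several interpreters.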

@@ -146,7 +151,9 @@ class Ctx:

|

||||

if os.path.exists(core.manager_pip_overrides_path):

|

||||

with open(core.manager_pip_overrides_path, 'r', encoding="UTF-8", errors="ignore") as json_file:

|

||||

cm_global.pip_overrides = json.load(json_file)

|

||||

cm_global.pip_overrides = {'numpy': 'numpy<2'}

|

||||

|

||||

if sys.version_info < (3, 13):

|

||||

cm_global.pip_overrides = {'numpy': 'numpy<2'}

|

||||

|

||||

if os.path.exists(core.manager_pip_blacklist_path):

|

||||

with open(core.manager_pip_blacklist_path, 'r', encoding="UTF-8", errors="ignore") as f:

|

||||

@@ -183,13 +190,18 @@ class Ctx:

|

||||

cmd_ctx = Ctx()

|

||||

|

||||

|

||||

def install_node(node_spec_str, is_all=False, cnt_msg=''):

|

||||

def install_node(node_spec_str, is_all=False, cnt_msg='', **kwargs):

|

||||

exit_on_fail = kwargs.get('exit_on_fail', False)

|

||||

print(f"install_node exit on fail:{exit_on_fail}...")

|

||||

|

||||

if core.is_valid_url(node_spec_str):

|

||||

# install via urls

|

||||

res = asyncio.run(core.gitclone_install(node_spec_str, no_deps=cmd_ctx.no_deps))

|

||||

if not res.result:

|

||||

print(res.msg)

|

||||

print(f"[bold red]ERROR: An error occurred while installing '{node_spec_str}'.[/bold red]")

|

||||

if exit_on_fail:

|

||||

sys.exit(1)

|

||||

else:

|

||||

print(f"{cnt_msg} [INSTALLED] {node_spec_str:50}")

|

||||

else:

|

||||

@@ -224,6 +236,8 @@ def install_node(node_spec_str, is_all=False, cnt_msg=''):

|

||||

print("")

|

||||

else:

|

||||

print(f"[bold red]ERROR: An error occurred while installing '{node_name}'.\n{res.msg}[/bold red]")

|

||||

if exit_on_fail:

|

||||

sys.exit(1)

|

||||

|

||||

|

||||

def reinstall_node(node_spec_str, is_all=False, cnt_msg=''):

|

||||

@@ -585,7 +599,7 @@ def get_all_installed_node_specs():

|

||||

return res

|

||||

|

||||

|

||||

def for_each_nodes(nodes, act, allow_all=True):

|

||||

def for_each_nodes(nodes, act, allow_all=True, **kwargs):

|

||||

is_all = False

|

||||

if allow_all and 'all' in nodes:

|

||||

is_all = True

|

||||

@@ -597,7 +611,7 @@ def for_each_nodes(nodes, act, allow_all=True):

|

||||

i = 1

|

||||

for x in nodes:

|

||||

try:

|

||||

act(x, is_all=is_all, cnt_msg=f'{i}/{total}')

|

||||

act(x, is_all=is_all, cnt_msg=f'{i}/{total}', **kwargs)

|

||||

except Exception as e:

|

||||

print(f"ERROR: {e}")

|

||||

traceback.print_exc()

|

||||

@@ -641,13 +655,17 @@ def install(

|

||||

None,

|

||||

help="user directory"

|

||||

),

|

||||

exit_on_fail: bool = typer.Option(

|

||||

False,

|

||||

help="Exit on failure"

|

||||

)

|

||||

):

|

||||

cmd_ctx.set_user_directory(user_directory)

|

||||

cmd_ctx.set_channel_mode(channel, mode)

|

||||

cmd_ctx.set_no_deps(no_deps)

|

||||

|

||||

pip_fixer = manager_util.PIPFixer(manager_util.get_installed_packages(), comfy_path, core.manager_files_path)

|

||||

for_each_nodes(nodes, act=install_node)

|

||||

for_each_nodes(nodes, act=install_node, exit_on_fail=exit_on_fail)

|

||||

pip_fixer.fix_broken()

|

||||

|

||||

|

||||
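Review note: the `exit_on_fail` option in the hunks above reaches `install_node` through `**kwargs` forwarded by `for_each_nodes`. The sketch below reproduces only that forwarding pattern; `for_each`, `record`, and `fail_hard` are hypothetical stand-ins, not the functions in `cm-cli.py`. It also shows that `sys.exit(1)` is not swallowed by an `except Exception` handler, because `SystemExit` derives from `BaseException`.

```python
import sys
import traceback


def for_each(items, act, **kwargs):
    """Call act(item, cnt_msg=..., **kwargs) per item; log ordinary errors and continue."""
    total = len(items)
    for i, item in enumerate(items, start=1):
        try:
            act(item, cnt_msg=f'{i}/{total}', **kwargs)
        except Exception as e:  # SystemExit is not an Exception, so it propagates
            print(f"ERROR: {e}")
            traceback.print_exc()


calls = []


def record(item, cnt_msg='', **kwargs):
    """Stand-in action that records what it was called with."""
    calls.append((item, cnt_msg, kwargs.get('exit_on_fail', False)))


def fail_hard(item, cnt_msg='', **kwargs):
    """Stand-in action that always fails and honors exit_on_fail."""
    if kwargs.get('exit_on_fail', False):
        sys.exit(1)


for_each(['a', 'b'], record, exit_on_fail=True)

exit_code = None
try:
    for_each(['x'], fail_hard, exit_on_fail=True)
except SystemExit as e:
    exit_code = e.code
```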

@@ -1,2 +1,2 @@

|

||||

#!/bin/bash

|

||||

python ./comfyui_manager/cm-cli.py $*

|

||||

python cm-cli.py $*

|

||||

|

||||

@@ -1,40 +0,0 @@

|

||||

import os

|

||||

import logging

|

||||

from comfy.cli_args import args

|

||||

|

||||

ENABLE_LEGACY_COMFYUI_MANAGER_FRONT_DEFAULT = True # Enable legacy ComfyUI Manager frontend while new UI is in beta phase

|

||||

|

||||

def prestartup():

|

||||

from . import prestartup_script # noqa: F401

|

||||

logging.info('[PRE] ComfyUI-Manager')

|

||||

|

||||

|

||||

def start():

|

||||

logging.info('[START] ComfyUI-Manager')

|

||||

from .glob import manager_server # noqa: F401

|

||||

from .glob import share_3rdparty # noqa: F401

|

||||

from .glob import cm_global # noqa: F401

|

||||

|

||||

should_show_legacy_manager_front = os.environ.get('ENABLE_LEGACY_COMFYUI_MANAGER_FRONT', 'false') == 'true' or ENABLE_LEGACY_COMFYUI_MANAGER_FRONT_DEFAULT

|

||||

if not args.disable_manager and should_show_legacy_manager_front:

|

||||

try:

|

||||

import nodes

|

||||

nodes.EXTENSION_WEB_DIRS['comfyui-manager-legacy'] = os.path.join(os.path.dirname(__file__), 'js')

|

||||

except Exception as e:

|

||||

print("Error enabling legacy ComfyUI Manager frontend:", e)

|

||||

|

||||

|

||||

def should_be_disabled(fullpath:str) -> bool:

|

||||

"""

|

||||

1. Disables the legacy ComfyUI-Manager.

|

||||

2. The blocklist can be expanded later based on policies.

|

||||

"""

|

||||

|

||||

if not args.disable_manager:

|

||||

# In cases where installation is done via a zip archive, the directory name may not be comfyui-manager, and it may not contain a git repository.

|

||||

# It is assumed that any installed legacy ComfyUI-Manager will have at least 'comfyui-manager' in its directory name.

|

||||

dir_name = os.path.basename(fullpath).lower()

|

||||

if 'comfyui-manager' in dir_name:

|

||||

return True

|

||||

|

||||

return False

|

||||
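Review note: the directory-name check inside `should_be_disabled` above can be exercised on its own. This is a minimal sketch that leaves out the `args.disable_manager` condition; `looks_like_legacy_manager` and the example paths are hypothetical.

```python
import os


def looks_like_legacy_manager(fullpath: str) -> bool:
    """True when the last path component contains 'comfyui-manager' (case-insensitive)."""
    dir_name = os.path.basename(fullpath).lower()
    return 'comfyui-manager' in dir_name


# Names produced by git clone or by unpacking a zip archive all match.
print(looks_like_legacy_manager('ComfyUI/custom_nodes/comfyui-manager'))
print(looks_like_legacy_manager('ComfyUI/custom_nodes/ComfyUI-Manager-main'))
# Other node packs do not.
print(looks_like_legacy_manager('ComfyUI/custom_nodes/comfyui-impact-pack'))
```

One caveat of relying on `os.path.basename`: a path with a trailing separator has an empty basename, so such a path would not match.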

@@ -1,17 +0,0 @@

    import enum

    class NetworkMode(enum.Enum):
        PUBLIC = "public"
        PRIVATE = "private"
        OFFLINE = "offline"

    class SecurityLevel(enum.Enum):
        STRONG = "strong"
        NORMAL = "normal"
        NORMAL_MINUS = "normal-minus"
        WEAK = "weak"

    class DBMode(enum.Enum):
        LOCAL = "local"
        CACHE = "cache"
        REMOTE = "remote"
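Review note: the enums in the hunk above carry the same string values that `read_config` otherwise spells out as literals. A minimal sketch of mapping a raw config string onto such an enum, assuming a hypothetical `parse_network_mode` helper that is not part of the diff:

```python
import enum


class NetworkMode(enum.Enum):
    PUBLIC = "public"
    PRIVATE = "private"
    OFFLINE = "offline"


def parse_network_mode(raw, default=NetworkMode.PUBLIC):
    """Map a config string to a NetworkMode, falling back to `default` on unknown values."""
    try:
        return NetworkMode(raw.strip().lower())  # lookup by value
    except ValueError:
        return default


print(parse_network_mode(" Public "))
print(parse_network_mode("bogus"))
print(NetworkMode.OFFLINE.value)
```

Lookup by value keeps the on-disk config format unchanged while giving the code a closed set of names to compare against.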

File diff suppressed because it is too large


File diff suppressed because it is too large


@@ -15,12 +15,9 @@ comfy_path = os.environ.get('COMFYUI_PATH')

|

||||

git_exe_path = os.environ.get('GIT_EXE_PATH')

|

||||

|

||||

if comfy_path is None:

|

||||

print("git_helper: environment variable 'COMFYUI_PATH' is not specified.")

|

||||

exit(-1)

|

||||

print("\nWARN: The `COMFYUI_PATH` environment variable is not set. Assuming `custom_nodes/ComfyUI-Manager/../../` as the ComfyUI path.", file=sys.stderr)

|

||||

comfy_path = os.path.abspath(os.path.join(os.path.dirname(__file__), '..', '..'))

|

||||

|

||||

if not os.path.exists(os.path.join(comfy_path, 'folder_paths.py')):

|

||||

print(f"git_helper: '{comfy_path}' is not a valid 'COMFYUI_PATH' location.")

|

||||

exit(-1)

|

||||

|

||||

def download_url(url, dest_folder, filename=None):

|

||||

# Ensure the destination folder exists

|

||||

8938

github-stats.json


File diff suppressed because it is too large


@@ -6,9 +6,8 @@ import time

|

||||

from dataclasses import dataclass

|

||||

from typing import List

|

||||

|

||||

from . import manager_core

|

||||

from . import manager_util

|

||||

|

||||

import manager_core

|

||||

import manager_util

|

||||

import requests

|

||||

import toml

|

||||

|

||||

@@ -32,15 +32,18 @@ from packaging import version

|

||||

|

||||

import uuid

|

||||

|

||||

from . import cm_global

|

||||

from . import cnr_utils

|

||||

from . import manager_util

|

||||

from . import git_utils

|

||||

from . import manager_downloader

|

||||

from .node_package import InstalledNodePackage

|

||||

from .enums import NetworkMode, SecurityLevel, DBMode

|

||||

glob_path = os.path.join(os.path.dirname(__file__)) # ComfyUI-Manager/glob

|

||||

sys.path.append(glob_path)

|

||||

|

||||

version_code = [4, 0]

|

||||

import cm_global

|

||||

import cnr_utils

|

||||

import manager_util

|

||||

import git_utils

|

||||

import manager_downloader

|

||||

from node_package import InstalledNodePackage

|

||||

|

||||

|

||||

version_code = [3, 32, 3]

|

||||

version_str = f"V{version_code[0]}.{version_code[1]}" + (f'.{version_code[2]}' if len(version_code) > 2 else '')

|

||||

|

||||

|

||||
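Review note: the `version_str` expression in the hunk above handles both two-part and three-part `version_code` lists. A small sketch wrapping the same expression in a hypothetical `format_version` function:

```python
def format_version(version_code):
    """Render [major, minor] or [major, minor, patch] as 'V<major>.<minor>[.<patch>]'."""
    return f"V{version_code[0]}.{version_code[1]}" + (
        f'.{version_code[2]}' if len(version_code) > 2 else ''
    )


# The two version_code values that appear in the hunk.
print(format_version([4, 0]))
print(format_version([3, 32, 3]))
```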

@@ -55,7 +58,6 @@ class InvalidChannel(Exception):

|

||||

self.channel = channel

|

||||

super().__init__(channel)

|

||||

|

||||

|

||||

def get_default_custom_nodes_path():

|

||||

global default_custom_nodes_path

|

||||

if default_custom_nodes_path is None:

|

||||

@@ -181,11 +183,10 @@ comfy_base_path = os.environ.get('COMFYUI_FOLDERS_BASE_PATH')

|

||||

|

||||

if comfy_path is None:

|

||||

try:

|

||||

comfy_path = os.path.abspath(os.path.dirname(sys.modules['__main__'].__file__))

|

||||

os.environ['COMFYUI_PATH'] = comfy_path

|

||||

import folder_paths

|

||||

comfy_path = os.path.join(os.path.dirname(folder_paths.__file__))

|

||||

except:

|

||||

logging.error("[ComfyUI-Manager] environment variable 'COMFYUI_PATH' is not specified.")

|

||||

exit(-1)

|

||||

comfy_path = os.path.abspath(os.path.join(manager_util.comfyui_manager_path, '..', '..'))

|

||||

|

||||

if comfy_base_path is None:

|

||||

comfy_base_path = comfy_path

|

||||

@@ -202,7 +203,6 @@ manager_snapshot_path = None

|

||||

manager_pip_overrides_path = None

|

||||

manager_pip_blacklist_path = None

|

||||

manager_components_path = None

|

||||

manager_batch_history_path = None

|

||||

|

||||

def update_user_directory(user_dir):

|

||||

global manager_files_path

|

||||

@@ -213,7 +213,6 @@ def update_user_directory(user_dir):

|

||||

global manager_pip_overrides_path

|

||||

global manager_pip_blacklist_path

|

||||

global manager_components_path

|

||||

global manager_batch_history_path

|

||||

|

||||

manager_files_path = os.path.abspath(os.path.join(user_dir, 'default', 'ComfyUI-Manager'))

|

||||

if not os.path.exists(manager_files_path):

|

||||

@@ -233,14 +232,10 @@ def update_user_directory(user_dir):

|

||||

manager_pip_blacklist_path = os.path.join(manager_files_path, "pip_blacklist.list")

|

||||

manager_components_path = os.path.join(manager_files_path, "components")

|

||||

manager_util.cache_dir = os.path.join(manager_files_path, "cache")

|

||||

manager_batch_history_path = os.path.join(manager_files_path, "batch_history")

|

||||

|

||||

if not os.path.exists(manager_util.cache_dir):

|

||||

os.makedirs(manager_util.cache_dir)

|

||||

|

||||

if not os.path.exists(manager_batch_history_path):

|

||||

os.makedirs(manager_batch_history_path)

|

||||

|

||||

try:

|

||||

import folder_paths

|

||||

update_user_directory(folder_paths.get_user_directory())

|

||||

@@ -558,7 +553,7 @@ class UnifiedManager:

|

||||

ver = str(manager_util.StrictVersion(info['version']))

|

||||

return {'id': cnr['id'], 'cnr': cnr, 'ver': ver}

|

||||

else:

|

||||

return {'id': info['id'], 'ver': info['version']}

|

||||

return None

|

||||

else:

|

||||

return None

|

||||

|

||||

@@ -734,7 +729,7 @@ class UnifiedManager:

|

||||

|

||||

return latest

|

||||

|

||||

async def reload(self, cache_mode, dont_wait=True, update_cnr_map=True):

|

||||

async def reload(self, cache_mode, dont_wait=True):

|

||||

self.custom_node_map_cache = {}

|

||||

self.cnr_inactive_nodes = {} # node_id -> node_version -> fullpath

|

||||

self.nightly_inactive_nodes = {} # node_id -> fullpath

|

||||

@@ -742,18 +737,17 @@ class UnifiedManager:

|

||||

self.unknown_active_nodes = {} # node_id -> repo url * fullpath

|

||||

self.active_nodes = {} # node_id -> node_version * fullpath

|

||||

|

||||

if get_config()['network_mode'] != 'public' or manager_util.is_manager_pip_package():

|

||||

if get_config()['network_mode'] != 'public':

|

||||

dont_wait = True

|

||||

|

||||

if update_cnr_map:

|

||||

# reload 'cnr_map' and 'repo_cnr_map'

|

||||

cnrs = await cnr_utils.get_cnr_data(cache_mode=cache_mode=='cache', dont_wait=dont_wait)

|

||||

# reload 'cnr_map' and 'repo_cnr_map'

|

||||

cnrs = await cnr_utils.get_cnr_data(cache_mode=cache_mode=='cache', dont_wait=dont_wait)

|

||||

|

||||

for x in cnrs:

|

||||

self.cnr_map[x['id']] = x

|

||||

if 'repository' in x:

|

||||

normalized_url = git_utils.normalize_url(x['repository'])

|

||||

self.repo_cnr_map[normalized_url] = x

|

||||

for x in cnrs:

|

||||

self.cnr_map[x['id']] = x

|

||||

if 'repository' in x:

|

||||

normalized_url = git_utils.normalize_url(x['repository'])

|

||||

self.repo_cnr_map[normalized_url] = x

|

||||

|

||||

# reload node status info from custom_nodes/*

|

||||

for custom_nodes_path in folder_paths.get_folder_paths('custom_nodes'):

|

||||

@@ -874,8 +868,9 @@ class UnifiedManager:

|

||||

package_name = remap_pip_package(line.strip())

|

||||

if package_name and not package_name.startswith('#') and package_name not in self.processed_install:

|

||||

self.processed_install.add(package_name)

|

||||

install_cmd = manager_util.make_pip_cmd(["install", package_name])

|

||||

if package_name.strip() != "" and not package_name.startswith('#'):

|

||||

clean_package_name = package_name.split('#')[0].strip()

|

||||

install_cmd = manager_util.make_pip_cmd(["install", clean_package_name])

|

||||

if clean_package_name != "" and not clean_package_name.startswith('#'):

|

||||

res = res and try_install_script(url, repo_path, install_cmd, instant_execution=instant_execution)

|

||||

|

||||

pip_fixer.fix_broken()

|

||||
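Review note: one side of the hunk above strips trailing comments from requirement lines with `split('#')[0].strip()` before calling pip. A minimal sketch of that cleanup in isolation; `clean_requirement` and the example lines are hypothetical.

```python
def clean_requirement(line):
    """Drop everything from the first '#' onward and trim whitespace."""
    return line.split('#')[0].strip()


print(clean_requirement("numpy<2  # pinned for compatibility"))
print(repr(clean_requirement("# comment only")))
# Caveat: a '#' inside a URL fragment is cut as well.
print(clean_requirement("git+https://example.com/repo.git#egg=pkg"))
```

Splitting on the first `#` is simple, but it also truncates requirement lines whose URL carries a fragment, so lines of that shape may need separate handling.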

@@ -1447,20 +1442,12 @@ class UnifiedManager:

|

||||

return self.unified_enable(node_id, version_spec)

|

||||

|

||||

elif version_spec == 'unknown' or version_spec == 'nightly':

|

||||

to_path = os.path.abspath(os.path.join(get_default_custom_nodes_path(), node_id))

|

||||

|

||||

if version_spec == 'nightly':

|

||||

# disable cnr nodes

|

||||

if self.is_enabled(node_id, 'cnr'):

|

||||

self.unified_disable(node_id, False)

|

||||

|

||||

# use `repo name` as a dir name instead of `cnr id` if system added nodepack (i.e. publisher is null)

|

||||

cnr = self.cnr_map.get(node_id)

|

||||

|

||||

if cnr is not None and cnr.get('publisher') is None:

|

||||

repo_name = os.path.basename(git_utils.normalize_url(repo_url))

|

||||

to_path = os.path.abspath(os.path.join(get_default_custom_nodes_path(), repo_name))

|

||||

|

||||

to_path = os.path.abspath(os.path.join(get_default_custom_nodes_path(), node_id))

|

||||

res = self.repo_install(repo_url, to_path, instant_execution=instant_execution, no_deps=no_deps, return_postinstall=return_postinstall)

|

||||

if res.result:

|

||||

if version_spec == 'unknown':

|

||||

@@ -1677,9 +1664,9 @@ def read_config():

|

||||

'model_download_by_agent': get_bool('model_download_by_agent', False),

|

||||

'downgrade_blacklist': default_conf.get('downgrade_blacklist', '').lower(),

|

||||

'always_lazy_install': get_bool('always_lazy_install', False),

|

||||

'network_mode': default_conf.get('network_mode', NetworkMode.PUBLIC.value).lower(),

|

||||

'security_level': default_conf.get('security_level', SecurityLevel.NORMAL.value).lower(),

|

||||

'db_mode': default_conf.get('db_mode', DBMode.CACHE.value).lower(),

|

||||

'network_mode': default_conf.get('network_mode', 'public').lower(),

|

||||

'security_level': default_conf.get('security_level', 'normal').lower(),

|

||||

'db_mode': default_conf.get('db_mode', 'cache').lower(),

|

||||

}

|

||||

|

||||

except Exception:

|

||||

@@ -1700,9 +1687,9 @@ def read_config():
'model_download_by_agent': False,
'downgrade_blacklist': '',
'always_lazy_install': False,
'network_mode': NetworkMode.OFFLINE.value,
'security_level': SecurityLevel.NORMAL.value,
'db_mode': DBMode.CACHE.value,
'network_mode': 'public', # public | private | offline
'security_level': 'normal', # strong | normal | normal- | weak
'db_mode': 'cache', # local | cache | remote
}

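The two `read_config()` hunks above read each setting with a default and lowercase it. A minimal sketch of that pattern with the standard `configparser` module follows. The `[default]` section name, the `read_network_mode` helper, and the fall-back-on-unknown-value step are assumptions made for illustration; the hunks themselves show no validation.

```python
import configparser

# Allowed values taken from the inline comment in the hunk above.
ALLOWED_NETWORK_MODES = {"public", "private", "offline"}


def read_network_mode(ini_text):
    """Hypothetical helper: return a normalized network_mode from INI text."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    # Assumption: settings live in a section named "default".
    section = parser["default"] if parser.has_section("default") else {}
    mode = section.get("network_mode", "public").lower()
    # Assumption: unknown values fall back to 'public'.
    return mode if mode in ALLOWED_NETWORK_MODES else "public"


from_file = read_network_mode("[default]\nnetwork_mode = OFFLINE\n")
missing = read_network_mode("[default]\n")
bogus = read_network_mode("[default]\nnetwork_mode = lan\n")
```

Lowercasing before the membership check means `OFFLINE` in the file is treated the same as `offline`.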

@@ -2086,6 +2073,13 @@ def is_valid_url(url):
return False


def extract_url_and_commit_id(s):
index = s.rfind('@')
if index == -1:
return (s, '')
else:
return (s[:index], s[index+1:])

async def gitclone_install(url, instant_execution=False, msg_prefix='', no_deps=False):
await unified_manager.reload('cache')
await unified_manager.get_custom_nodes('default', 'cache')
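The `extract_url_and_commit_id` helper in the hunk above splits its argument on the last `@`. A standalone sketch of that behavior follows. The function body mirrors the hunk; the example URLs are hypothetical and chosen only for illustration.

```python
def extract_url_and_commit_id(s):
    # Split on the LAST '@'; everything after it is treated as the commit id.
    index = s.rfind('@')
    if index == -1:
        return (s, '')
    return (s[:index], s[index + 1:])


# Hypothetical inputs, for illustration only.
plain = extract_url_and_commit_id("https://example.com/user/repo")
pinned = extract_url_and_commit_id("https://example.com/user/repo@abc123")
ssh_style = extract_url_and_commit_id("git@example.com:user/repo.git")
```

An SSH-style URL with no commit suffix also contains `@`, so the last call yields `('git', 'example.com:user/repo.git')`. Callers that accept such URLs may want to guard against this case.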

@@ -2103,8 +2097,11 @@ async def gitclone_install(url, instant_execution=False, msg_prefix='', no_deps=
cnr = unified_manager.get_cnr_by_repo(url)
if cnr:
cnr_id = cnr['id']
return await unified_manager.install_by_id(cnr_id, version_spec='nightly', channel='default', mode='cache')
return await unified_manager.install_by_id(cnr_id, version_spec=None, channel='default', mode='cache')
else:
new_url, commit_id = extract_url_and_commit_id(url)
if commit_id != "":
url = new_url
repo_name = os.path.splitext(os.path.basename(url))[0]

# NOTE: Keep original name as possible if unknown node

@@ -2137,6 +2134,10 @@ async def gitclone_install(url, instant_execution=False, msg_prefix='', no_deps=
return result.fail(f"Failed to clone '{clone_url}' into '{repo_path}'")
else:
repo = git.Repo.clone_from(clone_url, repo_path, recursive=True, progress=GitProgress())
if commit_id != "":
repo.git.checkout(commit_id)
repo.git.submodule('update', '--init', '--recursive')

repo.git.clear_cache()
repo.close()


@@ -2199,7 +2200,7 @@ async def get_data_by_mode(mode, filename, channel_url=None):
cache_uri = str(manager_util.simple_hash(uri))+'_'+filename
cache_uri = os.path.join(manager_util.cache_dir, cache_uri)

if get_config()['network_mode'] == 'offline' or manager_util.is_manager_pip_package():
if get_config()['network_mode'] == 'offline':
# offline network mode
if os.path.exists(cache_uri):
json_obj = await manager_util.get_data(cache_uri)

@@ -2219,7 +2220,7 @@ async def get_data_by_mode(mode, filename, channel_url=None):
with open(cache_uri, "w", encoding='utf-8') as file:
json.dump(json_obj, file, indent=4, sort_keys=True)
except Exception as e:
print(f"[ComfyUI-Manager] Due to a network error, switching to local mode.\n=> {filename} @ {channel_url}/{mode}\n=> {e}")
print(f"[ComfyUI-Manager] Due to a network error, switching to local mode.\n=> {filename}\n=> {e}")
uri = os.path.join(manager_util.comfyui_manager_path, filename)
json_obj = await manager_util.get_data(uri)


@@ -14,23 +14,18 @@ import git
from datetime import datetime

from server import PromptServer
import manager_core as core
import manager_util
import cm_global
import logging
import asyncio
from collections import deque
import queue

from . import manager_core as core
from . import manager_util
from . import cm_global
from . import manager_downloader
import manager_downloader


logging.info(f"### Loading: ComfyUI-Manager ({core.version_str})")

if not manager_util.is_manager_pip_package():
network_mode_description = "offline"
else:
network_mode_description = core.get_config()['network_mode']
logging.info("[ComfyUI-Manager] network_mode: " + network_mode_description)
logging.info("[ComfyUI-Manager] network_mode: " + core.get_config()['network_mode'])

comfy_ui_hash = "-"
comfyui_tag = None

@@ -160,7 +155,9 @@ class ManagerFuncsInComfyUI(core.ManagerFuncs):

core.manager_funcs = ManagerFuncsInComfyUI()

from .manager_downloader import download_url, download_url_with_agent
sys.path.append('../..')

from manager_downloader import download_url, download_url_with_agent

core.comfy_path = os.path.dirname(folder_paths.__file__)
core.js_path = os.path.join(core.comfy_path, "web", "extensions")

@@ -394,73 +391,16 @@ def nickname_filter(json_obj):
return json_obj


class TaskBatch:
def __init__(self, batch_json, tasks, failed):
self.nodepack_result = {}
self.model_result = {}
self.batch_id = batch_json.get('batch_id') if batch_json is not None else None
self.batch_json = batch_json
self.tasks = tasks
self.current_index = 0
self.stats = {}
self.failed = failed if failed is not None else set()
self.is_aborted = False

def is_done(self):
return len(self.tasks) <= self.current_index

def get_next(self):
if self.is_done():
return None

item = self.tasks[self.current_index]
self.current_index += 1
return item

def done_count(self):
return len(self.nodepack_result) + len(self.model_result)

def total_count(self):
return len(self.tasks)

def abort(self):
self.is_aborted = True

def finalize(self):
if self.batch_id is not None:
batch_path = os.path.join(core.manager_batch_history_path, self.batch_id+".json")
json_obj = {
"batch": self.batch_json,
"nodepack_result": self.nodepack_result,
"model_result": self.model_result,
"failed": list(self.failed)
}
with open(batch_path, "w") as json_file:
json.dump(json_obj, json_file, indent=4)


temp_queue_batch = []
task_batch_queue = deque()
task_queue = queue.Queue()
nodepack_result = {}
model_result = {}
tasks_in_progress = set()
task_worker_lock = threading.Lock()
aborted_batch = None


def finalize_temp_queue_batch(batch_json=None, failed=None):
"""
make temp_queue_batch as a batch snapshot and add to batch_queue
"""

global temp_queue_batch

if len(temp_queue_batch):
batch = TaskBatch(batch_json, temp_queue_batch, failed)
task_batch_queue.append(batch)
temp_queue_batch = []


async def task_worker():
global task_queue
global nodepack_result
global model_result
global tasks_in_progress

async def do_install(item) -> str:
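The `TaskBatch` class in the hunk above walks a fixed task list with an index cursor rather than consuming a queue. A trimmed-down sketch of just that cursor logic is shown here. `MiniBatch` and the sample tasks are hypothetical stand-ins; result tracking, abort, and `finalize()` are left out.

```python
class MiniBatch:
    """Hypothetical, trimmed-down stand-in for the TaskBatch class in the hunk."""

    def __init__(self, tasks):
        self.tasks = tasks
        self.current_index = 0

    def is_done(self):
        # Done once the cursor has moved past the last task.
        return len(self.tasks) <= self.current_index

    def get_next(self):
        if self.is_done():
            return None
        item = self.tasks[self.current_index]
        self.current_index += 1
        return item


# Sample (kind, item) tuples, made up for illustration.
batch = MiniBatch([("install", ("ui-1", "some-pack")), ("update", ("ui-2", "other-pack"))])
seen = []
while not batch.is_done():
    kind, item = batch.get_next()
    seen.append((kind, item[0]))
```

Because `get_next()` returns `None` once the batch is exhausted, unpacking its result into `kind, item` is only safe after an `is_done()` check, which is the order the loop here follows.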

@@ -473,7 +413,8 @@ async def task_worker():
return f"Cannot resolve install target: '{node_spec_str}'"

node_name, version_spec, is_specified = node_spec
res = await core.unified_manager.install_by_id(node_name, version_spec, channel, mode, return_postinstall=skip_post_install) # discard post install if skip_post_install mode
res = await core.unified_manager.install_by_id(node_name, version_spec, channel, mode, return_postinstall=skip_post_install)
# discard post install if skip_post_install mode

if res.action not in ['skip', 'enable', 'install-git', 'install-cnr', 'switch-cnr']:
logging.error(f"[ComfyUI-Manager] Installation failed:\n{res.msg}")

@@ -488,11 +429,6 @@ async def task_worker():
traceback.print_exc()
return f"Installation failed:\n{node_spec_str}"

async def do_enable(item) -> str:
ui_id, cnr_id = item
core.unified_manager.unified_enable(cnr_id)
return 'success'

async def do_update(item):
ui_id, node_name, node_ver = item


@@ -651,45 +587,31 @@ async def task_worker():

return f"Model installation error: {model_url}"

stats = {}

while True:
with task_worker_lock:
if len(task_batch_queue) > 0:
cur_batch = task_batch_queue[0]
else:
logging.info("\n[ComfyUI-Manager] All tasks are completed.")
logging.info("\nAfter restarting ComfyUI, please refresh the browser.")
done_count = len(nodepack_result) + len(model_result)
total_count = done_count + task_queue.qsize()

res = {'status': 'all-done'}
if task_queue.empty():
logging.info(f"\n[ComfyUI-Manager] Queued works are completed.\n{stats}")

PromptServer.instance.send_sync("cm-queue-status", res)

return

if cur_batch.is_done():
logging.info(f"\n[ComfyUI-Manager] A tasks batch(batch_id={cur_batch.batch_id}) is completed.\nstat={cur_batch.stats}")

res = {'status': 'batch-done',
'nodepack_result': cur_batch.nodepack_result,
'model_result': cur_batch.model_result,
'total_count': cur_batch.total_count(),
'done_count': cur_batch.done_count(),
'batch_id': cur_batch.batch_id,
'remaining_batch_count': len(task_batch_queue) }

PromptServer.instance.send_sync("cm-queue-status", res)
cur_batch.finalize()
task_batch_queue.popleft()
continue
logging.info("\nAfter restarting ComfyUI, please refresh the browser.")
PromptServer.instance.send_sync("cm-queue-status",
{'status': 'done',
'nodepack_result': nodepack_result, 'model_result': model_result,
'total_count': total_count, 'done_count': done_count})
nodepack_result = {}
task_queue = queue.Queue()
return # terminate worker thread

with task_worker_lock:
kind, item = cur_batch.get_next()
kind, item = task_queue.get()
tasks_in_progress.add((kind, item[0]))

try:
if kind == 'install':
msg = await do_install(item)
elif kind == 'enable':
msg = await do_enable(item)
elif kind == 'install-model':
msg = await do_install_model(item)
elif kind == 'update':

@@ -713,131 +635,31 @@ async def task_worker():
with task_worker_lock:
tasks_in_progress.remove((kind, item[0]))

ui_id = item[0]
if kind == 'install-model':
cur_batch.model_result[ui_id] = msg
ui_target = "model_manager"
elif kind == 'update-main':
cur_batch.nodepack_result[ui_id] = msg
ui_target = "main"
elif kind == 'update-comfyui':
cur_batch.nodepack_result['comfyui'] = msg
ui_target = "main"
elif kind == 'update':
cur_batch.nodepack_result[ui_id] = msg['msg']
ui_target = "nodepack_manager"
else:
cur_batch.nodepack_result[ui_id] = msg
ui_target = "nodepack_manager"
ui_id = item[0]
if kind == 'install-model':
model_result[ui_id] = msg
ui_target = "model_manager"
elif kind == 'update-main':
nodepack_result[ui_id] = msg
ui_target = "main"
elif kind == 'update-comfyui':
nodepack_result['comfyui'] = msg
ui_target = "main"
elif kind == 'update':
nodepack_result[ui_id] = msg['msg']
ui_target = "nodepack_manager"
else:
nodepack_result[ui_id] = msg
ui_target = "nodepack_manager"

cur_batch.stats[kind] = cur_batch.stats.get(kind, 0) + 1
stats[kind] = stats.get(kind, 0) + 1

PromptServer.instance.send_sync("cm-queue-status",
{'status': 'in_progress',
'target': item[0],
'batch_id': cur_batch.batch_id,
'ui_target': ui_target,
'total_count': cur_batch.total_count(),
'done_count': cur_batch.done_count()})
{'status': 'in_progress', 'target': item[0], 'ui_target': ui_target,
'total_count': total_count, 'done_count': done_count})


@routes.post("/v2/manager/queue/batch")
async def queue_batch(request):
json_data = await request.json()

failed = set()

for k, v in json_data.items():
if k == 'update_all':
await _update_all({'mode': v})

elif k == 'reinstall':
for x in v:
res = await _uninstall_custom_node(x)
if res.status != 200:
failed.add(x[0])
else:
res = await _install_custom_node(x)
if res.status != 200:
failed.add(x[0])

elif k == 'install':
for x in v:
res = await _install_custom_node(x)
if res.status != 200:
failed.add(x[0])

elif k == 'uninstall':
for x in v:
res = await _uninstall_custom_node(x)
if res.status != 200:
failed.add(x[0])

elif k == 'update':
for x in v:
res = await _update_custom_node(x)
if res.status != 200:
failed.add(x[0])

elif k == 'update_comfyui':
await update_comfyui(None)

elif k == 'disable':
for x in v:
await _disable_node(x)

elif k == 'install_model':
for x in v:
res = await _install_model(x)
if res.status != 200:
failed.add(x[0])

elif k == 'fix':
for x in v:
res = await _fix_custom_node(x)
if res.status != 200:
failed.add(x[0])

with task_worker_lock:
finalize_temp_queue_batch(json_data, failed)
_queue_start()

return web.json_response({"failed": list(failed)}, content_type='application/json')


@routes.get("/v2/manager/queue/history_list")
async def get_history_list(request):
history_path = core.manager_batch_history_path

try:
files = [os.path.join(history_path, f) for f in os.listdir(history_path) if os.path.isfile(os.path.join(history_path, f))]
files.sort(key=lambda x: os.path.getmtime(x), reverse=True)
history_ids = [os.path.basename(f)[:-5] for f in files]

return web.json_response({"ids": list(history_ids)}, content_type='application/json')
except Exception as e:
logging.error(f"[ComfyUI-Manager] /v2/manager/queue/history_list - {e}")
return web.Response(status=400)


@routes.get("/v2/manager/queue/history")
async def get_history(request):
try:
json_name = request.rel_url.query["id"]+'.json'
batch_path = os.path.join(core.manager_batch_history_path, json_name)

with open(batch_path, 'r', encoding='utf-8') as file:
json_str = file.read()
json_obj = json.loads(json_str)
return web.json_response(json_obj, content_type='application/json')

except Exception as e:
logging.error(f"[ComfyUI-Manager] /v2/manager/queue/history - {e}")

return web.Response(status=400)


@routes.get("/v2/customnode/getmappings")
@routes.get("/customnode/getmappings")
async def fetch_customnode_mappings(request):
"""
provide unified (node -> node pack) mapping list
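The `get_history_list` handler in the hunk above derives batch ids by listing the history directory, sorting by modification time (newest first), and dropping the last five characters of each file name. A standalone sketch of that listing step follows. `list_history_ids` and the temporary files are hypothetical; unlike the hunk, the sketch also skips files that do not end in `.json`, which is an added assumption.

```python
import os
import tempfile


def list_history_ids(history_path):
    """Hypothetical helper: ids of '*.json' history files, newest first."""
    files = [
        os.path.join(history_path, f)
        for f in os.listdir(history_path)
        if os.path.isfile(os.path.join(history_path, f)) and f.endswith(".json")
    ]
    files.sort(key=os.path.getmtime, reverse=True)
    # splitext removes the extension without assuming its length.
    return [os.path.splitext(os.path.basename(f))[0] for f in files]


with tempfile.TemporaryDirectory() as tmp:
    for i, name in enumerate(["a.json", "b.json", "notes.txt"]):
        path = os.path.join(tmp, name)
        with open(path, "w", encoding="utf-8") as fh:
            fh.write("{}")
        # Explicit, distinct modification times keep the order deterministic.
        os.utime(path, (1_000_000 + i, 1_000_000 + i))
    ids = list_history_ids(tmp)
```

Filtering on the suffix keeps a stray non-JSON file in the directory from turning into a truncated id.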

@@ -873,7 +695,7 @@ async def fetch_customnode_mappings(request):
return web.json_response(json_obj, content_type='application/json')


@routes.get("/v2/customnode/fetch_updates")
@routes.get("/customnode/fetch_updates")
async def fetch_updates(request):
try:
if request.rel_url.query["mode"] == "local":

@@ -900,13 +722,8 @@ async def fetch_updates(request):
return web.Response(status=400)


@routes.get("/v2/manager/queue/update_all")
@routes.get("/manager/queue/update_all")
async def update_all(request):
json_data = dict(request.rel_url.query)
return await _update_all(json_data)


async def _update_all(json_data):
if not is_allowed_security_level('middle'):
logging.error(SECURITY_MESSAGE_MIDDLE_OR_BELOW)
return web.Response(status=403)

@@ -918,13 +735,13 @@ async def _update_all(json_data):

await core.save_snapshot_with_postfix('autosave')

if json_data["mode"] == "local":
if request.rel_url.query["mode"] == "local":
channel = 'local'
else:
channel = core.get_config()['channel_url']

await core.unified_manager.reload(json_data["mode"])
await core.unified_manager.get_custom_nodes(channel, json_data["mode"])
await core.unified_manager.reload(request.rel_url.query["mode"])
await core.unified_manager.get_custom_nodes(channel, request.rel_url.query["mode"])

for k, v in core.unified_manager.active_nodes.items():
if k == 'comfyui-manager':

@@ -933,7 +750,7 @@ async def _update_all(json_data):
continue

update_item = k, k, v[0]
temp_queue_batch.append(("update-main", update_item))
task_queue.put(("update-main", update_item))

for k, v in core.unified_manager.unknown_active_nodes.items():
if k == 'comfyui-manager':

@@ -942,7 +759,7 @@ async def _update_all(json_data):
continue

update_item = k, k, 'unknown'
temp_queue_batch.append(("update-main", update_item))
task_queue.put(("update-main", update_item))

return web.Response(status=200)


@@ -993,7 +810,7 @@ def populate_markdown(x):

# freeze imported version
startup_time_installed_node_packs = core.get_installed_node_packs()
@routes.get("/v2/customnode/installed")
@routes.get("/customnode/installed")
async def installed_list(request):
mode = request.query.get('mode', 'default')


@@ -1005,7 +822,7 @@ async def installed_list(request):
return web.json_response(res, content_type='application/json')


@routes.get("/v2/customnode/getlist")
@routes.get("/customnode/getlist")
async def fetch_customnode_list(request):
"""
provide unified custom node list

@@ -1118,7 +935,7 @@ def check_model_installed(json_obj):
executor.submit(process_model_phase, item)


@routes.get("/v2/externalmodel/getlist")
@routes.get("/externalmodel/getlist")
async def fetch_externalmodel_list(request):
# The model list is only allowed in the default channel, yet.
json_obj = await core.get_data_by_mode(request.rel_url.query["mode"], 'model-list.json')

@@ -1131,14 +948,14 @@ async def fetch_externalmodel_list(request):
return web.json_response(json_obj, content_type='application/json')


@PromptServer.instance.routes.get("/v2/snapshot/getlist")
@PromptServer.instance.routes.get("/snapshot/getlist")
async def get_snapshot_list(request):
items = [f[:-5] for f in os.listdir(core.manager_snapshot_path) if f.endswith('.json')]
items.sort(reverse=True)
return web.json_response({'items': items}, content_type='application/json')


@routes.get("/v2/snapshot/remove")
@routes.get("/snapshot/remove")
async def remove_snapshot(request):
if not is_allowed_security_level('middle'):
logging.error(SECURITY_MESSAGE_MIDDLE_OR_BELOW)

@@ -1156,7 +973,7 @@ async def remove_snapshot(request):
return web.Response(status=400)


@routes.get("/v2/snapshot/restore")
@routes.get("/snapshot/restore")
async def restore_snapshot(request):
if not is_allowed_security_level('middle'):
logging.error(SECURITY_MESSAGE_MIDDLE_OR_BELOW)

@@ -1182,7 +999,7 @@ async def restore_snapshot(request):
return web.Response(status=400)


@routes.get("/v2/snapshot/get_current")
@routes.get("/snapshot/get_current")
async def get_current_snapshot_api(request):
try:
return web.json_response(await core.get_current_snapshot(), content_type='application/json')

@@ -1190,7 +1007,7 @@ async def get_current_snapshot_api(request):
return web.Response(status=400)


@routes.get("/v2/snapshot/save")
@routes.get("/snapshot/save")
async def save_snapshot(request):
try:
await core.save_snapshot_with_postfix('snapshot')

@@ -1227,7 +1044,82 @@ def unzip_install(files):
return True


@routes.get("/v2/customnode/versions/{node_name}")
def copy_install(files, js_path_name=None):
for url in files:
if url.endswith("/"):
url = url[:-1]
try:
filename = os.path.basename(url)
if url.endswith(".py"):
download_url(url, core.get_default_custom_nodes_path(), filename)
else:
path = os.path.join(core.js_path, js_path_name) if js_path_name is not None else core.js_path
if not os.path.exists(path):
os.makedirs(path)
download_url(url, path, filename)

except Exception as e:
logging.error(f"Install(copy) error: {url} / {e}")
return False

logging.info("Installation was successful.")
return True


def copy_uninstall(files, js_path_name='.'):
for url in files:
if url.endswith("/"):
url = url[:-1]
dir_name = os.path.basename(url)
base_path = core.get_default_custom_nodes_path() if url.endswith('.py') else os.path.join(core.js_path, js_path_name)
file_path = os.path.join(base_path, dir_name)

try:
if os.path.exists(file_path):
os.remove(file_path)
elif os.path.exists(file_path + ".disabled"):
os.remove(file_path + ".disabled")
except Exception as e:
logging.error(f"Uninstall(copy) error: {url} / {e}")
return False

logging.info("Uninstallation was successful.")
return True


def copy_set_active(files, is_disable, js_path_name='.'):
if is_disable:
action_name = "Disable"
else:
action_name = "Enable"

for url in files:
if url.endswith("/"):
url = url[:-1]
dir_name = os.path.basename(url)
base_path = core.get_default_custom_nodes_path() if url.endswith('.py') else os.path.join(core.js_path, js_path_name)
file_path = os.path.join(base_path, dir_name)

try:
if is_disable:
current_name = file_path
new_name = file_path + ".disabled"
else:
current_name = file_path + ".disabled"
new_name = file_path

os.rename(current_name, new_name)

except Exception as e:
logging.error(f"{action_name}(copy) error: {url} / {e}")

return False

logging.info(f"{action_name} was successful.")
return True


@routes.get("/customnode/versions/{node_name}")
async def get_cnr_versions(request):