Compare commits

25 Commits

feat/manag

...

3.0.1

| Author | SHA1 | Date |
|---|---|---|
| | 285d0c067f | |
| | a6956ddafb | |
| | f2bc05f5f1 | |
| | a534296d97 | |
| | 722b40df80 | |
| | 8c58353e25 | |
| | e3aea8c3e2 | |
| | 3b8b91d648 | |
| | a735f60790 | |
| | 7bc1a944af | |
| | 164f25fb43 | |
| | 57861e9cbd | |
| | fd62ada1a6 | |
| | 929453d105 | |
| | 559d4c1185 | |
| | c1fc8aabdc | |
| | 851742e5e7 | |
| | adf3f8d094 | |
| | 95df2ad212 | |
| | b333342d5f | |
| | e34b59f16d | |
| | f8306c0896 | |
| | f6572e3f5a | |
| | c694764c7a | |
| | da42eca04a | |

@@ -1 +0,0 @@

|

||||

PYPI_TOKEN=your-pypi-token

|

||||

70

.github/workflows/ci.yml

vendored

@@ -1,70 +0,0 @@

|

||||

name: CI

|

||||

|

||||

on:

|

||||

push:

|

||||

branches: [ main, feat/*, fix/* ]

|

||||

pull_request:

|

||||

branches: [ main ]

|

||||

|

||||

jobs:

|

||||

validate-openapi:

|

||||

name: Validate OpenAPI Specification

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

|

||||

- name: Check if OpenAPI changed

|

||||

id: openapi-changed

|

||||

uses: tj-actions/changed-files@v44

|

||||

with:

|

||||

files: openapi.yaml

|

||||

|

||||

- name: Setup Node.js

|

||||

if: steps.openapi-changed.outputs.any_changed == 'true'

|

||||

uses: actions/setup-node@v4

|

||||

with:

|

||||

node-version: '18'

|

||||

|

||||

- name: Install Redoc CLI

|

||||

if: steps.openapi-changed.outputs.any_changed == 'true'

|

||||

run: |

|

||||

npm install -g @redocly/cli

|

||||

|

||||

- name: Validate OpenAPI specification

|

||||

if: steps.openapi-changed.outputs.any_changed == 'true'

|

||||

run: |

|

||||

redocly lint openapi.yaml

|

||||

|

||||

code-quality:

|

||||

name: Code Quality Checks

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

fetch-depth: 0 # Fetch all history for proper diff

|

||||

|

||||

- name: Get changed Python files

|

||||

id: changed-py-files

|

||||

uses: tj-actions/changed-files@v44

|

||||

with:

|

||||

files: |

|

||||

**/*.py

|

||||

files_ignore: |

|

||||

comfyui_manager/legacy/**

|

||||

|

||||

- name: Setup Python

|

||||

if: steps.changed-py-files.outputs.any_changed == 'true'

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: '3.9'

|

||||

|

||||

- name: Install dependencies

|

||||

if: steps.changed-py-files.outputs.any_changed == 'true'

|

||||

run: |

|

||||

pip install ruff

|

||||

|

||||

- name: Run ruff linting on changed files

|

||||

if: steps.changed-py-files.outputs.any_changed == 'true'

|

||||

run: |

|

||||

echo "Changed files: ${{ steps.changed-py-files.outputs.all_changed_files }}"

|

||||

echo "${{ steps.changed-py-files.outputs.all_changed_files }}" | xargs -r ruff check

|

||||

58

.github/workflows/publish-to-pypi.yml

vendored

@@ -1,58 +0,0 @@

|

||||

name: Publish to PyPI

|

||||

|

||||

on:

|

||||

workflow_dispatch:

|

||||

push:

|

||||

branches:

|

||||

- manager-v4

|

||||

paths:

|

||||

- "pyproject.toml"

|

||||

|

||||

jobs:

|

||||

build-and-publish:

|

||||

runs-on: ubuntu-latest

|

||||

if: ${{ github.repository_owner == 'ltdrdata' || github.repository_owner == 'Comfy-Org' }}

|

||||

steps:

|

||||

- name: Checkout code

|

||||

uses: actions/checkout@v4

|

||||

with:

|

||||

fetch-depth: 0

|

||||

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v4

|

||||

with:

|

||||

python-version: '3.x'

|

||||

|

||||

- name: Install build dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

python -m pip install build twine

|

||||

|

||||

- name: Get current version

|

||||

id: current_version

|

||||

run: |

|

||||

CURRENT_VERSION=$(grep -oP '^version = "\K[^"]+' pyproject.toml)

|

||||

echo "version=$CURRENT_VERSION" >> $GITHUB_OUTPUT

|

||||

echo "Current version: $CURRENT_VERSION"

|

||||

|

||||

- name: Build package

|

||||

run: python -m build

|

||||

|

||||

# - name: Create GitHub Release

|

||||

# id: create_release

|

||||

# uses: softprops/action-gh-release@v2

|

||||

# env:

|

||||

# GITHUB_TOKEN: ${{ github.token }}

|

||||

# with:

|

||||

# files: dist/*

|

||||

# tag_name: v${{ steps.current_version.outputs.version }}

|

||||

# draft: false

|

||||

# prerelease: false

|

||||

# generate_release_notes: true

|

||||

|

||||

- name: Publish to PyPI

|

||||

uses: pypa/gh-action-pypi-publish@76f52bc884231f62b9a034ebfe128415bbaabdfc

|

||||

with:

|

||||

password: ${{ secrets.PYPI_TOKEN }}

|

||||

skip-existing: true

|

||||

verbose: true

|

||||
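The `Get current version` step in the removed workflow reads the version with `grep -oP`. A rough Python equivalent is sketched here, assuming the same `version = "..."` line layout in `pyproject.toml`. The helper name `read_version` is illustrative and is not part of the workflow.

```python
import re
from typing import Optional


def read_version(pyproject_text: str) -> Optional[str]:
    """Return the first line-anchored version value, or None if absent."""
    # Same idea as the workflow's grep pattern: anchor at the start of a
    # line, then capture everything up to the closing double quote.
    match = re.search(r'^version = "([^"]+)', pyproject_text, re.MULTILINE)
    return match.group(1) if match else None


# Hypothetical pyproject.toml content, used only for illustration.
sample = '[project]\nname = "comfyui-manager"\nversion = "0.0.1rc1"\n'
print(read_version(sample))
```

Like the `grep` call, this matches only the exact spelling `version = "` at the start of a line; a TOML parser would be more robust if the file layout varies.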

21

.github/workflows/publish.yml

vendored

Normal file

@@ -0,0 +1,21 @@

|

||||

name: Publish to Comfy registry

|

||||

on:

|

||||

workflow_dispatch:

|

||||

push:

|

||||

branches:

|

||||

- main

|

||||

paths:

|

||||

- "pyproject.toml"

|

||||

|

||||

jobs:

|

||||

publish-node:

|

||||

name: Publish Custom Node to registry

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Check out code

|

||||

uses: actions/checkout@v4

|

||||

- name: Publish Custom Node

|

||||

uses: Comfy-Org/publish-node-action@main

|

||||

with:

|

||||

## Add your own personal access token to your Github Repository secrets and reference it here.

|

||||

personal_access_token: ${{ secrets.REGISTRY_ACCESS_TOKEN }}

|

||||

23

.github/workflows/ruff.yml

vendored

@@ -1,23 +0,0 @@

|

||||

name: Python Linting

|

||||

|

||||

on: [push, pull_request]

|

||||

|

||||

jobs:

|

||||

ruff:

|

||||

name: Run Ruff

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

steps:

|

||||

- name: Checkout repository

|

||||

uses: actions/checkout@v4

|

||||

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: 3.x

|

||||

|

||||

- name: Install Ruff

|

||||

run: pip install ruff

|

||||

|

||||

- name: Run Ruff

|

||||

run: ruff check .

|

||||

5

.gitignore

vendored

@@ -17,8 +17,3 @@ github-stats-cache.json

|

||||

pip_overrides.json

|

||||

*.json

|

||||

check2.sh

|

||||

/venv/

|

||||

build

|

||||

dist

|

||||

*.egg-info

|

||||

.env

|

||||

@@ -1,47 +0,0 @@

|

||||

## Testing Changes

|

||||

|

||||

1. Activate the ComfyUI environment.

|

||||

|

||||

2. Build package locally after making changes.

|

||||

|

||||

```bash

|

||||

# from inside the ComfyUI-Manager directory, with the ComfyUI environment activated

|

||||

python -m build

|

||||

```

|

||||

|

||||

3. Install the package locally in the ComfyUI environment.

|

||||

|

||||

```bash

|

||||

# Uninstall existing package

|

||||

pip uninstall comfyui-manager

|

||||

|

||||

# Install the local package

|

||||

pip install dist/comfyui-manager-*.whl

|

||||

```

|

||||

|

||||

4. Start ComfyUI.

|

||||

|

||||

```bash

|

||||

# after navigating to the ComfyUI directory

|

||||

python main.py

|

||||

```

|

||||

|

||||

## Manually Publish Test Version to PyPI

|

||||

|

||||

1. Set the `PYPI_TOKEN` environment variable in env file.

|

||||

|

||||

2. If manually publishing, you likely want to use a release candidate version, so set the version in [pyproject.toml](pyproject.toml) to something like `0.0.1rc1`.

|

||||

|

||||

3. Build the package.

|

||||

|

||||

```bash

|

||||

python -m build

|

||||

```

|

||||

|

||||

4. Upload the package to PyPI.

|

||||

|

||||

```bash

|

||||

python -m twine upload dist/* --username __token__ --password $PYPI_TOKEN

|

||||

```

|

||||

|

||||

5. View at https://pypi.org/project/comfyui-manager/

|

||||

15

MANIFEST.in

@@ -1,15 +0,0 @@

|

||||

include comfyui_manager/js/*

|

||||

include comfyui_manager/*.json

|

||||

include comfyui_manager/glob/*

|

||||

include LICENSE.txt

|

||||

include README.md

|

||||

include requirements.txt

|

||||

include pyproject.toml

|

||||

include custom-node-list.json

|

||||

include extension-node-list.json

|

||||

include extras.json

|

||||

include github-stats.json

|

||||

include model-list.json

|

||||

include alter-list.json

|

||||

include comfyui_manager/channels.list.template

|

||||

include comfyui_manager/pip-policy.json

|

||||

285

README.md

@@ -1,51 +1,60 @@

|

||||

# ComfyUI Manager

|

||||

# ComfyUI Manager (Extension)

|

||||

|

||||

**ComfyUI-Manager** is an extension designed to enhance the usability of [ComfyUI](https://github.com/comfyanonymous/ComfyUI). It offers management functions to **install, remove, disable, and enable** various custom nodes of ComfyUI. Furthermore, this extension provides a hub feature and convenience functions to access a wide range of information within ComfyUI.

|

||||

**ComfyUI-Manager (Extension)** expands the functionality of [manager-core](https://github.com/Comfy-Org/manager-core), allowing users to access the existing ComfyUI-Manager features.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## NOTICE

|

||||

* V4.0: Modify the structure to be installable via pip instead of using git clone.

|

||||

* V3.16: Support for `uv` has been added. Set `use_uv` in `config.ini`.

|

||||

* V3.10: `double-click feature` is removed

|

||||

* This feature has been moved to https://github.com/ltdrdata/comfyui-connection-helper

|

||||

* V3.3.2: Overhauled. Officially supports [https://registry.comfy.org/](https://registry.comfy.org/).

|

||||

* You can see whole nodes info on [ComfyUI Nodes Info](https://ltdrdata.github.io/) page.

|

||||

* V3.0: ComfyUI-Manager (Extension)

|

||||

|

||||

|

||||

## Installation

|

||||

|

||||

* When installing the latest ComfyUI, it will be automatically installed as a dependency, so manual installation is no longer necessary.

|

||||

### Installation[method1] installation via manager-core (Recommended)

|

||||

|

||||

* Manual installation of the nightly version:

|

||||

* Clone to a temporary directory (**Note:** Do **not** clone into `ComfyUI/custom_nodes`.)

|

||||

```

|

||||

git clone https://github.com/Comfy-Org/ComfyUI-Manager

|

||||

```

|

||||

* Install via pip

|

||||

```

|

||||

cd ComfyUI-Manager

|

||||

pip install .

|

||||

```

|

||||

|

||||

* See also: https://github.com/Comfy-Org/comfy-cli

|

||||

Search for ComfyUI-Manager in the custom node installation feature of manager-core and install it.

|

||||

|

||||

|

||||

## Front-end

|

||||

### Installation[method1] manual (Not Recommended)

|

||||

|

||||

* The built-in front-end of ComfyUI-Manager is the legacy front-end. The front-end for ComfyUI-Manager is now provided via [ComfyUI Frontend](https://github.com/Comfy-Org/ComfyUI_frontend).

|

||||

* To enable the legacy front-end, set the environment variable `ENABLE_LEGACY_COMFYUI_MANAGER_FRONT` to `true` before running.

|

||||

To install ComfyUI-Manager in addition to an existing installation of ComfyUI, you can follow the following steps:

|

||||

|

||||

1. Go to the `ComfyUI/custom_nodes` directory in a terminal (cmd)

|

||||

2. `git clone https://github.com/ltdrdata/ComfyUI-Manager.git`

|

||||

3. Restart ComfyUI

|

||||

|

||||

|

||||

### Installation Precautions

|

||||

* **DO**: `ComfyUI-Manager` files must be accurately located in the path `ComfyUI/custom_nodes/ComfyUI-Manager`

|

||||

* Installing in a compressed file format is not recommended.

|

||||

* **DON'T**: Decompress directly into the `ComfyUI/custom_nodes` location, resulting in the Manager contents like `__init__.py` being placed directly in that directory.

|

||||

* You have to remove all ComfyUI-Manager files from `ComfyUI/custom_nodes`

|

||||

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager/ComfyUI-Manager`.

|

||||

* You have to move `ComfyUI/custom_nodes/ComfyUI-Manager/ComfyUI-Manager` to `ComfyUI/custom_nodes/ComfyUI-Manager`

|

||||

* **DON'T**: In a form where decompression occurs in a path such as `ComfyUI/custom_nodes/ComfyUI-Manager-main`.

|

||||

* In such cases, `ComfyUI-Manager` may operate, but it won't be recognized within `ComfyUI-Manager`, and updates cannot be performed. It also poses the risk of duplicate installations.

|

||||

* You have to rename `ComfyUI/custom_nodes/ComfyUI-Manager-main` to `ComfyUI/custom_nodes/ComfyUI-Manager`

|

||||

|

||||

|

||||

|

||||

## Colab Notebook

|

||||

This repository provides Colab notebooks that allow you to install and use ComfyUI, including ComfyUI-Manager. To use ComfyUI, [click on this link](https://colab.research.google.com/github/ltdrdata/ComfyUI-Manager/blob/main/notebooks/comfyui_colab_with_manager.ipynb).

|

||||

* Support for installing ComfyUI

|

||||

* Support for basic installation of ComfyUI-Manager

|

||||

* Support for automatically installing dependencies of custom nodes upon restarting Colab notebooks.

|

||||

|

||||

|

||||

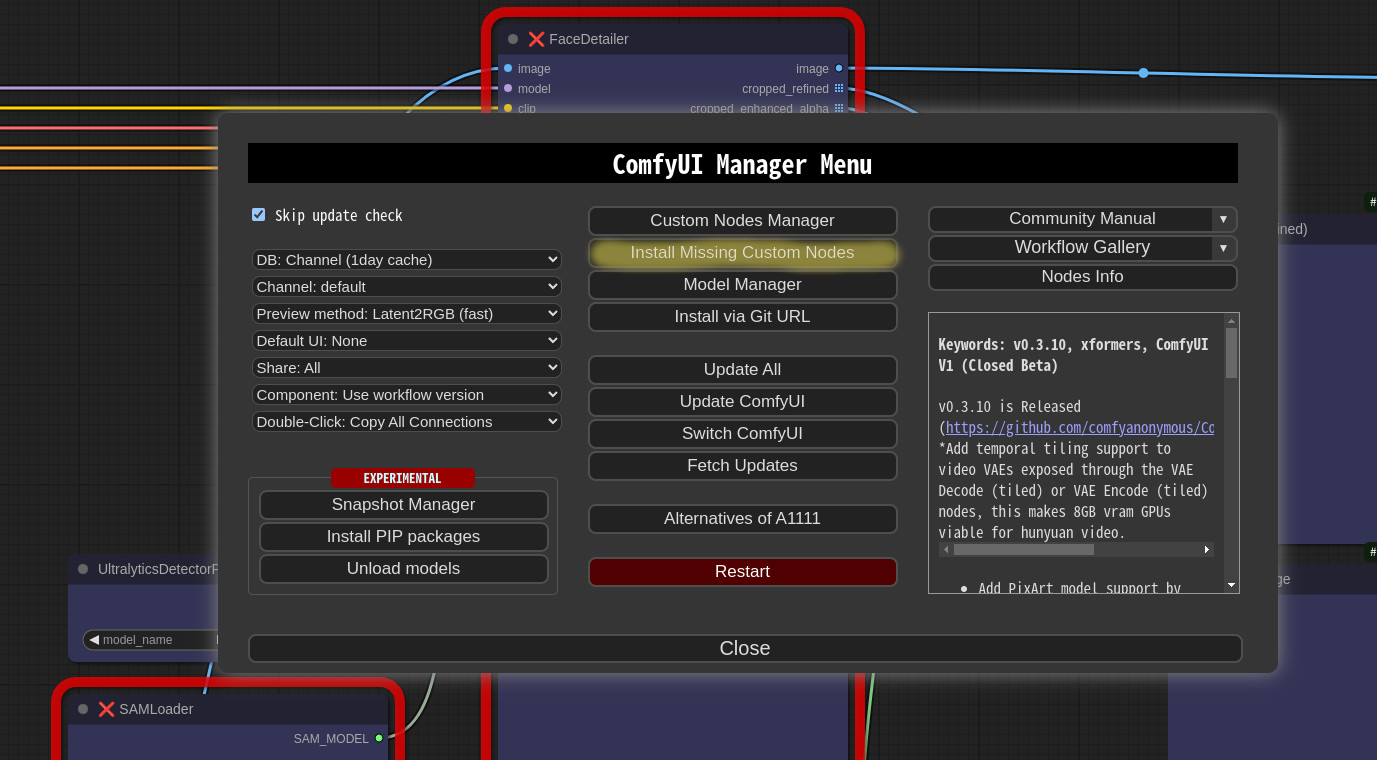

## How To Use

|

||||

|

||||

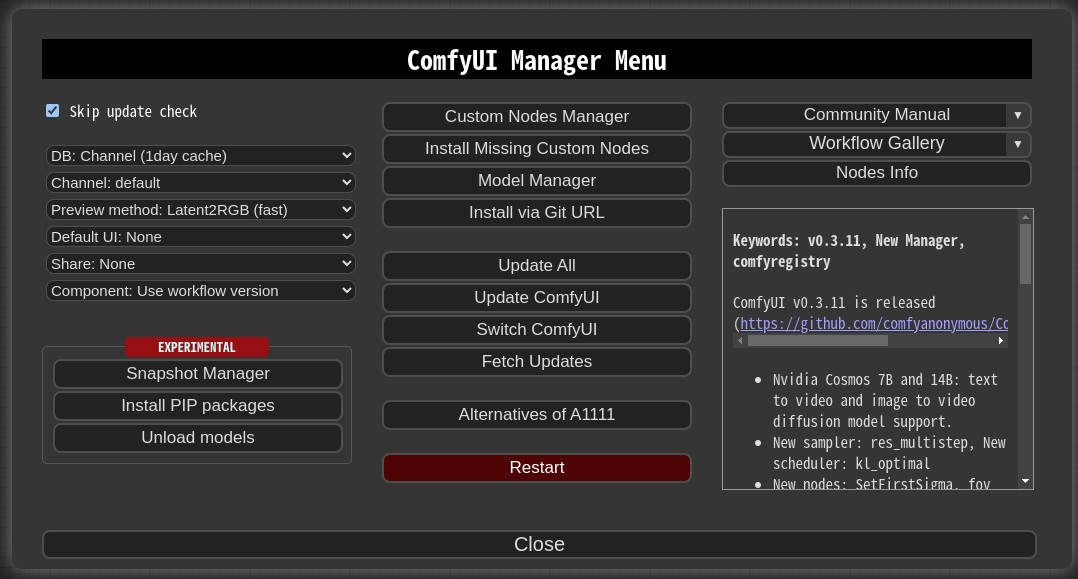

1. Click "Manager" button on main menu


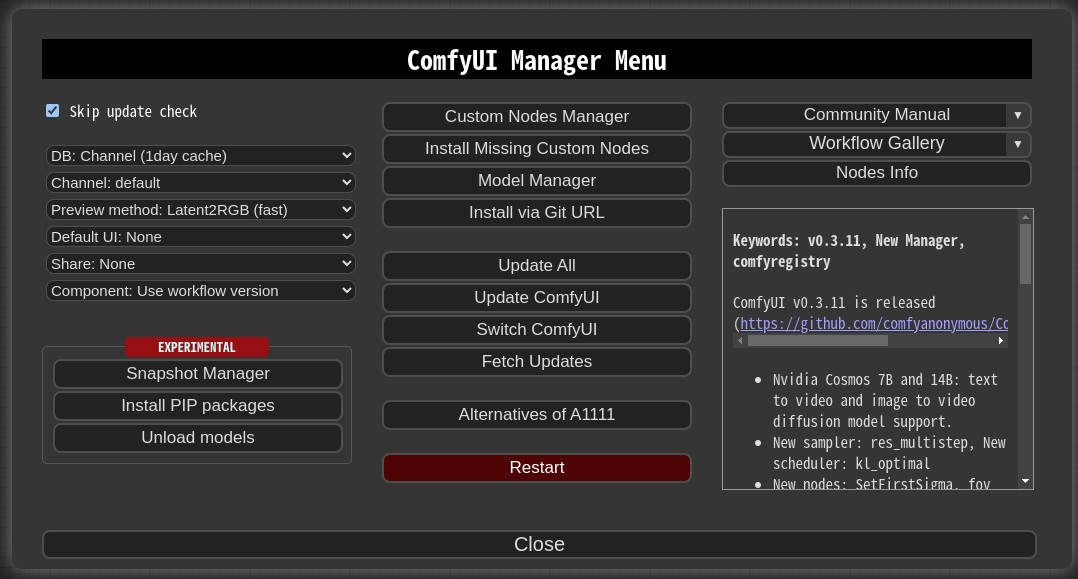

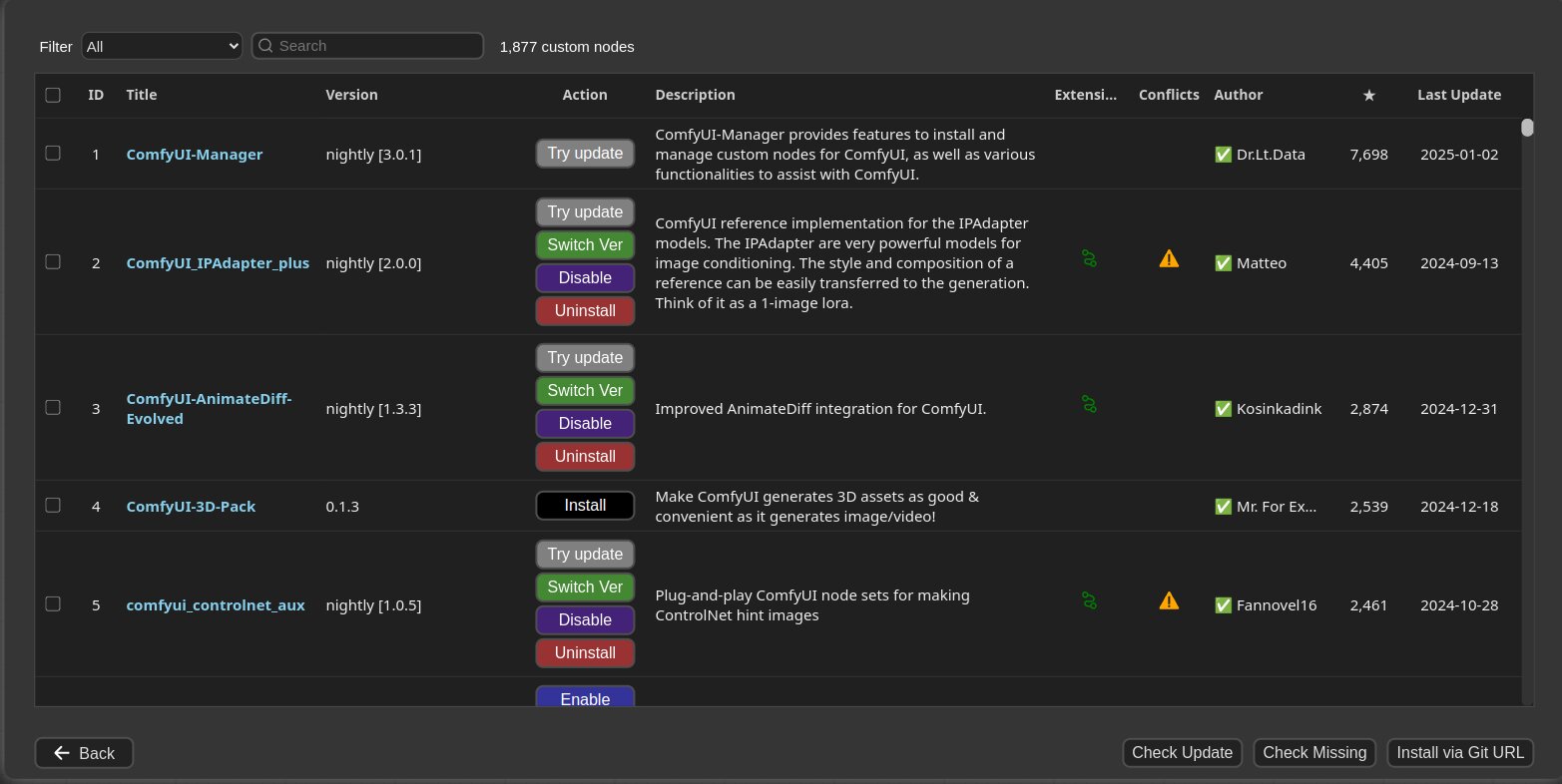

2. If you click on 'Install Custom Nodes' or 'Install Models', an installer dialog will open.


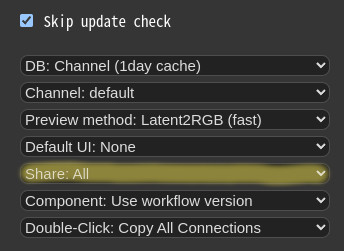

* There are three DB modes: `DB: Channel (1day cache)`, `DB: Local`, and `DB: Channel (remote)`.

|

||||

* `Channel (1day cache)` utilizes Channel cache information with a validity period of one day to quickly display the list.

|

||||

@@ -61,9 +70,9 @@

|

||||

|

||||

3. Click 'Install' or 'Try Install' button.


* Installed: This item is already installed.

|

||||

* Install: Clicking this button will install the item.

|

||||

@@ -73,56 +82,39 @@

|

||||

* Channel settings have a broad impact, affecting not only the node list but also all functions like "Update all."

|

||||

* Conflicted Nodes with a yellow background show a list of nodes conflicting with other extensions in the respective extension. This issue needs to be addressed by the developer, and users should be aware that due to these conflicts, some nodes may not function correctly and may need to be installed accordingly.

|

||||

|

||||

4. Share

|

||||

|

||||

4. If you set the `Badge:` item in the menu as `Badge: Nickname`, `Badge: Nickname (hide built-in)`, `Badge: #ID Nickname`, `Badge: #ID Nickname (hide built-in)` the information badge will be displayed on the node.

|

||||

* When selecting (hide built-in), it hides the 🦊 icon, which signifies built-in nodes.

|

||||

* Nodes without any indication on the badge are custom nodes that Manager cannot recognize.

|

||||

* `Badge: Nickname` displays the nickname of custom nodes, while `Badge: #ID Nickname` also includes the internal ID of the node.

|

||||

|

||||

|

||||

|

||||

|

||||

5. Share

|

||||

|

||||

|

||||

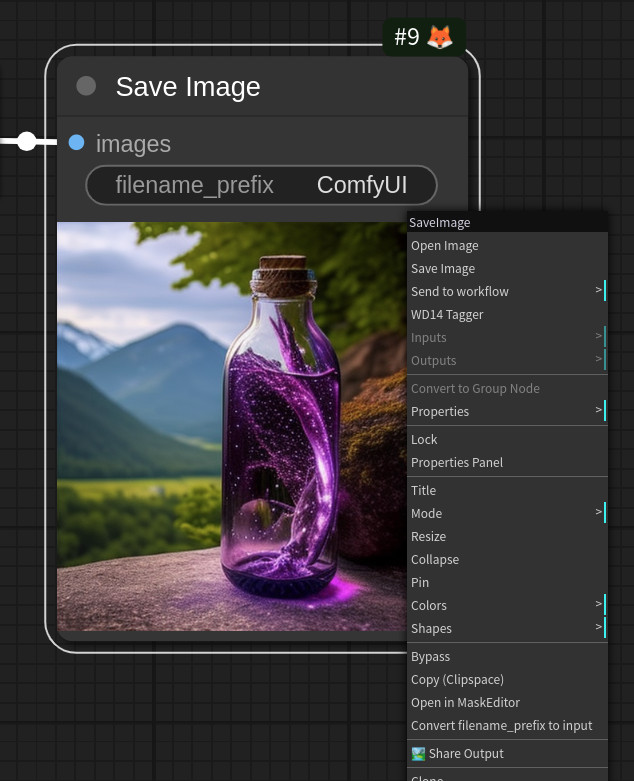

* You can share the workflow by clicking the Share button at the bottom of the main menu or selecting Share Output from the Context Menu of the Image node.

|

||||

* Currently, it supports sharing via [https://comfyworkflows.com/](https://comfyworkflows.com/),

|

||||

[https://openart.ai](https://openart.ai/workflows/dev), [https://youml.com](https://youml.com)

|

||||

as well as through the Matrix channel.

|

||||

|

||||

|

||||

|

||||

|

||||

* Through the Share settings in the Manager menu, you can configure the behavior of the Share button in the Main menu or Share Output button on Context Menu.

|

||||

* Through the Share settings in the Manager menu, you can configure the behavior of the Share button in the Main menu or Share Ouput button on Context Menu.

|

||||

* `None`: hide from Main menu

|

||||

* `All`: Show a dialog where the user can select a title for sharing.

|

||||

|

||||

|

||||

## Paths

|

||||

In `ComfyUI-Manager` V3.0 and later, configuration files and dynamically generated files are located under `<USER_DIRECTORY>/default/ComfyUI-Manager/`.

|

||||

|

||||

* <USER_DIRECTORY>

|

||||

* If executed without any options, the path defaults to ComfyUI/user.

|

||||

* It can be set using --user-directory <USER_DIRECTORY>.

|

||||

|

||||

* Basic config files: `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini`

|

||||

* Configurable channel lists: `<USER_DIRECTORY>/default/ComfyUI-Manager/channels.ini`

|

||||

* Configurable pip overrides: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_overrides.json`

|

||||

* Configurable pip blacklist: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_blacklist.list`

|

||||

* Configurable pip auto fix: `<USER_DIRECTORY>/default/ComfyUI-Manager/pip_auto_fix.list`

|

||||

* Saved snapshot files: `<USER_DIRECTORY>/default/ComfyUI-Manager/snapshots`

|

||||

* Startup script files: `<USER_DIRECTORY>/default/ComfyUI-Manager/startup-scripts`

|

||||

* Component files: `<USER_DIRECTORY>/default/ComfyUI-Manager/components`
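The layout listed above can be sketched as a small path helper. This is only an illustration of how the listed locations derive from `<USER_DIRECTORY>`; the function name `manager_paths` and the dictionary keys are assumptions, not names used by ComfyUI-Manager.

```python
from pathlib import Path


def manager_paths(user_directory: str = "ComfyUI/user") -> dict:
    """Build the per-file locations under <USER_DIRECTORY>/default/ComfyUI-Manager."""
    # The default mirrors the documented behaviour when --user-directory is not given.
    base = Path(user_directory) / "default" / "ComfyUI-Manager"
    return {
        "config": base / "config.ini",
        "channels": base / "channels.ini",
        "pip_overrides": base / "pip_overrides.json",
        "pip_blacklist": base / "pip_blacklist.list",
        "pip_auto_fix": base / "pip_auto_fix.list",
        "snapshots": base / "snapshots",
        "startup_scripts": base / "startup-scripts",
        "components": base / "components",
    }


for name, path in manager_paths().items():
    print(f"{name}: {path.as_posix()}")
```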

|

||||

|

||||

|

||||

## `extra_model_paths.yaml` Configuration

|

||||

The following settings are applied based on the section marked as `is_default`.

|

||||

|

||||

* `custom_nodes`: Path for installing custom nodes

|

||||

* Importing does not need to adhere to the path set as `is_default`, but this is the path where custom nodes are installed by the `ComfyUI Nodes Manager`.

|

||||

* `download_model_base`: Path for downloading models

|

||||

|

||||

|

||||

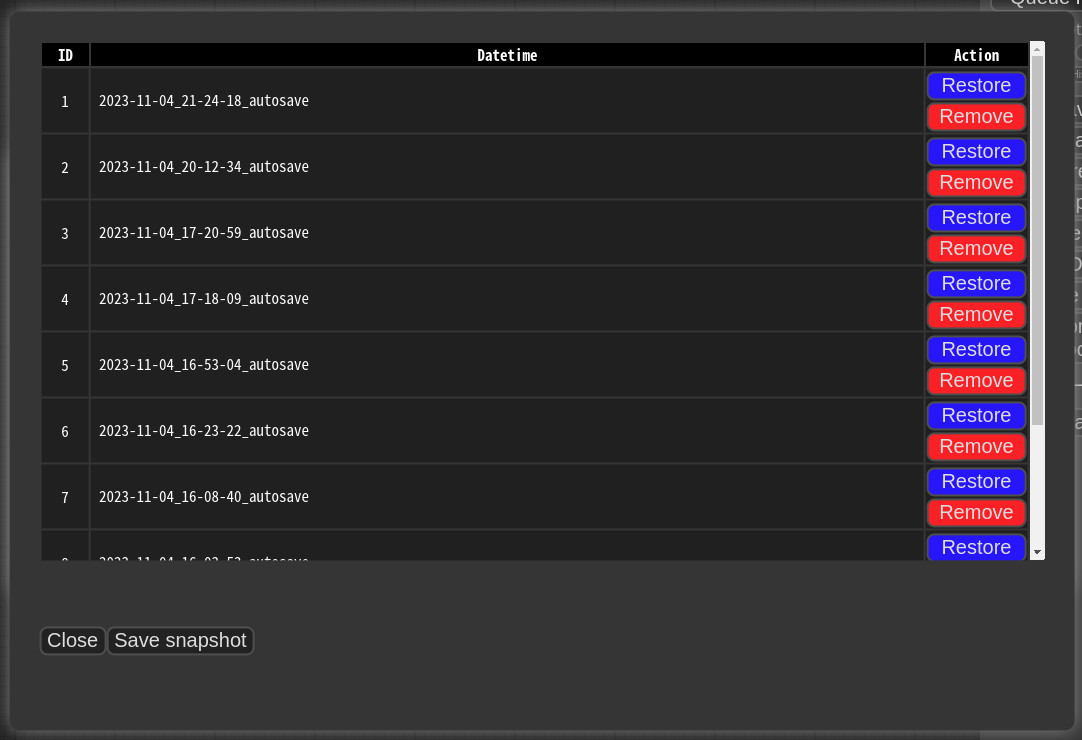

## Snapshot-Manager

|

||||

* When you press `Save snapshot` or use `Update All` on `Manager Menu`, the current installation status snapshot is saved.

|

||||

* Snapshot file dir: `<USER_DIRECTORY>/default/ComfyUI-Manager/snapshots`

|

||||

* Snapshot file dir: `ComfyUI-Manager/snapshots`

|

||||

* You can rename the snapshot file.

|

||||

* Press the "Restore" button to revert to the installation status of the respective snapshot.

|

||||

* However, for custom nodes not managed by Git, snapshot support is incomplete.

|

||||

* When you press `Restore`, it will take effect on the next ComfyUI startup.

|

||||

* The selected snapshot file is saved in `<USER_DIRECTORY>/default/ComfyUI-Manager/startup-scripts/restore-snapshot.json`, and upon restarting ComfyUI, the snapshot is applied and then deleted.

|

||||

* The selected snapshot file is saved in `ComfyUI-Manager/startup-scripts/restore-snapshot.json`, and upon restarting ComfyUI, the snapshot is applied and then deleted.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## cm-cli: command line tools for power user

|

||||

@@ -139,18 +131,48 @@ The following settings are applied based on the section marked as `is_default`.

|

||||

|

||||

## Custom node support guide

|

||||

|

||||

* **NOTICE:**

|

||||

- You should no longer assume that the GitHub repository name will match the subdirectory name under `custom_nodes`. The name of the subdirectory under `custom_nodes` will now use the normalized name from the `name` field in `pyproject.toml`.

|

||||

- Avoid relying on directory names for imports whenever possible.

|

||||

* Currently, the system operates by cloning the git repository and sequentially installing the dependencies listed in requirements.txt using pip, followed by invoking the install.py script. In the future, we plan to discuss and determine the specifications for supporting custom nodes.

|

||||

|

||||

* https://docs.comfy.org/registry/overview

|

||||

* https://github.com/Comfy-Org/rfcs

|

||||

* Please submit a pull request to update either the custom-node-list.json or model-list.json file.

|

||||

|

||||

**Special purpose files** (optional)

|

||||

* `pyproject.toml` - Spec file for comfyregistry.

|

||||

* The scanner currently provides a detection function for missing nodes, which is capable of detecting nodes described by the following two patterns.

|

||||

|

||||

```

|

||||

NODE_CLASS_MAPPINGS = {

|

||||

"ExecutionSwitch": ExecutionSwitch,

|

||||

"ExecutionBlocker": ExecutionBlocker,

|

||||

...

|

||||

}

|

||||

|

||||

NODE_CLASS_MAPPINGS.update({

|

||||

"UniFormer-SemSegPreprocessor": Uniformer_SemSegPreprocessor,

|

||||

"SemSegPreprocessor": Uniformer_SemSegPreprocessor,

|

||||

})

|

||||

```

|

||||

* Alternatively, you can manually provide a `node_list.json` file.
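To make the two detectable patterns concrete, here is a minimal sketch that collects node names from both forms using Python's `ast` module. It is not the Manager's actual scanner, whose implementation is not shown here; the function name `find_node_names` is hypothetical.

```python
import ast


def find_node_names(source: str) -> list:
    """Collect string keys from NODE_CLASS_MAPPINGS = {...} and .update({...})."""
    names = []
    for node in ast.walk(ast.parse(source)):
        mapping = None
        # Pattern 1: NODE_CLASS_MAPPINGS = { "Name": Class, ... }
        if isinstance(node, ast.Assign) and isinstance(node.value, ast.Dict):
            if any(isinstance(t, ast.Name) and t.id == "NODE_CLASS_MAPPINGS"
                   for t in node.targets):
                mapping = node.value
        # Pattern 2: NODE_CLASS_MAPPINGS.update({ "Name": Class, ... })
        elif (isinstance(node, ast.Call)
              and isinstance(node.func, ast.Attribute)
              and node.func.attr == "update"
              and isinstance(node.func.value, ast.Name)
              and node.func.value.id == "NODE_CLASS_MAPPINGS"
              and node.args
              and isinstance(node.args[0], ast.Dict)):
            mapping = node.args[0]
        if mapping is not None:
            # Keep only literal string keys; computed keys are skipped.
            names += [k.value for k in mapping.keys
                      if isinstance(k, ast.Constant) and isinstance(k.value, str)]
    return names


sample = '''
NODE_CLASS_MAPPINGS = {
    "ExecutionSwitch": ExecutionSwitch,
    "ExecutionBlocker": ExecutionBlocker,
}
NODE_CLASS_MAPPINGS.update({
    "UniFormer-SemSegPreprocessor": Uniformer_SemSegPreprocessor,
    "SemSegPreprocessor": Uniformer_SemSegPreprocessor,
})
'''
print(sorted(find_node_names(sample)))
```

Mappings built any other way (loops, imports from submodules, dictionary merges) would be missed by a sketch like this, which is the situation `node_list.json` is meant to cover.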

|

||||

|

||||

* When you write a docstring in the header of the .py file for the Node as follows, it will be used for managing the database in the Manager.

|

||||

* Currently, only the `nickname` is being used, but other parts will also be utilized in the future.

|

||||

* The `nickname` will be the name displayed on the badge of the node.

|

||||

* If there is no `nickname`, it will be truncated to 20 characters from the arbitrarily written title and used.

|

||||

```

|

||||

"""

|

||||

@author: Dr.Lt.Data

|

||||

@title: Impact Pack

|

||||

@nickname: Impact Pack

|

||||

@description: This extension offers various detector nodes and detailer nodes that allow you to configure a workflow that automatically enhances facial details. And provide iterative upscaler.

|

||||

"""

|

||||

```
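The badge-name rule described above (use `nickname`, otherwise the title truncated to 20 characters) could be sketched as follows. The parsing approach and the names `parse_header` and `badge_name` are assumptions for illustration; the Manager's real parser may differ.

```python
import re
from typing import Optional


def parse_header(source: str) -> dict:
    """Collect `@key: value` pairs from the first triple-quoted block."""
    block = re.search(r'"""(.*?)"""', source, re.DOTALL)
    if not block:
        return {}
    pairs = re.findall(r'^\s*@(\w+):\s*(.+?)\s*$', block.group(1), re.MULTILINE)
    return dict(pairs)


def badge_name(source: str) -> Optional[str]:
    """Prefer the nickname; otherwise fall back to the title cut to 20 characters."""
    info = parse_header(source)
    if "nickname" in info:
        return info["nickname"]
    title = info.get("title")
    return title[:20] if title else None


sample = '"""\n@author: Dr.Lt.Data\n@title: Impact Pack\n@nickname: Impact Pack\n"""\n'
print(badge_name(sample))
```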

|

||||

|

||||

|

||||

* **Special purpose files** (optional)

|

||||

* `node_list.json` - When your custom nodes pattern of NODE_CLASS_MAPPINGS is not conventional, it is used to manually provide a list of nodes for reference. ([example](https://github.com/melMass/comfy_mtb/raw/main/node_list.json))

|

||||

* `requirements.txt` - When installing, these pip requirements are installed automatically

|

||||

* `install.py` - When installing, it is automatically called

|

||||

* `uninstall.py` - When uninstalling, it is automatically called

|

||||

* `disable.py` - When disabled, it is automatically called

|

||||

* When installing a custom node setup `.js` file, it is recommended to write this script for disabling.

|

||||

* `enable.py` - When enabled, it is automatically called

|

||||

* **All scripts are executed from the root path of the corresponding custom node.**

|

||||

|

||||

|

||||

@@ -169,12 +191,12 @@ The following settings are applied based on the section marked as `is_default`.

|

||||

}

|

||||

```

|

||||

* `<current timestamp>` Ensure that the timestamp is always unique.

|

||||

* "components" should have the same structure as the content of the file stored in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.

|

||||

* "components" should have the same structure as the content of the file stored in ComfyUI-Manager/components.

|

||||

* `<component name>`: The name should be in the format `<prefix>::<node name>`.

|

||||

* `<component nodedata>`: The nodedata of the group node.

|

||||

* `<version>`: Only two formats are allowed: `major.minor.patch` or `major.minor`. (e.g. `1.0`, `2.2.1`)

|

||||

* `<datetime>`: Saved time

|

||||

* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in `<USER_DIRECTORY>/default/ComfyUI-Manager/components`.

|

||||

* `<packname>`: If the packname is not empty, the category becomes packname/workflow, and it is saved in the <packname>.pack file in ComfyUI-Manager/components.

|

||||

* `<category>`: If there is neither a category nor a packname, it is saved in the components category.

|

||||

```

|

||||

"version":"1.0",

|

||||

@@ -191,38 +213,11 @@ The following settings are applied based on the section marked as `is_default`.

|

||||

|

||||

## Support of missing nodes installation

|

||||

|

||||

|

||||

|

||||

|

||||

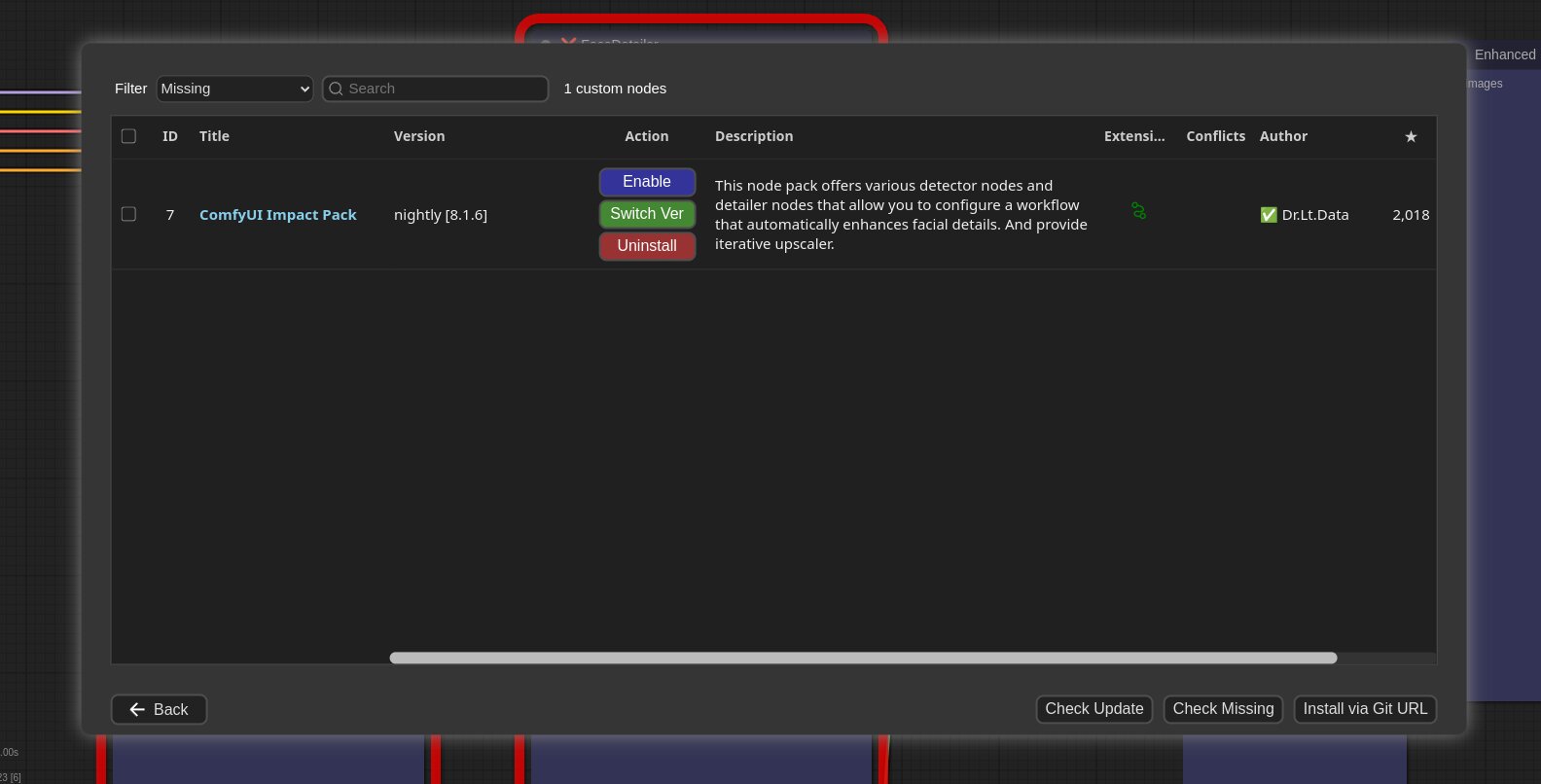

* When you click on the ```Install Missing Custom Nodes``` button in the menu, it displays a list of extension nodes that contain nodes not currently present in the workflow.

|

||||

|

||||

|

||||

|

||||

|

||||

# Config

|

||||

* You can modify the `config.ini` file to apply the settings for ComfyUI-Manager.

|

||||

* The path to the `config.ini` used by ComfyUI-Manager is displayed in the startup log messages.

|

||||

* See also: [https://github.com/ltdrdata/ComfyUI-Manager#paths]

|

||||

* Configuration options:

|

||||

```

|

||||

[default]

|

||||

git_exe = <Manually specify the path to the git executable. If left empty, the default git executable path will be used.>

|

||||

use_uv = <Use uv instead of pip for dependency installation.>

|

||||

default_cache_as_channel_url = <Determines whether to retrieve the DB designated as channel_url at startup>

|

||||

bypass_ssl = <Set to True if SSL errors occur to disable SSL.>

|

||||

file_logging = <Configure whether to create a log file used by ComfyUI-Manager.>

|

||||

windows_selector_event_loop_policy = <If an event loop error occurs on Windows, set this to True.>

|

||||

model_download_by_agent = <When downloading models, use an agent instead of torchvision_download_url.>

|

||||

downgrade_blacklist = <Set a list of packages to prevent downgrades. List them separated by commas.>

|

||||

security_level = <Set the security level => strong|normal|normal-|weak>

|

||||

always_lazy_install = <Whether to perform dependency installation on restart even in environments other than Windows.>

|

||||

network_mode = <Set the network mode => public|private|offline|personal_cloud>

|

||||

```

|

||||

|

||||

* network_mode:

|

||||

- public: An environment that uses a typical public network.

|

||||

- private: An environment that uses a closed network, where a private node DB is configured via `channel_url`. (Uses cache if available)

|

||||

- offline: An environment that does not use any external connections when using an offline network. (Uses cache if available)

|

||||

- personal_cloud: Applies relaxed security features in cloud environments such as Google Colab or Runpod, where strong security is not required.
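As an illustration of how a `config.ini` with the options above might be read, here is a minimal sketch using the standard `configparser` module. The fallback values are assumptions chosen for the example, not the Manager's documented defaults, and `load_manager_config` is a hypothetical helper.

```python
import configparser


def load_manager_config(text: str) -> dict:
    """Read a few options from the [default] section of a config.ini string."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    # Lowercase [default] is an ordinary section; only DEFAULT is special.
    section = parser["default"]
    return {
        # Fallbacks below are illustrative assumptions only.
        "use_uv": section.getboolean("use_uv", fallback=False),
        "bypass_ssl": section.getboolean("bypass_ssl", fallback=False),
        "security_level": section.get("security_level", fallback="normal"),
        "network_mode": section.get("network_mode", fallback="public"),
    }


sample = "[default]\nuse_uv = True\nsecurity_level = strong\nnetwork_mode = offline\n"
print(load_manager_config(sample))
```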

|

||||

|

||||

|

||||

|

||||

## Additional Feature

|

||||

@@ -253,40 +248,13 @@ The following settings are applied based on the section marked as `is_default`.

|

||||

* Custom pip mapping

|

||||

* When you create the `pip_overrides.json` file, it changes the installation of specific pip packages to installations defined by the user.

|

||||

* Please refer to the `pip_overrides.json.template` file.

|

||||

|

||||

* Prevent the installation of specific pip packages

|

||||

* List the package names one per line in the `pip_blacklist.list` file.

|

||||

|

||||

* Automatically Restoring pip Installation

|

||||

* If you list pip spec requirements in `pip_auto_fix.list`, similar to `requirements.txt`, it will automatically restore the specified versions when starting ComfyUI or when versions get mismatched during various custom node installations.

|

||||

* `--index-url` can be used.

|

||||

|

||||

|

||||

* Use `aria2` as downloader

|

||||

* [howto](docs/en/use_aria2.md)

|

||||

|

||||

* If you add the item `skip_migration_check = True` to `config.ini`, it will not check whether there are nodes that can be migrated at startup.

|

||||

* This option can be used if performance issues occur in a Colab+GDrive environment.

|

||||

|

||||

## Environment Variables

|

||||

|

||||

The following features can be configured using environment variables:

|

||||

|

||||

* **COMFYUI_PATH**: The installation path of ComfyUI

|

||||

* **GITHUB_ENDPOINT**: Reverse proxy configuration for environments with limited access to GitHub

|

||||

* **HF_ENDPOINT**: Reverse proxy configuration for environments with limited access to Hugging Face

|

||||

|

||||

|

||||

### Example 1:

|

||||

Redirecting `https://github.com/ltdrdata/ComfyUI-Impact-Pack` to `https://mirror.ghproxy.com/https://github.com/ltdrdata/ComfyUI-Impact-Pack`

|

||||

|

||||

```

|

||||

GITHUB_ENDPOINT=https://mirror.ghproxy.com/https://github.com

|

||||

```

|

||||

|

||||

#### Example 2:

|

||||

Changing `https://huggingface.co/path/to/somewhere` to `https://some-hf-mirror.com/path/to/somewhere`

|

||||

|

||||

```

|

||||

HF_ENDPOINT=https://some-hf-mirror.com

|

||||

```
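The two redirection examples amount to swapping a URL prefix. A minimal sketch of that idea follows, assuming a simple prefix replacement; the helper names `apply_endpoint` and `resolve` are hypothetical, and the Manager's own rewriting logic may differ.

```python
import os
from typing import Optional


def apply_endpoint(url: str, original: str, mirror: Optional[str]) -> str:
    """Swap the `original` prefix of `url` for `mirror` when a mirror is set."""
    if mirror and url.startswith(original):
        return mirror.rstrip("/") + url[len(original):]
    return url


def resolve(url: str) -> str:
    """Apply GITHUB_ENDPOINT and HF_ENDPOINT from the environment, if present."""
    url = apply_endpoint(url, "https://github.com", os.environ.get("GITHUB_ENDPOINT"))
    return apply_endpoint(url, "https://huggingface.co", os.environ.get("HF_ENDPOINT"))


print(apply_endpoint(
    "https://github.com/ltdrdata/ComfyUI-Impact-Pack",
    "https://github.com",
    "https://mirror.ghproxy.com/https://github.com",
))
```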

|

||||

|

||||

## Scanner

|

||||

When you run the `scan.sh` script:

|

||||

@@ -304,42 +272,43 @@ When you run the `scan.sh` script:

|

||||

|

||||

|

||||

## Troubleshooting

|

||||

* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the `<USER_DIRECTORY>/default/ComfyUI-Manager/config.ini` file that is generated.

|

||||

* If your `git.exe` is installed in a specific location other than system git, please install ComfyUI-Manager and run ComfyUI. Then, specify the path including the file name in `git_exe = ` in the ComfyUI-Manager/config.ini file that is generated.

|

||||

* If updating ComfyUI-Manager itself fails, please go to the **ComfyUI-Manager** directory and execute the command `git update-ref refs/remotes/origin/main a361cc1 && git fetch --all && git pull`.

|

||||

* Alternatively, download the update-fix.py script from [update-fix.py](https://github.com/ltdrdata/ComfyUI-Manager/raw/main/scripts/update-fix.py) and place it in the ComfyUI-Manager directory. Then, run it using your Python command.

|

||||

For the portable version, use `..\..\..\python_embeded\python.exe update-fix.py`.

|

||||

* For cases where nodes like `PreviewTextNode` from `ComfyUI_Custom_Nodes_AlekPet` are only supported as front-end nodes, we currently do not provide missing nodes for them.

|

||||

* Currently, `vid2vid` is not being updated, causing compatibility issues.

|

||||

* If you encounter the error message `Overlapped Object has pending operation at deallocation on Comfyui Manager load` under Windows

|

||||

* Edit `config.ini` file: add `windows_selector_event_loop_policy = True`

|

||||

* If an `SSL: CERTIFICATE_VERIFY_FAILED` error occurs:

|

||||

* Edit `config.ini` file: add `bypass_ssl = True`

|

||||

|

||||

|

||||

## Security policy

|

||||

|

||||

The security settings are applied based on whether the ComfyUI server's listener is non-local and whether the network mode is set to `personal_cloud`.

|

||||

|

||||

* **non-local**: When the server is launched with `--listen` and is bound to a network range other than the local `127.` range, allowing remote IP access.

|

||||

* **personal\_cloud**: When the `network_mode` is set to `personal_cloud`.

|

||||

|

||||

|

||||

### Risky Level Table

|

||||

|

||||

| Risky Level | features |
|-------------|----------|
| high+ | * `Install via git url`, `pip install`<BR>* Installation of nodepack registered not in the `default channel`. |
| high | * Fix nodepack |
| middle+ | * Uninstall/Update<BR>* Installation of nodepack registered in the `default channel`.<BR>* Restore/Remove Snapshot<BR>* Install model |
| middle | * Restart |
| low | * Update ComfyUI |

|

||||

|

||||

|

||||

### Security Level Table

|

||||

|

||||

| Security Level | local | non-local (personal_cloud) | non-local (not personal_cloud) |
|----------------|-------|----------------------------|--------------------------------|
| strong | * Only `low` level risky features are allowed | * Only `low` level risky features are allowed | * Only `low` level risky features are allowed |
| normal | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+`, `high` and `middle+` level risky features are not allowed<BR>* `middle` level risky features are available |
| normal- | * All features are available | * `high+` and `high` level risky features are not allowed<BR>* `middle+` and `middle` level risky features are available | * `high+`, `high` and `middle+` level risky features are not allowed<BR>* `middle` level risky features are available |
| weak | * All features are available | * All features are available | * `high+` and `middle+` level risky features are not allowed<BR>* `high`, `middle` and `low` level risky features are available |
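The table above can be read as a lookup from (security level, context) to a set of permitted risk levels. The sketch below encodes it that way, as an illustration rather than the project's actual implementation. The names `ALLOWED`, `CONTEXTS`, and `is_allowed`, and the short context labels, are hypothetical, and it assumes `low` level features are always permitted.

```python
ALL = frozenset({"low", "middle", "middle+", "high", "high+"})
UP_TO_MIDDLE_PLUS = frozenset({"low", "middle", "middle+"})
UP_TO_MIDDLE = frozenset({"low", "middle"})
LOW_ONLY = frozenset({"low"})
# `weak` on a non-personal-cloud remote listener blocks only high+ and middle+.
WEAK_REMOTE = ALL - {"high+", "middle+"}

# Column order: local, non-local (personal_cloud), non-local (not personal_cloud).
CONTEXTS = ("local", "personal_cloud", "public")

ALLOWED = {
    "strong": (LOW_ONLY, LOW_ONLY, LOW_ONLY),
    "normal": (UP_TO_MIDDLE_PLUS, UP_TO_MIDDLE_PLUS, UP_TO_MIDDLE),
    "normal-": (ALL, UP_TO_MIDDLE_PLUS, UP_TO_MIDDLE),
    "weak": (ALL, ALL, WEAK_REMOTE),
}


def is_allowed(security_level: str, context: str, risk: str) -> bool:
    """Return True if a feature of the given risk level may run."""
    return risk in ALLOWED[security_level][CONTEXTS.index(context)]
```

For instance, `is_allowed("normal-", "local", "high+")` is true, while `is_allowed("normal", "local", "high")` is false.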

|

||||

|

||||

* Edit `config.ini` file: add `security_level = <LEVEL>`
  * `strong`
    * doesn't allow `high` and `middle` level risky features
  * `normal`
    * doesn't allow `high` level risky features
    * `middle` level risky features are available
  * `normal-`
    * doesn't allow `high` level risky features if `--listen` is specified and does not start with `127.`
    * `middle` level risky features are available
  * `weak`
    * all features are available

* `high` level risky features
  * `Install via git url`, `pip install`
  * Installation of custom nodes not registered in the `default channel`.
  * Fix custom nodes

* `middle` level risky features
  * Uninstall/Update
  * Installation of custom nodes registered in the `default channel`.
  * Restore/Remove Snapshot
  * Restart

* `low` level risky features
  * Update ComfyUI

|

||||

|

||||

|

||||

# Disclaimer

|

||||

|

||||

37

__init__.py

Normal file

@@ -0,0 +1,37 @@

|

||||

legacy_manager_core_path = None

|

||||

manager_core_path = None

|

||||

|

||||

|

||||

def is_manager_core_exists():

|

||||

global legacy_manager_core_path

|

||||

global manager_core_path

|

||||

import os

|

||||

import folder_paths

|

||||

|

||||

comfy_path = os.path.dirname(folder_paths.__file__)

|

||||

legacy_manager_core_path = os.path.join(comfy_path, 'custom_nodes', 'manager-core')

|

||||

manager_core_path = legacy_manager_core_path

|

||||

|

||||

manager_core_path_file = os.path.join(comfy_path, 'manager_core_path.txt')

|

||||

if os.path.exists(manager_core_path_file):

|

||||

with open(manager_core_path_file, 'r') as f:

|

||||

manager_core_path = os.path.join(f.read().strip(), 'manager-core')

|

||||

|

||||

return os.path.exists(manager_core_path) or os.path.exists(legacy_manager_core_path)

|

||||

|

||||

|

||||

if not is_manager_core_exists():

|

||||

from .modules import migration_server

|

||||

migration_server.manager_core_path = manager_core_path

|

||||

|

||||

WEB_DIRECTORY = "migration_js"

|

||||

NODE_CLASS_MAPPINGS = {}

|

||||

else:

|

||||

# Main code

|

||||

from .modules import manager_ext_server

|

||||

from .modules import share_3rdparty

|

||||

|

||||

WEB_DIRECTORY = "js"

|

||||

|

||||

NODE_CLASS_MAPPINGS = {}

|

||||

__all__ = ['NODE_CLASS_MAPPINGS']

|

||||

4

channels.list.template

Normal file

@@ -0,0 +1,4 @@

|

||||

legacy::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/legacy

|

||||

forked::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/forked

|

||||

dev::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/dev

|

||||

tutorial::https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/node_db/tutorial

|

||||

@@ -1,49 +0,0 @@

|

||||

# ComfyUI-Manager: Core Backend (glob)

|

||||

|

||||

This directory contains the Python backend modules that power ComfyUI-Manager, handling the core functionality of node management, downloading, security, and server operations.

|

||||

|

||||

## Directory Structure

|

||||

- **glob/** - code for new cacheless ComfyUI-Manager

|

||||

- **legacy/** - code for legacy ComfyUI-Manager

|

||||

|

||||

## Core Modules

|

||||

- **manager_core.py**: The central implementation of management functions, handling configuration, installation, updates, and node management.

|

||||

- **manager_server.py**: Implements server functionality and API endpoints for the web interface to interact with the backend.

|

||||

|

||||

## Specialized Modules

|

||||

|

||||

- **share_3rdparty.py**: Manages integration with third-party sharing platforms.

|

||||

|

||||

## Architecture

|

||||

|

||||

The backend follows a modular design pattern with clear separation of concerns:

|

||||

|

||||

1. **Core Layer**: Manager modules provide the primary API and business logic

|

||||

2. **Utility Layer**: Helper modules provide specialized functionality

|

||||

3. **Integration Layer**: Modules that connect to external systems

|

||||

|

||||

## Security Model

|

||||

|

||||

The system implements a comprehensive security framework with multiple levels:

|

||||

|

||||

- **Block**: Highest security - blocks most remote operations

|

||||

- **High**: Allows only specific trusted operations

|

||||

- **Middle**: Standard security for most users

|

||||

- **Normal-**: More permissive for advanced users

|

||||

- **Weak**: Lowest security for development environments

|

||||

|

||||

## Implementation Details

|

||||

|

||||

- The backend is designed to work seamlessly with ComfyUI

|

||||

- Asynchronous task queuing is implemented for background operations

|

||||

- The system supports multiple installation modes

|

||||

- Error handling and risk assessment are integrated throughout the codebase
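To make the asynchronous task queuing point concrete, here is a generic sketch of a background worker built on `asyncio.Queue`. It is not taken from the ComfyUI-Manager code: the names `worker`, `main`, and `job`, and the task labels, are hypothetical and only illustrate the pattern of queuing operations and draining them in the background.

```python
import asyncio


async def worker(queue: asyncio.Queue, results: list) -> None:
    """Run queued coroutine factories one at a time, in FIFO order."""
    while True:
        task = await queue.get()
        try:
            results.append(await task())
        finally:
            queue.task_done()  # lets queue.join() know this item is finished


async def main() -> list:
    queue: asyncio.Queue = asyncio.Queue()
    results: list = []
    runner = asyncio.create_task(worker(queue, results))

    for name in ("install", "update"):
        async def job(name=name):  # bind the loop variable per job
            await asyncio.sleep(0)  # stand-in for real background work
            return f"{name}: done"
        queue.put_nowait(job)

    await queue.join()  # wait until every queued job has been processed
    runner.cancel()
    await asyncio.gather(runner, return_exceptions=True)
    return results
```

Running `asyncio.run(main())` processes the two jobs in order while the caller stays free to enqueue more work.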

|

||||

|

||||

## API Integration

|

||||

|

||||

The backend exposes a REST API via `manager_server.py` that enables:

|

||||

- Custom node management (install, update, disable, remove)

|

||||

- Model downloading and organization

|

||||

- System configuration

|

||||

- Snapshot management

|

||||

- Workflow component handling

|

||||

@@ -1,104 +0,0 @@

|

||||

import os

|

||||

import logging

|

||||

from aiohttp import web

|

||||

from .common.manager_security import HANDLER_POLICY

|

||||

from .common import manager_security

|

||||

from comfy.cli_args import args

|

||||

|

||||

|

||||

def prestartup():

|

||||

from . import prestartup_script # noqa: F401

|

||||

logging.info('[PRE] ComfyUI-Manager')

|

||||

|

||||

|

||||

def start():

|

||||

logging.info('[START] ComfyUI-Manager')

|

||||

from .common import cm_global # noqa: F401

|

||||

|

||||

if args.enable_manager:

|

||||

if args.enable_manager_legacy_ui:

|

||||

try:

|

||||

from .legacy import manager_server # noqa: F401

|

||||

from .legacy import share_3rdparty # noqa: F401

|

||||

from .legacy import manager_core as core

|

||||

import nodes

|

||||

|

||||

logging.info("[ComfyUI-Manager] Legacy UI is enabled.")

|

||||

nodes.EXTENSION_WEB_DIRS['comfyui-manager-legacy'] = os.path.join(os.path.dirname(__file__), 'js')

|

||||

except Exception as e:

|

||||

print("Error enabling legacy ComfyUI Manager frontend:", e)

|

||||

core = None

|

||||

else:

|

||||

from .glob import manager_server # noqa: F401

|

||||

from .glob import share_3rdparty # noqa: F401

|

||||

from .glob import manager_core as core

|

||||

|

||||

if core is not None:

|

||||

manager_security.is_personal_cloud_mode = core.get_config()['network_mode'].lower() == 'personal_cloud'

|

||||

|

||||

|

||||

def should_be_disabled(fullpath: str) -> bool:
    """
    1. Disables the legacy ComfyUI-Manager.
    2. The blocklist can be expanded later based on policies.
    """
    if args.enable_manager:
        # In cases where installation is done via a zip archive, the directory name may not be comfyui-manager, and it may not contain a git repository.
        # It is assumed that any installed legacy ComfyUI-Manager will have at least 'comfyui-manager' in its directory name.
        dir_name = os.path.basename(fullpath).lower()
        if 'comfyui-manager' in dir_name:
            return True

    return False

|

||||

|

||||

|

||||

def get_client_ip(request):
    peername = request.transport.get_extra_info("peername")
    if peername is not None:
        # peername is (host, port) for IPv4 but (host, port, flowinfo, scope_id)
        # for IPv6, so index the host instead of unpacking a 2-tuple.
        return peername[0]

    return "unknown"

|

||||

|

||||

|

||||

def create_middleware():

|

||||

connected_clients = set()

|

||||

is_local_mode = manager_security.is_loopback(args.listen)

|

||||

|

||||

@web.middleware

|

||||

async def manager_middleware(request: web.Request, handler):

|

||||

nonlocal connected_clients

|

||||

|

||||

# security policy for remote environments

|

||||

prev_client_count = len(connected_clients)

|

||||

client_ip = get_client_ip(request)

|

||||

connected_clients.add(client_ip)

|

||||

next_client_count = len(connected_clients)

|

||||

|

||||

if prev_client_count == 1 and next_client_count > 1:

|

||||

manager_security.multiple_remote_alert()

|

||||

|

||||

policy = manager_security.get_handler_policy(handler)

|

||||

is_banned = False

|

||||

|

||||

# policy check

|

||||

if len(connected_clients) > 1:

|

||||

if is_local_mode:

|

||||

if HANDLER_POLICY.MULTIPLE_REMOTE_BAN_NON_LOCAL in policy:

|

||||

is_banned = True

|

||||

if HANDLER_POLICY.MULTIPLE_REMOTE_BAN_NOT_PERSONAL_CLOUD in policy:

|

||||

is_banned = not manager_security.is_personal_cloud_mode

|

||||

|

||||

if HANDLER_POLICY.BANNED in policy:

|

||||

is_banned = True

|

||||

|

||||

if is_banned:

|

||||

logging.warning(f"[Manager] Banning request from {client_ip}: {request.path}")

|

||||

response = web.Response(text="[Manager] This request is banned.", status=403)

|

||||

else:

|

||||

response: web.Response = await handler(request)

|

||||

|

||||

return response

|

||||

|

||||

return manager_middleware

|

||||

|

||||

@@ -1,6 +0,0 @@

|

||||

default::https://raw.githubusercontent.com/Comfy-Org/ComfyUI-Manager/main

|

||||

recent::https://raw.githubusercontent.com/Comfy-Org/ComfyUI-Manager/main/node_db/new

|

||||

legacy::https://raw.githubusercontent.com/Comfy-Org/ComfyUI-Manager/main/node_db/legacy

|

||||

forked::https://raw.githubusercontent.com/Comfy-Org/ComfyUI-Manager/main/node_db/forked

|

||||

dev::https://raw.githubusercontent.com/Comfy-Org/ComfyUI-Manager/main/node_db/dev

|

||||

tutorial::https://raw.githubusercontent.com/Comfy-Org/ComfyUI-Manager/main/node_db/tutorial

|

||||

@@ -1,16 +0,0 @@

|

||||

# ComfyUI-Manager: Core Backend (glob)

|

||||

|

||||

This directory contains the Python backend modules that power ComfyUI-Manager, handling the core functionality of node management, downloading, security, and server operations.

|

||||

|

||||

## Core Modules

|

||||

|

||||

- **manager_downloader.py**: Handles downloading operations for models, extensions, and other resources.

|

||||

- **manager_util.py**: Provides utility functions used throughout the system.

|

||||

|

||||

## Specialized Modules

|

||||

|

||||

- **cm_global.py**: Maintains global variables and state management across the system.

|

||||

- **cnr_utils.py**: Helper utilities for interacting with the custom node registry (CNR).

|

||||

- **git_utils.py**: Git-specific utilities for repository operations.

|

||||

- **node_package.py**: Handles the packaging and installation of node extensions.

|

||||

- **security_check.py**: Implements the multi-level security system for installation safety.
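As an illustration of the shared-state role described for `cm_global.py`, the sketch below shows a small name-to-callable registry in the same spirit: one module registers a function under a string key, and another calls it by name without importing it directly. This is a simplified stand-in, not the module itself. The `try_call` signature and the `api_hello` example are written for illustration.

```python
APIs = {}


def register_api(name, func):
    """Expose `func` to other modules under a string key."""
    APIs[name] = func


def try_call(api, **kwargs):
    """Call a registered API by name; return None if it is not registered."""
    func = APIs.get(api)
    if func is None:
        print(f"WARN: The '{api}' API has not been registered.")
        return None
    return func(**kwargs)


def api_hello(msg):
    # Example API used only for this sketch.
    return msg


register_api("hello", api_hello)
```

A caller would then write `try_call(api="hello", msg="an example")`. Looking the function up by name keeps modules loosely coupled, at the cost of losing static checking of the call.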

|

||||

@@ -1,117 +0,0 @@

|

||||

import traceback

|

||||

|

||||

#

|

||||

# Global Var

|

||||

#

|

||||

# Usage:

|

||||

# import cm_global

|

||||

# cm_global.variables['comfyui.revision'] = 1832

|

||||

# print(f"log mode: {cm_global.variables['logger.enabled']}")

|

||||

#

|

||||

variables = {}

|

||||

|

||||

|

||||

#

|

||||

# Global API

|

||||

#

|

||||

# Usage:

|

||||

# [register API]

|

||||

# import cm_global

|

||||

#

|

||||

# def api_hello(msg):

|

||||

# print(f"hello: {msg}")

|

||||

# return msg

|

||||

#

|

||||

# cm_global.register_api('hello', api_hello)

|

||||

#

|

||||

# [use API]

|

||||

# import cm_global

|

||||

#

|

||||

# test = cm_global.try_call(api='hello', msg='an example')

|

||||

# print(f"'{test}' is returned")

|

||||

#

|

||||

|

||||

APIs = {}

|

||||

|

||||

|

||||

def register_api(k, f):
    global APIs
    APIs[k] = f


def try_call(**kwargs):
    if 'api' in kwargs:
        api_name = kwargs['api']
        try:
            api = APIs.get(api_name)
            if api is not None:
                del kwargs['api']
                return api(**kwargs)
            else:
                print(f"WARN: The '{kwargs['api']}' API has not been registered.")
        except Exception as e:
            print(f"ERROR: An exception occurred while calling the '{api_name}' API.")
            raise e
    else:
        return None

|

||||

|

||||

|

||||

#

|

||||

# Extension Info

|

||||

#

|

||||

# Usage:

|

||||

# import cm_global

|

||||

#

|

||||

# cm_global.extension_infos['my_extension'] = {'version': [0, 1], 'name': 'me', 'description': 'example extension', }

|

||||

#

|

||||

extension_infos = {}

|

||||

|

||||

on_extension_registered_handlers = {}

|

||||

|

||||

|

||||

def register_extension(extension_name, v):

|

||||

global extension_infos

|

||||

global on_extension_registered_handlers

|

||||

extension_infos[extension_name] = v

|

||||

|

||||

if extension_name in on_extension_registered_handlers:

|

||||

for k, f in on_extension_registered_handlers[extension_name]:

|

||||

try:

|

||||

f(extension_name, v)

|

||||

except Exception:

|

||||

print(f"[ERROR] '{k}' on_extension_registered_handlers")

|

||||

traceback.print_exc()

|

||||

|

||||

del on_extension_registered_handlers[extension_name]

|

||||

|

||||

|

||||

def add_on_extension_registered(k, extension_name, f):

|

||||

global on_extension_registered_handlers

|

||||

if extension_name in extension_infos:

|

||||

try:

|

||||

v = extension_infos[extension_name]

|

||||

f(extension_name, v)

|

||||

except Exception:

|

||||

print(f"[ERROR] '{k}' on_extension_registered_handler")

|

||||

traceback.print_exc()

|

||||

else:

|

||||

if extension_name not in on_extension_registered_handlers:

|

||||

on_extension_registered_handlers[extension_name] = []

|

||||

|

||||

on_extension_registered_handlers[extension_name].append((k, f))

|

||||

|

||||

|

||||

def add_on_revision_detected(k, f):

|

||||

if 'comfyui.revision' in variables:

|

||||

try:

|

||||

f(variables['comfyui.revision'])

|

||||

except Exception:

|

||||

print(f"[ERROR] '{k}' on_revision_detected_handler")

|

||||

traceback.print_exc()

|

||||

else:

|

||||

variables['cm.on_revision_detected_handler'].append((k, f))

|

||||

|

||||

|

||||

error_dict = {}

|

||||

|

||||

disable_front = False

|

||||

@@ -1,256 +0,0 @@

|

||||

import asyncio

|

||||

import json

|

||||

import os

|

||||

import platform

|

||||

import time

|

||||

from dataclasses import dataclass

|

||||

from typing import List

|

||||

|

||||

from . import context

|

||||

from . import manager_util

|

||||

|

||||

import requests

|

||||

import toml

|

||||

|

||||

base_url = "https://api.comfy.org"

|

||||

|

||||

|

||||

lock = asyncio.Lock()

|

||||

|

||||

is_cache_loading = False

|

||||

|

||||

async def get_cnr_data(cache_mode=True, dont_wait=True):

|

||||

try:

|

||||

return await _get_cnr_data(cache_mode, dont_wait)

|

||||

except asyncio.TimeoutError:

|

||||

print("A timeout occurred during the fetch process from ComfyRegistry.")

|

||||

return await _get_cnr_data(cache_mode=True, dont_wait=True) # timeout fallback

|

||||

|

||||

async def _get_cnr_data(cache_mode=True, dont_wait=True):

|

||||

global is_cache_loading

|

||||

|

||||

uri = f'{base_url}/nodes'

|

||||

|

||||

async def fetch_all():

|

||||

remained = True

|

||||

page = 1

|

||||

|

||||

full_nodes = {}

|

||||

|

||||

|

||||

# Determine form factor based on environment and platform

|

||||

is_desktop = bool(os.environ.get('__COMFYUI_DESKTOP_VERSION__'))

|

||||

system = platform.system().lower()

|

||||

is_windows = system == 'windows'

|

||||

is_mac = system == 'darwin'

|

||||

is_linux = system == 'linux'

|

||||

|

||||

# Get ComfyUI version tag

|

||||

if is_desktop:

|

||||

# extract version from pyproject.toml instead of git tag

|

||||

comfyui_ver = context.get_current_comfyui_ver() or 'unknown'

|

||||

else:

|

||||

comfyui_ver = context.get_comfyui_tag() or 'unknown'

|

||||

|

||||

if is_desktop:

|

||||

if is_windows:

|

||||

form_factor = 'desktop-win'

|

||||

elif is_mac:

|

||||

form_factor = 'desktop-mac'

|

||||

else:

|

||||

form_factor = 'other'

|

||||

else:

|

||||

if is_windows:

|

||||

form_factor = 'git-windows'

|

||||

elif is_mac:

|

||||

form_factor = 'git-mac'

|

||||

elif is_linux:

|

||||

form_factor = 'git-linux'

|

||||

else:

|

||||

form_factor = 'other'

|

||||

|

||||

while remained:

|

||||

# Add comfyui_version and form_factor to the API request

|

||||

sub_uri = f'{base_url}/nodes?page={page}&limit=30&comfyui_version={comfyui_ver}&form_factor={form_factor}'

|

||||

sub_json_obj = await asyncio.wait_for(manager_util.get_data_with_cache(sub_uri, cache_mode=False, silent=True, dont_cache=True), timeout=30)

|

||||

remained = page < sub_json_obj['totalPages']

|

||||

|

||||

for x in sub_json_obj['nodes']:

|

||||

full_nodes[x['id']] = x

|

||||

|

||||

if page % 5 == 0:

|

||||

print(f"FETCH ComfyRegistry Data: {page}/{sub_json_obj['totalPages']}")

|

||||

|

||||

page += 1

|

||||

await asyncio.sleep(0.5)  # non-blocking pause; time.sleep() would stall the event loop

|

||||

|

||||

print("FETCH ComfyRegistry Data [DONE]")

|

||||

|

||||

for v in full_nodes.values():

|

||||

if 'latest_version' not in v:

|

||||

v['latest_version'] = dict(version='nightly')

|

||||

|

||||

return {'nodes': list(full_nodes.values())}

|

||||

|

||||

if cache_mode:

|

||||

is_cache_loading = True

|

||||

cache_state = manager_util.get_cache_state(uri)

|

||||

|

||||

if dont_wait:

|

||||

if cache_state == 'not-cached':

|

||||

return {}

|

||||

else:

|

||||

print("[ComfyUI-Manager] The ComfyRegistry cache update is still in progress, so an outdated cache is being used.")

|

||||

with open(manager_util.get_cache_path(uri), 'r', encoding="UTF-8", errors="ignore") as json_file:

|

||||

return json.load(json_file)['nodes']

|

||||

|

||||

if cache_state == 'cached':

|

||||

with open(manager_util.get_cache_path(uri), 'r', encoding="UTF-8", errors="ignore") as json_file:

|

||||

return json.load(json_file)['nodes']

|

||||

|

||||

try:

|

||||

json_obj = await fetch_all()

|

||||

manager_util.save_to_cache(uri, json_obj)

|

||||

return json_obj['nodes']

|

||||

except Exception:

|

||||

res = {}

|

||||

print("Cannot connect to comfyregistry.")

|

||||

finally:

|

||||

if cache_mode:

|

||||

is_cache_loading = False

|

||||

|

||||

return res

|

||||

|

||||

|

||||

@dataclass

|

||||

class NodeVersion:

|

||||

changelog: str

|

||||

dependencies: List[str]

|

||||

deprecated: bool

|

||||

id: str

|

||||

version: str

|

||||

download_url: str

|

||||

|

||||

|

||||

def map_node_version(api_node_version):

|

||||

"""

|

||||

Maps node version data from API response to NodeVersion dataclass.

|

||||

|

||||

Args:

|

||||

api_node_version (dict): The 'node_version' part of the API response.

|

||||

|

||||

Returns:

|

||||

NodeVersion: An instance of NodeVersion dataclass populated with data from the API.

|

||||

"""

|

||||

return NodeVersion(

|

||||

changelog=api_node_version.get(

|

||||

"changelog", ""

|

||||

), # Provide a default value if 'changelog' is missing

|

||||

dependencies=api_node_version.get(

|

||||

"dependencies", []

|

||||

), # Provide a default empty list if 'dependencies' is missing

|

||||

deprecated=api_node_version.get(

|

||||

"deprecated", False

|

||||

), # Assume False if 'deprecated' is not specified

|

||||

id=api_node_version[

|

||||

"id"

|

||||

], # 'id' should be mandatory; raise KeyError if missing

|

||||

version=api_node_version[

|

||||

"version"

|

||||

], # 'version' should be mandatory; raise KeyError if missing

|

||||

download_url=api_node_version.get(

|

||||

"downloadUrl", ""

|

||||

), # Provide a default value if 'downloadUrl' is missing

|

||||

)

|

||||

|

||||

|

||||

def install_node(node_id, version=None):

|

||||

"""

|

||||

Retrieves the node version for installation.

|

||||

|

||||

Args:

|

||||

node_id (str): The unique identifier of the node.

|

||||

version (str, optional): Specific version of the node to retrieve. If omitted, the latest version is returned.

|

||||

|

||||

Returns:

|

||||

NodeVersion | None: Node version data, or None if the request does not return HTTP 200.

|

||||

"""

|

||||

if version is None:

|

||||

url = f"{base_url}/nodes/{node_id}/install"

|

||||

else:

|

||||

url = f"{base_url}/nodes/{node_id}/install?version={version}"

|

||||

|

||||

response = requests.get(url, verify=not manager_util.bypass_ssl)

|

||||

if response.status_code == 200:

|

||||

# Convert the API response to a NodeVersion object

|

||||

return map_node_version(response.json())

|

||||

else:

|

||||

return None

|

||||

|

||||

|

||||

def all_versions_of_node(node_id):

|

||||

url = f"{base_url}/nodes/{node_id}/versions?statuses=NodeVersionStatusActive&statuses=NodeVersionStatusPending"

|

||||

|

||||

response = requests.get(url, verify=not manager_util.bypass_ssl)

|

||||

if response.status_code == 200:

|

||||

return response.json()

|

||||

else:

|

||||

return None

|

||||

|

||||

|

||||

def read_cnr_info(fullpath):

|

||||

try:

|

||||

toml_path = os.path.join(fullpath, 'pyproject.toml')

|

||||

tracking_path = os.path.join(fullpath, '.tracking')

|

||||

|

||||

if not os.path.exists(toml_path) or not os.path.exists(tracking_path):

|

||||

return None # not valid CNR node pack

|

||||

|

||||

with open(toml_path, "r", encoding="utf-8") as f:

|

||||

data = toml.load(f)

|

||||

|

||||

project = data.get('project', {})

|

||||

name = project.get('name').strip().lower()

|

||||

original_name = project.get('name')

|

||||

|

||||

# normalize version

|

||||

# for example: 2.5 -> 2.5.0

|

||||

version = str(manager_util.StrictVersion(project.get('version')))

|

||||

|

||||

urls = project.get('urls', {})

|

||||

repository = urls.get('Repository')

|

||||

|

||||

if name and version: # repository is optional

|

||||

return {

|

||||

"id": name,

|

||||

"original_name": original_name,

|

||||

"version": version,

|

||||

"url": repository

|

||||

}

|

||||

|

||||

return None

|

||||

except Exception:

|

||||

return None # not valid CNR node pack

|

||||

|

||||

|

||||

def generate_cnr_id(fullpath, cnr_id):
    cnr_id_path = os.path.join(fullpath, '.git', '.cnr-id')
    try:
        if not os.path.exists(cnr_id_path):
            with open(cnr_id_path, "w") as f:
                return f.write(cnr_id)
    except Exception:
        print(f"[ComfyUI Manager] unable to create file: {cnr_id_path}")


def read_cnr_id(fullpath):
    cnr_id_path = os.path.join(fullpath, '.git', '.cnr-id')
    try:
        if os.path.exists(cnr_id_path):
            with open(cnr_id_path) as f:
                return f.read().strip()
    except Exception:
        pass

    return None

|

||||

|

||||

@@ -1,108 +0,0 @@

|

||||

import sys

|

||||

import os

|

||||

import logging

|

||||

from . import manager_util

|

||||

import toml

|

||||

import git

|

||||

|

||||

|

||||

# read env vars

|

||||

comfy_path: str = os.environ.get('COMFYUI_PATH')

|

||||

comfy_base_path = os.environ.get('COMFYUI_FOLDERS_BASE_PATH')

|

||||

|

||||

if comfy_path is None:

|

||||

try:

|

||||

comfy_path = os.path.abspath(os.path.dirname(sys.modules['__main__'].__file__))

|

||||

os.environ['COMFYUI_PATH'] = comfy_path

|

||||

except Exception:

|

||||

logging.error("[ComfyUI-Manager] environment variable 'COMFYUI_PATH' is not specified.")

|

||||

exit(-1)

|

||||

|

||||

if comfy_base_path is None:

|

||||

comfy_base_path = comfy_path

|

||||

|

||||

channel_list_template_path = os.path.join(manager_util.comfyui_manager_path, 'channels.list.template')

|

||||

git_script_path = os.path.join(manager_util.comfyui_manager_path, "git_helper.py")

|

||||

|

||||

manager_files_path = None

|

||||

manager_config_path = None

|

||||

manager_channel_list_path = None

|

||||

manager_startup_script_path:str = None

|

||||

manager_snapshot_path = None

|

||||

manager_pip_overrides_path = None

|

||||

manager_pip_blacklist_path = None

|

||||

manager_components_path = None

|

||||

manager_batch_history_path = None

|

||||

|

||||

def update_user_directory(user_dir):

|

||||

global manager_files_path

|

||||

global manager_config_path

|

||||

global manager_channel_list_path

|

||||

global manager_startup_script_path

|

||||

global manager_snapshot_path

|

||||

global manager_pip_overrides_path

|

||||

global manager_pip_blacklist_path

|

||||

global manager_components_path

|

||||

global manager_batch_history_path

|

||||

|

||||

manager_files_path = os.path.abspath(os.path.join(user_dir, 'default', 'ComfyUI-Manager'))

|

||||

if not os.path.exists(manager_files_path):

|

||||

os.makedirs(manager_files_path)

|

||||

|

||||

manager_snapshot_path = os.path.join(manager_files_path, "snapshots")

|

||||

if not os.path.exists(manager_snapshot_path):

|

||||

os.makedirs(manager_snapshot_path)

|

||||

|

||||

manager_startup_script_path = os.path.join(manager_files_path, "startup-scripts")

|

||||

if not os.path.exists(manager_startup_script_path):

|

||||

os.makedirs(manager_startup_script_path)

|

||||

|