
The smallest vector index in the world. RAG Everything with LEANN!

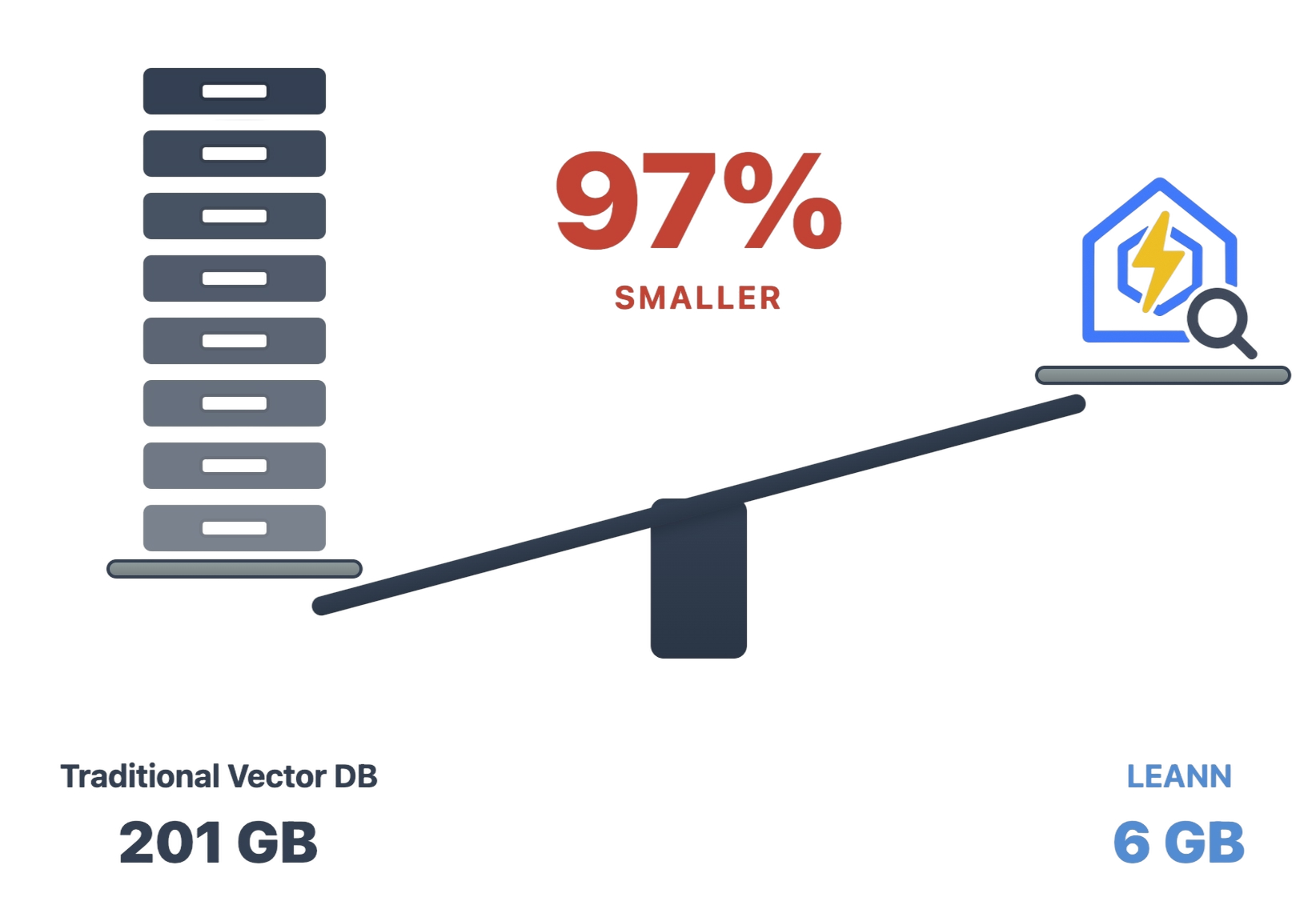

LEANN is an innovative vector database that democratizes personal AI. Transform your laptop into a powerful RAG system that can index and search through millions of documents while using 97% less storage than traditional solutions without accuracy loss.

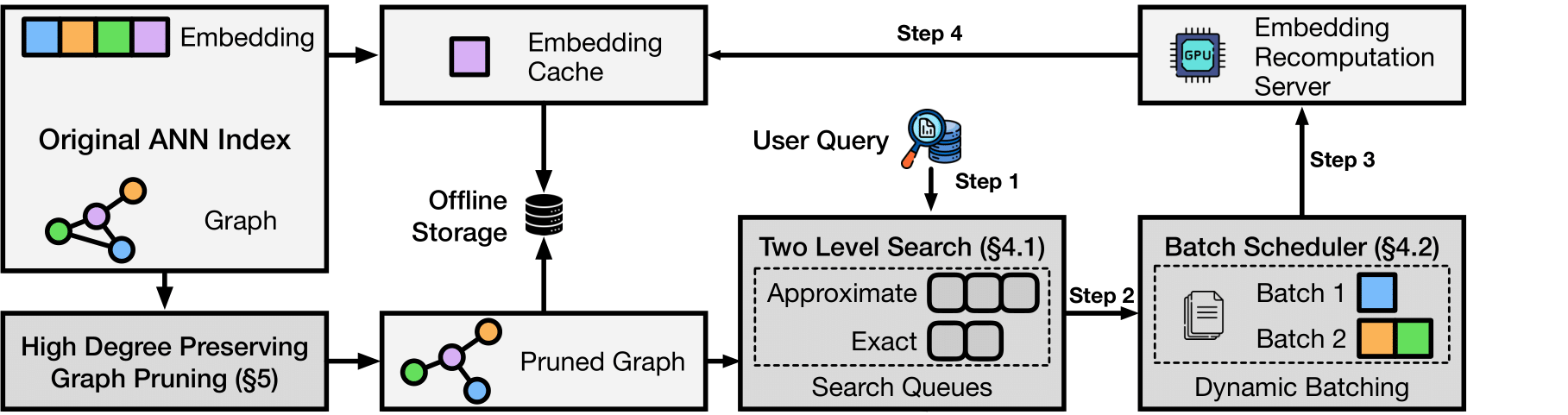

LEANN achieves this through graph-based selective recomputation with high-degree preserving pruning, computing embeddings on-demand instead of storing them all. Illustration Fig → | Paper →

Ready to RAG Everything? Transform your laptop into a personal AI assistant that can semantically search your file system, emails, browser history, chat history, codebase*, or external knowledge bases (e.g., 60M documents) - all on your laptop, with zero cloud costs and complete privacy.

* Claude Code only supports basic grep-style keyword search. LEANN is a drop-in semantic search MCP service fully compatible with Claude Code, unlocking intelligent retrieval without changing your workflow. 🔥 Check out the easy setup →

Why LEANN?

The numbers speak for themselves: Index 60 million text chunks in just 6GB instead of 201GB. From emails to browser history, everything fits on your laptop. See detailed benchmarks for different applications below ↓
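As a quick sanity check on those figures, the arithmetic can be worked through in a few lines. This is only a back-of-the-envelope sketch: the helper names are made up for illustration, and 1 GB is taken as 10^9 bytes, which is an assumption.

```python
# Figures quoted above: 60 million chunks, 6 GB with LEANN vs 201 GB otherwise.
NUM_CHUNKS = 60_000_000
FULL_INDEX_GB = 201
LEANN_INDEX_GB = 6


def storage_savings(full_gb: float, compact_gb: float) -> float:
    """Fraction of storage saved by the smaller index."""
    return 1.0 - compact_gb / full_gb


def bytes_per_chunk(total_gb: float, num_chunks: int) -> float:
    """Average bytes per chunk, assuming 1 GB = 10**9 bytes."""
    return total_gb * 1e9 / num_chunks


savings = storage_savings(FULL_INDEX_GB, LEANN_INDEX_GB)
print(f"savings: {savings:.1%}")
print(f"stored embeddings: {bytes_per_chunk(FULL_INDEX_GB, NUM_CHUNKS):.0f} bytes/chunk")
print(f"LEANN:             {bytes_per_chunk(LEANN_INDEX_GB, NUM_CHUNKS):.0f} bytes/chunk")
```

Under these assumptions the budget drops from a few kilobytes per chunk to about a hundred bytes, which is consistent with the 97% figure.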

🔒 Privacy: Your data never leaves your laptop. No OpenAI, no cloud, no "terms of service".

🪶 Lightweight: Graph-based recomputation eliminates heavy embedding storage, while smart graph pruning and CSR format minimize graph storage overhead. Always less storage, less memory usage!

📦 Portable: Transfer your entire knowledge base between devices (even with others) with minimal cost - your personal AI memory travels with you.

📈 Scalability: Handle messy personal data that would crash traditional vector DBs, easily managing your growing personalized data and agent-generated memory!

✨ No Accuracy Loss: Maintain the same search quality as heavyweight solutions while using 97% less storage.
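To make the CSR idea mentioned under "Lightweight" concrete, here is a minimal sketch of packing a graph's neighbor lists into compressed sparse row form. It illustrates the general format only, not LEANN's on-disk layout; `to_csr` and `neighbors` are hypothetical helper names.

```python
from typing import Dict, List, Tuple


def to_csr(adjacency: Dict[int, List[int]], num_nodes: int) -> Tuple[List[int], List[int]]:
    """Pack per-node neighbor lists into CSR offsets and one flat neighbor array."""
    indptr = [0]
    indices: List[int] = []
    for node in range(num_nodes):
        indices.extend(adjacency.get(node, []))
        indptr.append(len(indices))  # end offset of this node's neighbors
    return indptr, indices


def neighbors(indptr: List[int], indices: List[int], node: int) -> List[int]:
    """Read one node's neighbors back out of the CSR arrays."""
    return indices[indptr[node]:indptr[node + 1]]


graph = {0: [1, 2], 1: [0], 2: [0, 3], 3: [2]}
indptr, indices = to_csr(graph, num_nodes=4)
print(indptr)   # [0, 2, 3, 5, 6]
print(indices)  # [1, 2, 0, 0, 3, 2]
print(neighbors(indptr, indices, 2))  # [0, 3]
```

Two flat integer arrays replace one list object per node, which is where the storage saving of this format comes from.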

Installation

📦 Prerequisites: Install uv

Install uv first if you don't have it. Typically, you can install it with:

curl -LsSf https://astral.sh/uv/install.sh | sh

🚀 Quick Install

Clone the repository to access all examples and try amazing applications,

git clone https://github.com/yichuan-w/LEANN.git leann

cd leann

and install LEANN from PyPI to run them immediately:

uv venv

source .venv/bin/activate

uv pip install leann

🔧 Build from Source (Recommended for development)

git clone https://github.com/yichuan-w/LEANN.git leann

cd leann

git submodule update --init --recursive

macOS:

brew install llvm libomp boost protobuf zeromq pkgconf

CC=$(brew --prefix llvm)/bin/clang CXX=$(brew --prefix llvm)/bin/clang++ uv sync

Linux:

sudo apt-get install libomp-dev libboost-all-dev protobuf-compiler libabsl-dev libmkl-full-dev libaio-dev libzmq3-dev

uv sync

Quick Start

Our declarative API makes RAG as easy as writing a config file.

Check out demo.ipynb or

from leann import LeannBuilder, LeannSearcher, LeannChat

from pathlib import Path

INDEX_PATH = str(Path("./").resolve() / "demo.leann")

# Build an index

builder = LeannBuilder(backend_name="hnsw")

builder.add_text("LEANN saves 97% storage compared to traditional vector databases.")

builder.add_text("Tung Tung Tung Sahur called—they need their banana‑crocodile hybrid back")

builder.build_index(INDEX_PATH)

# Search

searcher = LeannSearcher(INDEX_PATH)

results = searcher.search("fantastical AI-generated creatures", top_k=1)

# Chat with your data

chat = LeannChat(INDEX_PATH, llm_config={"type": "hf", "model": "Qwen/Qwen3-0.6B"})

response = chat.ask("How much storage does LEANN save?", top_k=1)

RAG on Everything!

LEANN supports RAG on various data sources including documents (.pdf, .txt, .md), Apple Mail, Google Search History, WeChat, and more.

Generation Model Setup

LEANN supports multiple LLM providers for text generation (OpenAI API, HuggingFace, Ollama).

🔑 OpenAI API Setup (Default)

Set your OpenAI API key as an environment variable:

export OPENAI_API_KEY="your-api-key-here"
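If you drive the OpenAI backend from your own script, it can help to fail early when the variable is missing. A minimal sketch, where `require_api_key` is a hypothetical helper and not part of LEANN:

```python
import os
from typing import Mapping, Optional


def require_api_key(env: Optional[Mapping[str, str]] = None,
                    name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or raise a clear error."""
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before using the OpenAI backend")
    return key


# Demonstrated with an explicit mapping so the example runs without a real key.
print(require_api_key({"OPENAI_API_KEY": "your-api-key-here"}))
```

Calling `require_api_key()` with no arguments reads the real process environment instead.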

🔧 Ollama Setup (Recommended for full privacy)

macOS:

First, download Ollama for macOS.

# Pull a lightweight model (recommended for consumer hardware)

ollama pull llama3.2:1b

Linux:

# Install Ollama

curl -fsSL https://ollama.ai/install.sh | sh

# Start Ollama service manually

ollama serve &

# Pull a lightweight model (recommended for consumer hardware)

ollama pull llama3.2:1b
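To confirm that the Ollama service is up and the model was pulled, you can query its local HTTP API. The sketch below assumes Ollama is listening on its default address (`http://localhost:11434`) and uses the `/api/tags` endpoint, which lists local models; the function names are illustrative.

```python
import json
import urllib.error
import urllib.request
from typing import List, Optional

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address (assumed unchanged)


def parse_model_names(payload: str) -> List[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[List[str]]:
    """Return the pulled model names, or None if the server cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(resp.read().decode("utf-8"))
    except (urllib.error.URLError, OSError):
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama is not reachable; try `ollama serve`")
    else:
        print("Local models:", models)
```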

⭐ Flexible Configuration

LEANN provides flexible parameters for embedding models, search strategies, and data processing to fit your specific needs.

📚 Need configuration best practices? Check our Configuration Guide for detailed optimization tips, model selection advice, and solutions to common issues like slow embeddings or poor search quality.

📋 Click to expand: Common Parameters (Available in All Examples)

All RAG examples share these common parameters. Interactive mode is available in all examples - simply run without --query to start a continuous Q&A session where you can ask multiple questions. Type 'quit' to exit.

# Core Parameters (General preprocessing for all examples)

--index-dir DIR # Directory to store the index (default: current directory)

--query "YOUR QUESTION" # Single query mode. Omit to start an interactive chat with your index (type 'quit' to exit)

--max-items N # Limit data preprocessing (default: -1, process all data)

--force-rebuild # Force rebuild index even if it exists

# Embedding Parameters

--embedding-model MODEL # e.g., facebook/contriever, text-embedding-3-small, mlx-community/Qwen3-Embedding-0.6B-8bit, or nomic-embed-text

--embedding-mode MODE # sentence-transformers, openai, mlx, or ollama

# LLM Parameters (Text generation models)

--llm TYPE # LLM backend: openai, ollama, or hf (default: openai)

--llm-model MODEL # Model name (default: gpt-4o) e.g., gpt-4o-mini, llama3.2:1b, Qwen/Qwen2.5-1.5B-Instruct

--thinking-budget LEVEL # Thinking budget for reasoning models: low/medium/high (supported by o3, o3-mini, GPT-Oss:20b, and other reasoning models)

# Search Parameters

--top-k N # Number of results to retrieve (default: 20)

--search-complexity N # Search complexity for graph traversal (default: 32)

# Chunking Parameters

--chunk-size N # Size of text chunks (default varies by source: 256 for most, 192 for WeChat)

--chunk-overlap N # Overlap between chunks (default varies: 25-128 depending on source)

# Index Building Parameters

--backend-name NAME # Backend to use: hnsw or diskann (default: hnsw)

--graph-degree N # Graph degree for index construction (default: 32)

--build-complexity N # Build complexity for index construction (default: 64)

--no-compact # Disable compact index storage (compact storage IS enabled to save storage by default)

--no-recompute # Disable embedding recomputation (recomputation IS enabled to save storage by default)
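For readers who want to see how such a flag set fits together, here is a minimal `argparse` sketch that mirrors a subset of the parameters and defaults listed above. It is not the parser the example apps actually use; treat the structure as an assumption.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Mirror a subset of the documented flags with their documented defaults."""
    p = argparse.ArgumentParser(description="Sketch of the shared RAG example flags")
    p.add_argument("--index-dir", default=".")
    p.add_argument("--query", default=None)  # omitted -> interactive mode
    p.add_argument("--max-items", type=int, default=-1)
    p.add_argument("--force-rebuild", action="store_true")
    p.add_argument("--llm", choices=["openai", "ollama", "hf"], default="openai")
    p.add_argument("--llm-model", default="gpt-4o")
    p.add_argument("--top-k", type=int, default=20)
    p.add_argument("--search-complexity", type=int, default=32)
    p.add_argument("--backend-name", choices=["hnsw", "diskann"], default="hnsw")
    p.add_argument("--graph-degree", type=int, default=32)
    p.add_argument("--build-complexity", type=int, default=64)
    p.add_argument("--no-compact", action="store_true")
    p.add_argument("--no-recompute", action="store_true")
    return p


args = build_parser().parse_args(["--query", "What is LEANN?", "--top-k", "5"])
interactive = args.query is None
print(args.top_k, args.backend_name, interactive)
```

Note that the "disable" flags are stored as booleans that default to `False`, so compact storage and recomputation stay on unless explicitly turned off.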

📄 Personal Data Manager: Process Any Documents (.pdf, .txt, .md)!

Ask questions directly about your personal PDFs, documents, and any directory containing your files!

The example below asks a question about our paper. It uses the default data in data/, a directory with diverse sources (two papers, Pride and Prejudice, and a Chinese-language technical report about LLMs at Huawei), and it is the easiest example to run:

source .venv/bin/activate # Don't forget to activate the virtual environment

python -m apps.document_rag --query "What are the main techniques LEANN explores?"

📋 Click to expand: Document-Specific Arguments

Parameters

--data-dir DIR # Directory containing documents to process (default: data)

--file-types .ext .ext # Filter by specific file types (optional - all LlamaIndex supported types if omitted)

Example Commands

# Process all documents with larger chunks for academic papers

python -m apps.document_rag --data-dir "~/Documents/Papers" --chunk-size 1024

# Filter only markdown and Python files with smaller chunks

python -m apps.document_rag --data-dir "./docs" --chunk-size 256 --file-types .md .py
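The effect of `--file-types` can be pictured as a simple extension filter over the data directory. The sketch below is an illustration only: `collect_files` is a hypothetical helper, and whether the real app recurses into subdirectories or expands `~` is an assumption.

```python
import tempfile
from pathlib import Path
from typing import Iterable, List, Optional


def collect_files(data_dir: str, file_types: Optional[Iterable[str]] = None) -> List[Path]:
    """Recursively list files, optionally keeping only the given extensions."""
    root = Path(data_dir).expanduser()
    wanted = {ext.lower() for ext in file_types} if file_types else None
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and (wanted is None or p.suffix.lower() in wanted)
    )


# Small self-contained demo in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("notes.md", "tool.py", "paper.pdf"):
        (Path(tmp) / name).write_text("x")
    found = [p.name for p in collect_files(tmp, [".md", ".py"])]
    everything = [p.name for p in collect_files(tmp)]

print(found)       # ['notes.md', 'tool.py']
print(everything)  # ['notes.md', 'paper.pdf', 'tool.py']
```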

📧 Your Personal Email Secretary: RAG on Apple Mail!

Note: The examples below currently support macOS only. Windows support coming soon.

Before running the example below, you need to grant full disk access to your terminal/VS Code in System Preferences → Privacy & Security → Full Disk Access.

python -m apps.email_rag --query "What's the food I ordered by DoorDash or Uber Eats mostly?"

780K email chunks → 78MB storage. Finally, search your email like you search Google.

📋 Click to expand: Email-Specific Arguments

Parameters

--mail-path PATH # Path to specific mail directory (auto-detects if omitted)

--include-html # Include HTML content in processing (useful for newsletters)

Example Commands

# Search work emails from a specific account

python -m apps.email_rag --mail-path "~/Library/Mail/V10/WORK_ACCOUNT"

# Find all receipts and order confirmations (includes HTML)

python -m apps.email_rag --query "receipt order confirmation invoice" --include-html

📋 Click to expand: Example queries you can try

Once the index is built, you can ask questions like:

- "Find emails from my boss about deadlines"

- "What did John say about the project timeline?"

- "Show me emails about travel expenses"

🔍 Time Machine for the Web: RAG Your Entire Chrome Browser History!

python -m apps.browser_rag --query "Tell me my browser history about machine learning?"

38K browser entries → 6MB storage. Your browser history becomes your personal search engine.

📋 Click to expand: Browser-Specific Arguments

Parameters

--chrome-profile PATH # Path to Chrome profile directory (auto-detects if omitted)

Example Commands

# Search academic research from your browsing history

python -m apps.browser_rag --query "arxiv papers machine learning transformer architecture"

# Track competitor analysis across work profile

python -m apps.browser_rag --chrome-profile "~/Library/Application Support/Google/Chrome/Work Profile" --max-items 5000

📋 Click to expand: How to find your Chrome profile

The default Chrome profile path is configured for a typical macOS setup. If you need to find your specific Chrome profile:

- Open Terminal

- Run:

ls ~/Library/Application\ Support/Google/Chrome/

- Look for folders like "Default", "Profile 1", "Profile 2", etc.

- Use the full path as your --chrome-profile argument

Common Chrome profile locations:

- macOS: ~/Library/Application Support/Google/Chrome/Default

- Linux: ~/.config/google-chrome/Default
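If you script around the browser example, the two locations above can be selected by platform. A minimal sketch; `default_chrome_profile` is a hypothetical helper, and it only covers the two paths listed here.

```python
import sys
from pathlib import Path
from typing import Optional


def default_chrome_profile(platform: Optional[str] = None, home: Optional[str] = None) -> Path:
    """Return the common default Chrome profile path for macOS or Linux."""
    platform = sys.platform if platform is None else platform
    base = Path.home() if home is None else Path(home)
    if platform == "darwin":
        return base / "Library" / "Application Support" / "Google" / "Chrome" / "Default"
    if platform.startswith("linux"):
        return base / ".config" / "google-chrome" / "Default"
    raise ValueError(f"no default Chrome profile path known for {platform!r}")


print(default_chrome_profile("linux", "/home/user"))
print(default_chrome_profile("darwin", "/Users/user"))
```

The resulting path can be passed as the `--chrome-profile` argument; check that the directory exists first, since named profiles ("Profile 1", etc.) live next to "Default".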

💬 Click to expand: Example queries you can try

Once the index is built, you can ask questions like:

- "What websites did I visit about machine learning?"

- "Find my search history about programming"

- "What YouTube videos did I watch recently?"

- "Show me websites I visited about travel planning"

💬 WeChat Detective: Unlock Your Golden Memories!

python -m apps.wechat_rag --query "Show me all group chats about weekend plans"

400K messages → 64MB storage. Search years of chat history in any language.

🔧 Click to expand: Installation Requirements

First, you need to install the WeChat exporter,

brew install sunnyyoung/repo/wechattweak-cli

or install it manually (if you have issues with Homebrew):

sudo packages/wechat-exporter/wechattweak-cli install

Troubleshooting:

- Installation issues: Check the WeChatTweak-CLI issues page

- Export errors: If you encounter the error below, try restarting WeChat

Failed to export WeChat data. Please ensure WeChat is running and WeChatTweak is installed.

Failed to find or export WeChat data. Exiting.

📋 Click to expand: WeChat-Specific Arguments

Parameters

--export-dir DIR # Directory to store exported WeChat data (default: wechat_export_direct)

--force-export # Force re-export even if data exists

Example Commands

# Search for travel plans discussed in group chats

python -m apps.wechat_rag --query "travel plans" --max-items 10000

# Re-export and search recent chats (useful after new messages)

python -m apps.wechat_rag --force-export --query "work schedule"

💬 Click to expand: Example queries you can try

Once the index is built, you can ask questions like:

- "我想买魔术师约翰逊的球衣,给我一些对应聊天记录?" (Chinese: Show me chat records about buying Magic Johnson's jersey)

🚀 Claude Code Integration: Transform Your Development Workflow!

The future of code assistance is here. Transform your development workflow with LEANN's native MCP integration for Claude Code. Index your entire codebase and get intelligent code assistance directly in your IDE.

Key features:

- 🔍 Semantic code search across your entire project

- 📚 Context-aware assistance for debugging and development

- 🚀 Zero-config setup with automatic language detection

# Install LEANN globally for MCP integration

uv tool install leann-core

# Setup is automatic - just start using Claude Code!

Try our fully agentic pipeline with auto query rewriting, semantic search planning, and more:

Ready to supercharge your coding? Complete Setup Guide →

🖥️ Command Line Interface

LEANN includes a powerful CLI for document processing and search. Perfect for quick document indexing and interactive chat.

Installation

If you followed the Quick Start, leann is already installed in your virtual environment:

source .venv/bin/activate

leann --help

To make it globally available:

# Install the LEANN CLI globally using uv tool

uv tool install leann

# Now you can use leann from anywhere without activating venv

leann --help

Note: Global installation is required for Claude Code integration. The leann_mcp server depends on the globally available leann command.

Usage Examples

# Build an index named my-docs from a specific directory

leann build my-docs --docs ./your_documents

# Search your documents

leann search my-docs "machine learning concepts"

# Interactive chat with your documents

leann ask my-docs --interactive

# List all your indexes

leann list

Key CLI features:

- Auto-detects document formats (PDF, TXT, MD, DOCX)

- Smart text chunking with overlap

- Multiple LLM providers (Ollama, OpenAI, HuggingFace)

- Organized index storage in ~/.leann/indexes/

- Support for advanced search parameters
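The "chunking with overlap" feature in the list above can be illustrated with a small character-based splitter. This is a simplified sketch, not LEANN's chunker (which may split on tokens or sentences); the function name and the default sizes are illustrative.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 256, chunk_overlap: int = 128) -> List[str]:
    """Split text into fixed-size character windows that overlap their neighbors."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

Each chunk repeats the tail of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk when the overlap is large enough.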

📋 Click to expand: Complete CLI Reference

Build Command:

leann build INDEX_NAME --docs DIRECTORY [OPTIONS]

Options:

--backend {hnsw,diskann} Backend to use (default: hnsw)

--embedding-model MODEL Embedding model (default: facebook/contriever)

--graph-degree N Graph degree (default: 32)

--complexity N Build complexity (default: 64)

--force Force rebuild existing index

--compact Use compact storage (default: true)

--recompute Enable recomputation (default: true)

Search Command:

leann search INDEX_NAME QUERY [OPTIONS]

Options:

--top-k N Number of results (default: 5)

--complexity N Search complexity (default: 64)

--recompute-embeddings Use recomputation for highest accuracy

--pruning-strategy {global,local,proportional}

Ask Command:

leann ask INDEX_NAME [OPTIONS]

Options:

--llm {ollama,openai,hf} LLM provider (default: ollama)

--model MODEL Model name (default: qwen3:8b)

--interactive Interactive chat mode

--top-k N Retrieval count (default: 20)
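When calling the CLI from a script, building the argument list explicitly avoids shell-quoting mistakes. The sketch below only assembles a `leann search` command from the options listed above and does not run it; `leann_search_cmd` is a hypothetical helper, and treating `--recompute-embeddings` as a value-less switch is an assumption based on the reference.

```python
import shlex
from typing import List


def leann_search_cmd(index_name: str, query: str, top_k: int = 5,
                     complexity: int = 64, recompute: bool = False) -> List[str]:
    """Assemble the argv list for `leann search` using the documented options."""
    cmd = ["leann", "search", index_name, query,
           "--top-k", str(top_k), "--complexity", str(complexity)]
    if recompute:
        cmd.append("--recompute-embeddings")
    return cmd


cmd = leann_search_cmd("my-docs", "machine learning concepts", top_k=3)
print(shlex.join(cmd))
# To execute it: subprocess.run(cmd, check=True)
```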

🏗️ Architecture & How It Works

The magic: Most vector DBs store every single embedding (expensive). LEANN stores a pruned graph structure (cheap) and recomputes embeddings only when needed (fast).

Core techniques:

- Graph-based selective recomputation: Only compute embeddings for nodes in the search path

- High-degree preserving pruning: Keep important "hub" nodes while removing redundant connections

- Dynamic batching: Efficiently batch embedding computations for GPU utilization

- Two-level search: Smart graph traversal that prioritizes promising nodes
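The first technique in the list above can be illustrated with a toy best-first graph search that embeds a node only when the traversal first needs its score. This is a deliberately simplified sketch, not LEANN's implementation: `toy_embed` is a stand-in for a real embedding model, the graph is hand-written, and the `complexity` argument only loosely mirrors the search-complexity setting.

```python
import heapq
from typing import Dict, List, Tuple

Vector = Tuple[float, ...]


def toy_embed(text: str) -> Vector:
    """Stand-in embedding: normalized counts of the letters a-z."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    norm = sum(c * c for c in counts) ** 0.5 or 1.0
    return tuple(c / norm for c in counts)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def search(graph: Dict[int, List[int]], texts: List[str], query: str,
           entry: int, top_k: int = 1, complexity: int = 2) -> Tuple[List[int], int]:
    """Expand up to `complexity` nodes, embedding nodes lazily as they are scored."""
    q = toy_embed(query)
    cache: Dict[int, Vector] = {}  # embeddings computed during this search only

    def score(node: int) -> float:
        if node not in cache:
            cache[node] = toy_embed(texts[node])  # recompute on demand
        return dot(q, cache[node])

    visited = {entry}
    frontier = [(-score(entry), entry)]  # max-heap via negated similarity
    expanded: List[Tuple[float, int]] = []
    while frontier and len(expanded) < complexity:
        neg_sim, node = heapq.heappop(frontier)
        expanded.append((-neg_sim, node))
        for nb in graph[node]:
            if nb not in visited:
                visited.add(nb)
                heapq.heappush(frontier, (-score(nb), nb))
    expanded.sort(reverse=True)
    return [node for _, node in expanded[:top_k]], len(cache)


texts = ["apple pie recipe", "banana bread", "vector index storage",
         "graph search", "email archive", "browser history"]
graph = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2, 4], 4: [3, 5], 5: [4]}
hits, embedded = search(graph, texts, "vector storage", entry=0)
print(hits, f"{embedded} of {len(texts)} nodes embedded")
```

Only the nodes the traversal touches are ever embedded; the rest of the graph contributes nothing but its stored edges, which is the storage trade-off described above.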

Backends: HNSW (default) for most use cases, with optional DiskANN support for billion-scale datasets.

Benchmarks

Simple Example: Compare LEANN vs FAISS →

📊 Storage Comparison

| System | DPR (2.1M) | Wiki (60M) | Chat (400K) | Email (780K) | Browser (38K) |

|---|---|---|---|---|---|

| Traditional vector database (e.g., FAISS) | 3.8 GB | 201 GB | 1.8 GB | 2.4 GB | 130 MB |

| LEANN | 324 MB | 6 GB | 64 MB | 79 MB | 6.4 MB |

| Savings | 91% | 97% | 97% | 97% | 95% |

Reproduce Our Results

uv pip install -e ".[dev]" # Install dev dependencies

python benchmarks/run_evaluation.py # Will auto-download evaluation data and run benchmarks

The evaluation script downloads data automatically on first run. The last three results were tested with partial personal data, and you can reproduce them with your own data!

🔬 Paper

If you find Leann useful, please cite:

LEANN: A Low-Storage Vector Index

@misc{wang2025leannlowstoragevectorindex,

title={LEANN: A Low-Storage Vector Index},

author={Yichuan Wang and Shu Liu and Zhifei Li and Yongji Wu and Ziming Mao and Yilong Zhao and Xiao Yan and Zhiying Xu and Yang Zhou and Ion Stoica and Sewon Min and Matei Zaharia and Joseph E. Gonzalez},

year={2025},

eprint={2506.08276},

archivePrefix={arXiv},

primaryClass={cs.DB},

url={https://arxiv.org/abs/2506.08276},

}

✨ Detailed Features →

🤝 CONTRIBUTING →

❓ FAQ →

📈 Roadmap →

📄 License

MIT License - see LICENSE for details.

🙏 Acknowledgments

Core Contributors: Yichuan Wang & Zhifei Li.

We welcome more contributors! Feel free to open issues or submit PRs.

This work is done at Berkeley Sky Computing Lab.

⭐ Star us on GitHub if Leann is useful for your research or applications!

Made with ❤️ by the Leann team
